AI Ethics Brief #69: AI certification, dataset mismanagement, Apple's privacy forays, and more ...

How far will you go to claim your face from Clearview AI?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

⏰ This week’s Brief is a ~14-minute read.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for as little as $5/month. If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support the democratization of AI ethics literacy and to ensure we can continue to serve our community.

NOTE: When you hit the subscribe button, you may land on a Substack page that asks you to enter your email address again if you’re not already signed in to Substack. Please do so, and you’ll be directed to the page where you can purchase the subscription.

This week’s overview:

✍️ What we’re thinking:

The Launch Space: A roadmap to more sustainable AI systems

🔬 Research summaries:

AI Certification: Advancing Ethical Practice by Reducing Information Asymmetries

📰 Article summaries:

The hacker who spent a year reclaiming his face from Clearview AI

Apple Walks a Privacy Tightrope to Spot Child Abuse in iCloud

AI datasets are prone to mismanagement, study finds

Training self-driving cars for $1 an hour

But first, our call-to-action this week:

Preserving the Ecosystem: AI, Data and Algorithms

The Montreal AI Ethics Institute is partnering with AI Policy Labs for a discussion on AI and the environment.

The discussion will cover how AI is being leveraged for a greener future. Given the computational power it requires, the technology can harm the environment while also holding the key to innovation. This paradox, viewed through an environmental lens, will be the mainstay of the meetup.

📅 September 9th (Thursday)

🕛 Noon – 1:30 PM EST

✍️ What we’re thinking:

From the Founder’s Desk:

The Launch Space: A roadmap to more sustainable AI systems

Our founder Abhishek Gupta will be speaking at this event hosted by Microsoft.

AI has a sizeable carbon footprint, both during training and deployment phases. How do we build AI systems that are greener? The first thing we need to understand is how to account for and calculate the carbon impact of all the resources that go into the AI lifecycle. So what is the current state of carbon accounting in AI? How effective has it been? And can we do better? This conversation will answer these questions and dive into what the future of carbon accounting in AI looks like and what role standards can play in it, especially if we want to use actionable insights to trigger meaningful behavior change.

🔬 Research summaries:

AI Certification: Advancing Ethical Practice by Reducing Information Asymmetries

How can we incentivize the adoption of AI ethics principles? This paper explores the role of certification. Based on a review of the management literature on certification, it shows how AI certification can reduce information asymmetries and incentivize change. It also surveys the current landscape of AI certification schemes and briefly discusses implications for the future of AI research and development.

To delve deeper, read the full summary here.

📰 Article summaries:

The hacker who spent a year reclaiming his face from Clearview AI

What happened: After reading the New York Times story about Clearview AI in 2020, a person living in Germany wanted to check whether the company held any data about him, given his concern for his privacy and how rarely he shared pictures of himself online. He was shocked to discover that Clearview AI had found two images of him that he didn’t even know existed. He filed a complaint with the Hamburg Data Protection Authority, which, after a 12-month back-and-forth with the company, finally ordered it to remove the mathematical hash that characterized his biometric data, his face.

Why it matters: Given the way Clearview AI has gathered data on people’s faces from public sources, the person from Germany rightly claims in the interview that the company has made it impossible to remain anonymous. Unlike a regular search engine, it takes a face as input and digs up specific matches to it, which could make people afraid to participate in a protest for fear of being identified, even when it is legal to do so. Moreover, it has implications for what happens to all the data captured by CCTV and other surveillance mechanisms that record us without our consent all the time, potentially limiting people’s freedom of movement in the built environment.

Between the lines: What caught my attention was that the person from Germany mentioned that erroneous matches were returned to him as part of his data request. This is to be expected because no algorithm is perfect, but it has a severe consequence: if authorities rely on this data to make determinations about people’s movements, they might draw false conclusions. Also, this technology might still be OK in a perfectly functioning democracy (which rarely if ever exists or will exist), but what would happen to it and its capabilities if the regime changes to something more authoritarian?

Apple Walks a Privacy Tightrope to Spot Child Abuse in iCloud

What happened: Apple has introduced a new feature for devices that use iCloud that will scan images to determine whether they contain child sexual abuse material (CSAM). It is being heralded as a win in the fight against child abuse online, while some privacy activists believe that it weakens the privacy protections the Apple ecosystem offers its users. The determination process is split between the device and the cloud: hashes are computed on the images and compared against a database of known CSAM hashes that is downloaded to the user’s device through a blinding process. The blinding prevents a user from reverse engineering the hashes, which guards against abuse and evasion of the detection system. The system also uses something called NeuralHash, which is robust to the image alterations that abusers might use to evade detection. Finally, it uses private set intersection to alert the system only when hashes match, otherwise remaining silent and preventing Apple from gaining access to the hashes of all your images.
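
For readers who want a feel for how hash-based matching can tolerate small image alterations, here is a minimal sketch in Python. It is not Apple's system: the "average hash" below is a simple, well-known stand-in for NeuralHash, the images are toy 4x4 grayscale grids, and all function names and the `max_distance` threshold are hypothetical choices for illustration. The sketch also compares hashes in the clear, so it provides none of the blinding or private set intersection guarantees described above.

```python
from typing import List, Set

Image = List[List[int]]  # toy grayscale image: rows of 0-255 pixel values


def average_hash(image: Image) -> int:
    """Toy perceptual hash (NOT NeuralHash): one bit per pixel,
    set when the pixel is brighter than the image's mean brightness."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def matches_known(image_hash: int, known_hashes: Set[int], max_distance: int = 2) -> bool:
    """True when the hash is within max_distance bits of any known hash.
    max_distance is an illustrative threshold, not a value from the article."""
    return any(hamming_distance(image_hash, k) <= max_distance for k in known_hashes)


# Hypothetical data: a "known" image, a uniformly brightened copy, and an unrelated image.
original = [
    [10, 200, 30, 220],
    [15, 210, 25, 230],
    [12, 205, 35, 225],
    [18, 215, 28, 235],
]
altered = [[p + 5 for p in row] for p_row in [None] for row in original]
unrelated = [[220, 30, 200, 10] for _ in range(4)]

known = {average_hash(original)}
altered_is_match = matches_known(average_hash(altered), known)
unrelated_is_match = matches_known(average_hash(unrelated), known)
```

In this toy setup the brightened copy hashes to the same bits as the original, because every pixel keeps its position relative to the mean, while the unrelated image differs in every bit. A real deployment would need a far more robust hash and a protocol that hides non-matching hashes from the server, which is the role private set intersection plays in the design the article describes.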

Why it matters: Online services have certainly made it easier to spread CSAM, and this move by Apple is a huge win in combating this scourge. But the concerns raised by privacy activists also have some merit: what other demands might the company accede to in the future in the interest of law enforcement? It is the setting of the precedent that scares privacy scholars and activists, more so than this particular instance, which is quite clearly beneficial for the health of our information ecosystem.

Between the lines: The engineering solutions proposed to tackle CSAM detection in a privacy-preserving fashion will have many other positive downstream uses, and they are a net win overall in designing technology that can keep harm at bay while still maintaining users’ fundamental rights and expectations, like privacy. Public discussion of such technology through open-source examination might be another way to boost people’s confidence in the solutions being rolled out, while also potentially pointing out holes in the technology and leading to a more robust solution overall.

AI datasets are prone to mismanagement, study finds

What happened: Researchers from Princeton found that popular image datasets used to train computer vision models contain data that may have been collected without consent. In addition, they found that the datasets had been misused through modifications: in some cases it wasn’t clear whether the modifications were allowed under the licenses, and in others the licenses on the original datasets did not clearly allow them. Two of the three datasets have been taken down from their original sources, while one continues to exist with a disclaimer that the data shouldn’t be used for commercial purposes. The two that were taken down are still accessible through unofficial means, such as torrents and other places that have archived them.

Why it matters: Ethically dubious applications are powered by the training data in these datasets, often going beyond the original intentions and purposes for which the datasets were created. The authors of the paper recommend stewardship of datasets throughout their existence and being more proactive about potential misuses. They also recommend making the licenses associated with these datasets clearer. This will hopefully reduce consent violations and downstream misuses.

Between the lines: What is still missing from the conversation is how the solutions mentioned in the article are non-binding, voluntary, and won’t actually lead to any change as long as the benefits to be derived from using the training datasets outweigh the (non-existent) costs associated with their misuses. If this problem is to be tackled effectively, the suggestions need to be a lot more rigorous and have elements of enforceability and legal might that will deter misuse and strongly mandate consent for any data used to compose the dataset.

Training self-driving cars for $1 an hour

What happened: The article highlights the abysmal rates paid to the workers who help power one of the most lucrative and well-funded sub-industries within AI: self-driving vehicles. Given that the dominant paradigm for getting these systems to work effectively still requires large amounts of labeled data, it is not surprising that loads of money is poured into building datasets that companies can use to train their systems. Some interesting highlights from the article show how this demand has reshaped the crowdsourced work industry: much higher accuracy is demanded from workers, supervisors and checkers are placed over the data labelers, and there is additional distance between those who provide these tasks and those who complete them.

Why it matters: Fair compensation for work, especially work that is crucial to the existence and continuation of the self-driving industry, is the least that can be done, particularly when such companies are extremely well-funded. The platform discussed in the article, Remotasks, is owned by Scale AI, a giant AI company valued at close to $7 billion. The AI engineers who develop models are typically based in places like San Francisco, while the workers who painstakingly construct the datasets that power these systems work in the Global South with none of the benefits offered to the employees of the organizations that contract out this work.

Between the lines: As the requirements for constructing datasets for these systems become more rigorous, they will further reshape the crowdsourced work industry. One example in the article is a new label category called “atmospherics” that requires labeling raindrops in an image so that the vehicle does not mistake the raindrops captured by its powerful onboard cameras for obstacles. The tasks are only going to become more tedious, which will make the pace of such dataset construction unsustainable in the long run.

From our Living Dictionary:

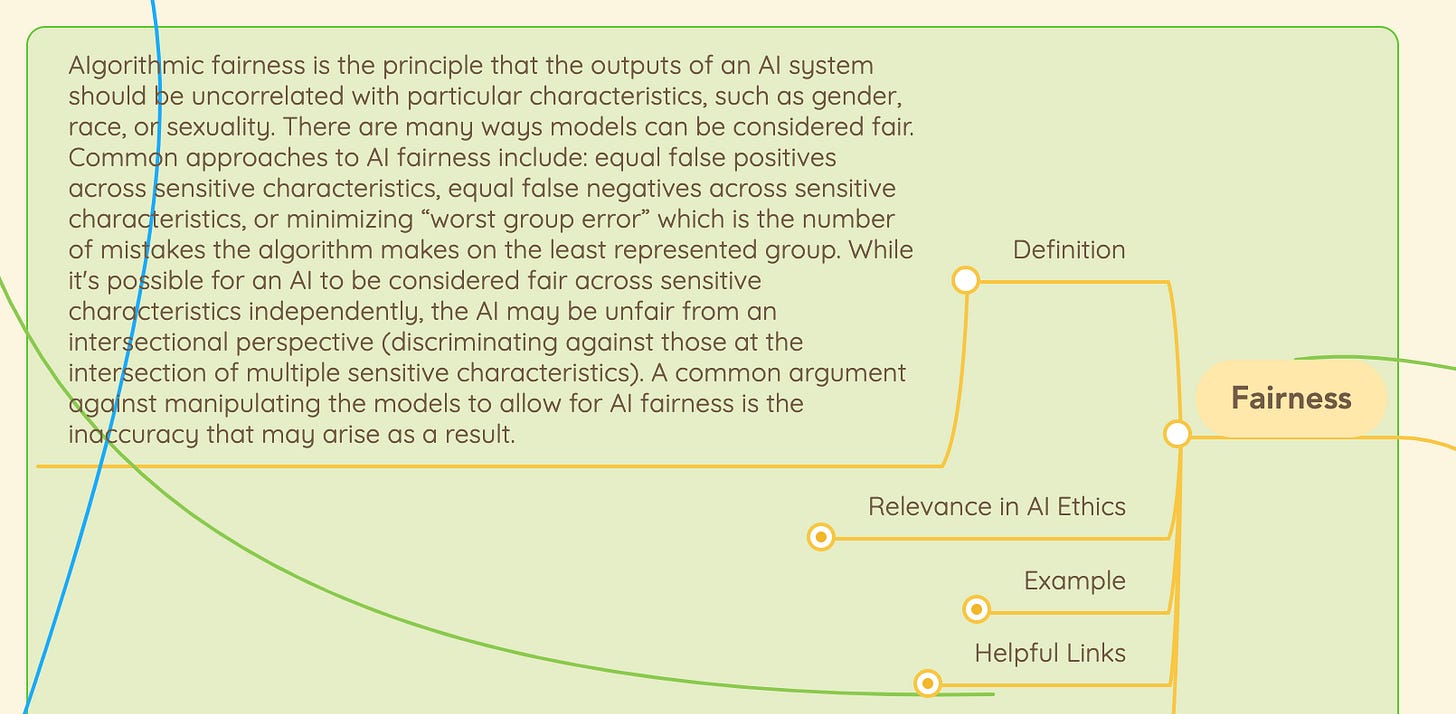

‘Fairness’

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

From elsewhere on the web:

AI Weekly: The road to ethical adoption of AI (VentureBeat)

Our founder Abhishek Gupta is featured in the article with the following quote from our State of AI Ethics Report Volume 5:

“Design decisions for AI systems involve value judgements and optimization choices. Some relate to technical considerations like latency and accuracy, others relate to business metrics. But each require careful consideration as they have consequences in the final outcome from the system. To be clear, not everything has to translate into a tradeoff. There are often smart reformulations of a problem so that you can meet the needs of your users and customers while also satisfying internal business considerations.”

To delve deeper, read the full article here.

In case you missed it:

Decision Points in AI Governance

Newman embarks on the lonely and brave journey of investigating how to put AI governance principles into action. To do this, three case studies are considered, covering ethics committees, publication norms, and intergovernmental agreements. While all three have their benefits, none is perfect, and Newman eloquently explains why. The challenges presented are numerous, but the way forward is visible, and that way is called practicality.

To delve deeper, read the full summary here.
