AI Ethics Brief #83: Preventing AI harms, evolution in age-verification apps, NATO's AI Strategy, and more ...

Who Is Governing AI Matters Just as Much as How It’s Designed

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

⏰ This week’s Brief is a ~18-minute read.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for as little as $5/month. If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support the democratization of AI ethics literacy and to ensure we can continue to serve our community.

*NOTE: When you hit the subscribe button, you may land on the Substack page where, if you’re not already signed in to Substack, you’ll be asked to enter your email address again. Please do so, and you’ll be directed to the page where you can purchase the subscription.

This week’s overview:

✍️ What we’re thinking:

Representation and Imagination for Preventing AI Harms

Evolution in Age-Verification Applications: Can AI Open Some New Horizons?

🔬 Research summaries:

NATO Artificial Intelligence Strategy

📰 Article summaries:

What Apple’s New Repair Program Means for You (And Your iPhone)

How We Investigated Facebook’s Most Popular Content – The Markup

Uncovering bias in search and recommendations

📅 Event

Value Sensitive Design and the Future of Value Alignment in AI

📖 Living Dictionary:

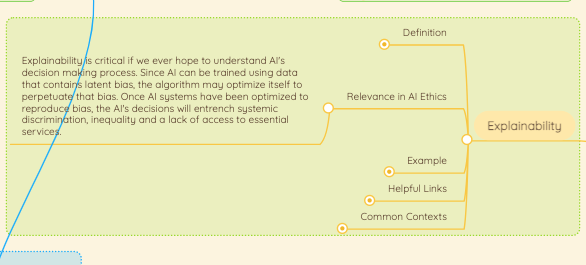

Explainability

🌐 From elsewhere on the web:

The Montreal Integrity Network: Workshop on the Ethics of Artificial Intelligence

International Conference on AI for People: Towards Sustainable AI - Keynote Speech titled “Turning the Gears: Organizational and Technical Challenges in the operationalization of AI Ethics”

International Conference on AI for People: Towards Sustainable AI - Roundtable on AI ethics

💡 ICYMI

Who Is Governing AI Matters Just as Much as How It’s Designed

But first, our call-to-action this week:

Value Sensitive Design and the Future of Value Alignment in AI

This online roundtable aims to explore whether and how Value Sensitive Design (VSD) can advance AI ethics in theory and practice. We expect a large audience of AI ethics practitioners and researchers. We will discuss the promises, challenges, and potential of VSD as a methodology for AI ethics, and for value alignment specifically.

Geert-Jan Houben (Pro Vice-Rector Magnificus Artificial Intelligence, Data and Digitalisation, TU Delft) will give the opening remarks.

Jeroen van den Hoven (Professor of Ethics and Philosophy of Technology, TU Delft) will act as moderator.

The panellists are as follows:

Idoia Salazar: President and co-founder of OdiseIA, and expert at the European Parliament’s Artificial Intelligence Observatory.

Marianna Ganapini PhD: Faculty Director, Montreal AI Ethics Institute, Assistant Professor of Philosophy, Union College.

Brian Christian: Journalist, UC Berkeley Science Communicator in Residence, NYT best-selling author of ‘The Alignment Problem’, Finalist, LA Times Best Science & Technology Book.

Batya Friedman: Professor in the Information School, University of Washington, inventor and leading proponent of VSD.

✍️ What we’re thinking:

Representation and Imagination for Preventing AI Harms

The AI Incident Database was launched publicly by the Partnership on AI in November 2020 as a dashboard of AI harms realized in the real world. Inspired by similar databases in the aviation industry, its theory of change derives from Santayana’s aphorism, “Those who cannot remember the past are condemned to repeat it.” As a new and rapidly expanding industry, AI lacked a formal history of its failures, and harms were beginning to repeat. The AI Incident Database therefore archives incidents such as a passport photo checker telling Asian people their eyes are closed, gender biases in language models, and the death of a pedestrian struck by an autonomous car. Making these incidents discoverable to future AI developers reduces the likelihood that they recur.

To delve deeper, read the full article here.

Evolution in Age-Verification Applications: Can AI Open Some New Horizons?

Have you ever been asked to prove your age or verify your identity when trying to buy a product or service? Many readers will answer yes. From buying a bottle of wine to signing up for a first driving lesson, age-verification requirements have long existed. Similarly, a wide range of online applications now require age verification before giving users access to services or content. In this digital age, age verification has therefore become a broad area of research and product development.

To delve deeper, read the full article here.

🔬 Research summaries:

NATO Artificial Intelligence Strategy

At the NATO Defence Ministers Meeting held in Brussels on October 21-22, 2021, the ministers agreed to adopt the NATO Artificial Intelligence Strategy (hereinafter “the strategy”). The strategy itself is not publicly available; what is accessible is a document titled ‘Summary of the NATO Artificial Intelligence Strategy’. This write-up provides an overview of that summary.

To delve deeper, read the full summary here.

📰 Article summaries:

What Apple’s New Repair Program Means for You (And Your iPhone)

What happened: Apple has announced a new program that makes replacement parts available to a wider set of repair service providers, and to consumers directly, so that people can make minor repairs themselves or take their devices to other repair shops to extend their lives. This has direct implications for accessibility: consumers who were previously charged a lot of money at Apple stores or authorized service providers now have a cheaper way to keep using their devices. Most importantly, extending a device’s lifespan reduces its impact on the planet. Given that embodied carbon emissions make up a major share of technology’s environmental impact, this is a great step forward.
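To make the embodied-carbon argument concrete, here is a rough worked example: if most of a device’s footprint is incurred before it is ever switched on, spreading that footprint over more years of use shrinks the yearly share. The function name and all figures below are hypothetical placeholders chosen for illustration, not Apple’s or anyone’s measured numbers.

```python
def annual_footprint_kg(embodied_kg: float, use_kg_per_year: float, lifespan_years: float) -> float:
    """Average yearly CO2e: embodied emissions amortized over the lifespan,
    plus the emissions from using the device each year."""
    if lifespan_years <= 0:
        raise ValueError("lifespan_years must be positive")
    return embodied_kg / lifespan_years + use_kg_per_year

# Hypothetical figures for illustration only.
EMBODIED_KG = 60.0      # assumed manufacturing/shipping footprint of one phone
USE_KG_PER_YEAR = 2.0   # assumed yearly footprint of charging it

for years in (2, 3, 4):
    print(years, "years:", annual_footprint_kg(EMBODIED_KG, USE_KG_PER_YEAR, years), "kg CO2e/year")
```

Under these assumed numbers, keeping the phone four years instead of two roughly halves its yearly footprint, because the embodied term dominates the use-phase term.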

Why it matters: This is a huge win for advocates of the “Right to Repair” movement, and, as the article mentions, a company as large as Apple making such a move can set a trend for other companies to follow with similar services. Given how quickly we cycle through our devices, longer device lifespans may also indirectly shape the kind of software that gets developed, encouraging software that continues to support older hardware rather than backward-incompatible updates that force users onto newer devices.

Between the lines: The usual reason given for not offering such programs has been that unauthorized repair centers might pose security and privacy risks to consumers’ data on those devices. The current move may be coming on the heels of hints from the FTC that it could introduce more stringent regulations requiring manufacturers to let consumers repair their devices themselves or obtain replacement parts, so that they can choose repair providers outside those authorized by the manufacturer.

How We Investigated Facebook’s Most Popular Content – The Markup

What happened: The team at The Markup reviewed Facebook’s recently published “Widely Viewed Content Report”, a document the company publishes in the interest of transparency, to see how content spreads, how frequently that content appears on the platform, and how the rankings of various websites change depending on the methodology used. They used their Citizen Browser project to replicate the calculations done by Facebook’s team and, applying statistical methods, determined that their sample size and approach were sufficient to draw statistically significant conclusions about the top-performing content on the platform.
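A minimal sketch of the kind of sample-size reasoning involved: the margin of error on an estimated proportion shrinks with the square root of the sample size. This uses the textbook normal-approximation (Wald) interval and a hypothetical panel of 1,000 users; it is not The Markup’s actual methodology or data, and `margin_of_error` is an illustrative name.

```python
import math

def margin_of_error(p_hat: float, n: int, z: float = 1.96) -> float:
    """Half-width of a normal-approximation confidence interval for a proportion.
    z = 1.96 corresponds to roughly 95% confidence."""
    if n <= 0:
        raise ValueError("n must be positive")
    return z * math.sqrt(p_hat * (1 - p_hat) / n)

# Hypothetical panel: 1,000 users, 5% of whom saw a given link.
moe = margin_of_error(0.05, 1000)
print(f"5% ± {moe:.2%}")
```

Quadrupling the panel size halves the margin of error, which is why the size of a panel matters so much when deciding whether differences between top-ranked items are meaningful.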

Why it matters: The article dives into methodological details that are well worth reviewing, but more importantly, it highlights the lack of transparency in the methodology Facebook published alongside the report, which is ironic given the report’s purpose. Facebook’s report focused on how many users viewed a piece of content and much less on how frequently that content appeared in a given user’s News Feed. This distinction matters: the more often someone sees a piece of content, the more likely they are to absorb its message, and frequency also correlates with the probability that they share it, amplifying its reach. Without that level of granularity, we get a poor facsimile of the actual distribution and influence of content on the platform.
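The difference between how many people saw a post (reach) and how often they saw it (frequency) can be shown with a toy impression log. The log, post IDs, and `reach_and_frequency` helper below are hypothetical and are not drawn from Facebook’s report or The Markup’s data.

```python
from collections import Counter

# Hypothetical impression log: one (user_id, post_id) entry per appearance in a feed.
impressions = [
    ("u1", "A"), ("u2", "A"), ("u3", "A"),   # post A: seen once each by three users
    ("u1", "B"), ("u1", "B"), ("u1", "B"),
    ("u2", "B"), ("u2", "B"), ("u2", "B"),   # post B: seen three times each by two users
]

def reach_and_frequency(log, post_id):
    """Return (reach, total impressions, average frequency per viewer) for one post."""
    per_user = Counter(user for user, post in log if post == post_id)
    reach = len(per_user)
    total = sum(per_user.values())
    return reach, total, (total / reach if reach else 0.0)

for post in ("A", "B"):
    print(post, reach_and_frequency(impressions, post))
```

Ranked by reach (unique viewers), post A comes first; ranked by total impressions, post B comes first with twice as many. A report that counts only viewers would miss that B is being shown to the same people repeatedly.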

Between the lines: As an effort to provide transparency, Facebook’s report is a great first step, but as The Markup’s investigation points out, the report obscures quite a bit, especially given how little information it provides about the methodology behind its final numbers. Moreover, The Markup’s work now provides empirical evidence that sensationalist content and opinion sites outperform mainstream news content on the platform, something that isn’t apparent in Facebook’s report because of how its calculations are carried out.

Uncovering bias in search and recommendations

What happened: The team at Vimeo, the video streaming platform, describes its work assessing bias in the search results and recommendations it provides to users. The team uses gender bias as an entry point for this assessment, with Learning to Rank (LTR) and BM25 as the underlying ranking approaches: they compare results for gender-neutral search terms, checking whether gendered terms appear in the returned result list and how those results are ordered. The article is chock-full of technical details, and one thing that stands out is the challenge of obtaining ground-truth labels. The relevance of search results, especially for items saved in a library, is highly specific to each user, which makes it difficult to generalize to the broader user base. So the team used clicks as the ground-truth signal for the supervised learning task.
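The summary does not give Vimeo’s exact metric, so the following is a minimal sketch under our own assumptions: label each result returned for a gender-neutral query as male-gendered, female-gendered, or neither using small word lists, then weight each gendered result by its rank with a DCG-style 1/log2(rank + 1) discount so that gendered terms near the top count more. The term lists, function names, and sample titles are hypothetical, not Vimeo’s.

```python
import math
import re
from typing import Optional

# Hypothetical, deliberately tiny term lists; a real audit needs far more care.
MALE_TERMS = {"he", "him", "his", "man", "men", "boy", "guy"}
FEMALE_TERMS = {"she", "her", "hers", "woman", "women", "girl"}

def gender_label(title: str) -> Optional[str]:
    """Return 'male' or 'female' if only one set of terms appears, else None."""
    words = set(re.findall(r"[a-z]+", title.lower()))
    male, female = bool(words & MALE_TERMS), bool(words & FEMALE_TERMS)
    if male and not female:
        return "male"
    if female and not male:
        return "female"
    return None  # neutral or mixed

def gendered_exposure(ranked_titles: list) -> dict:
    """Position-discounted exposure per gender label (rank 1 is the top result)."""
    exposure = {"male": 0.0, "female": 0.0}
    for rank, title in enumerate(ranked_titles, start=1):
        label = gender_label(title)
        if label:
            exposure[label] += 1 / math.log2(rank + 1)
    return exposure

# Hypothetical results for the gender-neutral query "skateboarding tutorial".
results = [
    "Guy lands his first kickflip",
    "Skateboarding basics for beginners",
    "She learns to ollie in a week",
]
print(gendered_exposure(results))
```

Both lists contain one gendered result, yet the male-gendered one receives twice the exposure because it sits at rank 1 rather than rank 3. That captures why the ordering of results matters and not just their presence.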

Why it matters: How experiments are run and how interaction data is collected can have a tremendous impact on the downstream tasks that use this data. For example, presenting two variations of blue button text and judging which one users prefer only reveals their preferences among blue buttons and says nothing about green ones. In addition, only a small sample of Vimeo users self-declare gender pronouns, and drawing conclusions from that sample about the broader user base poses challenges: some users may choose not to identify, and the team behind the analysis acknowledges that Vimeo offers limited options for gender pronoun identification. Still, this work offers a great starting point for diving into how bias may manifest in search results and recommendations.

Between the lines: For platforms even larger than Vimeo, bias in which results appear when a user types in a search query, and particularly in how those results are ordered (think back to how rarely you navigate beyond the first page of Google search results), has the potential to amplify gender and other biases significantly if clicks and other user-driven metrics are used to model relevance for downstream tasks. Studies conducted by platforms themselves rather than by external organizations have the upside that the platforms have the deepest access to all the metrics and interactions; of course, this comes with the caveat that negative findings from such an investigation may be suppressed for business reasons.
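The amplification risk can be illustrated with a toy simulation. Three items are equally relevant, but users mostly examine the top slot, and the items are re-ranked each round by accumulated clicks. The examination probabilities, the one-click head start, and the `simulate` function are all made-up assumptions; this is not a model of Vimeo, Google, or any real ranking system.

```python
# Hypothetical position-bias weights: probability that a user examines each slot.
EXAMINE_PROB = [0.6, 0.3, 0.1]

def simulate(rounds: int, users_per_round: int = 100) -> dict:
    """Rank items by accumulated clicks (used as the relevance signal) and add the
    expected clicks each slot receives, for a fixed number of rounds."""
    true_relevance = {"A": 0.5, "B": 0.5, "C": 0.5}  # identical by construction
    clicks = {"A": 1.0, "B": 0.0, "C": 0.0}          # A starts with a tiny head start
    for _ in range(rounds):
        # sorted() is stable, so ties keep their insertion order (A, B, C).
        ranking = sorted(clicks, key=clicks.get, reverse=True)
        for slot, item in enumerate(ranking):
            clicks[item] += users_per_round * EXAMINE_PROB[slot] * true_relevance[item]
    return clicks

print(simulate(10))
```

After ten rounds, item A has about twice as many clicks as B and six times as many as C, even though all three are equally relevant: the initial ordering, not the content, drives the click signal that later trains the ranker.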

📖 From our Living Dictionary:

“Explainability”

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

The Montreal Integrity Network: Workshop on the Ethics of Artificial Intelligence

Our Partnerships Manager, Connor Wright, is speaking at a session hosted by the Montreal Integrity Network, an enriching discussion about AI applications and their ethical considerations in a corporate environment. The session will cover the following topics: what AI is and what it is not; the current state of AI and future trends; and the ethical challenges of AI applications.

International Conference on AI for People: Towards Sustainable AI

At this conference hosted by AI for People, our founder delivered a Keynote Speech titled “Turning the Gears: Organizational and Technical Challenges in the operationalization of AI Ethics” and our staff also participated in a roundtable on AI ethics discussing common challenges and opportunities in the space.

The panel featured three professionals who engage with issues surrounding AI ethics in their respective disciplines: Masa Sweidan, McGill alumna and business development manager at the Montreal AI Ethics Institute (MAIEI); Ignacio Cofone, assistant professor of privacy law, AI law, and business associations at McGill; and Mark Likhten, legal innovation lead at the Cyberjustice Lab at the Université de Montréal (UdeM).

“Having education that includes women, BIPOC, and LGBTQ [communities] is extremely important,” Sweidan said. “Having people with different backgrounds, looking at it from the philosophy standpoint, [from computer science], from law, I think that is what leads to a more holistic education, and I think that is an extremely important first step.”

The Social Life of Algorithmic Harms - Call for Applications by Data & Society

Data & Society academic workshops enable deep dives with a broad community of interdisciplinary researchers into topics at the core of Data & Society’s concerns. They’re designed to maximize scholarly thinking about the evolving and socially important issues surrounding data-driven technologies, and to build connections across fields of interest.

Participation is limited; apply here by December 3, 2021 at 11:59 p.m. EST.

💡 In case you missed it:

Who Is Governing AI Matters Just as Much as How It’s Designed

Technology is evolving at an exciting but also alarming pace. We have seen various cases showing the implications of deploying technology without foundational governance mechanisms to mould its behaviour and consequences. Just as importantly, we do not have adequate mechanisms to protect communities, especially marginalized communities, from the harms that arise when AI goes ungoverned and unregulated.

The question of who is governing AI is significant. One primary reason is that, depending on which stakeholder sets the governance framework, that framework could exist outside state laws and policies. The distinction between private and public stakeholder governance is essential, since the normative responsibilities and desired outcomes of deploying the technology vary greatly between them. Baseline design variables such as accuracy, fairness, and explainability continue to evolve and vary depending on whom the AI application is serving. Creating definitions across sectors that do not work together will inevitably cause harm at scale.

To delve deeper, read the full summary here.
