AI Ethics Brief #113: Auditing the auditors, virtue ethics, performative power, AI ethics in education, and more ...

Is Gmail silencing Republicans?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

⏰ This week’s Brief is a ~34-minute read.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can, to support us: become a paying subscriber for as little as $5/month. If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support the democratization of AI ethics literacy and to ensure we can continue to serve our community.


This week’s overview:

🔬 Research summaries:

Who Audits the Auditors? Recommendations from a field scan of the algorithmic auditing ecosystem

A Virtue-Based Framework to Support Putting AI Ethics into Practice

Breaking Fair Binary Classification with Optimal Flipping Attacks

FaiRIR: Mitigating Exposure Bias from Related Item Recommendations in Two-Sided Platforms

Performative Power

Ethics of AI in Education: Towards a Community-wide Framework

📰 Article summaries:

Is Gmail Silencing Republicans?

Algorithms Quietly Run the City of DC—and Maybe Your Hometown

Will nationalism end the golden age of global AI collaboration?

📖 Living Dictionary:

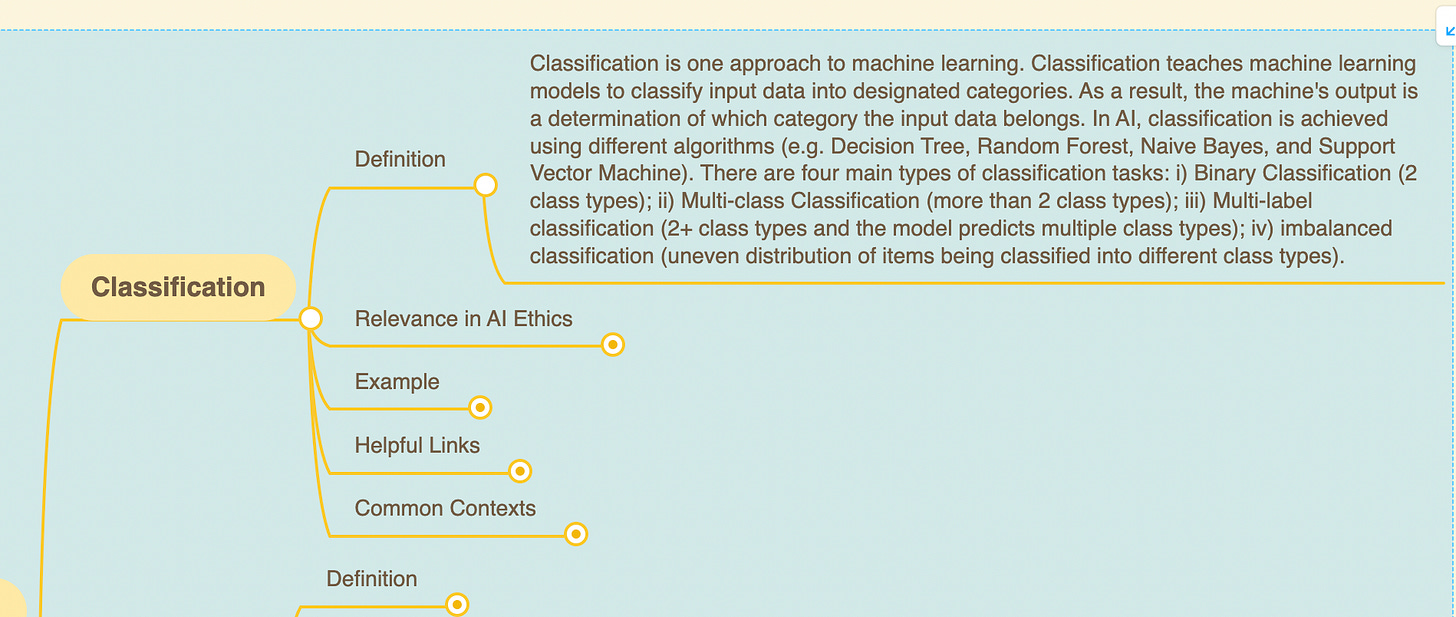

What is classification?

🌐 From elsewhere on the web:

How efficient code increases sustainability in the enterprise

💡 ICYMI

Do Less Teaching, Do More Coaching: Toward Critical Thinking for Ethical Applications of Artificial Intelligence

But first, our call-to-action this week:

Come hang out with me in Stanford, CA on Nov. 15th!

I’ll be in Stanford, CA on Nov. 15th (Tuesday) and would love to meet our reader community in person for a coffee! We’ll chat about everything AI :)

🔬 Research summaries:

Who Audits the Auditors? Recommendations from a field scan of the algorithmic auditing ecosystem

The AI audit field is now larger than ever, a response to the variety of harms AI can cause. However, while there is consensus within the field, it is questionable whether that agreement can be operationalized and how strong the general commitment to the cause really is.

To delve deeper, read the full summary here.

A Virtue-Based Framework to Support Putting AI Ethics into Practice

A virtue-based approach specific to the AI field is a missing component in putting AI ethics into practice, as virtue ethics has often been disregarded in AI ethics research thus far. To close this research gap, a new paper describes how a specific set of “AI virtues” can act as a further step forward in the practical turn of AI ethics.

To delve deeper, read the full summary here.

Breaking Fair Binary Classification with Optimal Flipping Attacks

Fair classification has been widely studied since current machine learning (ML) systems tend to make biased decisions across different groups, e.g., race, gender, and age. We especially focus on fair classification under corrupted data, motivated by the fact that the web-scale data we use for training models is potentially poisoned by random or adversarial noise. This paper studies the minimum amount of data corruption required for a successful attack against a fairness-aware learner.
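To make the idea of label flipping concrete, here is a minimal toy sketch. It is not the paper's attack or its fairness-aware learner: the data, the `flip_labels` helper, and the choice of a simple gap in positive-label rates between two groups are all assumptions made for illustration. It only shows how corrupting a few training labels can shift a group-level statistic that a fairness constraint might depend on.

```python
from typing import List, Tuple

# Each example is (group, label), both 0 or 1. Hypothetical toy format.
Example = Tuple[int, int]


def positive_rate(data: List[Example], group: int) -> float:
    """Fraction of examples in `group` whose label is 1."""
    labels = [y for g, y in data if g == group]
    return sum(labels) / len(labels)


def parity_gap(data: List[Example]) -> float:
    """Absolute difference in positive-label rate between the two groups."""
    return abs(positive_rate(data, 0) - positive_rate(data, 1))


def flip_labels(data: List[Example], budget: int) -> List[Example]:
    """Toy corruption: flip up to `budget` positive labels in group 1 to 0."""
    flipped = []
    remaining = budget
    for g, y in data:
        if remaining > 0 and g == 1 and y == 1:
            flipped.append((g, 0))
            remaining -= 1
        else:
            flipped.append((g, y))
    return flipped


# Hypothetical balanced data: each group has 5 positives out of 10 examples.
clean = [(0, 1)] * 5 + [(0, 0)] * 5 + [(1, 1)] * 5 + [(1, 0)] * 5
poisoned = flip_labels(clean, budget=2)

print("gap before flipping:", parity_gap(clean))
print("gap after flipping: ", parity_gap(poisoned))
```

In this toy setup, flipping just two of twenty labels opens a gap between the groups where none existed. The paper asks the harder question of how small such a corruption budget can be while still defeating a learner that is explicitly trying to be fair.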

To delve deeper, read the full summary here.

FaiRIR: Mitigating Exposure Bias from Related Item Recommendations in Two-Sided Platforms

Related Item Recommendation (RIR) algorithms weave through the fabric of online platforms, with far-reaching effects on different stakeholders. While customers rely on them for exploring products/services that appeal to them, producers depend on them for their livelihood. Such far-reaching effects demand that these systems be fair to these different stakeholders. Though fairness in personalized recommendations has been well discussed in the community, fairness in RIRs has been overlooked. To this end, in the current work, we propose a novel suite of algorithms that aims at ensuring fairness in related item recommendations while preserving the underlying relatedness of the algorithms.

To delve deeper, read the full summary here.

Performative Power

We propose performative power as a measure to assess the power of a firm within a digital economy. In contrast to classical measures of market power, performative power does not rely on a specific market definition; it instead uses observational data to directly measure the effect an algorithmic system can have on the behavior of its participants.

To delve deeper, read the full summary here.

Ethics of AI in Education: Towards a Community-wide Framework

It is almost certainly the case that all members of the Artificial Intelligence in Education (AIED) research community are motivated by ethical concerns, such as improving students’ learning outcomes and lifelong opportunities. However, as has been seen in other domains of AI application, ethical intentions are not by themselves sufficient, as good intentions do not always result in ethical designs or ethical deployments (e.g., Dastin, 2018; Reich & Ito, 2017; Whittaker et al., 2018). Significant attention is required to understand what it means to be ethical, specifically in the context of AIED. The educational contexts which AIED technologies aspire to enhance highlight the need to differentiate between doing ethical things and doing things ethically, to understand and to make pedagogical choices that are ethical, and to account for the ever-present possibility of unintended consequences, along with many other considerations. However, addressing these and related questions is far from trivial, not least because it remains true that “no framework has been devised, no guidelines have been agreed, no policies have been developed, and no regulations have been enacted to address the specific ethical issues raised by the use of AI in education” (Holmes et al., 2018, p. 552).

To delve deeper, read the full summary here.

📰 Article summaries:

Is Gmail Silencing Republicans?

What happened: This spring, a study by researchers at North Carolina State University found that Gmail sent most emails from “left-wing” candidates to the inbox and most emails from “right-wing” candidates to the spam folder. After Republicans in Congress had private meetings with Google’s chief legal officer, a group of Republican senators introduced a bill called the Political BIAS Emails Act. This would forbid providers of email services from using algorithms to flag as spam any emails from political campaigns that consumers have elected to receive.

Why it matters: In Gmail, “the percentage of emails marked as spam from the right-wing candidates grew steadily as the election date approached.” An increase in emails sent led to Google’s spam-filtering algorithm marking a larger fraction of them as spam. Moreover, user behavior has a major impact on spam filtering. Users are reporting Republicans’ emails as spam, which is training Google’s filtering algorithm to recognize similar emails as spam. “Could it be that people receiving emails from Republicans just … really don’t want those emails?”

Between the lines: Last month, the Republican National Committee (RNC) filed a lawsuit against Google, referring to common-carrier laws and antidiscrimination laws in California, to argue that the company was illegally discriminating against Republicans. The notion that Republicans are being “shadowbanned” on Big Tech platforms has been a major concern for the party over the past several years. In this particular instance, this strong sentiment does not seem to be shared widely.

Algorithms Quietly Run the City of DC—and Maybe Your Hometown

What happened: A new report from the Electronic Privacy Information Center (EPIC) found that algorithms were used across 20 city agencies in Washington, DC, with more than a third deployed in policing or criminal justice. This draws attention to the fact that cities have put bureaucratic algorithms to work across their departments, where they can contribute to decisions that affect citizens’ lives.

Why it matters: Government agencies can benefit from automation in various contexts, including screening housing applicants, predicting criminal recidivism, and identifying food assistance fraud, amongst other things. There is certainly an added element of efficiency, but it’s often difficult for citizens to know when these algorithms are at work, and some systems have been found to discriminate. For example, in Michigan, an unemployment-fraud detection algorithm with a 93% error rate caused 40,000 false fraud allegations.

Between the lines: Agencies are generally unwilling to share information about their systems due to “trade secrecy and confidentiality.” EPIC says governments can help citizens understand their use of algorithms by requiring disclosure anytime a system makes an important decision. However, some experts warn against thinking that algorithm registries automatically lead to accountability. Registries can work if rules or laws are in place to require government departments to take them seriously, but in certain instances, they can be quite incomplete.

Will nationalism end the golden age of global AI collaboration?

What happened: PyTorch, an open-source machine learning framework, has become a foundational component of AI technology and has risen above geopolitical tensions between China and the U.S., facilitating the transfer of knowledge among people who are trying to learn from each other. However, efforts from both governments to prevent collaboration between the two countries could hurt the lively ecosystem.

Why it matters: The “stateless mashups advancing AI” include free bundles of code, data sets, and pre-built machine learning models that exist due to cross-border collaboration. Despite this, the Chinese Communist Party wants self-sufficiency. China is not only building applications on top of open-source AI frameworks created by U.S. technologists but also combining open-source components from around the world to produce new technologies. Similarly, the U.S. government is increasingly advocating for stronger AI tech protections to block the flow of advanced AI-related technologies to China.

Between the lines: The consequences of rising tensions between the U.S. and China are significant because the current information-sharing ecosystem for AI development is inherently international. “From a high level, decoupling as a broad brush, overall strategy — the fact that AI development is so globalized — renders that broad brush overall strategy relatively infeasible.”

📖 From our Living Dictionary:

What is classification?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

How efficient code increases sustainability in the enterprise

Everything counts in large amounts. You don’t have to be Google, or build large AI models, to benefit from writing efficient code. But how do you measure that?

It’s complicated, but that’s what Abhishek Gupta and the Green Software Foundation (GSF) are relentlessly working on. The GSF is a nonprofit formed by the Linux Foundation, with 32 organizations and close to 700 individuals participating in various projects to further its mission.

Its mission is to build a trusted ecosystem of people, standards, tooling and best practices for creating and building green software, which it defines as “software that is responsible for emitting fewer greenhouse gases.”

The likes of Accenture, BCG, GitHub, Intel and Microsoft participate in GSF, and its efforts are organized across four working groups: standards, policy, open source and community.

Gupta, who serves as the chair for the Standards working group at GSF, in addition to his roles as BCG’s Senior Responsible AI Leader & Expert and the Montreal AI Ethics Institute Founder & Principal Researcher, shared current work and roadmap on measuring the impact of software on sustainability.

💡 In case you missed it:

Do Less Teaching, Do More Coaching: Toward Critical Thinking for Ethical Applications of Artificial Intelligence

With new online educational platforms, a trend in pedagogy is to coach rather than teach. Without developing a critically evaluative attitude, we risk falling into blind and unwarranted faith in AI systems. For sectors such as healthcare, this could prove fatal.

To delve deeper, read the full summary here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.