AI Ethics Brief #71: Beginner's guide to AI ethics, collective action on AI, algorithmic accountability for the public sector, and more ...

How much do you trust explanations from AI systems?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

⏰ This week’s Brief is a ~14-minute read.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for as little as $5/month. If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support the democratization of AI ethics literacy and to ensure we can continue to serve our community.

*NOTE: When you hit the subscribe button, you may end up on the Substack page where if you’re not already signed into Substack, you’ll be asked to enter your email address again. Please do so and you’ll be directed to the page where you can purchase the subscription.

This week’s overview:

✍️ What we’re thinking:

A Beginner’s Guide for AI Ethics

🔬 Research summaries:

Algorithmic accountability for the public sector

Collective Action on Artificial Intelligence: A Primer and Review

📰 Article summaries:

Deleting unethical data sets isn’t good enough

How Data Brokers Sell Access to the Backbone of the Internet

The Secret Bias Hidden in Mortgage-Approval Algorithms

Even experts are too quick to rely on AI explanations, study finds

But first, our call-to-action this week:

Preserving the Ecosystem: AI, Data and Algorithms

The Montreal AI Ethics Institute is partnering with AI Policy Labs for a discussion on AI and the environment.

The discussion will cover how AI is being leveraged for a greener future. Given the computational power it requires, the technology can harm the environment while also holding the key to innovation. This paradox, viewed through an environmental lens, will be the mainstay of the meetup.

📅 September 9th (Thursday)

🕛 Noon – 1:30 PM EST

✍️ What we’re thinking:

AI Ethics 101:

A Beginner’s Guide for AI Ethics

The fascinating and ever-changing world of AI Ethics is one that encompasses a variety of principles that continue to shape the development and use of technology. Perhaps its greatest appeal is that it unites such diverse disciplines in an effort to create more holistic solutions for complex problems that arise from the collection of data to train machine learning models. It is therefore not surprising that the very nature of AI Ethics can be overwhelming for anyone who is beginning to explore all that the field has to offer. Hours spent sifting through the detailed research on niche topics or the surface-level buzzwords can be quite confusing and frustrating at times. In order to help you navigate this intersection of AI and ethics in various contexts, here is a curated list of our top 10 recommendations that can kickstart your exciting journey.

To delve deeper, read the full article here.

🔬 Research summaries:

Algorithmic accountability for the public sector

The developments in AI are never-ending, and so is the need for policy regulation. The report examines the policies that have been implemented so far, along with their successes and failings, and highlights two factors that emerge as pivotal in any policy setting: context and public participation.

To delve deeper, read the full summary here.

Collective Action on Artificial Intelligence: A Primer and Review

The development of safe and socially beneficial AI will require collective action, in the sense that outcomes will depend on the efforts of many different actors. This paper is a primer on the fundamental concepts of collective action in social science and a review of the collective action literature as it pertains to AI. The paper considers different types of AI collective action situations, different types of AI race scenarios, and different types of proposed solutions to AI collective action problems.

To delve deeper, read the full summary here.

📰 Article summaries:

Deleting unethical data sets isn’t good enough

What happened: The rapid progress of AI systems’ capabilities has been fueled by the availability of large-scale benchmark datasets. Many of those were collected by scraping data from the internet, often without the consent of the subjects whose data was scooped up in the process. The paper highlighted in this article discusses DukeMTMC, MS-Celeb-1M, and other datasets that were retracted after the community raised concerns. Yet they continue to linger online in various forms, often morphed to serve new purposes, such as adding masks to the faces in these datasets to improve the capability of facial recognition technology during the pandemic.

Why it matters: Some datasets do come with warnings and documentation on their limitations, but these end up being ignored, and derived datasets often lose that documentation altogether. This means that we have problematic datasets, replete with biases and privacy violations, continuing to exist in the wild, with hundreds of papers being written and published at conferences based on the results from training AI systems on that data.

Between the lines: A potential solution mentioned in the article is data stewardship, where a potentially independent organization takes on the role of stewarding the proper use of the data throughout its lifecycle. While noble, this faces tremendous challenges: funding for such organizations is hard to source, and because the academic domain continues to center on publishing state-of-the-art results, work such as stewardship would face an uphill battle for recognition. I’d be delighted if I’m proven wrong on this front and hope that we start to recognize the hard work that goes into preparing, maintaining and retiring datasets.

How Data Brokers Sell Access to the Backbone of the Internet

What happened: Netflow data tracks requests over the internet from one device to another. Piece enough of this together and you can learn about the patterns of communication of any individual or organization. In this article, we learn more about Team Cymru, a firm that builds products based on netflow data that it purchases from various internet service providers (ISPs). These products are then sold on to other cybersecurity firms and organizations that want to perform analysis for intelligence, surveillance, and many other purposes.

Why it matters: This is important because it allows tracking even through virtual private networks (VPNs), stripping away what anonymity remains on the internet. There is an inherent conflict in the collection of such data: on the one hand it is intrusive and strips away privacy, but on the other it enables some great cybersecurity work that helps protect against virtual and physical threats. As with all other dual-purpose technologies, this one requires a thorough analysis of the pros and cons, especially the potential for misuse, as the data stored with Team Cymru might fall into the hands of bad actors.

Between the lines: Something that caught my attention was how the Citizen Lab declined to comment on their use of a product from Team Cymru for a research report that they published. Given the strong upholding of rights and transparency that the Citizen Lab engages in, it seemed odd to not comment on the story. In addition, the Wyden requests for information to the Department of Defense for their purchase and use of internet metadata would also be interesting to examine to gain an understanding of the extent to which people’s internet activities are monitored.
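To make the "piece enough of this together" point concrete, here is a minimal Python sketch of how individual flow records could be aggregated into a communication profile for one device. The `FlowRecord` fields and the `communication_profile` helper are our own hypothetical simplification, not Team Cymru's product or the actual NetFlow record format, and the records are made up using IP ranges reserved for documentation.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowRecord:
    """One hypothetical flow record: who talked to whom, and how much."""
    src_ip: str
    dst_ip: str
    dst_port: int
    bytes_sent: int


def communication_profile(records, src_ip):
    """Sum the bytes sent from src_ip to each (destination, port) pair."""
    profile = Counter()
    for record in records:
        if record.src_ip == src_ip:
            profile[(record.dst_ip, record.dst_port)] += record.bytes_sent
    return profile


# Made-up records; the addresses come from documentation-only ranges.
records = [
    FlowRecord("192.0.2.10", "198.51.100.5", 443, 1200),
    FlowRecord("192.0.2.10", "198.51.100.5", 443, 800),
    FlowRecord("192.0.2.10", "203.0.113.7", 53, 90),
    FlowRecord("192.0.2.99", "198.51.100.5", 443, 500),
]

profile = communication_profile(records, "192.0.2.10")
for (dst_ip, dst_port), total_bytes in profile.most_common():
    print(f"{dst_ip}:{dst_port} received {total_bytes} bytes")
```

Even this toy version shows why the data is sensitive: no message content is needed to see which services a device talks to and how heavily.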

The Secret Bias Hidden in Mortgage-Approval Algorithms

What happened: A recent study conducted by The Markup on data from 2019 found strong biases against people of color in lending decisions made by financial institutions in the US. Even after controlling for new factors that are supposed to tackle racial disparities, the differences persisted. Even applicants of color with very high income levels ($100,000+) and low debt were rejected more often than White applicants with similar income levels but higher debt. The analysis was sent to the American Bankers Association and the Mortgage Bankers Association, both of which rejected the results, arguing that slices of information missing from the public data used by The Markup made the results incorrect. But they didn’t point out specific flaws in the analysis. Some of that data cannot be included in the analysis because the Consumer Financial Protection Bureau strips it to protect borrower privacy.

Why it matters: Laws like the Equal Credit Opportunity Act and the Fair Housing Act are supposed to protect against racial discrimination. But with organizations like Freddie Mac and Fannie Mae driving how loans are approved through their opaque rating systems, it is very difficult to override decisions made by automated systems. The article illustrates this with the case of a person who was denied a loan at the last moment, a denial that the 15 or so loan officers who also looked at the application could not resolve.

Between the lines: The algorithms used by these organizations date back more than 15 years and more heavily reward traditional credit, to which White people have more access. They also unfairly penalize structural elements like reports of missed payments filed by payday lenders, who are disproportionately present in neighborhoods with people of color. This skews the data toward bad financial behavior while ignoring good financial behavior such as on-time utility payments. The lack of transparency on the part of organizations like Freddie Mac and Fannie Mae, the protections they have against disclosing outcomes from their systems in public data, and the opaqueness around their evaluation methodology will continue to exacerbate the problem.

Even experts are too quick to rely on AI explanations, study finds

What happened: The article covers a recently published research study that found discrepancies between how designers and developers intend the features of AI systems to be understood and how the people who use and interact with those systems perceive them. Building on prior work from the domain of human-computer interaction (HCI), the researchers found that people both over-relied on the outputs from an AI system and misinterpreted what those outputs meant. This held even for people with knowledge of how AI systems work, when one might expect it only from those who don’t know how such systems operate. The study evaluated these discrepancies through a game where a robot had to gather supplies for stranded humans in space and explain its actions as it navigated the terrain to get those supplies. Participants in the experiment judged robots that provided numerical descriptions of their actions more favorably than those that provided natural language explanations.

Why it matters: This has direct implications for how we design explainability requirements, such as those put forth by the EU and NIST, in the sense that we need to know whether the explanations provided are perceived the way we intend. In particular, a mismatch between the two can lead to disastrous results and to over- or underconfidence in situations where more human attention is warranted.

Between the lines: The results from the research study are not all that surprising. Perhaps the only novel element is that even those with a background in AI tended to fall for this trap and this only serves to underscore the problem more: we need to be more deliberate in how we design explanations for AI systems so that the gap between intended meaning and perceived meaning is minimized.

From our Living Dictionary:

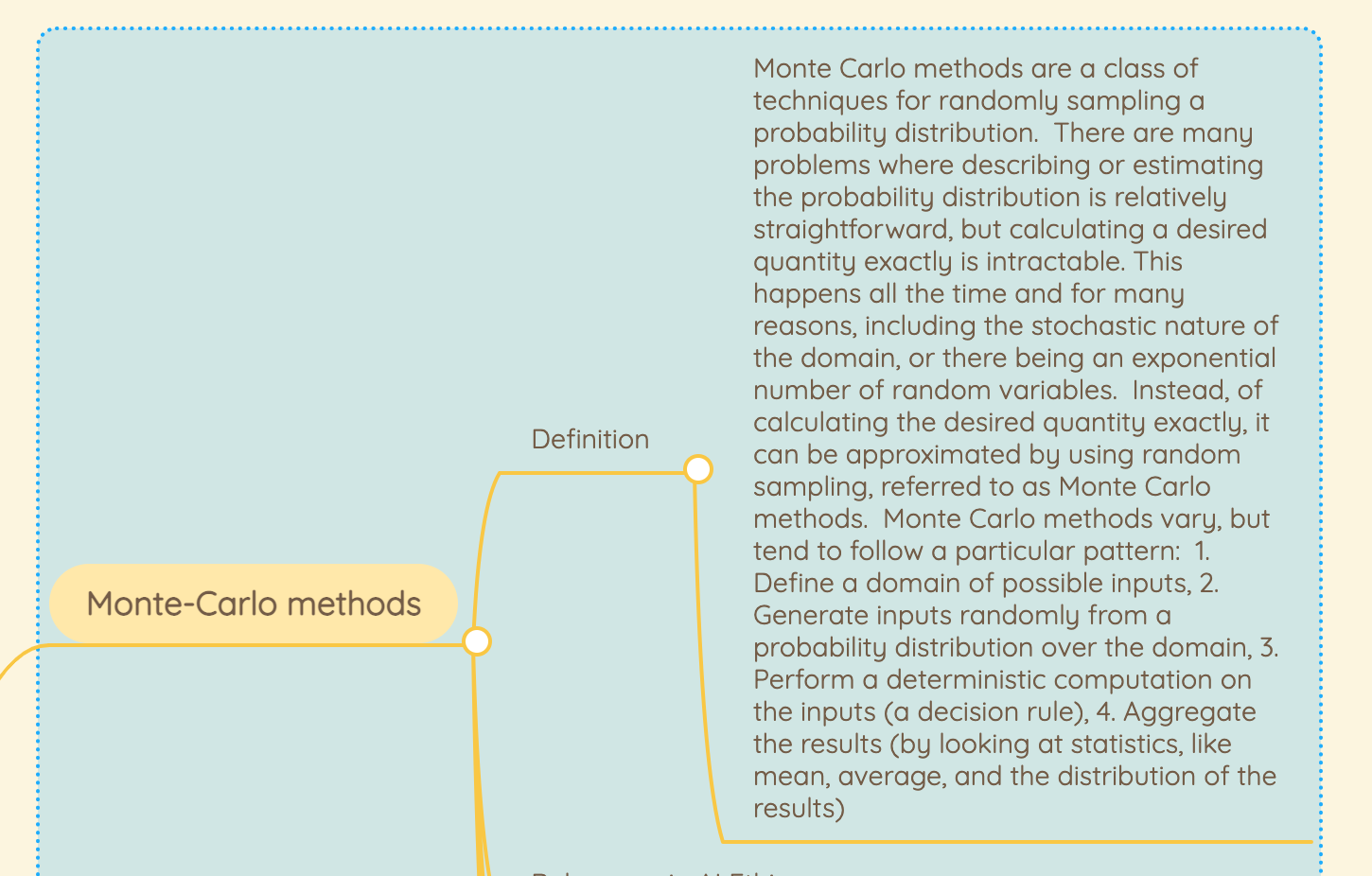

‘Monte Carlo methods’

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.
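For readers new to the term: Monte Carlo methods estimate a quantity by drawing many random samples and looking at the aggregate outcome. The sketch below is our own illustration in Python, not taken from the Living Dictionary entry. It estimates π by sampling random points in the unit square and counting how many land inside the quarter circle of radius 1. The function name `estimate_pi` and the fixed seed are assumptions made for the example.

```python
import random


def estimate_pi(num_samples, seed=0):
    """Estimate pi from the share of random points inside a quarter circle."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    inside = 0
    for _ in range(num_samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            inside += 1
    # The quarter circle covers pi/4 of the unit square.
    return 4.0 * inside / num_samples


estimate = estimate_pi(100_000)
print(f"Estimated pi: {estimate:.4f}")
```

The estimate gets closer to π as the number of samples grows, but it always carries sampling error, which is worth keeping in mind whenever results produced this way feed into decisions.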

In case you missed it:

Mass Incarceration and the Future of AI

The US, with a staggering 25% of the world’s prison population, has been called the incarceration nation. For millions of Americans, background checks obstruct their social mobility and perpetuate the stigma around criminal records. The digitization of individual records and the growing use of online background checks will lead to more automated barriers and biases that can prevent equal access to employment, healthcare, education and housing. The easy access to and distribution of an individual’s sensitive data included in their criminal record elicit a debate on whether public safety overrides principles of privacy and human dignity. The authors, in this preliminary discussion paper, address the urgency of regulating background screening and invite further research on questions of data access, individual rights and standards for data integrity.

To delve deeper, read the full summary here.
