AI Ethics Brief #93: Chinese surveillance infrastructure in Africa, moral zombies, maintaining fairness across distribution shifts, and more ...

Should Deepfake Luke Skywalker Scare Us?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

⏰ This week’s Brief is a ~28-minute read.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can, to support us: become a paying subscriber for as little as $5/month. If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support the democratization of AI ethics literacy and to ensure we can continue to serve our community.

*NOTE: When you hit the subscribe button, you may end up on the Substack page where if you’re not already signed into Substack, you’ll be asked to enter your email address again. Please do so and you’ll be directed to the page where you can purchase the subscription.

This week’s overview:

✍️ What we’re thinking:

Social Media Algorithms: The Code Behind Your Life

🔬 Research summaries:

Reports on Communication Surveillance in Botswana, Malawi and the DRC, and the Chinese Digital Infrastructure surveillance in Zambia

Bias in Automated Speaker Recognition

Moral Zombies: Why Algorithms Are Not Moral Agents

Group Fairness Is Not Derivable From Justice: a Mathematical Proof

Maintaining fairness across distribution shift: do we have viable solutions for real-world applications?

📰 Article summaries:

“Ukraine has been a giant test lab”: Russia’s cyberwar risks death and collateral damage

Lessons Learned on Language Model Safety and Misuse

Deepfake Luke Skywalker Should Scare Us

📖 Living Dictionary:

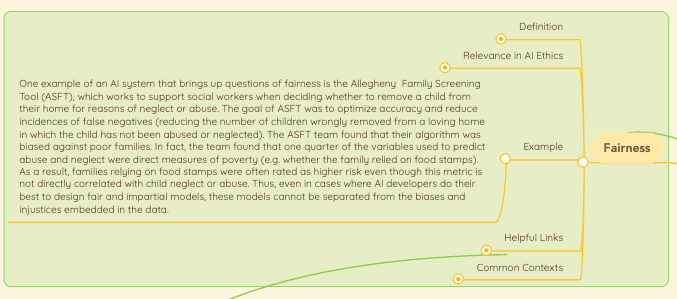

Fairness

💡 ICYMI

Achieving a ‘Good AI Society’: Comparing the Aims and Progress of the EU and the US

But first, our call-to-action this week:

What can we do as part of the AI Ethics community to help those who are suffering in the current war in Ukraine? We’re looking for suggestions: for example, tips (actions that we can all take) to prevent the spread of misinformation, ways to better understand the deployment of lethal autonomous weapons systems (LAWS) on the battlefield, etc.

✍️ What we’re thinking:

Social Media Algorithms: The Code Behind Your Life

Social media has become a major part of any young Canadian’s life. Platforms like Instagram, Facebook, and Snapchat allow users to connect with each other at any time from anywhere, while TikTok and YouTube provide endless amounts of content and entertainment. Consequently, users should know what happens “behind the scenes” of their most-used apps to understand the impact they may have on their lives. Furthermore, the dangers - like the benefits - of social media are many; we therefore explore regulations that can mitigate some of these harms.

To delve deeper, read the full article here.

🔬 Research summaries:

Reports on Communication Surveillance in Botswana, Malawi and the DRC, and the Chinese Digital Infrastructure surveillance in Zambia

Despite being on the periphery of the debate, African nations are strong consumers of digital surveillance technology. Because the continent lacks rigorous data laws and comprehensive education on digital rights, it presents terrain ripe for the installation of digital surveillance. The key to disrupting this scenario is education, but it remains to be seen whether governments want to be part of this initiative.

To delve deeper, read the full summary here.

Bias in Automated Speaker Recognition

AI-enabled voice biometrics are a hidden yet prevalent form of authentication. This paper examines sources of bias in the development and evaluation practices of voice-based identification systems. The authors show that the performance of speaker verification technology varies significantly with speakers’ demographic attributes and that the technology is prone to bias.

To delve deeper, read the full summary here.

Moral Zombies: Why Algorithms Are Not Moral Agents

Although algorithms affect the world in morally relevant ways, we do not intuitively hold them accountable for the consequences they cause. In this paper, Carissa Véliz offers an explanation of why this is: we do not treat algorithms as moral agents because they are not sentient.

To delve deeper, read the full summary here.

Group Fairness Is Not Derivable From Justice: a Mathematical Proof

Many legal procedures are now based on algorithms, and all algorithms are based on mathematics. However, introducing the ethical concepts of justice and fairness into this formal context is not trivial. This paper exploits a mathematical structure “to show that theories of justice do not provide a sufficient normative grounding for reasonable accounts of group fairness”.

To delve deeper, read the full summary here.

Maintaining fairness across distribution shift: do we have viable solutions for real-world applications?

If my model respects a desired fairness property in Hospital A, will it also be fair in Hospital B? In this work, we use examples from the healthcare domain to show how fairness properties can fail to transfer between the development and deployment environments. In these real-world settings, we show that this transfer is far more complex than the situations considered in current algorithmic fairness research, suggesting a need for remedies beyond purely algorithmic interventions.

To delve deeper, read the full summary here.
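As a rough illustration of the Hospital A versus Hospital B question above, here is a minimal Python sketch. It is not the paper’s method: the choice of metric (the gap in true positive rates between two groups, often called an equal-opportunity gap), the group names, and every number in it are assumptions made up for this example.

```python
from typing import List, Tuple

# One record per patient: (group, true_label, predicted_label).
# All records below are invented for illustration only.
Record = Tuple[str, int, int]


def make_records(group: str, caught: int, missed: int, negatives: int) -> List[Record]:
    """Build toy records: positives the model caught, positives it missed, and negatives."""
    return (
        [(group, 1, 1)] * caught
        + [(group, 1, 0)] * missed
        + [(group, 0, 0)] * negatives
    )


def true_positive_rate(records: List[Record], group: str) -> float:
    """Share of truly positive patients in `group` that the model flagged."""
    positives = [r for r in records if r[0] == group and r[1] == 1]
    if not positives:
        raise ValueError(f"no positive cases for group {group!r}")
    return sum(r[2] for r in positives) / len(positives)


def equal_opportunity_gap(records: List[Record], group_a: str, group_b: str) -> float:
    """Absolute difference in true positive rate between two groups."""
    return abs(true_positive_rate(records, group_a) - true_positive_rate(records, group_b))


# Hypothetical development site: the model treats both groups alike.
hospital_a = make_records("g1", caught=8, missed=2, negatives=10) + make_records(
    "g2", caught=8, missed=2, negatives=10
)

# Hypothetical deployment site: the same model misses far more cases in one group.
hospital_b = make_records("g1", caught=9, missed=1, negatives=10) + make_records(
    "g2", caught=5, missed=5, negatives=10
)

if __name__ == "__main__":
    for name, data in [("Hospital A", hospital_a), ("Hospital B", hospital_b)]:
        gap = equal_opportunity_gap(data, "g1", "g2")
        print(f"{name}: equal-opportunity gap = {gap:.2f}")
```

In this toy setup the gap is zero at the development site and large at the deployment site, even though the model itself is unchanged. A check like this can only detect the failure to transfer; it says nothing about why it happens or how to fix it.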

📰 Article summaries:

“Ukraine has been a giant test lab”: Russia’s cyberwar risks death and collateral damage

What happened: Historically, Russia has tested cyberwarfare techniques, including spreading disinformation, in Ukraine with a view to refining them and then deploying them in campaigns in other parts of the world. With the ongoing onslaught, the phantom war being waged through Russian cyber operations has the potential to aggravate harms beyond the physical attacks taking place. In particular, the targeting of critical infrastructure such as healthcare facilities and the electricity grid has the potential to make the situation even worse than it already is.

Why it matters: While the scope of these attacks has so far been limited compared to what might have been anticipated, the lurking shadow of cyberattacks has the potential to divert resources and attention from the physical attacks taking place. In addition, some researchers claim that Russian cyber-operatives have already penetrated key infrastructure in Ukraine and might ratchet up efforts on the digital front as the war rages on.

Between the lines: Disinformation has been a potent weapon that predated the actual physical incursion. A digital war can be waged at far greater scale while requiring fewer resources, and it faces fewer regulations and fewer means of attribution, making it a likely and unfortunately effective companion to the atrocities being inflicted on Ukraine. What remains to be seen is what might happen if the cyberattacks reach their full potential, and what organizations and governments around the world can do to offer support and defenses to protect critical Ukrainian infrastructure.

Lessons Learned on Language Model Safety and Misuse

What happened: GPT-3 is one of the most well-known AI systems, with broad capabilities and associated risks to boot. The safety team at OpenAI, the developer of GPT-3, has invested in mitigating harm from the widespread use of these capabilities. They acknowledge that, given the myriad ways in which such a model might be used, the breadth and scope of risks are quite large. But by slowly releasing API access through a beta, studying pilot results, testing internally using red teams, and monitoring use in the wild, they’ve started to bridge gaps in understanding how harms manifest.

Why it matters: Outcomes from this safety research have led to the development of pretraining data curation and filtering, along with other tools such as effective user documentation, mechanisms for screening harmful model outputs, retrospective reviews, and, perhaps most importantly, the creation of new benchmark datasets and evaluation measures. This last development is salient because existing benchmark datasets are not geared towards the biases and toxicity that can arise from the training and use of such large-scale language models.

Between the lines: As the trend towards large-scale models gains strength, what we see is that effective roll-out mechanisms, documentation, monitoring, and active prevention and defense research will be essential for the safe and meaningful use of this powerful technology. Transparency about the steps being undertaken and building up ecosystem-wide competence will be essential so that we can all contribute to such efforts.

Deepfake Luke Skywalker Should Scare Us

What happened: Highlighting how the deepfake of Mark Hamill in The Mandalorian fails to clear the uncanny valley, the article explores a couple of recent studies on people’s ability to distinguish deepfakes, which find that most people are not much better than chance at telling deepfakes apart from real images. In particular, and perhaps shockingly, even with training and feedback on mistakes, accuracy only improves from 48% to 59%. One of the authors of the studies argues that this might have to do with characteristics of deepfake faces (such as symmetry) that we are biased to trust more, exacerbated by our cognitive bias toward detecting faces all around us (for example, seeing a face in a wall socket). What they did find was that combining machines and humans for detection led to better results.

Why it matters: While benign replacements in movies and TV shows for our entertainment can hardly count as the most pressing problematic uses of deepfakes, the testing and refinement of this technology in the world of entertainment certainly gives some grounds for worry. In particular, given the varying expectations of audiences when they encounter “deepfakes in progress,” we can reasonably anticipate that such technologies will continue to be experimented with as our desire for actors from days past rises and as reboots and timeline extensions of our favorite movies and TV shows continue to be made.

Between the lines: What stood out to me from the experiment was the fact that even with feedback and training, we didn’t do much better at telling deepfakes apart. This raises important questions about what we need in order to effectively counter this problem in the wild. One promising avenue is working in conjunction with machines, since they seem to have complementary strengths, yet there is no guarantee that future developments in creating better deepfakes to defeat these machine-human hybrids won’t bring us back to square one. We will need continual investment to rebalance, time and again, the adversarial dynamic that exists in our information ecosystem.

📖 From our Living Dictionary:

“Fairness”

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

💡 In case you missed it:

Achieving a ‘Good AI Society’: Comparing the Aims and Progress of the EU and the US

Governments around the world are formulating different strategies to tackle the risks and the benefits of AI technologies. These strategies reflect the normative commitments highlighted in high-level documents from bodies such as the EU High-Level Expert Group on AI and the IEEE, among others. The paper “Achieving a ‘Good AI Society’: Comparing the Aims and Progress of the EU and the US” compares the strategies of, and the progress made in, the EU and the US. It concludes by highlighting areas where improvement is still needed to reach a “Good AI Society”.

To delve deeper, read the full summary here.
