AI Ethics Brief #73: Deepfake voices, embedding values in AI, bravery in AI ethics, and more ...

Should moral considerations be made for nonhumans in the ethics of AI?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

⏰ This week’s Brief is a ~16-minute read.

This week’s overview:

✍️ What we’re thinking:

Ethical and Transparent AI - what are the organizational and technical challenges to achieving Responsible AI in practice?

Carbon accounting as a way to build more sustainable AI systems: An analysis and roadmap for the community

🔬 Research summaries:

Embedding Values in Artificial Intelligence (AI) Systems

Moral Consideration of Nonhumans in the Ethics of Artificial Intelligence

Brave: what it means to be an AI Ethicist

📰 Article summaries:

This is the real story of the Afghan biometric databases abandoned to the Taliban

The Downside to Surveilling Your Neighbors

Stopping Deepfake Voices

The Stealthy iPhone Hacks That Apple Still Can't Stop

But first, our call-to-action this week:

✍️ What we’re thinking:

Ethical and Transparent AI - what are the organizational and technical challenges to achieving Responsible AI in practice?

Join our founder, Abhishek Gupta, in a live panel hosted by the City of Montreal and the Secretariat of the Quebec Treasury Board on "Ethical and Transparent Artificial Intelligence" at the Canadian Open Data Summit 2021. Bring your questions about the most pressing organizational and technical challenges we face in operationalizing Responsible AI.

Carbon accounting as a way to build more sustainable AI systems: An analysis and roadmap for the community

Our founder, Abhishek Gupta, has been invited to share his work on the environmental impacts of AI and how to build more sustainable AI systems at the Workshop on leveraging AI in environmental sciences hosted by the NOAA Center for Artificial Intelligence. You can learn more about what it takes to build greener AI systems and engage with communities that are exploring the intersection of AI and sustainability in practice.

🔬 Research summaries:

Embedding Values in Artificial Intelligence (AI) Systems

Though there are numerous high-level normative frameworks, it is still unclear how, or whether, values can be implemented in AI systems. Van de Poel and Kroes (2014) have provided an account of how to embed values in technology. The current article proposes to extend that view to complex AI systems and to explain how values can be embedded in technological systems that are "autonomous, interactive, and adaptive".

To delve deeper, read the full summary here.

Moral Consideration of Nonhumans in the Ethics of Artificial Intelligence

As AI has an increasingly large impact on the world, the extent to which AI ethics includes the nonhuman world becomes important. This paper calls for the field of AI ethics to give more attention to the values and interests of nonhumans. It examines the extent to which nonhumans are given moral consideration across AI ethics, finds that such attention is limited and inconsistent, argues that nonhumans merit moral consideration, and outlines five suggestions for incorporating them better across the field.

To delve deeper, read the full summary here.

Brave: what it means to be an AI Ethicist

The position of AI Ethicist is a recent arrival on the corporate scene, and one of its key novelties is the importance of bravery. Whether the role is taken seriously or treated as a PR stunt, the ability to be brave sits alongside the need to discern right from wrong.

To delve deeper, read the full summary here.

📰 Article summaries:

This is the real story of the Afghan biometric databases abandoned to the Taliban

What happened: As US forces exited Afghanistan, the technical infrastructure and data trails left behind began causing problems as they fell into the hands of the Taliban. In particular, the article dives into the details of the Afghan Personnel and Pay System (APPS), used by the Ministry of the Interior and the Ministry of Defense, which holds highly sensitive information, including biometrics of security personnel and their networks. The implications extend beyond breaches of privacy: there are real-world security concerns about how that data might be used against those who supported the previous regime.

Why it matters: Beyond the sensitive and immutable nature of the data in these databases, which have now been taken over by the Taliban, this is an unfortunate example of what happens when extensive data-gathering operations are conducted without regard for what may happen if the information falls into the hands of malicious actors. In this case, incoming reports suggest the data may have been used to target individuals still in the country who had supported the previous regime. Because biometrics are immutable, the people captured in that data have no way to erase their records or escape them.

Between the lines: Such data is usually gathered under the guise of providing administrative services, like access to government and social security benefits. But once it falls into the hands of those who can misuse it or target individuals based on their identity or activity, whether because cybersecurity protections were missing or in spite of them, there is little people can do to escape the consequences of appearing in those datasets. This is one of the strongest arguments for data minimization and purpose limitation. As the article notes, building national ID schemes on biometric data is not the best approach, and this is one example of how it can go horribly wrong.
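Data minimization and purpose limitation can be made concrete at the point of collection: store only the fields that a declared purpose requires, and refuse purposes that were never declared. The sketch below is a hypothetical illustration only; the purposes, field names, and the `minimize` helper are invented for this example and do not describe APPS or any real system.

```python
# Hypothetical mapping from each declared processing purpose to the only fields it may use.
ALLOWED_FIELDS = {
    "payroll": {"employee_id", "bank_account", "salary_grade"},
    "benefits_eligibility": {"employee_id", "date_of_birth", "dependents_count"},
}


def minimize(record, purpose):
    """Return a copy of `record` holding only the fields allowed for `purpose`.

    Undeclared purposes are rejected (purpose limitation); every other field,
    including biometrics, is dropped before storage (data minimization).
    """
    if purpose not in ALLOWED_FIELDS:
        raise ValueError(f"undeclared purpose: {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {k: v for k, v in record.items() if k in allowed}


# Hypothetical raw intake record containing more than payroll needs.
raw = {
    "employee_id": "E-1024",
    "bank_account": "XXXX-0000",
    "salary_grade": 7,
    "fingerprint_template": b"\x01\x02",
    "home_village": "hypothetical",
}
print(minimize(raw, "payroll"))
# {'employee_id': 'E-1024', 'bank_account': 'XXXX-0000', 'salary_grade': 7}
```

The design is deny-by-default: a field is kept only if some declared purpose explicitly lists it, so sensitive attributes such as fingerprint templates never reach the database unless someone deliberately adds them to a purpose. Minimization does not replace cybersecurity, but it shrinks what can be lost when protections fail.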

The Downside to Surveilling Your Neighbors

What happened: Apps like Citizen and Nextdoor, which claim to make neighborhoods safer by giving residents more information through surveillance infrastructure, are under intense scrutiny because they have become platforms rife with racism and vigilantism. What is different from previous iterations of neighborhood monitoring is that these apps are now often tied in with official police departments, giving police a live feed into what happens in a neighborhood. Exacerbating the problem, reported incidents can get blown out of proportion, and residents may engage in racist behavior that disproportionately targets BIPOC community members.

Why it matters: Some apps, such as Citizen, are used in over 60 cities in the US. While many apps have now cut off official police departments' direct access to their feeds, nothing prevents police from monitoring those feeds through unofficial accounts. Moreover, these platforms face the same content moderation and information-ecosystem health challenges as other social media platforms.

Between the lines: This is a technological solution that doesn't solve the real problem it claims to address. It normalizes the extreme views some residents may hold about their neighbors by bringing those views into the open and rallying support from other (hopefully isolated) pockets of the same viewpoint. By being loud and vocal on these platforms, such users can spur hate and mistrust within communities, undermining the goal of safe and harmonious living. Ultimately, safer communities might require steering away from technological fixes and focusing instead on community building IRL.

Stopping Deepfake Voices

What happened: Researchers have found that voice assistants using automatic speech recognition can be attacked with adversarial examples that drop their accuracy from 94% to 0% in some cases. The work also reveals strategies for adding noise imperceptible to the human ear that covertly makes such systems behave in unintended ways serving a malicious actor's goals. The paper also outlines research directions for defending against such attacks.

Why it matters: Given the growing number of listening devices around us, which ostensibly wake only on specific prompts, it is important to analyze and defend against such vulnerabilities when these devices control important facets of our lives, such as home heating and cooling or security systems like door locks.

Between the lines: Adversarial machine learning and machine learning security will be foundational to the responsible deployment of AI technologies, and research like this raises important questions and opens new research directions so that we can build more robust systems over time. It is great to keep deploying AI systems and incorporating them into products and services, but without due consideration of how these systems might break down, we risk opening new attack surfaces for malicious actors on top of the already vast vulnerabilities in the digital infrastructure that surrounds us.
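To make "adversarial examples" in the summary above more concrete, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), one of the simplest and best-known adversarial attacks. It is not the technique from the paper discussed in the article: the "speech model" is a hypothetical toy linear classifier, and the 1,000-sample "waveform", the weights, and `eps` are made-up values chosen so the arithmetic is easy to follow. Real attacks on speech recognizers target deep networks and typically need extra constraints to keep the added noise inaudible.

```python
import math


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def sign(v):
    return (v > 0) - (v < 0)


def predict(w, b, x):
    """Toy linear classifier standing in for a speech model: returns +1 or -1."""
    return 1 if dot(w, x) + b >= 0 else -1


def input_gradient(w, b, x, y):
    """Gradient of the logistic loss log(1 + exp(-y * (w.x + b))) w.r.t. the input x."""
    margin = y * (dot(w, x) + b)
    # sigmoid(-margin), computed stably so large margins do not overflow
    if margin >= 0:
        z = math.exp(-margin)
        s = z / (1.0 + z)
    else:
        s = 1.0 / (1.0 + math.exp(margin))
    return [-y * s * wi for wi in w]


def fgsm(w, b, x, y, eps):
    """FGSM: move each input sample by eps (0 where the gradient is 0)
    in the direction that increases the loss for label y."""
    grad = input_gradient(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


# Hypothetical toy data: 1,000 "audio samples" and matching weights.
n = 1000
w = [1.0 if i % 2 == 0 else -0.5 for i in range(n)]
b = 0.0
base = [0.5, 1.0, -0.5, -1.0]  # repeating pattern whose contribution to w.x cancels out
x = [base[i % 4] + 0.02 * sign(w[i]) for i in range(n)]

y = predict(w, b, x)                # clean prediction; the score is about +15
x_adv = fgsm(w, b, x, y, eps=0.03)  # each sample moves by only 0.03
print(y, predict(w, b, x_adv))      # prints: 1 -1
print(max(abs(a - c) for a, c in zip(x_adv, x)))  # about 0.03
```

No single sample changes by more than 0.03, small next to signal values between roughly 0.5 and 1.0 in magnitude. Yet because every nudge is aligned with the model's weights, the effects add up across all 1,000 dimensions and push the score from about +15 to about -7.5, which flips the prediction. This accumulation across many dimensions is why perturbations that are barely perceptible individually can still derail a model.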

The Stealthy iPhone Hacks That Apple Still Can't Stop

What happened: In a not-so-surprising revelation, the Bahraini government targeted high-profile individuals using zero-click attacks that exploited vulnerabilities in the iPhone's iMessage app. Dubbed "Megalodon" and "Forced Entry" by Amnesty International and Citizen Lab respectively, the attacks bypass BlastDoor, a critical protection Apple built to guard against these kinds of attacks. Zero-click attacks require no interaction from the user, which is what makes them so potent and effective.

Why it matters: Although these attacks cost millions of dollars to develop and often have short shelf lives because of security patches and updates issued by manufacturers, they still pose an immense threat to the targeted individuals. The issue is exacerbated because Apple refuses to let users disable the apps it ships natively on the iPhone. Past releases have shown that the attack surface of such apps is large, and protecting against these threats is increasingly difficult: it requires significant overhauls of the core architecture, which would demand substantial resources from Apple, something the company is unlikely to commit in the short run.

Between the lines: iMessage is not the only app facing such zero-click attacks; other apps, like WhatsApp, are also susceptible to attacks that follow similar patterns. As AI is increasingly used to discover vulnerabilities, we may be entering an era in which vulnerability detection is greatly accelerated, letting malicious actors focus their energy on developing more sophisticated exploits rather than first having to find the vulnerabilities. This places an additional burden on manufacturers to adopt more robust development practices that secure end users' devices and apps.

From our Living Dictionary:

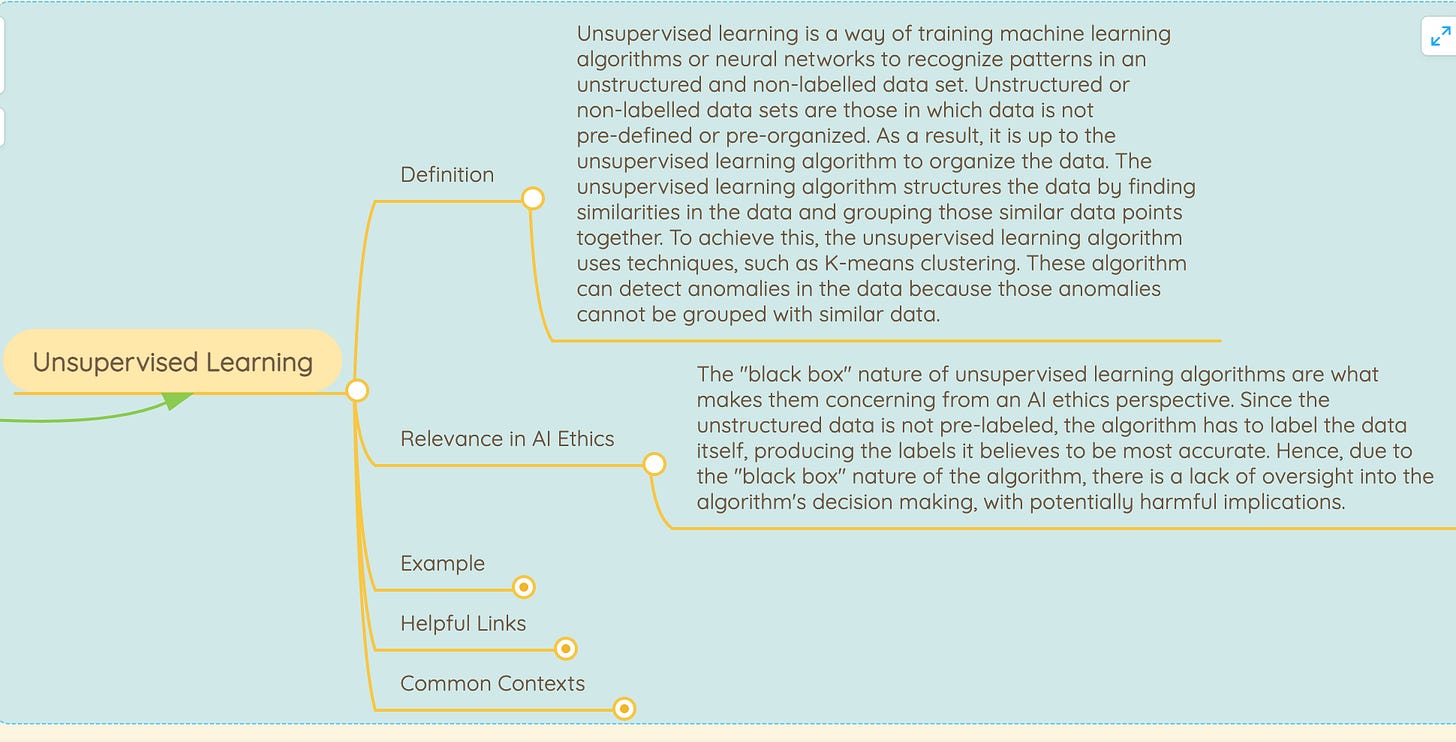

‘Unsupervised learning’

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

In case you missed it:

Changing My Mind About AI, Universal Basic Income, and the Value of Data

As artificial intelligence grows more ubiquitous, policymakers and technologists disagree about what will happen to work. The resulting labor landscape could lead to an underemployed, impoverished working class, or it could provide a higher standard of living for all, regardless of employment status. Recently, many have claimed the latter outcome will come to pass if AI-generated wealth can support a Universal Basic Income (UBI), an unconditional monetary allocation to every individual. In the article "Changing my Mind about AI, Universal Basic Income, and the Value of Data", author Vi Hart examines this claim for its practicality and pitfalls.

Through this examination, the author deconstructs the belief that AI renders humans obsolete. The author notes that this belief benefits the owners of profitable AI systems, allowing them to acquire the on-demand and data labor they need at unfairly low rates, often for less than a living wage or for free. And although UBI is a useful introduction to wealth redistribution, it does not address the underlying dynamics of this unbalanced labor market. Calling for the fair attribution of prosperity, the author proposes an extension to UBI: a model of compensation that assigns explicit value to the human labor that keeps AI systems running.

To delve deeper, read the full summary here.