AI Ethics Brief #107: Harmful "AI for Good", AI ethics going astray, judging the algorithm, and more ...

How do we understand what users really want?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

⏰ This week’s Brief is a ~37-minute read.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for as little as $5/month. If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support the democratization of AI ethics literacy and to ensure we can continue to serve our community.

*NOTE: When you hit the subscribe button, you may land on a Substack page that, if you’re not already signed in to Substack, asks you to enter your email address again. Please do so and you’ll be directed to the page where you can purchase the subscription.

This week’s overview:

✍️ What we’re thinking:

Social Context of LLMs – the BigScience Approach, Part 3: Data Governance and Representation

Connor’s review of “Rise of the machines: Prof Stuart Russell on the promises and perils of AI”

🔬 Research summaries:

The Challenge of Understanding What Users Want: Inconsistent Preferences and Engagement Optimization

Nonhuman humanitarianism: when ‘AI for good’ can be harmful

Fairness Amidst Non-IID Graph Data: A Literature Review

When AI Ethics Goes Astray: A Case Study of Autonomous Vehicles

Judging the algorithm: A case study on the risk assessment tool for gender-based violence implemented in the Basque country

Fair Interpretable Representation Learning with Correction Vectors

Rise of the machines: Prof Stuart Russell on the promises and perils of AI

📰 Article summaries:

How Microsoft and Google use AI red teams to “stress test” their system

The data-production dispositif: How to analyze power in data production for machine learning

Studying AI Explanations to Improve Healthcare for Underserved Communities

📖 Living Dictionary:

What is an example of explainability?

🌐 From elsewhere on the web:

How could we prevent the unreasonable concentration of AI power?

💡 ICYMI

Code Work: Thinking with the System in Mexico

But first, our call-to-action this week:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.

✍️ What we’re thinking:

Social Context of LLMs – the BigScience Approach, Part 3: Data Governance and Representation

Large Language Models are data-driven; i.e., the behavior of the final model (including behaviors that might run contrary to the project’s values) is highly dependent on its “training data”: the text and documents it is exposed to during its training phase. Thus, meeting the overall BigScience Workshop objectives of openness, inclusivity, and responsible research requires us to approach the ethical, legal, and governance questions raised by its data aspect with intentionality. The following blog post describes our efforts in this area.

People: This aspect of our work focuses on direct and indirect categories of stakeholders. The direct categories include the data modelers, data owners, data custodians, and individuals directly represented in the language data we work with. The indirect stakeholders include language communities and communities represented in (or conversely absent from) the data we use to train the model.

Ethical focus: We adopt an approach grounded in value pluralism for the overall project that explicitly asserts the need for different aspects of the work to work with different sets of (consistent) values. In the case of the data aspect, this means identifying values for sourcing and governance that explicitly acknowledge the specific needs of data in its broader context and its intrinsic rights and responsibilities.

Legal focus: Our legal work on the data aspect has two main focuses. First, identifying legislation that is relevant to the workshop’s use of data, with a focus on data protection laws and understanding how data choices flow down to aspects of the trained model and its applications. Second, devising a flexible data agreement to facilitate data exchanges for research that respect the identified data rights.

Governance: In order to meet all of the BigScience project’s goals of reproducibility, diversity, openness, and responsibility, we need a new data management approach between the two extrema of keeping all of the training data private or only making use of data that can be disseminated without harms. To that end, we propose an international organization for distributed data governance that enables accessible and reproducible research without compromising the data subjects’ rights.

To delve deeper, read the full article here.

Connor’s review of “Rise of the machines: Prof Stuart Russell on the promises and perils of AI”.

🔬 Research summaries:

The Challenge of Understanding What Users Want: Inconsistent Preferences and Engagement Optimization

Engagement optimization is a foundational driving force behind online platforms: to understand what users want, platforms look at what they do and choose content for them based on their past behavior. But both research and personal experience tell us that what we do is not always a reflection of what we want; we can behave myopically and browse mindlessly, behaviors that are all too familiar online. In this paper, we develop a model to investigate the consequences of engagement-optimization when users’ behaviors do not align with their underlying values.

To delve deeper, read the full summary here.

Nonhuman humanitarianism: when ‘AI for good’ can be harmful

This paper analyzes the use of chatbots in humanitarian applications to critically examine the assumptions behind “AI for good” initiatives. Through a mixed-method study, the author observes that current implementations of humanitarian chatbots are quite limited in their abilities to hold conversation and do not often deliver on stated benefits. On the other hand, they often carry risks of real harm due to the potential of data breaches, exclusion of the most marginalized, and removal of human connection. The article concludes that ‘AI for good’ initiatives often benefit tech companies and humanitarian organizations at the expense of vulnerable populations and thus rework the colonial legacies of humanitarianism while occluding the power dynamics at play.

To delve deeper, read the full summary here.

Fairness Amidst Non-IID Graph Data: A Literature Review

Fairness in machine learning (ML) commonly assumes the underlying data is independent and identically distributed (IID). On the other hand, graphs are a ubiquitous data structure to capture connections among individual units and are non-IID by nature. This survey reviews recent advances on ubiquitous non-IID graph representations and points out promising future directions.
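To make the IID point concrete, here is a minimal sketch on a made-up six-node graph. It is not taken from the survey: the node groups, predictions, and edges are hypothetical, and the two quantities computed (a statistical parity gap and an edge homophily ratio) are common textbook measures chosen for illustration only.

```python
# Toy graph (hypothetical data): each node has a sensitive group and a
# binary model prediction; edges connect individuals.
group = {0: "a", 1: "a", 2: "a", 3: "b", 4: "b", 5: "b"}
pred = {0: 1, 1: 1, 2: 0, 3: 0, 4: 0, 5: 1}
edges = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (2, 3)]


def positive_rate(g):
    """Share of nodes in group g that receive a positive prediction."""
    members = [n for n in group if group[n] == g]
    return sum(pred[n] for n in members) / len(members)


# Statistical parity gap: a group fairness measure that treats nodes as
# independent samples and ignores the edges entirely.
parity_gap = positive_rate("a") - positive_rate("b")

# Edge homophily: share of edges joining nodes of the same group. A high
# value means a node's neighbors reveal its group, so nodes are not
# independent of one another.
same_group_edges = sum(group[u] == group[v] for u, v in edges)
homophily = same_group_edges / len(edges)

print(f"parity gap: {parity_gap:.2f}, homophily: {homophily:.2f}")
```

The parity gap never looks at the edges, while the homophily ratio shows that connections carry group information. A metric built on the IID assumption would miss that.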

To delve deeper, read the full summary here.

When AI Ethics Goes Astray: A Case Study of Autonomous Vehicles

Can we abstract human ethical decision making processes into a machine readable and learnable language? Some AI researchers think so. Most notably, researchers at MIT recorded our fickle, contradictory, and error-prone moral compasses and suggested machines can follow along. This paper opposes this field of AI ethics and suggests ethical decision making should be left up to the human drivers, not the cars.

To delve deeper, read the full summary here.

Judging the algorithm: A case study on the risk assessment tool for gender-based violence implemented in the Basque country

Algorithms designed for use by the police have been introduced in courtrooms to assist the decision-making process of judges in the context of gender-based violence. This paper examines a risk assessment tool implemented in the Basque country (Spain) from a technical and legal perspective and identifies its risks, harms and limitations.

To delve deeper, read the full summary here.

Fair Interpretable Representation Learning with Correction Vectors

Neural networks are inherently opaque. While it is possible to train them to learn “fair representations”, it is still hard to make sense of their decisions on an individual basis. This is at odds with legal requirements in the EU. We propose a new technique to open the “black box” of fair neural networks.

To delve deeper, read the full summary here.

Rise of the machines: Prof Stuart Russell on the promises and perils of AI

Will the rise of the machines solve our problems or prove detrimental to our existence? A robot uprising is not really on the cards, but there are equally scary prospects taking place today.

To delve deeper, read the full summary here.

📰 Article summaries:

How Microsoft and Google use AI red teams to “stress test” their system

What happened: Borrowing a term that originated in the military in the 1960s, “red teaming” an AI system means intentionally trying to break it so that it misbehaves, outputting results that are unexpected or outside the thresholds of normal behavior. The article describes work done at Microsoft (my former employer), where dedicated teams probe systems for brittleness and then build safeguards and solutions to prevent things like model inversion and theft of personal data through reconstruction attacks. Similar efforts are underway at Google, where staff who are part of broader responsible innovation teams poke and prod AI systems to suss out vulnerabilities to manipulation before malicious actors do so in the wild.

Why it matters: As AI systems get embedded into critical infrastructure such as medical devices, self-driving vehicles, electricity grids, and financial markets, making sure that they can’t be “hacked” or coerced into “misbehavior” through adversarial inputs or other attacks that try to discern underlying private training data will become crucial if we are to safely integrate these systems into the essential services that power our modern way of life.

Between the lines: The field of machine learning security is emerging, and making meaningful contributions to securing production-grade machine learning systems currently requires a rare combination of expertise in cybersecurity and machine learning. In work done with a former colleague of mine titled “Green Lighting ML: Confidentiality, Integrity, and Availability of Machine Learning Systems in Deployment”, we highlighted the kinds of changes that need to be made in current machine learning practices to make the transition to building secure ML systems a reality.

The data-production dispositif: How to analyze power in data production for machine learning

What happened: The article highlights a problem with today’s data labeling industry: workers are kept at arm’s length, with iron-clad contracts that leave them little flexibility in how they operate and with precarious employment. The people doing the labeling often live in countries where they are financially dependent on this work and are hence afraid to raise questions or point out flaws in the tasks they are assigned. The instructions they are given often impose a US-centric perspective, for example in gender assignment to facial images (binary only) or hate speech recognition (US-centric taxonomy only). This imposes a narrow worldview on global outcomes and creates a disconnect with culture and context in other parts of the world.

Why it matters: When we think about what powers current production-grade machine learning systems, most rely on supervised learning that requires well-labeled datasets to achieve good performance. There is a vast chain of labor involved in producing this data, most of it remaining quite hidden behind contractual distance and disconnected from the engineers developing these systems. The fact that these workers are not accorded labor rights and are not seen as essential partners in the development of ML systems is a cause for concern: it creates unequal power structures and ignores valuable input, since feedback from those involved in the data labeling process could help ML systems avoid problems such as a lack of cultural and contextual sensitivity.

Between the lines: As calls for making this labor visible mount, starting in academia and permeating to industry, we should hopefully see the implementation of the recommendations from the authors of the study in the real world. In particular, starting with contracts that provide flexibility in sharing feedback would be one place that brings direct value to the firms employing such services since they can scale the gathering of cultural and contextual sensitivity through the data labeling process, something that they currently struggle with, as we have seen with content moderation on many social media platforms.

Studying AI Explanations to Improve Healthcare for Underserved Communities

What happened: In a joint study with Portal Telemedicina, a digital healthcare organization in Brazil, researchers from Partnership on AI (Montreal AI Ethics Institute is a Partner in PAI) set out to improve understanding of what is required to make XAI useful. In particular, the multistakeholder study explored how a collaborative approach that considers the needs of all stakeholders leads to ML systems that help solve tough challenges faced by the communities where these systems are deployed. The study examined EKGs for quality issues that needed to be flagged earlier in the patient evaluation protocol, to prevent repeat visits in cases where the clinician found the EKG to be of low quality. By working with the technicians who administer EKGs to evaluate EKG quality through saliency maps (heat maps that show where there might be issues in the EKG), the study demonstrated a model for the effective use of XAI in practice.
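For readers unfamiliar with saliency maps, the idea can be sketched in a few lines. This is not the method used in the study. It is a minimal occlusion-based sketch on a synthetic one-dimensional trace, and the `quality_score` function is a hypothetical stand-in for a trained quality model (here it simply penalizes abrupt jumps in the signal).

```python
import numpy as np


def quality_score(signal):
    """Hypothetical quality model: a larger abrupt jump means a lower score."""
    return -float(np.abs(np.diff(signal)).max())


def occlusion_saliency(signal, score_fn, window=5):
    """Occlusion saliency: how much the score moves when each window of the
    signal is replaced by the signal's median value."""
    baseline = score_fn(signal)
    fill = np.median(signal)
    saliency = np.zeros(len(signal))
    for start in range(0, len(signal), window):
        occluded = signal.copy()
        occluded[start:start + window] = fill
        saliency[start:start + window] = abs(score_fn(occluded) - baseline)
    return saliency


# Synthetic trace (not real EKG data): a clean sine wave with an artifact
# injected at samples 40 to 44.
t = np.linspace(0, 1, 100)
trace = np.sin(2 * np.pi * 5 * t)
trace[40:45] += 3.0

saliency = occlusion_saliency(trace, quality_score)
worst = int(np.argmax(saliency))
print(f"most salient sample: {worst}")
```

Plotted as a heat map over the trace, the saliency values would highlight the injected artifact, which is the kind of cue a technician could act on before the patient leaves.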

Why it matters: Most work in XAI centers on the needs of ML practitioners, often with a view to help them debug and improve the performance of the systems. The reframing and emphasis provided by this research alongside a practical case study strengthen the need for us to consider the requirements of end-users who are an integral part of the fielding of an ML system. In this case, empowering technicians early in the lifecycle through XAI helps to prevent expensive revisits from patients, for some of whom such a revisit might not be possible since they live in remote regions and might not have enough funds to travel again to undergo an EKG.

Between the lines: As Responsible AI methods move from principles to practice, we will need further such studies that assess the real-world usefulness of proposed methods and align them with the needs of all stakeholders, be those end-users or ultimately the community members for whom the systems are being built. A deeper understanding of the on-the-ground needs of practitioners can be unearthed by co-developing XAI methods with them, and then testing and iterating on those methods until they concretely meet practitioners’ needs. The model of identifying relevant stakeholders, engaging with them, and then understanding the purpose of the explanations in XAI is one that screams for application in other mission-critical domains outside healthcare as well.

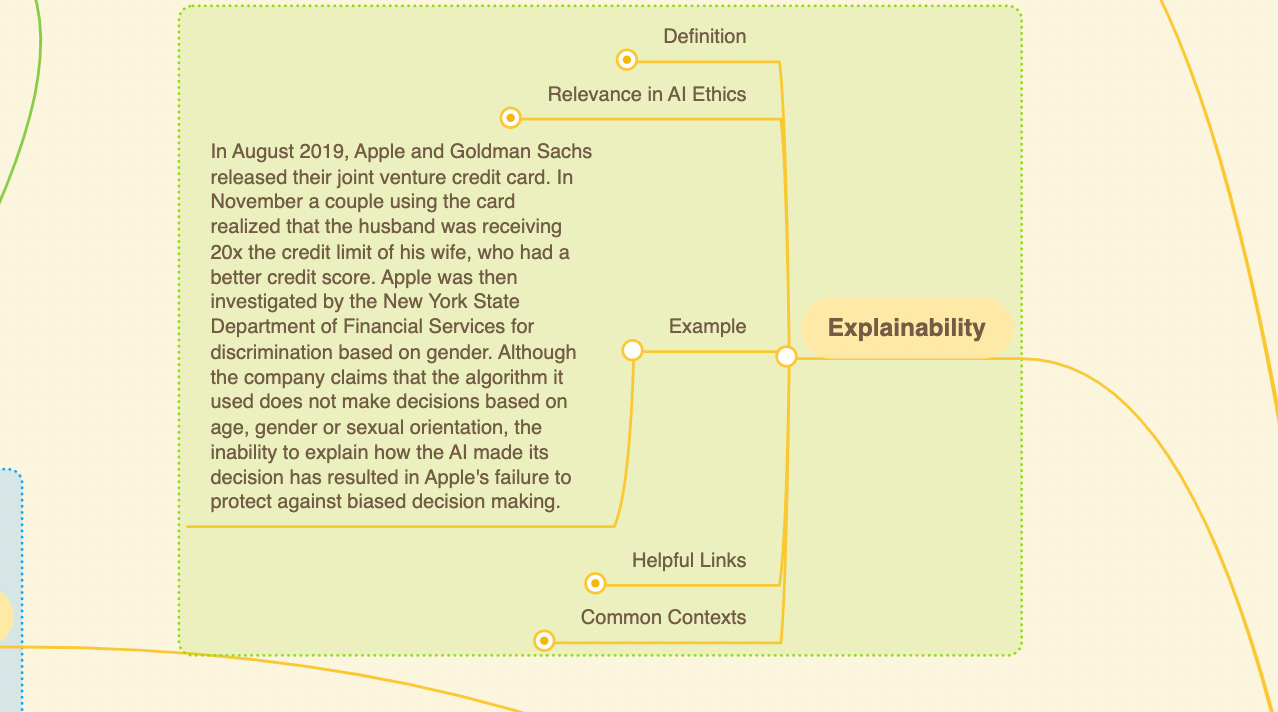

📖 From our Living Dictionary:

What is an example of explainability?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

How could we prevent the unreasonable concentration of AI power?

Our founder, Abhishek Gupta, contributed to the 60 Leaders in AI publication that brings together diverse views from global thought leaders in artificial intelligence.

The unreasonable concentration of power in AI comes from three components: compute, data, and talent.

In terms of compute, the more complex AI systems we choose to build, the more compute infrastructure they require to be successful. This is evidenced by the rising costs of training very large language models like Turing, GPT-3, and others, which frequently reach millions of dollars. Training at this scale is inaccessible to all but the most well-funded industry and academic labs. This creates homogenization in the ideas that are experimented with and a severe concentration of power in the hands of a small set of people who determine not only the research and development agenda but also the types of products and services that concretely shape many important facets of our lives.

💡 In case you missed it:

Code Work: Thinking with the System in Mexico

Hackathons are now part of a global phenomenon, where talented youth participate in a gruelling technical innovation marathon aimed at solving the world’s most pressing problems. In his ethnographic study of hackathons in Mexico, Hector Beltran illustrates how code work offers youth a set of technical tools for social mobility as well as a way of thinking and working within the country’s larger political-economic system.

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.