AI Ethics Brief #84: Managing humans and robots together, queer as the future of digital assistants, public risk and trust perceptions of AI, and more ...

Robots Won’t Close the Warehouse Worker Gap Anytime Soon

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

⏰ This week’s Brief is a ~16-minute read.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for as little as $5/month. If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support the democratization of AI ethics literacy and to ensure we can continue to serve our community.


This week’s overview:

✍️ What we’re thinking:

Managing Human and Robots Together – Can That Be a Leadership Dilemma?

🔬 Research summaries:

Risk and Trust Perceptions of the Public of Artificial Intelligence Applications

Foundations for the future: institution building for the purpose of artificial intelligence governance

📰 Article summaries:

The Future of Digital Assistants is Queer

Robots Won’t Close the Warehouse Worker Gap Anytime Soon

How Facebook and Google fund global misinformation

📅 Event

AI Ethics: Image+Bias. Filmic Explorations

📖 Living Dictionary:

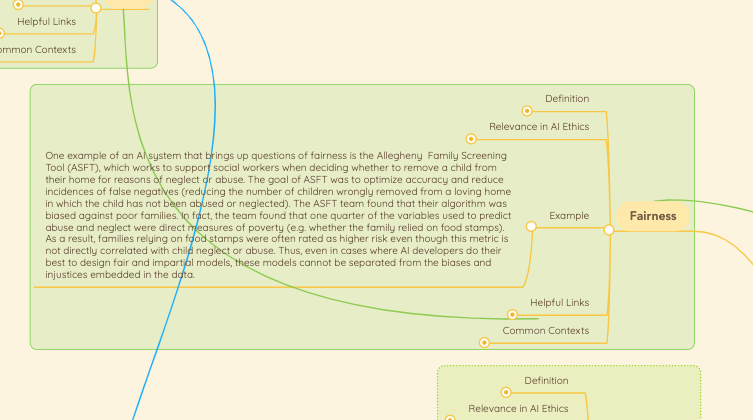

Fairness

🌐 From elsewhere on the web:

Watch the keynote and roundtable from the Montreal AI Ethics Institute staff at the International Conference on AI for People: Towards Sustainable AI

💡 ICYMI

Artificial Intelligence and the Privacy Paradox of Opportunity, Big Data and The Digital Universe

But first, our call-to-action this week:

AI Ethics: Image+Bias. Filmic Explorations

This workshop on AI Ethics is being hosted by the Montreal AI Ethics Institute (MAIEI) in partnership with Goethe-Institut to make ideas about the societal impacts of AI more accessible, with the goal of equipping and empowering us all to reshape this powerful technology so that we can achieve a fairer, more just, and well-functioning society.

The workshop will be centred on the films screened by Goethe-Institut during the week prior to the workshop along with some other materials provided by MAIEI as suggested readings and viewings. Drawing from those as inspiration, this will be a collaborative workshop bringing together people from all walks of life to discuss the subjects of human-machine interaction, machine-mediated conversations, behaviour shaping and nudging, future of work, and alternative futures in an AI-infused world.

Participants will walk away with a more nuanced understanding of the impacts that this technology has on our lives and of how we can engage our own communities, colleagues, and families in more critically informed discussions to move towards a positive future.

✍️ What we’re thinking:

Managing Human and Robots Together – Can That Be a Leadership Dilemma?

Have we all written poems at some point in our lives? Some of us may have tried, and some may not. Poetry is mostly considered a gift. Now, can we expect robots to be poets? Well, that is quite possible now.

On 26 November 2021, Ai-Da (the world’s first ultra-realistic humanoid robot artist) gave a live demonstration of her poetry [1]. The event took place at the University of Oxford’s famous Ashmolean Museum, as part of an exhibition marking the 700th anniversary of the death of the great Italian poet Dante. Ai-Da produced poems there as an instant response to Dante’s epic “Divine Comedy”: she consumed the work in its entirety, used her algorithms to analyze Dante’s speech patterns, and then created her own work by drawing on her own collection of words.

To delve deeper, read the full article here.

🔬 Research summaries:

Risk and Trust Perceptions of the Public of Artificial Intelligence Applications

Does the general public trust AI more than those studying a higher education programme in computer science? The report aims to answer this very question, emphasizing the importance of civic competence in AI.

To delve deeper, read the full summary here.

Foundations for the future: institution building for the purpose of artificial intelligence governance

To implement governance efforts for artificial intelligence (AI), new institutions need to be established, both at a national and an international level. This paper outlines a scheme of such institutions and conducts an in-depth investigation of three key components of any future AI governance institution, exploring their benefits and associated drawbacks. Thereafter, the paper highlights significant aspects of various institutional roles, specifically around questions of institutional purpose, and frames what these could look like in practice by placing these debates in a European context and proposing different iterations of a European AI Agency. Finally, conclusions and future research directions are proposed.

To delve deeper, read the full summary here.

📰 Article summaries:

The Future of Digital Assistants is Queer

What happened: The article builds upon the case laid out in the UN report “I’d blush if I could”, which highlighted how many smart voice assistants have a feminized voice and are made to take on archaic, stereotypically feminine characteristics of obeisance, a result of the lack of diversity and other problems in the domain of technology. In particular, it showcases how the future for these assistants might be queer, not just in the actual timbre of the voice, but more so in what being outside of traditional binaries means for whether such an assistant should mimic humans in the first place.

Why it matters: Not only does such an approach eschew the problematic formulation of digital assistants today, it also enriches the discussion by providing alternate formulations for what digital assistants can look like. It helps us imagine an alternate future. One of the examples that the article mentions is an exploration of multiple personalities that more accurately reflect the many versions of femininity, and, even more to the point, that make clear that such bots are not human. Eno, the bot from Capital One, stands out: when asked about its gender, it talks about binary as 1s and 0s rather than gender.

Between the lines: California in 2019 created the first legal precedent asking bots to identify themselves, something that is increasingly important now that capabilities like Duplex from Google can make appointments on our behalf while sounding human. While the legal precedent is far from perfect, it lays down an imperative for us to start thinking differently about such technologies, especially as they inch more and more towards mimicking human assistants. It will also increasingly shape the interactions between humans and machines.

Robots Won’t Close the Warehouse Worker Gap Anytime Soon

What happened: Anytime there is a conversation about the labor impacts of AI, the first thing that we hear about is the impact on the factory floor. This article dives deeper into how that is actually manifesting and what it means for the future of work. Most of the robots deployed on the factory floor today perform tasks that require limited intelligence, and they still rely heavily on human co-workers to complete jobs, playing only a small part by taking over some tasks.

Why it matters: As we look for more nuance on the direct impacts of automation on factory floors and elsewhere, it helps to understand which industries are deploying automation, in what manner, and to what extent. For example, when Amazon puts out numbers saying that it is hiring 150,000 more seasonal workers to meet the holiday demand, it helps to understand how those workers and robots co-work in the warehouse environment and, given where robotics capabilities are headed, what we can reasonably expect to change in the future.

Between the lines: As many of the robotics companies that supply places like FedEx and Amazon mention in the article, there are a lot of unsolved and unanticipated edge cases which we can’t design for just yet. What that means is that, at least in the near future, we will continue to have both humans and machines working side by side, or at least in isolated environments, given the current safety concerns where machines are housed in separate cages to prevent accidents. The takeaway for me from this article is that as we think about upskilling and redeploying human labor, keeping a keen eye on the edge cases that are still unsolved and speaking with technical experts to understand the timeline for solving them will be critical to better prepare for labor transitions as the need for those arises.

How Facebook and Google fund global misinformation

What happened: Algorithmic amplification of problematic information online is nothing new to the readers of this newsletter. We’ve covered it time and again. But this article sheds new light on the machinery that feeds this information ecosystem: it highlights some of the funding mechanisms that enable malicious actors to continue their activities. In particular, the introduction of Instant Articles by Facebook brought additional, financial incentives into the fray, which directed the energies of otherwise undirected malicious actors into the political arena, given the high engagement rates of political content on the website. This meant that there were not only politically motivated malicious actors, but also those who aren’t really connected with any political objectives and are seeking to eke out a profit by milking the content dissemination machinery that Facebook and Google proffer.

Why it matters: While addressing the algorithmic basis of how information spreads online is one way of tackling the proliferation of problematic information, we also need to focus on the underlying business mechanisms. This is especially true, as the article highlights, when new tools like Instant Articles propel clickbait and non-mainstream media outfits to outcompete and overcome the platform when it comes to the content that is viewed and engaged with by users. The fact that the information ecosystem is dominated by a few giants, and that what happens on one platform (say, YouTube) has a dramatic impact on the content that shows up on and dominates another platform (videos trending on Facebook), tells us that we also need to examine what such monopolization means for the health of the information ecosystem.

Between the lines: Adding financial incentives to an already charged ecosystem, where adversaries and malicious actors have many motivations to pollute the information ecosystem, makes a bad state of affairs worse. Three things are going to be essential to curb the spread of misinformation online and to reduce the very real harms inflicted on people as a result, as seen in Myanmar amongst other places: greater transparency on who is paid out, and how much, from monetization mechanisms; access for external auditors and researchers (who have had their access to conduct independent research on Facebook taken away); and demonstrated action on the recommendations provided by civil society organizations and individual watchdogs.

📖 From our Living Dictionary:

“Fairness”

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

Watch the keynote and roundtable from the Montreal AI Ethics Institute staff at the International Conference on AI for People: Towards Sustainable AI

At this conference hosted by AI for People, our founder delivered a Keynote Speech titled “Turning the Gears: Organizational and Technical Challenges in the operationalization of AI Ethics” and our staff also participated in a roundtable on AI ethics discussing common challenges and opportunities in the space.

💡 In case you missed it:

Artificial Intelligence and the Privacy Paradox of Opportunity, Big Data and The Digital Universe

Thanks to the pandemic, increasing internet connectivity, and companies sharing our data more efficiently, even our most private data…isn’t. This paper explores data privacy in an AI-enabled world. Data awareness has increased since 2019, but the fear remains that Smith’s findings will stay too relevant for too long.

To delve deeper, read the full summary here.
