AI Ethics Brief #102: LLMs and ethics, AI bias in healthcare, speciesist bias in AI, and more ...

How can the lens of Ubuntu enrich our thinking on AI ethics?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

⏰ This week’s Brief is a ~32-minute read.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for as little as $5/month. If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support the democratization of AI ethics literacy and to ensure we can continue to serve our community.

*NOTE: When you hit the subscribe button, you may end up on the Substack page, where, if you’re not already signed in to Substack, you’ll be asked to enter your email address again. Please do so and you’ll be directed to the page where you can purchase the subscription.

As we head into the next “century” of AI Ethics Briefs (we published edition #100 last week), please share your feedback on how we can keep bringing you value. Thanks!

This week’s overview:

🔬 Research summaries:

Prediction Sensitivity: Continual Audit of Counterfactual Fairness in Deployed Classifiers

What lies behind AGI: ethical concerns related to LLMs

AI Bias in Healthcare: Using ImpactPro as a Case Study for Healthcare Practitioners’ Duties to Engage in Anti-Bias Measures

NIST Special Publication 1270: Towards a Standard for Identifying and Managing Bias in Artificial Intelligence

Speciesist bias in AI – How AI applications perpetuate discrimination and unfair outcomes against animals

The Ethics of Artificial Intelligence through the Lens of Ubuntu

Resistance and refusal to algorithmic harms: Varieties of ‘knowledge projects’

📰 Article summaries:

Widely Available AI Could Have Deadly Consequences

Accused of Cheating by an Algorithm, and a Professor She Had Never Met

📖 Living Dictionary:

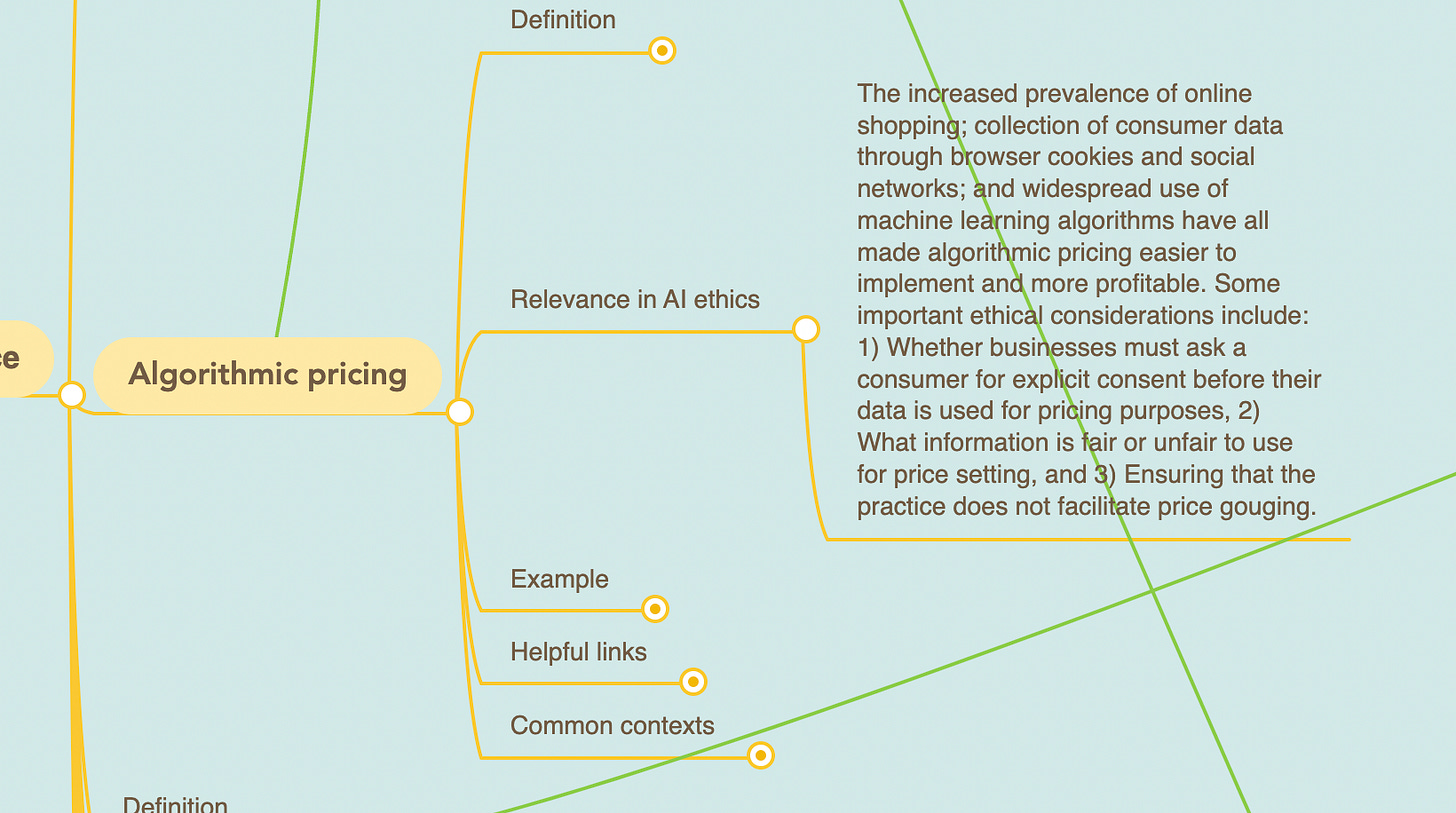

What is the relevance of algorithmic pricing to AI ethics?

💡 ICYMI

The Logic of Strategic Assets: From Oil to AI

But first, our call-to-action this week:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.

🔬 Research summaries:

Prediction Sensitivity: Continual Audit of Counterfactual Fairness in Deployed Classifiers

In a world where AI-based systems dominate many parts of our daily lives, auditing these systems for fairness is a growing concern. Although group fairness metrics are used to audit these systems, they do not always uncover discrimination against individuals, and they are difficult to apply once a system has been deployed. Counterfactual fairness describes an individual notion of fairness, but it is particularly difficult to evaluate after deployment. In this paper, we present prediction sensitivity, an approach for auditing counterfactual fairness while the model is deployed and making predictions.

To delve deeper, read the full summary here.
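To make the idea of auditing fairness at prediction time a little more concrete, here is a minimal Python sketch. It is NOT the paper’s prediction sensitivity metric. It assumes a hypothetical logistic-regression model whose inputs include a binary protected attribute at a known index, and simply flips that attribute for each incoming input to measure how much the predicted probability changes. Predictions whose gap exceeds an illustrative threshold (the 0.05 default is an arbitrary assumption) are flagged for review.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Predicted probability from a hypothetical logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def counterfactual_gap(weights, bias, x, protected_idx):
    """Absolute change in predicted probability when the binary
    protected attribute at protected_idx is flipped (0 <-> 1)."""
    if x[protected_idx] not in (0, 1):
        raise ValueError("protected attribute must be 0 or 1")
    x_cf = list(x)
    x_cf[protected_idx] = 1 - x_cf[protected_idx]
    return abs(predict(weights, bias, x) - predict(weights, bias, x_cf))


def audit_stream(weights, bias, stream, protected_idx, threshold=0.05):
    """Check each input as it arrives (as a deployed model would see it)
    and return (index, gap) pairs for predictions whose gap exceeds threshold."""
    flagged = []
    for i, x in enumerate(stream):
        gap = counterfactual_gap(weights, bias, x, protected_idx)
        if gap > threshold:
            flagged.append((i, gap))
    return flagged
```

A plain flip like this ignores how the protected attribute may causally influence other input features, which is exactly why counterfactual fairness is hard to audit and why more careful, purpose-built methods such as the one in this paper are needed.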

What lies behind AGI: ethical concerns related to LLMs

Through the lens of moral philosophy, this paper raises questions about AI systems’ capabilities and goals, the treatment of the humans hidden behind them, and the risk of perpetuating a monoculture through the English language.

To delve deeper, read the full summary here.

AI Bias in Healthcare: Using ImpactPro as a Case Study for Healthcare Practitioners’ Duties to Engage in Anti-Bias Measures

The paper discusses the rise of implicit biases in healthcare datasets and algorithms and suggests potential steps to mitigate them. The author introduces the case study of ImpactPro to illustrate how issues such as using the wrong measures to evaluate data, outdated practices in healthcare, embedded beliefs and prejudices, and the failure to recognize existing biases lead to bias in datasets and, in turn, in algorithms. The article concludes that bias in healthcare is not an isolated AI issue but a broader problem in healthcare that must be solved to rebuild trust in the system.

To delve deeper, read the full summary here.

NIST Special Publication 1270: Towards a Standard for Identifying and Managing Bias in Artificial Intelligence

While Artificial Intelligence (AI) may offer several benefits, biases in AI can lead to harmful impacts, regardless of intent. Why does this matter? Harmful outcomes make it harder to cultivate trust in AI. Current attempts to address the harmful effects of AI bias remain focused on computational factors, but currently overlooked systemic, human, and societal factors are also significant sources of AI bias. We need to take all forms of bias into account when building trust in AI.

To delve deeper, read the full summary here.

Speciesist bias in AI – How AI applications perpetuate discrimination and unfair outcomes against animals

Much effort goes into reducing biases in AI. Up to now, however, these efforts have been anthropocentric and have excluded animals, despite the immense influence AI systems can have on increasing or reducing the violence inflicted on them, especially on farmed animals. A new paper describes and investigates the “speciesist bias” in many AI applications and stresses the importance of widening the scope of AI fairness frameworks.

To delve deeper, read the full summary here.

The Ethics of Artificial Intelligence through the Lens of Ubuntu

The near-uniform Western (meaning European and North American) influence on the design of AI algorithms inherently encodes a Western understanding of the world into them. This homogenization has led to algorithmic design that is philosophically and economically at odds with the cultural philosophies and interests of the Global South. Van Norren attempts to show these issues, and possible solutions, by analyzing existing global AI design guidelines through the lens of Ubuntu.

To delve deeper, read the full summary here.

Resistance and refusal to algorithmic harms: Varieties of ‘knowledge projects’

When it comes to issues in AI, there are two categories of response: resistance and refusal. Resistance comes from Big Tech, but it proves futile without the refusal responses produced by external actors. Above all, any response requires a holistic approach to be successful.

To delve deeper, read the full summary here.

📰 Article summaries:

Widely Available AI Could Have Deadly Consequences

What happened: AI is a general-purpose technology, and a simple flip of the switch can lead it to optimize for negative outcomes just as easily as for positive ones. The researchers behind a system called MegaSyn altered its optimization objective from generating highly specific, low-toxicity compounds to generating highly toxic ones, some as lethal as VX, sending shockwaves across the “AI for drug discovery” community. The system generated over 40,000 compounds that could be used as chemical weapons.

Why it matters: Given that most of the underlying data, algorithms, and capabilities (from a software infrastructure and tooling perspective) remain in the public domain, it is not far-fetched to imagine that motivated malicious actors could replicate such results. There has long been a spirited debate on whether such results should be publicized. The researchers in this case thought it best to give the community concrete examples of how easy it is to cause harm by repurposing a system with a “simple flip of the switch” in its objective. Similar debates about harm arose when OpenAI published the GPT family of models in varying sizes.

Between the lines: The ease with which a prosocial system could be repurposed for nefarious ends shows the importance of thorough analysis and “threat modeling” before researchers embark on a project, especially one that can have such direct consequences. As the researchers highlighted, the original authors of the system had not considered the generation of toxic compounds nearly as much as the “more traditional” concerns in Responsible AI, such as privacy. Our take is that involving domain experts can help unearth these kinds of harms, beyond the usual repertoire of considerations raised in the field of Responsible AI.

Accused of Cheating by an Algorithm, and a Professor She Had Never Met

What happened: A teenager in Florida received a zero on her biology exam after being accused of cheating because an automated facial detection tool from Amazon called Rekognition observed her frequently looking down, away from the screen, before answering questions. Although the software recorded both the girl and her screen while she took the test, the decision to accuse her of cheating was ultimately a human judgment call.

Why it matters: Keeping test takers honest has become a multimillion-dollar industry, especially since the pandemic, but there has been frustration over the software’s invasiveness, glitches, false allegations of cheating, and failure to work equally well for all types of people. Honorlock, the start-up using Rekognition, evaded responsibility by stating that it was not its role to advise schools on how to deal with behavior flagged by its product.

Between the lines: It likely comes as no surprise that Rekognition has been accused of bias. A series of studies found that gender classification software, including Rekognition, performed worst on darker-skinned women. This story raises the question: how will facial recognition technology (FRT) affect students’ ability to learn if they are consumed by the fear of being wrongfully accused of cheating by an algorithm? The additional stress this technology may cause is likely to add to the rising levels of anxiety among students.

📖 From our Living Dictionary:

What is the relevance of algorithmic pricing to AI ethics?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

💡 In case you missed it:

The Logic of Strategic Assets: From Oil to AI

Does AI qualify as a strategic good? What does a strategic good even look like? The paper aims to provide a framework for answering both of these questions. One thing’s for sure: AI is not as strategic as you may think.

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.