AI Ethics Brief #96: Helping people understand AI, embedded ethics, problematic machine behaviours, and more ...

How far are you willing to go to get back your personal data from Amazon?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

⏰ This week’s Brief is a ~28-minute read.

This week’s overview:

✍️ What we’re thinking:

How to Help People Understand AI

🔬 Research summaries:

Embedded ethics: a proposal for integrating ethics into the development of medical AI

Problematic Machine Behavior: A Systematic Literature Review of Algorithm Audits

Regulatory Instruments for Fair Personalized Pricing

South Korea as a Fourth Industrial Revolution Middle Power?

📰 Article summaries:

Chinese internet chatter on the Ukraine war is a warped lens

I Want You Back: Getting My Personal Data From Amazon Was Weeks of Confusion and Tedium

How Native Americans Are Trying to Debug A.I.’s Biases

📖 Living Dictionary:

What is the relevance of the Internet of Things to AI Ethics?

🌐 From elsewhere on the web:

Responsible Artificial Intelligence (Politico)

Beyond the North-South Fork on the Road to AI Governance

💡 ICYMI

The Paradox of AI Ethics in Warfare

But first, our call-to-action this week:

What differences do you see in the way AI regulations and governance are taking shape in the Global North compared to the Global South? Do you have any useful resources that you would like us to share with the community?

✍️ What we’re thinking:

How to Help People Understand AI

Will AI come alive and take over the world? This is not a scenario that AI experts worry about. However, I write about science and technology for kids, and the movies and TV shows they watch are packed with storylines about rogue robots and intelligent machines turned evil. AI is never a data-crunching algorithm that folds proteins or turns speech into text. It’s either a hero or a villain. It is C-3PO and R2-D2 in Star Wars. It is the Terminator and the mechanical spiders controlling the Matrix.

Unfortunately, kids aren’t the only ones who misunderstand AI. Most adults in the developed world probably realize that AI technology lets computers do smart things. But that may be all they know. A 2017 study by Pegasystems asked respondents if they had ever interacted with AI technology. Only 34% said “yes,” while the others said “no” or “not sure.” In fact, based on the devices these respondents reported using, 84% of them had interacted with AI. This type of vague familiarity with AI can lead to some serious misunderstandings about what this technology is and what it can do – now or in the future.

To delve deeper, read the full article here.

Connor Wright, Partnerships Manager at the Montreal AI Ethics Institute, shares his review of "Algorithmic Domination in the Gig Economy".

🔬 Research summaries:

Embedded ethics: a proposal for integrating ethics into the development of medical AI

High-level ethical frameworks serve an important purpose, but it is not clear how such frameworks influence the technical development of AI systems. There is a skills gap between technical AI development and the implementation of high-level frameworks. This paper explores an embedded form of AI development in the healthcare domain, where ethicists and developers work in lockstep to address ethical issues and implement technical solutions.

To delve deeper, read the full summary here.

Problematic Machine Behavior: A Systematic Literature Review of Algorithm Audits

As algorithmic systems become more pervasive in day-to-day life, audits of AI algorithms have become an important tool for researchers, journalists, and activists to answer questions about their disparate impacts and potentially problematic behaviors. Audits have been used to prove discrimination in criminal justice algorithms, racial discrimination in Google search, race and gender discrimination in facial algorithms, and many other impacts. This paper presents a systematic literature review of external audits of public-facing algorithms and contextualizes their findings in a high-level taxonomy of problematic behaviors. It is a useful read for learning what problems audits have found so far and for getting recommendations on future audit projects.

To delve deeper, read the full summary here.

Regulatory Instruments for Fair Personalized Pricing

How can we regulate personalized pricing to ensure that consumers benefit? In this work, we propose two simple but effective regulatory policies under idealized conditions. Our findings and insights shed light on regulatory policy design for the increasingly monopolized businesses of the digital era.

To delve deeper, read the full summary here.

South Korea as a Fourth Industrial Revolution Middle Power?

South Korea identifies itself as a middle power, and this paper assesses whether the label fits within the context of the fourth industrial revolution. While the country has the qualities to claim the title, doing so comes with its own baggage.

To delve deeper, read the full summary here.

📰 Article summaries:

Chinese internet chatter on the Ukraine war is a warped lens

What happened: In the absence of official polling on public sentiment in China about the war in Ukraine, social media might serve as a gauge of how people feel. The article mentions Sima Nan, a popular account on Weibo (a microblogging platform in China), who has no state affiliation but has been leveraging his large follower base to drive traffic and inflame pro-war, anti-US, and anti-NATO sentiments. The algorithmic underpinnings of platforms that optimize for views and clicks only aggravate the situation. The article also draws attention to “stan” culture, in which ardent fans vociferously show their support for the accounts that they follow. This translates into emotional and heated exchanges on the platform, further exacerbating the situation.

Why it matters: Yet, as the article cautions, relying on social media to understand national sentiment is rife with problems. Both top-down censorship and organic, bottom-up opportunism, disguised as nationalism and patriotism to align with the Party agenda, distort the picture of what people really think about the war. The official Party stance favors a peaceful resolution, while social media exchanges might make it appear that the opposite is true: that most people support the war effort and are aligned with Putin’s interests.

Between the lines: Homogenizing people’s opinions through a singular lens and gleaning insights from the loudest voices in the (social media) room is, unsurprisingly, a problematic approach. Given what is prized and rewarded on social media, it is also unsurprising that some enterprising folks have taken to it to eke out profits by inflaming extreme views. In doing so, they pollute the information ecosystem with confused signals about what the Chinese people really think about the war, and they drive engagement and discourse in unwanted directions by leveraging “soft power” bolstered by large “stan” bases.

I Want You Back: Getting My Personal Data From Amazon Was Weeks of Confusion and Tedium

What happened: A privacy-conscious individual’s battle of endurance against Amazon’s pervasive data practices paints a grim picture for anyone seeking to extricate their personal information from the massive apparatus that operates around us at all times, sucking up our most intimate details. The article’s author documents jumping through multiple hoops just to get a sense of what personal information the company has hoarded about them. After finally making it through an arduous process that involves dodging many dark design patterns, page navigations, and email confirmations, they discover that they are offered 70+ zip files, each of which they have to download and manually unpack to find out what is inside. This is certainly not an accessible procedure for non-programmers, who would be unable to automate the process of collating the files into a picture of what information Amazon stores about them.

Why it matters: Data collection is significantly asymmetric: Amazon can easily collect data on you (and most of the time does so without your informed consent), but you have to jump through Kafkaesque hoops just to find out what it has on you. The company clearly employs many dark design patterns, such as multiple page navigations with prompts meant to discourage people from following through on requesting their information. The delays in returning your data might not be deliberate, given that prior coverage has shown that Amazon doesn’t actually know where your data is or what data it has on you.

Between the lines: This is a frightening insight into the data and privacy costs of the convenience that Amazon offers when you place orders, or even just browse, on any of its vast set of online assets. The hoops that you have to jump through to get your information are a real test of endurance. What is shocking (or perhaps not so!) is how nonchalant the company is about how long it will take to get back to you, given that it otherwise prides itself on speed. Moreover, in its disclosures under the CCPA, Amazon states that the median time to reply to a data request is 1.5 days, which doesn’t square with the notices you are shown as you navigate the process: they frequently mention a timescale of months. (It took the article’s author 19 days to get their data back.) While one can resort to tactics such as browsing incognito, shipping to a PO box, and using burner phones, these steps are impractical in modern life and mostly inaccessible or unintelligible to the less tech-savvy, placing an undue burden on consumers rather than on the company.
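
For readers wondering what automating that unpacking step might look like, here is a minimal sketch in Python. It assumes the 70+ zip files have already been downloaded by hand into a single folder. The function name `unpack_all` and the folder names are our own hypothetical choices; they are not taken from the article or from anything Amazon provides.

```python
import zipfile
from pathlib import Path


def unpack_all(download_dir, output_dir):
    """Extract every .zip in download_dir into its own subfolder of output_dir.

    Returns a dict mapping each archive's file name to the sorted list of
    files it contained, giving a quick inventory of the whole export.
    Both directory arguments are hypothetical locations chosen by the user.
    """
    download_dir = Path(download_dir)
    output_dir = Path(output_dir)
    inventory = {}
    for archive in sorted(download_dir.glob("*.zip")):
        # One subfolder per archive, named after the zip file without ".zip".
        target = output_dir / archive.stem
        target.mkdir(parents=True, exist_ok=True)
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(target)
            # Record file entries only; skip directory entries.
            inventory[archive.name] = sorted(
                info.filename for info in zf.infolist() if not info.is_dir()
            )
    return inventory
```

Calling `unpack_all("amazon_downloads", "amazon_unpacked")` would unpack each archive into its own subfolder and return a listing of what every archive held. Even a script this short is beyond most consumers, which is the accessibility problem the summary describes.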

How Native Americans Are Trying to Debug A.I.’s Biases

What happened: AI systems perform poorly on concepts outside the “majority” when those concepts are not adequately represented in the data. The article documents a researcher-led effort to gather Indigenous knowledge through an Indigenous Knowledge Graph, created by a start-up called Intelligent Voices of Wisdom that seeks to preserve culture through AI and to combat biases in existing AI systems. A powerful example quoted in the article refers to a workshop where high-school students came together to provide metadata tags for images depicting ceremonies and places of specific significance to their communities, such as sites of genocide, which had been tagged as generic plants and schools, respectively. The example showcases how limited AI systems can be in capturing the cultural significance of images.

Why it matters: While such efforts are labor-intensive and expensive, they are essential for plugging gaps in the datasets used to train AI systems. A “cultural engine”, as the researchers call it, will be critical for injecting contextual relevance into datasets; without it, we risk perpetuating biases. Another example quoted in the article is an effort to gather Indigenous recipes and convert them into graph databases, which are then used to train an interactive AI system offered to people through Google Assistant. This makes the knowledge accessible to more than just those who have the technical background to process such codified information.

Between the lines: In a powerful quote, one of the researchers says, “Machines cannot replace humans. They can only be there with us around the campfire and inform us.” Indeed! As we bring AI systems into various aspects of our lives, we can’t forget the agency that we should and must exercise over the influence they have on us. It starts with the data that is fed into the systems but goes beyond that, to reimagining and reshaping the societal structures around us so that they put humans at the center rather than on the periphery of machines.

📖 From our Living Dictionary:

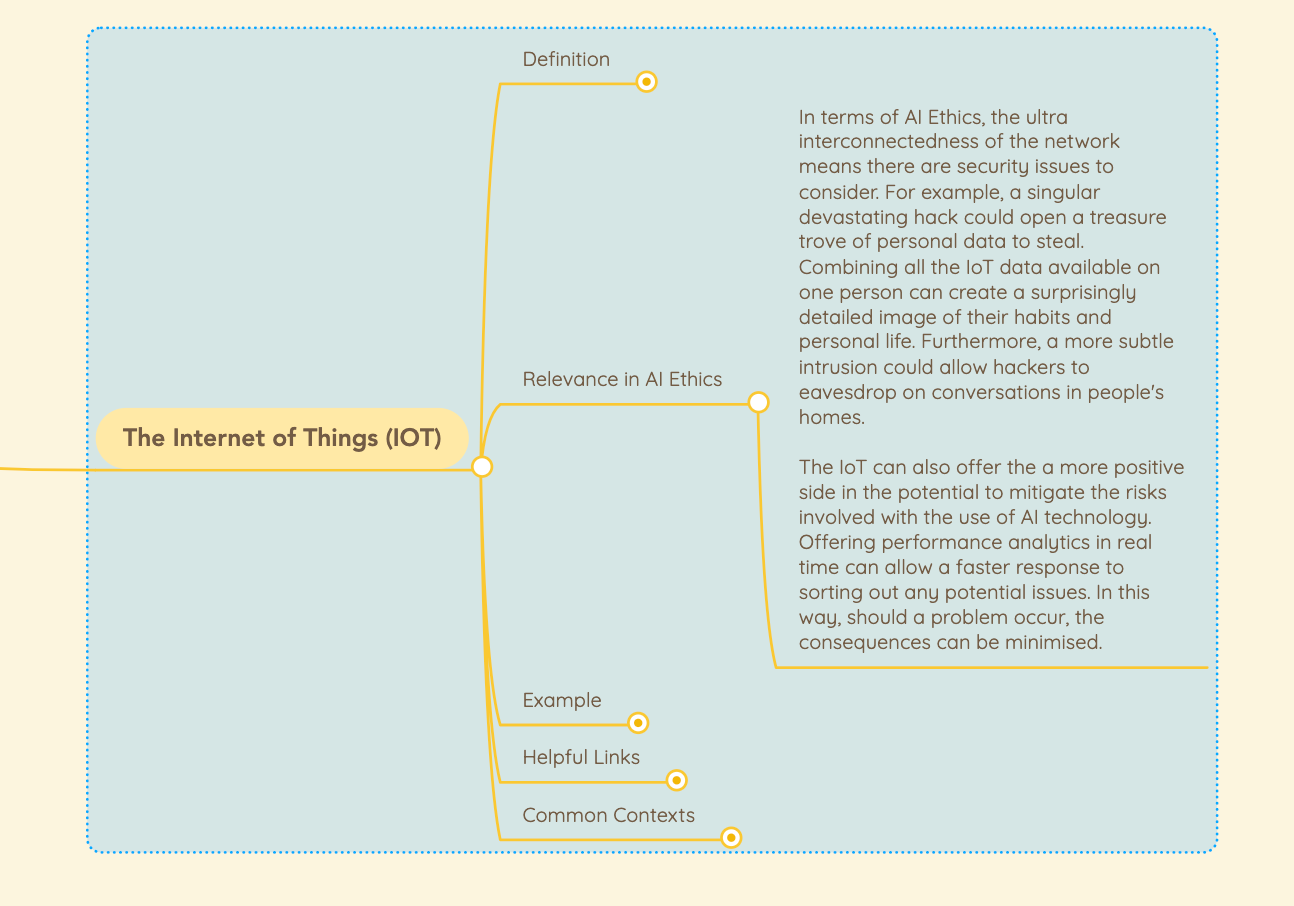

What is the relevance of the Internet of Things to AI Ethics?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

Responsible Artificial Intelligence (Politico)

Our founder, Abhishek Gupta, spoke to Politico on what AI regulation should look like, on the difference between EU and U.S. approaches to AI governance, on the role of China in setting global standards, and on why companies should adopt responsible AI.

Beyond the North-South Fork on the Road to AI Governance

Our founder, Abhishek Gupta, was part of a multidisciplinary group of experts who convened over several months to contribute to this white paper.

Artificial intelligence (AI) is transforming the world faster than the world can mitigate intensifying geopolitical divisions and socio-economic disparities. As technological change outpaces regulatory policy, no common platform has yet emerged to coordinate a variety of governance approaches across multiple national contexts. The concerns and interests of the citizens and civil society of the Global South – broadly, the post-colonial nations of Latin America and the Caribbean, Africa, the Middle East, South and Central Asia, and the Asia-Pacific – must be prioritised by policy makers to reverse increasing fragmentation in the governance of algorithmic platforms and AI-powered systems worldwide. Particular attention must be given to the varied ways in which national governments and transnational corporations deploy such systems to monitor, manage, and manipulate civic-public spaces across the Global South.

The Global South represents a major source of the human-generated data and, indeed, the very raw materials upon which complex computing networks and AI systems rely. It therefore follows that the societies of the Global South are entitled to both equitable economic benefits and meaningful protections from powerful platforms and tools largely controlled by corporations based in the Global North and the great powers, particularly the United States (US) and the People’s Republic of China, but also the European Union (EU). This equity must be predicated upon what we define as an ‘AI constitutionalism’ that approaches AI and big data as fundamental resources within the modern economy akin to electricity and water, essential components for economic and social development in the 21st century.

Call for proposals – Human-Centered AI Book

The HAICU Lab (Human-Centered AI for/by Colleges and Universities Lab), a U7+ initiative comprised of 13 universities from across the globe, is launching a call for proposals to academics from the U7+ Alliance network who wish to contribute their unique and diverse perspectives in a book on the topic of Human-Centered AI (HCAI).

HCAI was selected as one of the core areas in which the U7+ wants to have an impact on universities and society. This book should therefore benefit from the support of the U7+, which should have an interest in endorsing and promoting it throughout its network and beyond.

The book will be published by Routledge’s Chapman & Hall/CRC Artificial Intelligence and Robotics Series in 2023. It will be available as an open access publication in 2024.

💡 In case you missed it:

The Paradox of AI Ethics in Warfare

Interview with Michael Conlin, inaugural Chief Data Officer and Chief Business Analytics Officer (2018-2020), US Department of Defense, May 2021

Perhaps the most fundamental paradox when discussing AI ethics emerges when exploring AI within a domain that is itself regarded by many as unethical. Warfare is arguably the most extreme case. Such domains represent harsh realities that are nonetheless better confronted than avoided. For this interview, I was largely inspired by Abhishek Gupta’s writings on the use of AI in war and his summary of the paper, “Cool Projects” or “Expanding the Efficiency of the Murderous American War Machine?” that investigated AI practitioners’ views on working with the U.S. Department of Defense. (See Gupta’s summary here, full paper here.)

To delve deeper, read the full article here.