AI Ethics Brief #86: Public discourse on AI ethics in China, shadows of removed posts on Reddit, and more ...

Can industry self-governance increase trust in AI?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

⏰ This week’s Brief is a ~16-minute read.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can, to support us: become a paying subscriber for as little as $5/month. If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support the democratization of AI ethics literacy and to ensure we can continue to serve our community.

*NOTE: When you hit the subscribe button, you may end up on the Substack page where if you’re not already signed into Substack, you’ll be asked to enter your email address again. Please do so and you’ll be directed to the page where you can purchase the subscription.

This week’s overview:

🔬 Research summaries:

Enhancing Trust in AI Through Industry Self-Governance

Online public discourse on artificial intelligence and ethics in China: context, content, and implications

Anthropomorphism and the Social Robot

📰 Article summaries:

The Shadows of Removed Posts Are Hiding in Plain Sight on Reddit

What Happens When an AI Knows How You Feel?

The Future of Tech Is Here. Congress Isn't Ready for It

📖 Living Dictionary:

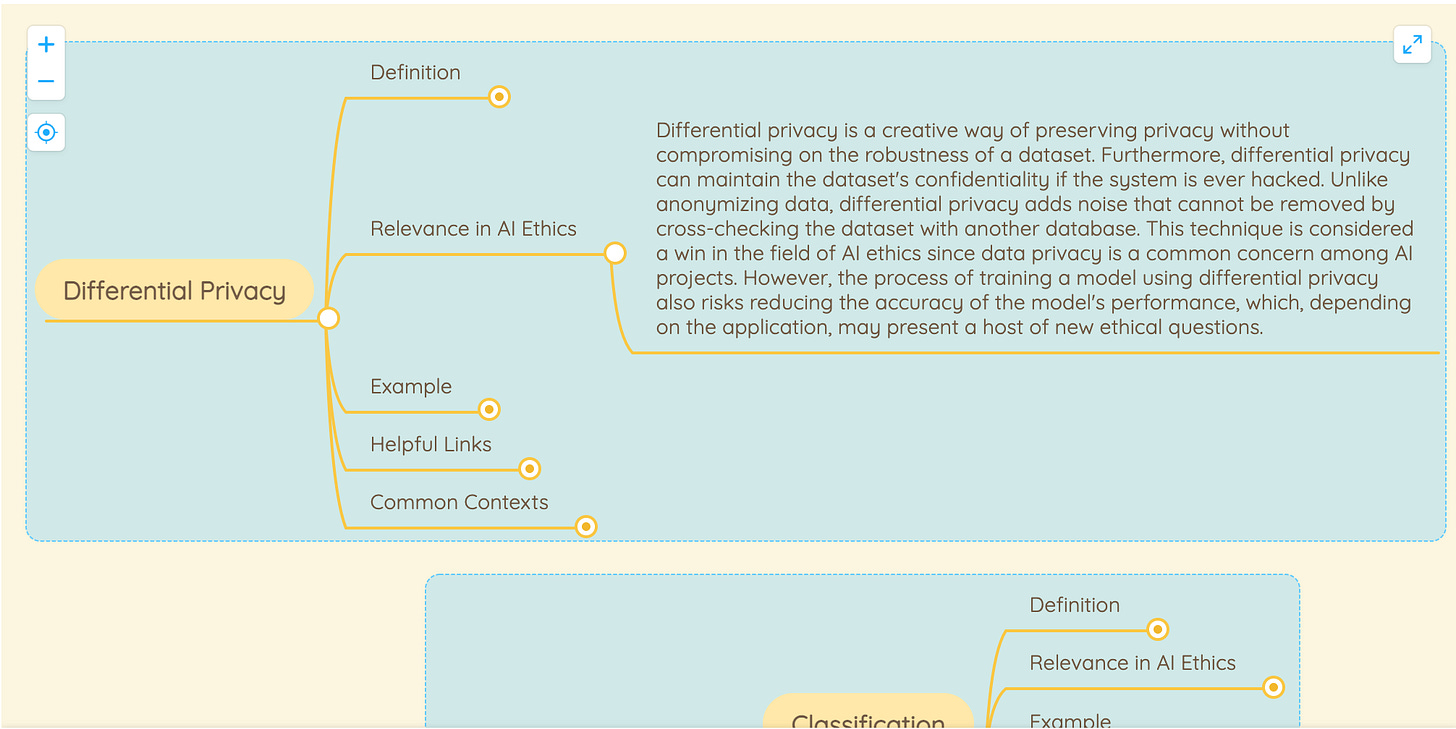

Differential Privacy

🌐 From elsewhere on the web:

Does Military AI Have Gender? Understanding Bias And Promoting Ethical Approaches To AI Military Systems

💡 ICYMI

Data Statements for Natural Language Processing: Toward Mitigating System Bias and Enabling Better Science

But first, our call-to-action this week:

Happy New Year! Welcome to 2022!

We are excited to kickstart 2022 and bring you well-rounded perspectives and content covering a variety of areas in the domain of AI ethics!

If you would like to have your work featured on our website and included in this newsletter to reach thousands of technical and policy leaders in AI ethics, reach out to us!

We are currently looking for research summary writers and will open up more writing opportunities in the coming months for regular contributors.

🔬 Research summaries:

Enhancing Trust in AI Through Industry Self-Governance

Users' trust in AI systems in the healthcare sector is waning because the systems are not delivering their publicized breakthroughs. Against this backdrop, this paper describes a process by which a diverse group of stakeholders could develop and define standards for promoting trust, along with AI risk-mitigating practices, through greater industry self-governance.

To delve deeper, read the full summary here.

Online public discourse on artificial intelligence and ethics in China: context, content, and implications

The societal and ethical implications of artificial intelligence (AI) have sparked vibrant online discussions in China. This paper analyzes a large sample of these discussions, which offer a valuable source for understanding the future trajectory of AI development in China as well as implications for the global dialogue on AI governance.

To delve deeper, read the full summary here.

Anthropomorphism and the Social Robot

Have you ever found a technology too human for your liking? Utilising anthropomorphism to involve AI in our lives is a popular strategy. Yet, its success depends on how well the balance between too much and too little is achieved.

To delve deeper, read the full summary here.

A message from our sponsor this week:

All datasets are biased.

This reduces your AI model's accuracy and includes legal risks.

Fairgen's platform solves it all by augmenting your dataset, rebalancing its classes and removing its discriminative patterns.

Increase your revenues by improving model performance, avoid regulatory fines and become a pro-fairness company.

Want to be featured in next week's edition of the AI Ethics Brief? Reach out to masa@montrealethics.ai to learn about the available options!

📰 Article summaries:

The Shadows of Removed Posts Are Hiding in Plain Sight on Reddit

What happened: An insightful read into what happens when a piece of content goes into the moderator’s pile, this article points out that traces of content that has been removed from a subreddit can still persist on the website. The removed content is accessible in the moderator’s comment history along with an explanation for why it was removed (presumably because it broke the site-wide Reddit rules, or the rules imposed on that subreddit). But the links therein, along with any images, remain accessible to those who know where to look, partially defeating the effort of moderating the content on the website.

Why it matters: This mechanism is particularly problematic because the moderator’s comment history then itself becomes an index to all the banned content, which in the case of NSFW or other kinds of content can become a cesspool. The way to completely get rid of that material is to ask the original poster of that material to delete it, otherwise shadows of that content survive.

Between the lines: Content moderation is an incredibly difficult challenge to solve and every approach comes with its pros and cons. In this case, the archiving of moderator activity is a huge leakage that undermines the efforts of maintaining the health of the information ecosystem. For motivated actors, this lacuna means they can easily source a ton of banned material in an accessible fashion. What we really need is a stress test and red-teaming approach to content moderation to unearth other such gaps that need to be plugged to make these approaches truly effective.

What Happens When an AI Knows How You Feel?

What happened: This article discusses ToneMeter and similar tools built into co-parenting apps, which algorithmically mediate conversations between separated partners with shared childcare duties, as well as other situations where communication between individuals may be charged. The purpose of such a tool is to help participants maintain a positive tone, especially when there is history and an inclination to adopt negative framing. This applies to matters like who is responsible for picking up the child from school, taking them to classes, or any other case that requires short and functional communication. The tools have had positive results in reducing conflicts and are now even recommended by courts.

Why it matters: The natural language processing system can help defuse tensions by indicating when a conversation might be headed towards conflict, thus pre-empting it. Yet the numerous limitations of such systems, such as bias and high performance being limited to a few dominant languages, hinder how effective they can be. Lastly, there is the problem of maintaining context over a long time. Humans do this naturally, but many language models struggle to retain context over long-running exchanges, which can make them over- or under-sensitive to triggers for conflict in conversation.

Between the lines: Emotion detection is rife with problems, never more so than when the detected emotions are used to mediate and shape interactions between individuals. Even more problematic than the issues pointed out earlier is the risk of over-reliance on machines to smooth over our interactions with each other, stripping people of the agency to make mistakes and learn from them, an essential quality of being human.

The Future of Tech Is Here. Congress Isn't Ready for It

What happened: An interview with former US Congressperson Will Hurd dives into his outlook for the future of technology and the challenges facing Congress in trying to come up with meaningful and timely regulations. In particular, he was behind the push for some regulations relating to cybersecurity and responsible AI. He remarks that even a year after the attack on the US Capitol, disinformation runs rampant on social media, and that the paralysis in lawmaking stems from extreme polarization on these issues, especially as some of them remain politically charged.

Why it matters: As we live more and more of our lives digitally, slow responses to rapidly developing technology pose an even greater threat as adversaries, foreign and domestic, exploit chinks in platforms to push their agendas through targeted misinformation and disinformation campaigns. With cryptocurrencies and the metaverse raising new concerns about their impacts on society, the current speed of drafting and enacting legislation isn't encouraging.

Between the lines: The problems articulated by Hurd in this interview apply beyond the US ecosystem as well. Similar problems of policymakers' and lawmakers' limited understanding of cutting-edge technology and how to regulate it effectively plague all nations, more so those that are net importers of these technologies and don't have a strong local research and development ecosystem. Finding ways to accelerate the feedback loop so that regulations are nimble and able to change quickly in response to the capabilities and limitations of these technologies will be essential to properly harness them to achieve both economic and social goals.

📖 From our Living Dictionary:

“Differential Privacy”

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

Does Military AI Have Gender? Understanding Bias And Promoting Ethical Approaches To AI Military Systems

Our founder, Abhishek Gupta, spoke at this event, which addressed the significance of gender issues in the development and deployment of military artificial intelligence (AI) systems. It provided an opportunity to present findings from UNIDIR research about gender bias in data collection, algorithms, and computer processing, and their implications for AI military systems.

💡 In case you missed it:

Data Statements for Natural Language Processing: Toward Mitigating System Bias and Enabling Better Science

This paper provides a new methodological instrument to give people using a dataset a better idea of its generalizability, the assumptions behind it, the biases it might have, and the implications of its use in deployment. It also details some of the accompanying changes required in the field writ large to enable this to function effectively.

To delve deeper, read the full summary here.
