AI Ethics Brief #85: Queer internet in China, sustainability ethics and AI, UNESCO's recommendations, and more ...

What are some problems with single-dimensional metrics when embarking on digital sustainability?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

⏰ This week’s Brief is a ~18-minute read.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for as little as $5/month. If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support the democratization of AI ethics literacy and to ensure we can continue to serve our community.

*NOTE: When you hit the subscribe button, you may land on the Substack page. If you’re not already signed in to Substack, you’ll be asked to enter your email address again. Please do so, and you’ll be directed to the page where you can purchase the subscription.

This week’s overview:

✍️ What we’re thinking:

“Welcome to AI”: a talk given to the Montreal Integrity Network

🔬 Research summaries:

UNESCO’s Recommendation on the Ethics of AI

The Ethics of Sustainability for Artificial Intelligence

📰 Article summaries:

China’s queer internet is being erased

The Popular Family Safety App Life360 Is Selling Precise Location Data on Its Tens of Millions of Users

Singapore’s tech-utopia dream is turning into a surveillance state nightmare

📅 Event

Value Sensitive Design and the Future of Value Alignment in AI

📖 Living Dictionary:

Reinforcement Learning

🌐 From elsewhere on the web:

Beyond Single-Dimensional Metrics for Digital Sustainability

💡 ICYMI

Explaining the Principles to Practices Gap in AI

But first, our call-to-action this week:

Value Sensitive Design and the Future of Value Alignment in AI

This online roundtable will explore whether and how Value Sensitive Design (VSD) can advance AI ethics in theory and practice. We expect a large audience of AI ethics practitioners and researchers. We will discuss the promise, challenges, and potential of VSD as a methodology for AI ethics and, specifically, for value alignment.

Geert-Jan Houben (Pro Vice-Rector Magnificus Artificial Intelligence, Data and Digitalisation, TU Delft) will give the opening remarks.

Jeroen van den Hoven (Professor of Ethics and Philosophy of Technology, TU Delft) will act as moderator.

The panellists are as follows:

Idoia Salazar: President & co-founder of OdiseIA and Expert at the European Parliament’s Artificial Intelligence Observatory.

Marianna Ganapini PhD: Faculty Director, Montreal AI Ethics Institute, Assistant Professor of Philosophy, Union College.

Brian Christian: Journalist, UC Berkeley Science Communicator in Residence, NYT best-selling author of ‘The Alignment Problem’, Finalist LA Times Best Science & Technology Book.

Batya Friedman: Professor in the Information School, University of Washington, inventor and leading proponent of VSD.

✍️ What we’re thinking:

“Welcome to AI”: a talk given to the Montreal Integrity Network

In a talk given to the Montreal Integrity Network, Connor Wright (Partnerships Manager) introduces the field of AI Ethics. Moving from demystifying AI to a facial recognition use case, he presents AI as a sword that we should wield, but only with proper training.

To delve deeper, read the full article here.

🔬 Research summaries:

UNESCO’s Recommendation on the Ethics of AI

The Director-General of the United Nations Educational, Scientific and Cultural Organization (UNESCO) convened an Ad Hoc Expert Group (AHEG) to prepare a Draft Text of a Recommendation on the Ethics of Artificial Intelligence (hereinafter “the Recommendation”) and submitted the draft text to a special committee meeting of technical and legal experts designated by Member States. The special committee meeting revised the draft Recommendation and approved the text for submission to the General Conference at its 41st Session for adoption. The Recommendation was subsequently adopted unanimously by all 193 Member States on 24 November 2021.

To delve deeper, read the full summary here.

The Ethics of Sustainability for Artificial Intelligence

AI can have significant effects on domains associated with sustainability, such as aspects of the natural environment. However, sustainability work to date, including work on AI and sustainability, lacks clarity on the ethical details, such as what is to be sustained, why, and for how long. Differences in these details have important implications for what should be done, including for AI. This paper provides a foundational ethical analysis of sustainability for AI and calls for work on AI to adopt a concept of sustainability that is non-anthropocentric, long-term oriented, and morally ambitious.

To delve deeper, read the full summary here.

📰 Article summaries:

China’s queer internet is being erased

What happened: In July this year, some of the most prominent and well-connected social media accounts serving LGBTQI communities were banned, cutting off people across the country who relied on them to coordinate online activities and discuss the issues they face. Some of the people interviewed for the article said they had seen the ban coming, given the slow erosion of safe spaces, both offline and online, in which to organize. In the early days, institutions such as universities supported these accounts as a way to show that they were open and progressive. But the communities were increasingly accused of anti-national sentiment, and these accusations became grounds for censorship. Even apps like Blued, which ostensibly serve these communities, have started acting in line with the national government’s interests, for example by awarding credits for good behaviour on the platform and stripping them away whenever an account posts anything that might draw the ire of government censors.

Why it matters: Given the continued taboo around LGBTQI identity in China, online spaces offered, through their anonymity, a safe place to explore and discuss these issues while working toward the right to be more open. But, as the interviewees point out, anything related to rights attracts censors more quickly and is more likely to get an account shut down. As a compromise, the accounts that remain active are used to share resources on sexual health and other topics that aren’t rights-related, which still lets these communities convene without drawing outright bans.

Between the lines: As more and more of our activities leave digital traces, the situation in China doesn’t bode well for people organizing around interests and identities that the government doesn’t accept. This is particularly problematic in places where few people share one’s LGBTQI identity, making it incredibly difficult to find others to share one’s struggles with. It can also worsen mental health for those who cannot find a like-minded community. Moving toward offline, in-person gatherings is one way to counter this, but it comes at the cost of anonymity and, with the ongoing pandemic, elevated health risks.

The Popular Family Safety App Life360 Is Selling Precise Location Data on Its Tens of Millions of Users

What happened: Data brokers operate in the shadows, largely away from scrutiny and regulation, supplying a market worth billions of dollars with data aggregated from many sources. That data fuels targeted advertising, among other services, that chip away at consumers’ agency, wallets, and autonomy. The article examines Life360, a company whose app lets families track the locations of their kids and is pitched as a safety product. Buried in the fine print, which parents sometimes gloss over, is the fact that this data is sold downstream to third parties, including government agencies. While the company recently adopted a policy of not selling data to law enforcement, it has been selling location data for many years, so the data has potentially traveled far.

Why it matters: While such apps give parents a degree of comfort and utility in monitoring their kids, the cost may be too high. Location data can be used to build a rich profile of a child that follows them for the rest of their life, as data brokers enrich these datasets with more information and sell them downstream for uses ranging from targeted advertising to setting insurance premiums and other high-stakes decisions. Because the app collects the data directly and sells it later, once it is centralized, the usual approach of privacy researchers, which is to hunt for code inside an app that links to common data brokers, doesn’t work here. Some of Life360’s data buyers have been flagged for problematic behaviour by several concerned parties, including Senator Ron Wyden’s office.

Between the lines: What is appalling about the whole situation is that such companies use events like the pandemic, and the veneer of public service that comes from selling this data to organizations doing good work like the CDC, to draw attention away from the fact that they also sell it to unscrupulous parties. Moreover, they exploit parents’ fears about child safety as a Trojan horse for more invasive data gathering that fuels their bottom line, completely out of sync with their stated values. Business goals and stated values are often in conflict, and the current trend indicates that business goals are winning by a mile.

Singapore’s tech-utopia dream is turning into a surveillance state nightmare

What happened: In Singapore, technology is seen as an instrument for enabling a fulfilling and meaningful life, and the government has been given wide leeway to impose technological solutions in service of building a utopia. But as the pandemic has dragged on, citizens are chafing against the intrusions that constant digital surveillance now imposes on their lives. Enthusiasm for technology remains so high that the government offers regulatory sandboxes in which companies can experiment with novel technologies before they are brought into mainstream society. Examples include smart lamp posts that monitor traffic, environmental conditions, and people’s movements; robots for elder care; and biometric databases used to process people at borders and to improve security at banks and public services. With apps like TraceTogether and SafeEntry becoming mandatory and combined into a single experience, movement tracking in the name of curbing the pandemic became ubiquitous.

Why it matters: These apps collect highly detailed data, which is especially concerning given that they are linked to Singapore’s national identity system. Even though the government assured citizens that the technology would only be used for contact tracing, it was later revealed that the data had been shared with law enforcement and used as evidence in criminal cases. People have been slow to question these practices and raise concerns because of Singapore’s strict information ecosystem: laws like the Protection from Online Falsehoods and Manipulation Act (POFMA) and the Foreign Interference (Countermeasures) Act (FICA), which are meant to guard against misinformation and foreign interference, are being used to tightly control what is said about the government’s use of technology.

Between the lines: Migrant workers, who form the backbone of the physical labor that built the infrastructure powering and enabling everything in Singapore, are the targets of technological experimentation and are often forced to live with very limited rights. Once a technology is refined, it is deployed en masse to the rest of the population. Bundling solutions together and tying them to the national identity system may have helped Singapore return to normalcy faster after the pandemic, but it has come at the astronomical cost of introducing extremely pervasive and intrusive surveillance technology that shows no signs of going away.

📖 From our Living Dictionary:

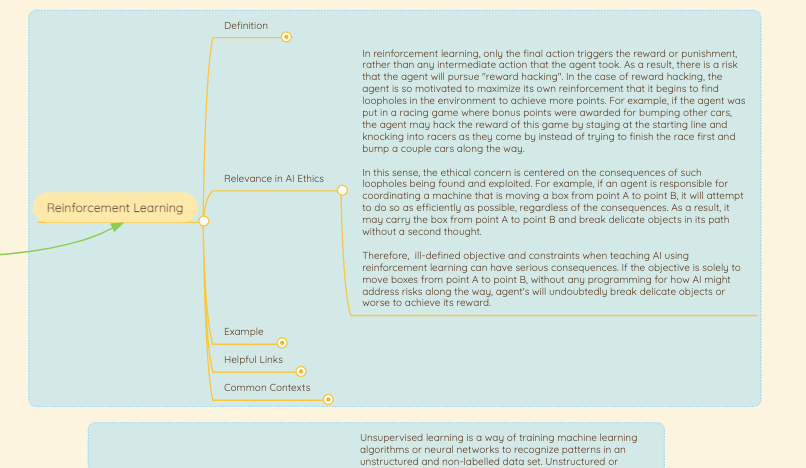

“Reinforcement Learning”

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

Beyond Single-Dimensional Metrics for Digital Sustainability

Our founder, Abhishek Gupta, was published in Branch, the award-winning magazine from Climate Action Tech, writing about the problems with single-dimensional metrics and how we can address the challenges they raise.

Have you ever worked on greening a piece of software, checked it against a tool to see whether it made an impact, and ended up disappointed?

Sometimes that is because the area you targeted didn’t consume enough energy for your change to register in whatever the tool measures. In other cases, your change truly does reduce the software’s energy consumption, but the tool is measuring something else.

💡 In case you missed it:

Explaining the Principles to Practices Gap in AI

As many principles permeate AI development to guide it toward ethical, safe, and inclusive outcomes, we face a challenge: there is a significant gap in implementing them in practice. This paper outlines potential causes of this gap in corporations: misaligned incentives, the complexity of AI’s impacts, the disciplinary divide, the organizational distribution of responsibilities, the governance of knowledge, and challenges in identifying best practices. It concludes with a set of recommendations for addressing these challenges.

To delve deeper, read the full summary here.
