AI Ethics Brief #111: Responsible data sourcing, data documentation desiderata, toxicity triggers on Reddit, virtue ethics, and more ...

How do we better scope AI governance?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

⏰ This week’s Brief is a ~41-minute read.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for as little as $5/month. If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support the democratization of AI ethics literacy and to ensure we can continue to serve our community.

NOTE: When you hit the subscribe button, you may end up on the Substack page where, if you’re not already signed in to Substack, you’ll be asked to enter your email address again. Please do so, and you’ll be directed to the page where you can purchase the subscription.

This week’s overview:

✍️ What we’re thinking:

Responsible sourcing and the professionalization of data work

🔬 Research summaries:

Human-centred mechanism design with Democratic AI

Scoping AI Governance: A Smarter Tool Kit for Beneficial Applications

Predatory Medicine: Exploring and Measuring the Vulnerability of Medical AI to Predatory Science

Understanding Machine Learning Practitioners’ Data Documentation Perceptions, Needs, Challenges, and Desiderata

Understanding Toxicity Triggers on Reddit in the Context of Singapore

Designing a Future Worth Wanting: Applying Virtue Ethics to Human–Computer Interaction

Broadening AI Ethics Narratives: An Indic Art View

📰 Article summaries:

The messy morality of letting AI make life-and-death decisions

Dollars to Megabits, You May Be Paying 400 Times As Much As Your Neighbor for Internet Service

In the ultimate Amazon smart home, each device collects your data

📖 Living Dictionary:

What is differential privacy?

🌐 From elsewhere on the web:

When choosing a responsible AI leader, tech skills matter

💡 ICYMI

Zoom Out and Observe: News Environment Perception for Fake News Detection

But first, our call-to-action this week:

Now I'm Seen: An AI Ethics Discussion Across the Globe

We are hosting a panel discussion to amplify the approach to AI Ethics in the Nepalese, Vietnamese and Latin American contexts.

This discussion aims to amplify the enriching perspectives within these contexts on how to approach common problems in AI Ethics. The event will be moderated by Connor Wright (our Partnerships Manager), who will guide the conversation to best engage with the different viewpoints available.

This event will be online via Zoom. The Zoom link will be sent 2-3 days prior to the event taking place.

✍️ What we’re thinking:

Responsible sourcing and the professionalization of data work

The bulk of machine learning activities involves the tedious work of data preparation, including annotation and labeling. Somewhat ironically, much of this work is performed by humans. It is typically outsourced to companies – data suppliers – operating in low-wage countries with little to no standards or regulations.

As awareness of these exploitative conditions in today’s AI industry grows, organizations, including data suppliers and the AI companies whose business they compete for, are pushing for responsible sourcing practices.

Beyond providing living wages, employee benefits, and opportunities for career advancement, the efforts of these organizations serve to increasingly expose, validate and professionalize the occupation, making it less vulnerable to unfair practices.

In this interview, Natalie Klym explores how one such company, Sama (formerly Samasource), is leading these initiatives.

To delve deeper, read the full article here.

🔬 Research summaries:

Human-centred mechanism design with Democratic AI

An under-explored area in AI is how it can help humans design thriving societies. Could an AI design an appropriate economic mechanism to help achieve a democratic goal? This research says yes.

To delve deeper, read the full summary here.

Scoping AI Governance: A Smarter Tool Kit for Beneficial Applications

The public sector can play an important role in governing artificial intelligence, acting directly or indirectly to help shape AI systems that benefit society. This paper examines the various tools and approaches policymakers can leverage to accomplish this by generating AI governance strategies that promote fairness and transparency. By exploring the benefits and drawbacks of these policy tools in more detail, the author aims to generate conversation amongst policymakers about which approaches are best suited for creating strong frameworks for AI governance.

To delve deeper, read the full summary here.

Predatory Medicine: Exploring and Measuring the Vulnerability of Medical AI to Predatory Science

The security, integrity, and credibility of Medical AI (MedAI) tools are paramount because patient care decisions depend on them. MedAI solutions often rely heavily on scientific medical research literature as a primary data source, which makes that literature a potential target for attackers. We present a first study that identifies the presence of predatory publications in MedAI inputs and demonstrates how predatory science can jeopardize the credibility of MedAI solutions, making their real-life deployment questionable.

To delve deeper, read the full summary here.

Understanding Machine Learning Practitioners’ Data Documentation Perceptions, Needs, Challenges, and Desiderata

Data documentation, a practice whereby engineers and ML/AI practitioners provide detailed information about the process of data creation and its current and future uses, is an important on-the-ground part of the push towards responsible AI. The authors interview 14 ML practitioners at a large international information technology company to explore their data documentation practices. They then propose 7 design criteria to make data documentation more streamlined and integrated into ML practitioners’ day-to-day work.

To delve deeper, read the full summary here.

Understanding Toxicity Triggers on Reddit in the Context of Singapore

While the contagious nature of online toxicity sparked increasing interest in its early detection and prevention, most of the literature focuses on the Western world. In this work, we demonstrate that 1) it is possible to detect toxicity triggers in an Asian online community, and 2) toxicity triggers can be strikingly different between Western and Eastern contexts.

To delve deeper, read the full summary here.

Designing a Future Worth Wanting: Applying Virtue Ethics to Human–Computer Interaction

As the ethical consequences of digital technology become more and more apparent, designers and firms are looking for guiding frameworks to design digital experiences that are better for humanity. This paper introduces designers and researchers to virtue ethics, a framework first developed millennia ago but which has not been much appreciated in contemporary circles.

To delve deeper, read the full summary here.

Broadening AI Ethics Narratives: An Indic Art View

The paper explores how non-Western ethical abstractions, methods of learning, and participatory practices observed in Indian arts, one of the most ancient yet perpetual and influential art traditions, can inform the AI ethics community. We derive insights such as the need for incorporating holistic perspectives, recognizing AI ethics as a multimodal, dynamic and shared lifelong learning process, and the need for identifying ethical commonalities across multiple cultures and using those to inform AI design.

To delve deeper, read the full summary here.

📰 Article summaries:

The messy morality of letting AI make life-and-death decisions

What happened: Philip Nitschke (founder of Exit International) is in the last few rounds of testing his new Sarco machine, which will “demedicalize death,” before shipping it to Switzerland. The Sarco, from the word sarcophagus, is an automated euthanasia machine. A person who has chosen to die will be sealed in this machine and asked three questions: (1) Who are you? (2) Where are you? (3) Do you know what will happen when you press that button? Afterward, the Sarco will fill with nitrogen gas, and the occupant will pass out in less than a minute before dying by asphyxiation in around five minutes.

Why it matters: Exit International is working on an algorithm that aims to allow people to perform a psychiatric self-assessment on a computer, which (if passed) would allow them to activate the Sarco by themselves. Although Nitschke sees AI as a way to empower individuals to make the ultimate choice on their own, this raises the concern that AI’s role may be expanding from medical decisions to moral ones.

Between the lines: “Certain processes—bureaucratic, technical, and algorithmic—can make difficult questions seem neutral and objective. They can obscure the moral aspects of a choice.” Nitschke’s story highlights complex topics, such as what counts as a medical decision, what counts as an ethical one, and who gets to choose. For Nitschke, assisted suicide is an ethical decision that individuals must make for themselves.

Dollars to Megabits, You May Be Paying 400 Times As Much As Your Neighbor for Internet Service

What happened: After analyzing more than 800,000 internet service offers from AT&T, Verizon, Earthlink, and CenturyLink in 38 cities across America, The Markup found fast base speeds at or above 200 Mbps in some neighborhoods for the same price as connections below 25 Mbps in others. Neighborhoods with lower median incomes were offered the worst deals in 9 out of 10 cities in the analysis. Moreover, in two-thirds of the cities with enough data to compare, the providers gave the worst offers to the least-White neighborhoods.

Why it matters: None of the providers denied charging the same fee for different internet speeds in different neighborhoods. They said their intentions were not to discriminate against communities of color. However, these internet providers have the power because the federal government does not regulate internet prices. After all, internet service is not considered a utility, so providers can make their own decisions about how much to charge.

Between the lines: These findings show how much of America’s internet market is shaped by internet service providers deciding not to invest in high-speed infrastructure in marginalized areas. This has several significant consequences, such as residents of specific neighborhoods being denied the ability to participate in remote learning, well-paying remote jobs, and even family connection.

In the ultimate Amazon smart home, each device collects your data

What happened: According to Consumer Intelligence Research Partners, two-thirds of Americans who shop on Amazon own at least one of its smart gadgets. The fact that Amazon collects more data than almost any other company makes this statistic even more alarming. The company says it doesn’t “sell” our data, but unfortunately, there aren’t many U.S. laws to restrict how it uses the information.

Why it matters: This article goes through various Amazon products and services and outlines the data each one collects and why that matters. For example, the Echo speaker collects audio recordings through an always-on microphone, keeps voice IDs, detects coughs, etc. Amazon touts privacy controls like a physical microphone mute button, but in certain instances, the device has recorded sensitive conversations after its microphone was activated unintentionally.

Between the lines: The growing number of devices that can collect user data in our homes is a cause for concern. Though they can be seen as neat gadgets that improve certain aspects of our lives, it is also essential to be cognizant of the privacy issues that are arising as a result of these technologies.

📖 From our Living Dictionary:

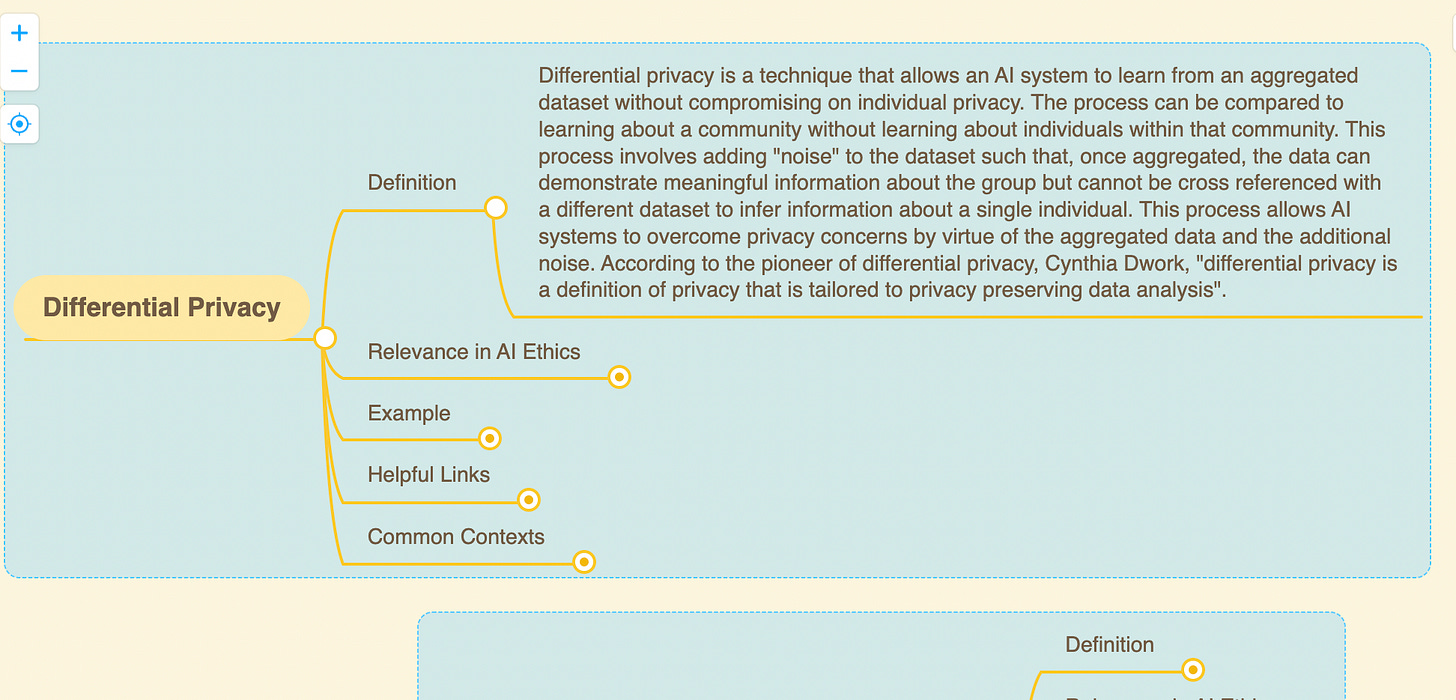

What is differential privacy?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

When choosing a responsible AI leader, tech skills matter

Our founder, Abhishek Gupta, wrote about how hiring RAI leaders with only philosophy and legal backgrounds might not be good enough for incorporating ethics in today’s fast-evolving AI.

2022 Tech Ethics Symposium: How Can Algorithms Be Ethical? Finding Solutions through Dialogue

Our founder, Abhishek Gupta, will be speaking at this event hosted by Duquesne University on “How Can Social Institutions Work Toward Ethical Outcomes in Tech? The Needs and Hopes for Better Tech Governance, Policy, and Transparency”.

💡 In case you missed it:

Zoom Out and Observe: News Environment Perception for Fake News Detection

Recent years have witnessed a surge of fake news detection methods that focus on either post contents or social contexts. In this paper, we provide a new perspective—observing fake news in the “news environment”. With the news environment as a benchmark, we evaluate two factors much valued by fake news creators, the popularity and the novelty of a post, to boost the detection performance.

To delve deeper, read the full summary here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.

First and foremost, AI is part of the CIO portfolio. So AI is inherently part of the current CIO IT/IS/Cloud Governance program under either PPBS or CPIC. This also includes any required audits of IT/IS/Cloud. AI isn't a separate category unto itself. So, both the ISO IT Governance and AI Governance standards are used to shape an updated IT Modernization Governance program.