AI Ethics Brief #79: Anthropomorphic interactions with robots, UK's AI Roadmap, the power of small data, and more ...

Why is Europe not investing in policing biased AI in recruiting?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

⏰ This week’s Brief is a ~15-minute read.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for as little as $5/month. If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support the democratization of AI ethics literacy and to ensure we can continue to serve our community.

*NOTE: When you hit the subscribe button, you may end up on the Substack page where if you’re not already signed into Substack, you’ll be asked to enter your email address again. Please do so and you’ll be directed to the page where you can purchase the subscription.

This week’s overview:

✍️ What we’re thinking:

UK’s roadmap to AI supremacy: Is the ‘AI War’ heating up?

🔬 Research summaries:

Co-Designing Checklists to Understand Organizational Challenges and Opportunities around Fairness in AI

Anthropomorphic interactions with a robot and robot-like agent

📰 Article summaries:

A tiny tweak to Zomato’s algorithm led to lost delivery riders, stolen bikes and missed wages

Europe wants to champion human rights. So why doesn’t it police biased AI in recruiting?

Small Data Are Also Crucial for Machine Learning

📖 Living Dictionary:

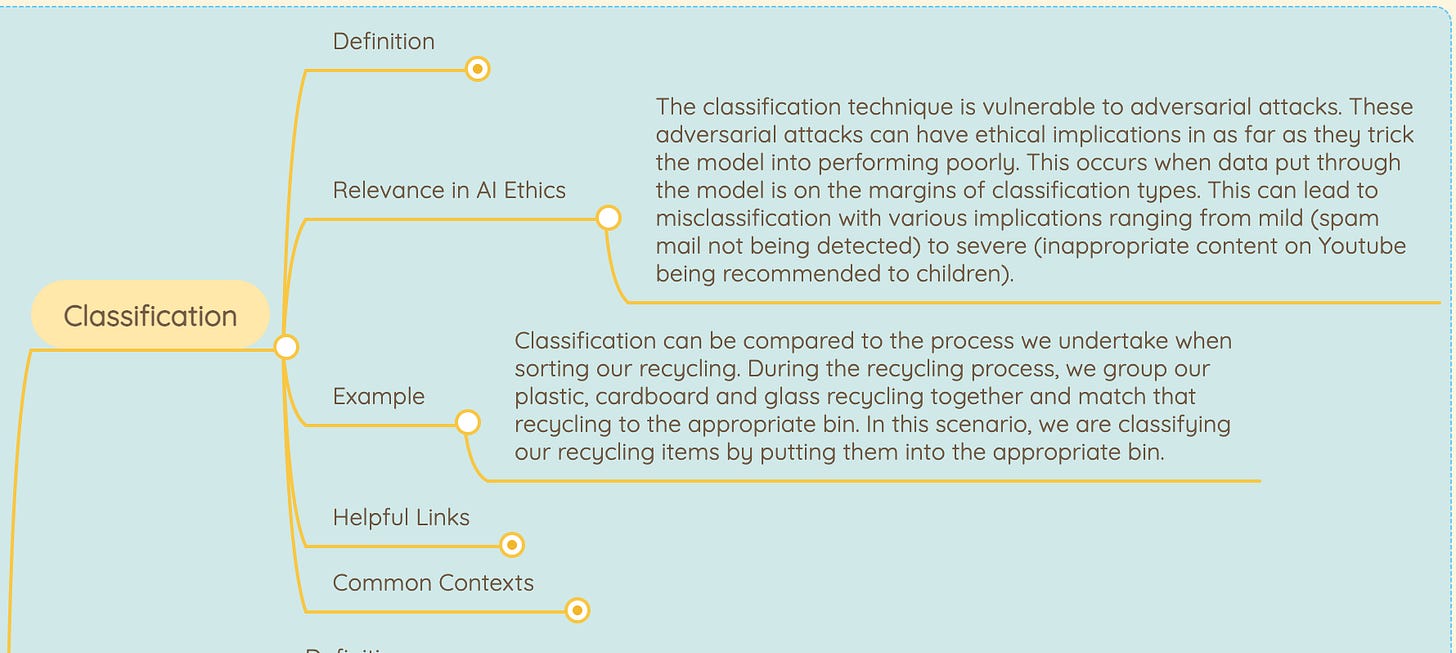

Classification

💡 ICYMI

Reliabilism and the Testimony of Robots

But first, our call-to-action this week:

The Trustworthy ML Initiative celebrates its one year anniversary with this special event, held jointly with Montreal AI Ethics Institute.

To achieve the promise of AI as a tool for societal impact, black-box models must not only be "accurate" but also satisfy trustworthiness properties that facilitate open collaboration and ensure ethical and safe outcomes. The purpose of this un-symposium is to discuss the interdisciplinary topics of robustness, fairness, privacy, and ethics of AI tools. In particular, we want to highlight the significant gap in deploying these AI models in practice when the stakes are high for commercial applications of AI where millions of human lives are at risk. We welcome researchers, stakeholders, and domain experts to join us.

✍️ What we’re thinking:

UK’s roadmap to AI supremacy: Is the ‘AI War’ heating up?

The UK’s first National Artificial Intelligence Strategy was presented to Parliament by Nadine Dorries, Secretary of State for Digital, Culture, Media and Sport, by Command of Her Majesty on September 22, 2021. The centerpiece of the strategy is a highly ambitious ten-year plan ‘to make Britain a global AI superpower’. Further, according to Dorries, ‘this strategy will signal to the world UK’s intention to build the most pro-innovation regulatory environment in the world’.

To delve deeper, read the full article here.

🔬 Research summaries:

Co-Designing Checklists to Understand Organizational Challenges and Opportunities around Fairness in AI

In the burgeoning literature on AI ethics and the values that should be respected in the development and use of artificial intelligence systems (AIS), fairness comes up repeatedly, perhaps as an echo of the very current notion of social justice. Authors Madaio, Vaughan, Stark and Wallach (Microsoft Research) have co-developed a checklist that seeks to ensure fairness, while recognizing that a procedure alone cannot overcome the value tensions and incompatibilities in ethical practice.

To delve deeper, read the full summary here.

Anthropomorphic interactions with a robot and robot-like agent

Would you be more comfortable disclosing personal health information to a physical robot or a chatbot? This study suggests that anthropomorphism may be the way for robots to interact with humans, but most definitely not the way to acquire personal information.

To delve deeper, read the full summary here.

📰 Article summaries:

A tiny tweak to Zomato’s algorithm led to lost delivery riders, stolen bikes and missed wages

What happened: Zomato, a food delivery app popular in India, increased the delivery radius for workers from 10 km to 40 km, which had an immediate impact on the number of deliveries they are able to complete in a day. The workers are forced to take on deliveries that push them progressively further from their “home zones.” They tried workarounds like switching off their GPS so that they would not receive orders taking them far away, but that meant time off the app, which reduced their earning potential. After significant protests by workers in Bengaluru, Zomato rolled the change back for workers who have been with the platform for more than 3 years, but not for newer ones.

Why it matters: The agents are incentivized based on the number of deliveries they complete in a day, and having to travel further reduces that number. Rejecting orders is not an option either, since that directly affects their rating within the platform and the number of deliveries they are allocated based on that rating. Finally, redressal mechanisms are mostly automated, fixed menu options that don’t give them much agency with the company.

Between the lines: Workers have tried to organize and have raised concerns with the Supreme Court of India, seeking to be classified as wage workers rather than contractors so that they have more rights and labor law protections, but that has been unsuccessful so far. As with gig economy issues elsewhere in the world, workers are disempowered and helpless, especially in a country like India where the wages they do receive are very low, barely helping them meet basic daily needs.

Europe wants to champion human rights. So why doesn’t it police biased AI in recruiting?

What happened: Arguing that Europe is in dire need of innovation and growth, which diversity in hiring can enable, the author makes the case that regulation and legislation at every level ignore the impact of biased hiring algorithms, leaving job seekers at the mercy of highly problematic systems. For those unfamiliar with hiring practices in Europe, CVs typically include a picture along with the applicant’s name, which can lead to implicit racial bias. The Digital Services Act in Europe is currently ill-equipped to handle this.

Why it matters: This goes against laws in several European countries that prohibit the use of race in hiring decisions. Because many companies use automated systems to process incoming applications and speed up a time- and resource-intensive process, illegality might be getting buried behind an opaque wall of black-box systems, where it is difficult to point out which factors were used to make a hiring decision.

Between the lines: Many examples have already demonstrated that hiring decisions based on algorithmic filtering tend to reproduce strong biases, especially along gender and race lines. This happens even when data on these protected attributes is not collected: proxy variables capture correlations between protected and non-protected attributes, which negates the benefit of not collecting the protected data in the first place. Without stronger legal mandates, firms may continue to use such biased systems, severely impacting the livelihoods of people who become the subjects of algorithmic discrimination.
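To make the proxy-variable point concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration and does not come from the article: the applicant pool is synthetic, the groups "A" and "B" stand in for a protected attribute, and the postcode feature and the names `blind_rule` and `selection_rates` are hypothetical. It only shows how a rule that never sees the protected attribute can still produce very different outcomes per group.

```python
from collections import defaultdict

# Hypothetical applicant pool: (group, postcode). "group" stands in for a
# protected attribute and is never shown to the screening rule.
APPLICANTS = (
    [("A", "north")] * 80 + [("A", "south")] * 20
    + [("B", "north")] * 20 + [("B", "south")] * 80
)


def blind_rule(postcode):
    """Toy screening rule that only sees the postcode, never the group."""
    return postcode == "north"


def selection_rates(applicants, rule):
    """Return the share of each group that the rule shortlists."""
    shortlisted = defaultdict(int)
    totals = defaultdict(int)
    for group, postcode in applicants:
        totals[group] += 1
        if rule(postcode):
            shortlisted[group] += 1
    return {g: shortlisted[g] / totals[g] for g in totals}


rates = selection_rates(APPLICANTS, blind_rule)
# Ratio of the lowest to the highest selection rate. Values far below 1
# mean the "blind" rule still treats the groups very differently.
impact_ratio = min(rates.values()) / max(rates.values())
print(rates, round(impact_ratio, 2))
```

In this toy pool, postcode is strongly correlated with group membership, so filtering on postcode alone shortlists most of one group and few of the other. Dropping the protected column from the data does not remove that effect.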

Small Data Are Also Crucial for Machine Learning

What happened: The article makes the case that transfer learning, in which a pre-trained model is fine-tuned on a small, highly domain-specific dataset to improve performance on a new task, has a lot of promise and remains under-explored at the moment. It points out that there has been a lot of success in applying this to tasks in computer vision (CV) and natural language processing (NLP), such as the use of models pre-trained on ImageNet. But it also points out that performance can suffer if there is limited overlap between the domain of the new task and the dataset on which the model was pre-trained.

Why it matters: Nonetheless, transfer learning is a promising area of research that deserves attention, given that it can elevate the power of small data. Especially when there is a high financial cost to training large models, the ability to fine-tune pre-trained models through transfer learning gives resource-constrained researchers an avenue to harness the power of AI. It can also help us mitigate the environmental impact of AI systems by avoiding the need to train really large models from scratch while still performing well in small data regimes.

Between the lines: There are many benefits to having large, generalized models that can be taken off the shelf and fine-tuned for new tasks, because they demonstrate the “generalizability” of such powerful models, one of the key things that any AI practitioner would love to have when developing AI systems. The more we are able to harness existing models where investments have already been made to bring them up to a baseline level of performance, the more we’ll be able to democratize access to performant AI systems in novel domains for people who were previously limited in their ability to build and access such systems.
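For readers who want a feel for the mechanics, the following is a minimal sketch of one common transfer-learning pattern: keep a pre-trained feature extractor frozen and train only a small new "head" on a handful of labeled examples. It is a toy under stated assumptions and not the article's method: `pretrained_features` is a hypothetical stand-in for a real pre-trained model (for example, the penultimate layer of a network trained on ImageNet), and the six data points are invented.

```python
import math


def pretrained_features(x):
    """Hypothetical stand-in for a frozen, pre-trained feature extractor."""
    return [x[0] + x[1], x[0] - x[1]]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_head(data, labels, lr=0.5, epochs=200):
    """Train only a logistic-regression head; the features stay frozen."""
    feats = [pretrained_features(x) for x in data]
    w = [0.0] * len(feats[0])
    b = 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            err = p - y  # gradient of the log loss w.r.t. the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b


def predict(x, w, b):
    f = pretrained_features(x)
    score = sum(wi * fi for wi, fi in zip(w, f)) + b
    return 1 if sigmoid(score) >= 0.5 else 0


# A deliberately tiny, invented "domain-specific" dataset.
small_data = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3),
              (1.0, 0.9), (0.8, 1.0), (0.9, 0.7)]
small_labels = [0, 0, 0, 1, 1, 1]

w, b = fit_head(small_data, small_labels)
print([predict(x, w, b) for x in small_data])
```

Only the two weights and the bias of the head are learned here, which is why six examples are enough. The same reasoning explains the caveat in the article: if the frozen features do not capture what matters in the new domain, no amount of head training on small data will recover it.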

From our Living Dictionary:

“Classification”

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

In case you missed it:

Reliabilism and the Testimony of Robots

In this paper, the author Billy Wheeler asks whether we should treat the knowledge gained from robots as testimonial or as instrument-based knowledge. In other words, should we consider robots as able to offer testimony, or are they simply instruments similar to calculators or thermostats? Seeing robots as a source of testimony could shape the epistemic and social relations we have with them. The author’s main suggestion in this paper is that some robots can be seen as capable of testimony because they share the following human-like characteristic: their ability to be a source of epistemic trust.

To delve deeper, read the full summary here.
