AI Ethics Brief #112: AI and science fiction, algorithmic recourse, intersectionality in ML, and more ...

Can smaller neural networks achieve fairness on edge devices?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

⏰ This week’s Brief is a ~37-minute read.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for as little as $5/month. If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support the democratization of AI ethics literacy and to ensure we can continue to serve our community.

NOTE: When you hit the subscribe button, you may land on a Substack page that asks you to enter your email address again if you’re not already signed into Substack. Please do so, and you’ll be directed to the page where you can purchase the subscription.

This week’s overview:

✍️ What we’re thinking:

The Ethical AI Startup Ecosystem 04: Targeted AI Solutions and Technologies

Principios éticos para una inteligencia artificial antropocéntrica: consensos actuales desde una perspectiva global y regional.

🔬 Research summaries:

Visions of Artificial Intelligence and Robots in Science Fiction: a computational analysis

The Larger The Fairer? Small Neural Networks Can Achieve Fairness for Edge Devices

Let Users Decide: Navigating the Trade-offs between Costs and Robustness in Algorithmic Recourse

Assessing the Fairness of AI Systems: AI Practitioners’ Processes, Challenges, and Needs for Support

Towards Intersectionality in Machine Learning: Including More Identities, Handling Underrepresentation, and Performing Evaluation

📰 Article summaries:

Behind TikTok’s boom: A legion of traumatised, $10-a-day content moderators

What's in the US 'AI Bill of Rights' - and what isn't

We used to get excited about technology. What happened?

📖 Living Dictionary:

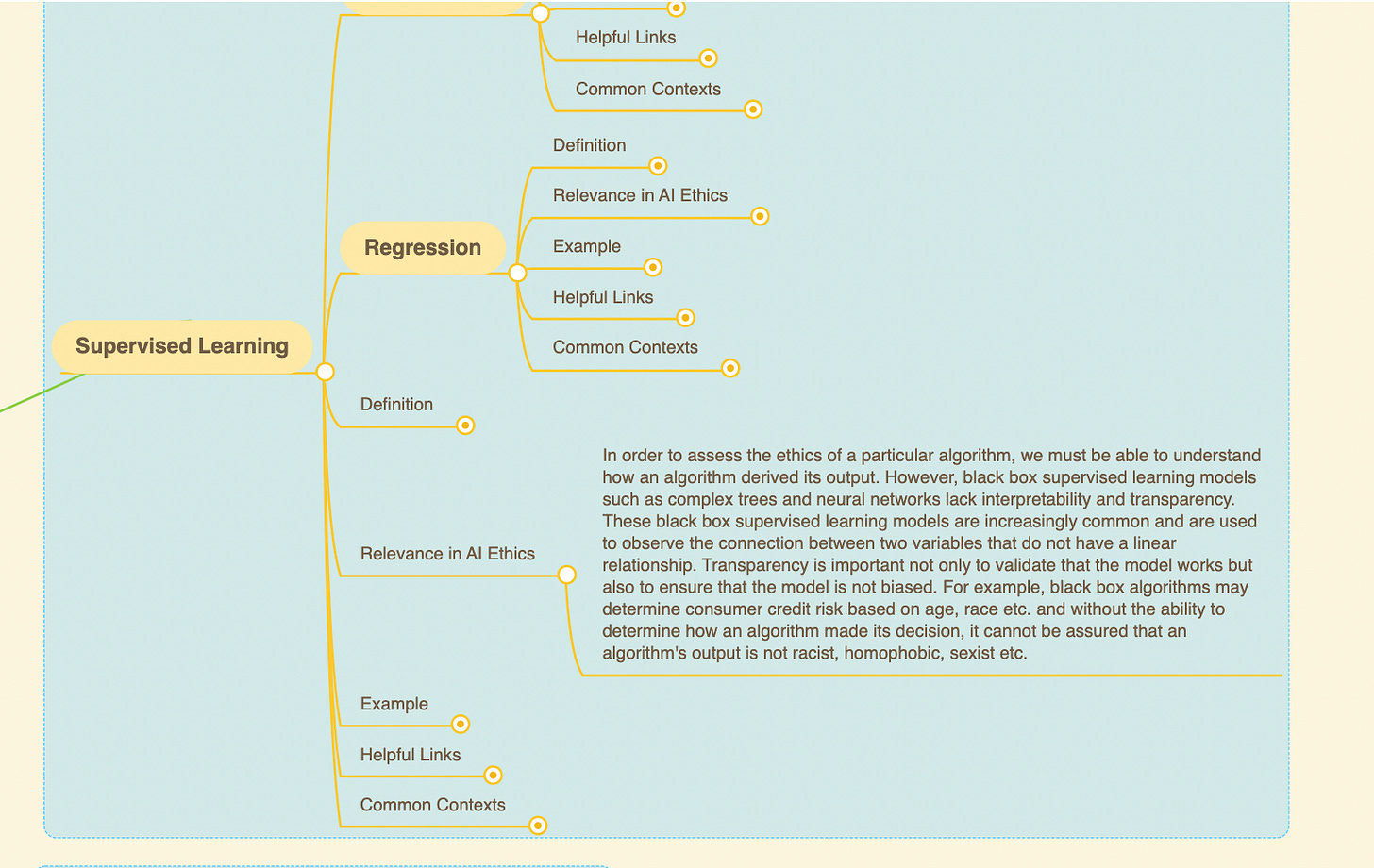

What is the relevance of supervised learning to AI ethics?

🌐 From elsewhere on the web:

WHAT THEY ARE SAYING: White House Blueprint for an AI Bill of Rights Lauded as Essential Step Toward Protecting the American Public

💡 ICYMI

Longitudinal Fairness with Censorship

But first, our call-to-action this week:

Come hang out with me in Dallas, TX on Nov. 8th!

I’ll be in Dallas, TX on Nov. 8th (Tuesday) and would love to hang out in person for a coffee with our reader community! We’ll chat about everything AI :)

Come work on my team at BCG - I’m hiring!

I’m hiring for two positions on my Responsible AI team at BCG - if you’re interested and would like a referral as a part of our awesome AI Ethics Brief community, click through below. Information about the positions is also included in the link.

Now I'm Seen: An AI Ethics Discussion Across the Globe

We are hosting a panel discussion to amplify the approach to AI Ethics in the Nepalese, Vietnamese and Latin American contexts.

This discussion aims to amplify the enriching perspectives within these contexts on how to approach common problems in AI Ethics. The event will be moderated by Connor Wright (our Partnerships Manager), who will guide the conversation to best engage with the different viewpoints available.

This event will be online via Zoom. The Zoom link will be sent 2-3 days prior to the event taking place.

✍️ What we’re thinking:

The Ethical AI Startup Ecosystem 04: Targeted AI Solutions and Technologies

In the other categories of EAIDB, we see a more significant number of companies focusing on horizontal game plans as a method of diversifying customer acquisition. We call these companies generalists. For example, many companies begin in MLOps but expand to include aspects of GRC once it is understood that demand for responsible MLOps is somewhat limited in Europe and the United States. The idea is to attract potential customers from MLOps and GRC use cases.

On the opposite side of the spectrum are the “Targeted AI Solutions and Technology” companies (specialists). Most have focused on a particular set of use cases and emphasize the customer journey through those use cases. While there is no distinct advantage to one approach over the other (both have produced several high-performance startups), specialists have historically enjoyed higher funding rates. About 92% of the startups in this category have raised funding of some kind, as opposed to 77% and 78% for our “MLOps, Monitoring, and Explainability” and “Data for AI” categories, respectively.

To delve deeper, read the full article here.

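Principios éticos para una inteligencia artificial antropocéntrica: consensos actuales desde una perspectiva global y regional.

Note that this piece is in Spanish. It is part of our ongoing effort to expand into other languages and make AI ethics content more accessible.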

Artificial intelligence is one of the great engines of the fourth industrial revolution because of its impact on various industries and sectors of society. As the possibilities for using AI grow, its benefits become apparent, but so do warnings about the potential risks it poses and the ethical challenges associated with its design, development, and deployment. The paper deals with the risks associated with the use of intelligent systems and tries to answer why it must be guaranteed that the design, development, and deployment of artificial intelligence adhere to ethical principles. Based on a selection that seeks to be representative of various geographical areas of the world, the work also reviews relevant documents on the subject issued in the last five years, with the aim of drawing conclusions about the existing consensus on which principles are necessary to achieve an anthropocentric AI.

To delve deeper, read the full article here.

🔬 Research summaries:

Visions of Artificial Intelligence and Robots in Science Fiction: a computational analysis

How is AI portrayed in science fiction? While science fiction is a powerful tool, it sometimes gets interpreted as science non-fiction, which distances us from the reality of the technology.

To delve deeper, read the full summary here.

The Larger The Fairer? Small Neural Networks Can Achieve Fairness for Edge Devices

As AI democratization progresses, neural networks are being deployed more frequently on edge devices for a wide range of applications. As a result, fairness concerns are gradually emerging in areas such as face recognition and mobile medical applications. One fundamental question arises: what is the fairest neural architecture for edge devices? To address this challenge, a new work proposes a novel framework, FaHaNa.

To delve deeper, read the full summary here.

Let Users Decide: Navigating the Trade-offs between Costs and Robustness in Algorithmic Recourse

As machine learning (ML) models are increasingly being deployed in high-stakes applications, there has been growing interest in providing recourse to individuals adversely impacted by model predictions. However, recent studies suggest that individuals often implement recourses noisily and inconsistently [1]. Motivated by this observation, we introduce and study the problem of recourse invalidation in the face of noisy human responses. We propose a novel framework, EXPECTing noisy responses (EXPECT), by explicitly minimizing the probability of recourse invalidation in the face of noisy responses and demonstrate that more robust algorithmic recourse can be achieved at the expense of more costly recourse.
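To make the trade-off between cost and robustness concrete, here is a minimal sketch. It is not the EXPECT method from the paper. It assumes a hypothetical linear classifier (weights `w`, bias `b`) and isotropic Gaussian noise with standard deviation `sigma` on the recourse a person actually implements, a setting where the probability of invalidation has a closed form. All names and numbers are illustrative.

```python
import math

def score(w, b, x):
    """Linear decision score; the (assumed) model approves when score >= 0."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def recourse_to_margin(w, b, x, margin):
    """Smallest L2 change to x that brings the score up to `margin`."""
    step = (margin - score(w, b, x)) / sum(wi * wi for wi in w)
    return [xi + step * wi for xi, wi in zip(x, w)]

def l2_cost(x, x_new):
    """Cost of a recourse, measured here as Euclidean distance."""
    return math.sqrt(sum((a - c) ** 2 for a, c in zip(x, x_new)))

def invalidation_probability(w, b, x_new, sigma):
    """P(score(x_new + eps) < 0) for eps ~ N(0, sigma^2 I)."""
    w_norm = math.sqrt(sum(wi * wi for wi in w))
    z = score(w, b, x_new) / (sigma * w_norm)
    return 0.5 * (1.0 + math.erf(-z / math.sqrt(2.0)))  # standard normal CDF at -z

# Hypothetical model, rejected individual, and noise level.
w, b = [3.0, 4.0], -10.0
x = [1.0, 1.0]
sigma = 0.5

results = []
for margin in (0.0, 1.0, 5.0):
    x_new = recourse_to_margin(w, b, x, margin)
    results.append((margin, l2_cost(x, x_new),
                    invalidation_probability(w, b, x_new, sigma)))

for margin, cost, p in results:
    print(f"margin={margin:.1f}  cost={cost:.2f}  P(invalidated)={p:.3f}")
```

In this toy setting, a recourse that lands exactly on the decision boundary is the cheapest but is invalidated about half the time, while recourses that push further past the boundary cost more and are invalidated less often.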

To delve deeper, read the full summary here.

Assessing the Fairness of AI Systems: AI Practitioners’ Processes, Challenges, and Needs for Support

Various tools and processes have been developed to support AI practitioners in identifying, assessing, and mitigating fairness-related harms caused by AI systems. However, prior research has highlighted gaps between the intended design of such resources and their use within particular social contexts, including the role that organizational factors play in shaping fairness work. This paper explores how AI teams use one such process—disaggregated evaluations—to assess fairness-related harms in their own AI systems. We identify AI practitioners’ processes, challenges, and needs for support when designing disaggregated evaluations to uncover performance disparities between demographic groups.
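As a rough illustration of what a disaggregated evaluation computes, the sketch below breaks a single metric (accuracy) down by demographic group. The function name, the toy labels, and the group identifiers are all hypothetical; the paper studies how teams design such evaluations, not this particular code.

```python
from collections import defaultdict

def disaggregated_accuracy(y_true, y_pred, groups):
    """Return accuracy computed separately for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}

# Toy data (made up for illustration only).
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 1, 0]
groups = ["a", "a", "a", "b", "b", "b"]

per_group = disaggregated_accuracy(y_true, y_pred, groups)
gap = max(per_group.values()) - min(per_group.values())
print(per_group, gap)
```

A single aggregate accuracy of 50% would hide the fact that, on this toy data, group "a" is served twice as well as group "b".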

To delve deeper, read the full summary here.

Towards Intersectionality in Machine Learning: Including More Identities, Handling Underrepresentation, and Performing Evaluation

We consider the complexities of incorporating intersectionality into machine learning and how this involves much more than simply including more axes of identity. We consider the next steps: deciding which identities to include, handling the increasingly small number of individuals in each group, and performing a fairness evaluation on a large number of groups.
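The shrinking-group problem can be seen in a few lines. The sketch below uses made-up records and hypothetical attribute names (`gender`, `age`) to count how many individuals fall into each intersectional group and to flag groups below a chosen minimum size. It only illustrates the counting issue and is not a method from the paper.

```python
from collections import Counter

def intersectional_group_sizes(records, axes):
    """Count individuals in each combination of the given identity axes."""
    return Counter(tuple(record[axis] for axis in axes) for record in records)

def underrepresented(sizes, min_size):
    """List the groups that have fewer than `min_size` members."""
    return sorted(group for group, n in sizes.items() if n < min_size)

# Made-up records for illustration only.
records = [
    {"gender": "F", "age": "young"},
    {"gender": "F", "age": "young"},
    {"gender": "F", "age": "young"},
    {"gender": "F", "age": "old"},
    {"gender": "M", "age": "young"},
    {"gender": "M", "age": "young"},
    {"gender": "M", "age": "old"},
    {"gender": "M", "age": "old"},
]

by_gender = intersectional_group_sizes(records, ["gender"])
by_both = intersectional_group_sizes(records, ["gender", "age"])
small_groups = underrepresented(by_both, min_size=2)
print(dict(by_gender), dict(by_both), small_groups)
```

With one axis, each group here has four members; adding a second axis already leaves one group with a single individual, which makes any per-group metric for it very noisy.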

To delve deeper, read the full summary here.

📰 Article summaries:

Behind TikTok’s boom: A legion of traumatised, $10-a-day content moderators

What happened: TikTok’s rapid growth in Latin America has meant that hundreds of moderators in Colombia work six days a week, with some paid as little as £235 a month (compared to about £2,000 a month for content moderators based in the UK). The workers the Bureau interviewed for this article worked on TikTok content but were contracted through Teleperformance, a multinational services outsourcing company with more than 42,000 workers in Colombia.

Why it matters: TikTok’s recommendation algorithm is widely considered one of the most effective applications of AI worldwide because it can accurately identify the content each user finds appealing to display on their “For You Page.” However, TikTok uses human workers alongside AI to help keep its platform scrubbed of harmful content. This work is especially tricky when moderators are asked to quickly remove content that nobody has ever seen before.

Between the lines: The mental harms of content moderation work are well-documented, with symptoms including depression, anxiety, and sleep loss. These impacts on mental health were exacerbated after the shift to working from home because Teleperformance rolled out extensive surveillance systems to monitor its employees. Workers in Colombia had been pressured to sign contracts giving the company the right to install cameras in their homes. Often, if workers did not get through a certain number of videos, they could lose out on a monthly bonus worth up to a quarter of their salary.

What's in the US 'AI Bill of Rights' - and what isn't

What happened: Approximately 60 countries now have National AI Strategies, and many have or are creating policies that allow for the responsible use of technology. Last week, the US White House Office of Science and Technology Policy (OSTP) released a “Blueprint for an AI Bill of Rights,” which provides a framework for how government, technology companies, and citizens can work together to ensure more accountable AI.

Why it matters: The Blueprint is meant to “help guide the design, use, and deployment of automated systems to protect the American Public.” The principles are non-regulatory and non-binding and are being advertised as a “Blueprint” rather than an enforceable “Bill of Rights” with legislative protections. The Blueprint contains five principles: (1) Safe and Effective Systems, (2) Algorithmic Discrimination Protections, (3) Data Privacy, (4) Notice and Explanation, and (5) Human Alternatives, Consideration, and Fallback.

Between the lines: Some advocates for government control believe the Blueprint doesn’t go far enough because it doesn’t include enough checks and balances. Meanwhile, some tech execs fear that regulation could stifle AI innovation. On the other hand, the Blueprint can be seen as a great starting point. Policy experts have highlighted the protections this document could offer various groups, including Black and Latino Americans.

We used to get excited about technology. What happened?

What happened: The priorities in our tech ecosystem have changed, contributing to a growing unease amongst individuals who work in the field or study it. Consumer tech development used to revolve around the goal of designing and building something of value to people while giving them a reason to buy it. But this vision of tech is outdated. For example, it is no longer enough for a refrigerator to keep food cold; today’s version also offers cameras and sensors.

Why it matters: While privacy risks are a key concern, this change also reveals the incentives that currently drive the entire model for innovation. From the perspective of tech companies, rather than simply aiming for one profit-taking transaction, it has become much more appealing to design a product that will extract a monetizable data stream from every buyer. This leads to recurring revenue for years to come.

Between the lines: “The visible focus of tech culture is no longer on expanding the frontiers of humane innovation—innovation that serves us all.” One of the dangers is that today’s technology is no longer focused on our civilizational needs. Indispensable technologies like roads, power grids, and transit systems used to be in the spotlight. But now, many of our resources have shifted to speed up the development of data-heavy consumer devices and apps. Unfortunately, common themes in our tech ecosystem have increasingly started to include domination, surveillance, and control.

📖 From our Living Dictionary:

What is the relevance of supervised learning to AI ethics?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

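WHAT THEY ARE SAYING: White House Blueprint for an AI Bill of Rights Lauded as Essential Step Toward Protecting the American Public

Abhishek Gupta, founder and principal researcher, Montreal AI Ethics Institute: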

“It does a very, very good job of moving the ball forward, in terms of what we need to do, what we should do and how we should do it…It harmonizes both technical design interventions and organizational structure and governance as a joint objective which we’re seeking to achieve, rather than two separate streams to address responsible AI issues.” [Statement, 10/7/22]

💡 In case you missed it:

Longitudinal Fairness with Censorship

AI fairness has gained attention within the AI community and broader society, with many fairness definitions and algorithms being proposed. Surprisingly, there is little work quantifying and guaranteeing fairness in the presence of censorship. To this end, this paper rethinks fairness and reveals a limitation of the existing fairness literature: it assumes certainty about the class label, which limits its real-world utility.

To delve deeper, read the full summary here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.