The AI Ethics Brief #169: From Helpful to Harmful - The Hidden Costs of Agentic AI

Exploring agentic AI's privacy risks alongside web governance shifts, healthcare automation challenges, and the global AI literacy crisis.

Welcome to The AI Ethics Brief, a bi-weekly publication by the Montreal AI Ethics Institute. We publish every other Tuesday at 10 AM ET. Follow MAIEI on Bluesky and LinkedIn.

📌 Editor’s Note

In this Edition (TL;DR)

We explore how agentic AI systems are breaking down digital privacy boundaries by requiring unprecedented access across apps and platforms. Signal's president calls this a "profound" threat to privacy and security. We also examine how the language of "agency" conflates automated task completion with meaningful human choice.

Cloudflare's "Content Independence Day" announcement marks a pivotal shift from opt-out to compensation models for AI training data, defaulting to blocked access for new domains and potentially reshaping how AI companies acquire training content.

The UK’s plan for an AI-enabled NHS promises to make AI every doctor's and nurse's trusted assistant. However, automation failures such as the Post Office Horizon scandal highlight the risks of rushing AI deployment in critical systems without adequate testing, transparency, and accountability measures.

In our AI Policy Corner series with the Governance and Responsible AI Lab (GRAIL) at Purdue University, we examine Japan's AI Promotion Act, which represents a strategic bet on light-touch regulation to become "the most AI-friendly country in the world" through voluntary compliance rather than punitive enforcement.

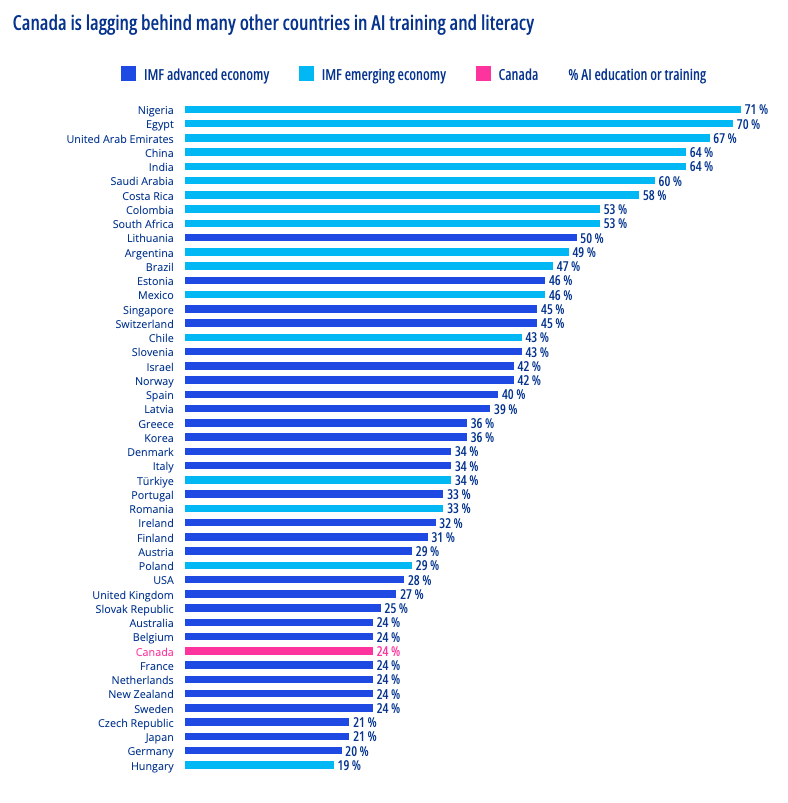

A KPMG-University of Melbourne study reveals Canada's alarming AI literacy gap, ranking 44th out of 47 countries in AI knowledge and training, highlighting the urgent need for coordinated institutional responses and dedicated public AI literacy infrastructure across the country.

Brief #169 Banner Image Credit: Web of Influence I by Elise Racine, featured in The Bigger Picture, licensed under CC-BY 4.0. View the work on Better Images of AI here.

🔎 One Question We’re Pondering:

Are we trading away human agency in the name of convenience, and if so, how do we recalibrate our relationship with the technologies that mediate our lives?

Recent developments point to the convergence of several trends: the expansion of agentic AI systems, the integration of AI into enforcement infrastructure, and a shifting understanding of human autonomy in digital environments. As discussed in Brief #168, the growing use of algorithmic surveillance in immigration enforcement highlights how these trajectories are beginning to intersect. Together, they raise urgent questions about how technologies built for convenience are quietly reinforcing systems of monitoring and control.

At SXSW, Signal President Meredith Whittaker warned that so-called agentic AI systems pose “profound” security and privacy concerns. Designed to perform tasks on a user’s behalf, such as booking tickets, messaging contacts, or updating calendars, these systems require elevated permissions across various apps, web browsers, calendars, and communication platforms. Whittaker described this as breaking the “blood-brain barrier” between apps and operating systems, creating a seamless but opaque data pipeline between intimate digital behaviours and remote cloud servers.

Implemented at scale, these systems would fundamentally reshape digital privacy boundaries. Agentic systems must access, summarize, and act upon a user’s data, including credit card information, messages, and location history. They often do so with root-level permissions, which could undermine both user consent and platform integrity. Whittaker noted that these operations are unlikely to remain fully on-device and will increasingly rely on cloud processing, creating systemic vulnerabilities to surveillance and data misuse.

Vilas Dhar, president and trustee of the Patrick J. McGovern Foundation, adds crucial nuance to this picture. He argues that "agentic" systems lack genuine human agency, which involves compassion, kindness, empathy, and protection of our vision for a just world. In his view, we have conflated automated task completion with meaningful human choice and expression.

Research from the Stanford Institute for Human-Centered Artificial Intelligence (HAI) reinforces this concern. Its recent study on worker-AI interaction found that most workers prefer AI systems that augment human control rather than replace it. Workers consistently expressed a desire for AI tools that assist with mundane tasks while preserving decisional autonomy. This preference sharply contrasts with the current deployment path of agentic systems, which often sidestep user input in favour of end-to-end automation.

Taken together, these developments highlight a systemic risk: that technologies positioned as helpful and efficient are simultaneously creating a data substrate that can be co-opted for surveillance and control. In parallel, enforcement agencies are expanding predictive monitoring programs, using social media sentiment, geolocation data, blockchain activity, and communication metadata to identify risk patterns. These two trajectories, agentic AI and predictive enforcement, are not isolated. They are deeply interoperable.

Each interaction with an AI assistant generates behavioural data, which can be used to train future surveillance models. Each API permission granted to a digital agent expands its reach across personal and institutional systems. Without strong data minimization safeguards, algorithmic transparency, and constraints on cross-system integration, the infrastructure built for convenience may serve broader systems of control.

Preserving meaningful human agency will require technical, legal, and civic interventions:

Adopting privacy-focused defaults and granular consent models.

Demanding greater transparency on what agentic systems access and how that data is stored or shared.

Supporting legislation that limits interoperability between commercial AI agents and government surveillance systems.

The core challenge is deciding what kinds of futures we encode through system architecture. Preserving human agency requires intentionality about the systems we build and the values they reflect.

Please share your thoughts with the MAIEI community in the comments below.

🚨 Here’s Our Take on What Happened Recently

From Opt-Out to Compensation: The Next Phase of AI Web Governance

Cloudflare’s July 1, 2025 announcement marks a pivotal shift in the governance of AI access to web content. Often described as the backbone of the modern internet, Cloudflare routes traffic for approximately 20 percent of global web activity. Its decision to block AI bots by default for all new customer domains establishes the first infrastructure-level barrier to automated data extraction.

Framed as “Content Independence Day,” the update signals Cloudflare’s intent to move beyond opt-out protections to actively support content licensing models. Whereas the company’s July 3, 2024 release introduced a one-click tool to block AI bots and scrapers from accessing websites, this new approach envisions a future where content used for AI training is not only protected by default but also compensated directly.

📌 MAIEI’s Take and Why It Matters:

Cloudflare’s policy evolution reflects broader tensions around the economics of AI development. Over the past year, legal and technical debates have converged on the question of how generative models acquire and use training data. As covered in Brief #168, recent U.S. court rulings have largely sided with AI companies on fair use grounds, even in cases involving unauthorized content scraping. At the same time, institutions such as the U.S. Copyright Office have experienced significant internal disruption, including the controversial dismissal of its head, the Register of Copyrights. These events illustrate the uncertain governance landscape surrounding cultural data in the AI era.

Saanya Ojha, a partner at Bain Capital Ventures, notes that Cloudflare’s new stance reframes the structure of participation in the AI ecosystem, redefining the balance of power between AI companies and the open web.

From open to closed.

From assumed permission to enforced consent.

From passive scraping to active negotiation.

This shift signals a broader reckoning with the economics of data. Rather than treating content scraping as a neutral technical act, Cloudflare reframes it as a form of uncompensated extraction. By offering web services that default to non-consent, Cloudflare has quietly laid the groundwork for a content licensing infrastructure that prioritizes negotiated consent over automated extraction.

This reframing has direct implications for the future of copyright, consent, and AI development. If the open web adopts similar policies, AI developers may be forced to negotiate access, pay licensing fees, or build new data partnerships. This could benefit content creators who have long been excluded from the value chains of AI innovation. The shift also introduces new tensions: fragmentation across platforms and inconsistent implementation may produce further disparities in whose content is represented in training data and whose is not.

While Cloudflare’s update does not resolve questions of copyright ownership or authorship, it introduces a technical intervention that can shift the incentive landscape. The ability to block AI scraping by default is a step toward more equitable negotiation, but it remains one piece of a broader debate about how cultural data is sourced, protected, and monetized.

Together with recent legal decisions, these changes reveal a digital ecosystem in a state of flux. As AI-generated content becomes more pervasive and harder to distinguish from human work, the architecture of consent and the norms of participation are being rewritten in real time. Cloudflare’s decision, Ojha’s framing, and the evolving role of copyright enforcement all point to the same conclusion: the governance of AI is inseparable from the governance of information itself.

NHS Deploys AI Early Warning System in Maternity Care, But the Bigger Picture Raises Critical Questions

Last month, the UK's National Health Service (NHS) announced the deployment of an AI early warning system in maternity care, set to launch in November 2025. This system will monitor near real-time hospital data to detect anomalies such as elevated rates of stillbirths, brain injury, or neonatal death, and trigger rapid inspections when necessary. The rollout follows years of reports citing failures in maternity care delivery across NHS trusts.

The announcement coincides with the UK’s Fit for the Future – 10 Year Health Plan for England, which outlines the digital transformation of NHS services, “making AI every nurse’s and doctor’s trusted assistant, saving them time and supporting them in decision making.”

Key pillars include:

Clinical Applications: AI for diagnostics, decision support, drug discovery, and predictive care

Administrative AI: Scribes, care plan generation, smart hospitals, and single patient records

Patient-Facing Tools: Wearables, triage bots, remote assessments, and 24/7 AI health assistants

The goal is for all NHS hospitals to become “AI-enabled” within the plan’s timeframe. This effort is supported by a new Health Data Research Service (HDRS) and large-scale genomic initiatives, with the stated ambition of making the NHS the most AI-enabled care system in the world.

📌 MAIEI’s Take and Why It Matters:

While AI has the potential to improve patient outcomes and reduce clinician burden, the risks are significant if systems are poorly designed or deployed without transparency and accountability.

1. Bias and False Positives/Negatives

AI systems can replicate and amplify systemic biases. As highlighted in Brief #102, the U.S. tool ImpactPro disproportionately misclassified Black patients as lower risk because it relied on a flawed proxy, using past healthcare costs to estimate health needs. In the NHS context, false negatives in triage could delay care, while false positives may overwhelm staff and distress patients unnecessarily. Healthcare workers may also develop automation bias, overrelying on AI recommendations even when clinical judgment suggests otherwise. This could compromise patient safety when systems fail.

2. Lessons from Automation Failures

Two UK cases show how automation without adequate redress mechanisms can destroy lives. In the Post Office Horizon scandal, over 900 sub-postmasters were wrongly prosecuted on the basis of incorrect information produced by the Horizon computer system. In a separate case, a Department for Work and Pensions (DWP) algorithm falsely flagged over 200,000 individuals for fraud. The urgency to address maternity care failures should not lead to the rushed deployment of AI without rigorous testing and safeguards.

3. Data Governance and Patient Autonomy

The NHS aims to make patient data widely accessible for innovation, including to private firms, which raises ethical questions. Even anonymized datasets carry re-identification risks, especially with longitudinal “cradle-to-grave” coverage. Because anonymized data falls outside the scope of the UK GDPR, clarifying privacy safeguards is all the more urgent. Beyond consent, patients need meaningful ways to understand, question, or appeal AI-driven decisions about their care. This amounts to true data autonomy, not just access rights.

4. Regulatory Gaps and "Faster" Approval Routes

While the 10 Year Health Plan promises faster regulatory reviews and ethical governance frameworks, the emphasis on "faster, risk proportionate and more predictable routes to market" (p. 117) raises concerns about potentially lowered safety thresholds or streamlined approval processes that could compromise thorough evaluation. The plan lacks specifics on how deployed AI models will be audited, monitored for bias or hallucination, or corrected when errors occur.

5. Transparency and Liability

The plan does not specify how AI performance data will be communicated to the public or how liability will be handled when AI recommendations prove wrong. Currently, individual care providers are responsible for explaining how patient data is used, with NHS guidance stating that organizations are legally obliged to make this information readily available, including patients’ right to object. This may be manageable on a case-by-case basis today, but as AI tools are deployed system-wide, public-facing accountability measures will be needed. Healthcare workers will need clarity on their role and liability when AI systems provide incorrect guidance.

6. International Context and Timeline Concerns

While this initiative could set a global standard, other regions are taking a more cautious approach. The EU AI Act establishes specific requirements for high-risk AI systems in healthcare, including mandatory third-party conformity assessments and access to regulatory sandboxes for testing before market deployment. The November 2025 deployment timeline for maternity care, while addressing urgent safety concerns, may not allow sufficient time for comprehensive testing and staff training across the complex NHS system.

The digitalization of the NHS has the potential to advance medical research and deliver care more efficiently while reducing bureaucratic burden on healthcare workers. However, the deployment of maternity care AI will serve as a critical test case for whether the UK can implement AI responsibly at scale, striking a balance between innovation, patient safety, equity, and public trust.

Did we miss anything? Let us know in the comments below.

💭 Insights & Perspectives:

AI Policy Corner: Japan’s AI Promotion Act

This edition of our AI Policy Corner, produced in partnership with the Governance and Responsible AI Lab (GRAIL) at Purdue University, examines Japan's AI Promotion Act. Enacted in May 2025, it is the country's first comprehensive AI legislation. The Act represents Japan's strategic pivot toward becoming "the most AI-friendly country in the world," prioritizing innovation through light-touch regulation that promotes the research, development, and utilization of AI technologies. Key provisions include:

- establishing basic principles for AI policy;
- creating an AI Strategy Headquarters within the Cabinet, led by the Prime Minister; and
- implementing measures to enhance international competitiveness while maintaining domestic research and development (R&D) capabilities.

Notably, the Act relies on voluntary compliance and imposes no penalties for non-compliance. Instead, it authorizes government investigations and countermeasures when citizens' rights are infringed. This reflects Japan's deliberate approach: encouraging investment while governing AI through reputational incentives rather than punitive enforcement mechanisms.

To dive deeper, read the full article here.

Study Shows Canada Among the Least AI-Literate Nations, Ranking 44th out of 47 Countries

A recent KPMG-University of Melbourne study places Canada 44th out of 47 countries in AI training and literacy. It also ranks Canada 28th among the 30 advanced economies as defined by the International Monetary Fund (IMF). These results reveal critical gaps that reinforce Kate Arthur's call for a national AI literacy strategy. They also support our case for establishing an independent Office for Public AI Literacy within the Ministry of AI and Digital Innovation.

The study found that fewer than four in 10 (38%) Canadians reported moderate or high knowledge of AI, compared to 52% globally. Additionally, less than one-quarter (24%) of Canadian respondents indicated that they had received AI training, compared to 39% globally. When it comes to trust in AI systems, Canada ranked 42nd out of 47 countries and 25th out of 30 advanced economies.

For a G7 nation, these rankings represent a national competitiveness challenge that requires coordinated, institutional responses through dedicated public AI literacy infrastructure.

To dive deeper, read the results of the KPMG-University of Melbourne study here.

❤️ Support Our Work

Help us keep The AI Ethics Brief free and accessible for everyone by becoming a paid subscriber on Substack or making a donation at montrealethics.ai/donate. Your support sustains our mission of democratizing AI ethics literacy and honours Abhishek Gupta’s legacy.

For corporate partnerships or larger contributions, please contact us at support@montrealethics.ai.

✅ Take Action:

Have an article, research paper, or news item we should feature? Leave us a comment below — we’d love to hear from you!
