The AI Ethics Brief #181: We're Stronger Together: Collective Action & AI Ethics

Happy New Year! We kick off our first Brief of 2026 with our State of AI Ethics Report Part V: Collective Action, and mark the return of our Recess collaboration with Encode Canada

Welcome to The AI Ethics Brief, a bi-weekly publication by the Montreal AI Ethics Institute. We publish every other Tuesday at 10 AM ET. Follow MAIEI on Bluesky and LinkedIn.

📌 Editor’s Note

In this Edition (TL;DR)

Happy 2026! From everyone at MAIEI, we wish you optimism and strength as we navigate through the AI ethics space.

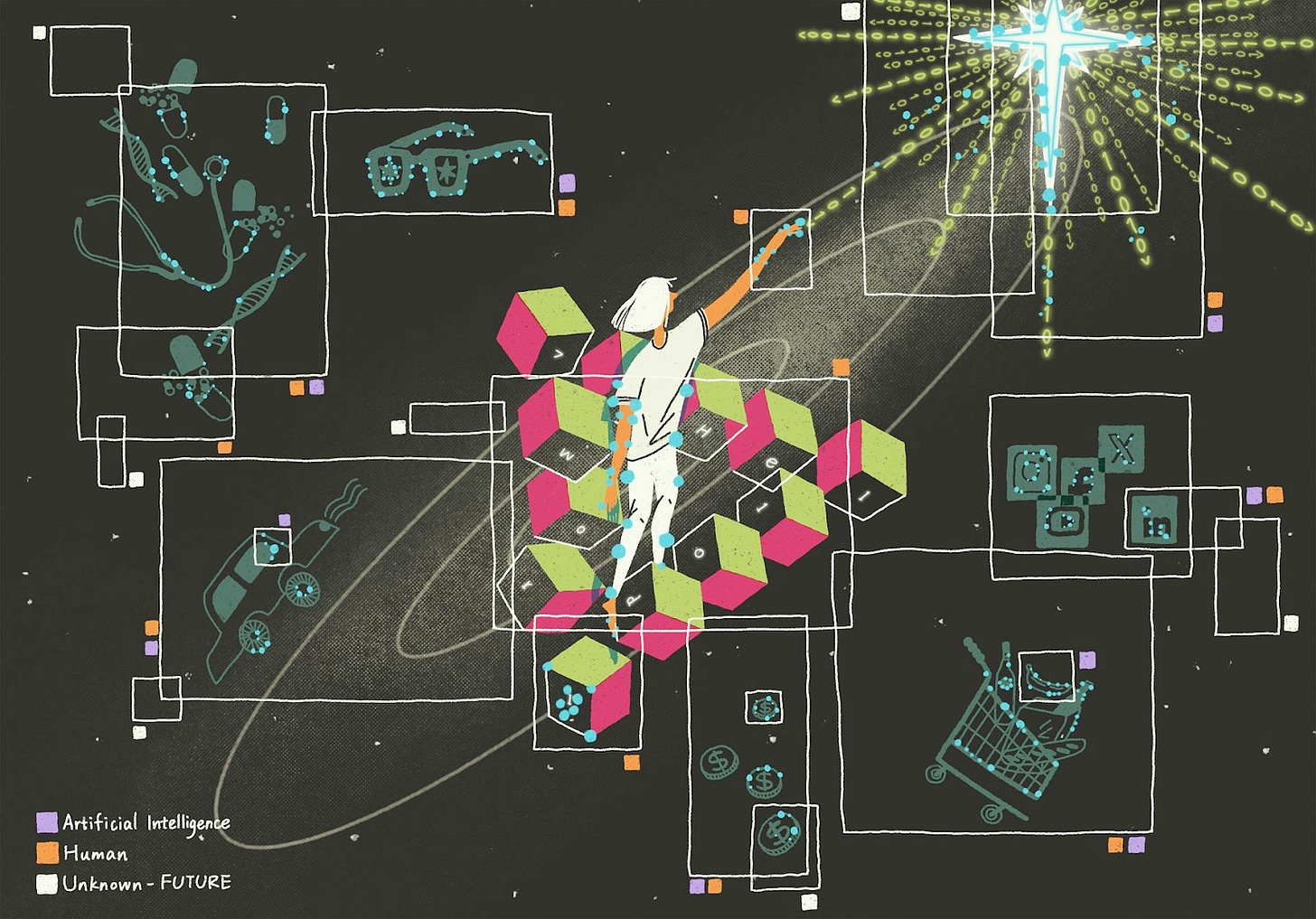

Collective Action: In Part V of the State of AI Ethics Report (SAIER), we explore emerging initiatives that seek to reclaim decision-making power over AI, across literacy, civil society and government.

Recess: Your wrist, your data, their access: Are you trading convenience for control? This week marks the return of our Recess series, a collaboration with Encode Canada that showcases student insights into current AI applications. This week’s piece analyzes AI wearables and the ethical grey areas that arise regarding privacy and data collection.

What connects these stories:

2026 has certainly begun on a low note, with tensions escalating rapidly across America. But it is in this context that we call on our readers to find optimism in the good things that make us human. Empathy, care and compassion are just some of the things we can share with one another and with nature; a collective demonstration of solidarity that transcends the superfluous relations dictated by many social norms.

It is in this context that many initiatives challenging social systems have emerged. Collective action is given centre stage in the final part of the SAIER. Unionization, as Ana Brandusescu (McGill University) notes, has become a major force for good across AI ecosystems and may remain key to future movements resisting AI in 2026. But what can people and collectives draw on when campaigning for better AI practices? “Critical AI literacy” may be the answer, based on Tania Duarte’s (We and AI) analysis, as this emergent field of research and study tackles the entanglement of AI in larger systems of oppression head-on. Related questions are posed by Kennedy O’Neil (Encode Canada), who challenges individuals to ask about the extractive data practices embedded in wearable technologies.

With these insights and the many others in the present Brief, the SAIER, and those we produce in the year to come, we hope you find not only the strength to handle adverse news about the world, but also a sense of community. Over 20,000 people are joining you on this journey, and many more believe in and fight for AI ecosystems that reflect a positive AI future.

Please share your thoughts with the MAIEI community:

🎉 Happy 2026!

2026 presents itself as most years do: a beacon of hope for positive change. As January follows its course, we may realize that nothing but the year on our calendars has changed. Government policy advances at a sluggish pace, the tangible impacts of climate change remain ever-present, geopolitical conflicts persist or flare up, and power continues to concentrate in the hands of a few individuals and corporations.

It is in this complex context that we wish you a happy 2026 – not as a naïve response to relentless local, national and international injustices, but as an indicator of what can drive the positive change we want to see as we enter the new year.

Before signing off in 2025, we promised we would bring you and all Brief readers more energy in 2026. Today’s Brief, MAIEI’s 181st and the year’s first, is a sign of what is to come: not despair, doomerism, or frustration, but optimism about the real and potential initiatives people can lead to make a difference.

🎉 SAIER is back - Part V: Collective Action

On November 4th, 2025, we launched SAIER Volume 7, a snapshot of the global AI ethics arena, with perspectives from Canada, the US, Africa, Asia and Europe. Of course, not all voices could be captured, but SAIER Volume 7 presents a holistic understanding of the current state of play in the world of AI ethics. Part V of the report, which includes nine essays over three chapters, serves as a primer on collective initiatives that seek to empower the masses rather than accept AI as a technology controlled by and benefiting the few.

Chapter 15 tackles AI literacy, which Kate Arthur argues is a right and not a luxury; a necessity during the ongoing fourth industrial revolution. Arthur explains this has become obvious to policy-makers in the past year, mentioning initiatives developed across North America, Europe and Asia. But are AI literacy frameworks good enough? Tania Duarte (We and AI) calls into question the efficacy of many such programs, asking for confidence in assessing if and when their use is appropriate. Jae-Seong Lee (Electronics and Telecommunications Research Institute) brings Chapter 15 to a close with what may follow from AI literacy: not just understanding but co-creation and co-production. Lee uses the case study of Taiwan to explain co-creation, whereby “participants learn by doing: reviewing data, proposing policy alternatives, and translating technical issues into public dialogue.” Conversely, Amsterdam’s algorithm register serves to illustrate co-production, where “participants act as co-governors, deciding which functions to maintain, retire, or reform. This empowerment turns oversight into shared governance, embedding AI literacy in everyday democratic life.”

Who is needed to shape the AI literacy agenda? Chapter 16 of the SAIER suggests that civil society is central to the effort. Michelle Baldwin (Equity Cubed) and Alex Tveit (Sustainable Impact Foundation) begin the chapter by pointing to civil society’s potential for “ethical oversight” and practical implementation, given the sector’s experience with managing complex data ecosystems. In this regard, Baldwin and Tveit see Canada’s sociohistorical context as being particularly powerful for a civil society that informs decolonial and future-proof AI practices. Denise Williams (former CEO of the First Nations Technology Council) strengthens this case by articulating the approaches to innovation that would result from Indigenous forms of thought, specifically seven-generation thinking. In Williams’ words, seven-generation thinking “asks us to honour the generations who came before and consider carefully those yet to come.” In practice, Indigenous approaches to AI are already present in the OCAP® (Ownership, Control, Access, and Possession) and CARE (Collective benefit, Authority to control, Responsibility, and Ethics) principles. Yet civil society is also an adopter of AI technologies. Jenni Warren and Bryan Lozano (Tech:NYC Foundation) remind us of this in the closing of Chapter 16, which introduces case studies that illustrate what works and what doesn’t work when adopting AI tools across the sector.

A further important adopter of AI tools is presented in the final chapter of the SAIER: the public sector. Ana Brandusescu (McGill University) opens Chapter 17 with a deep dive into what collective action can look like in government settings. Brandusescu describes how labour unions and whistleblower protections make robust AI governance practices possible. Tariq Khan (London Borough of Camden Council) follows up on this idea with two main suggestions: let’s not celebrate the tenuous productivity gains of AI but the important improvements to accessibility; and let’s avoid public sector systems becoming beholden to the interests of Big Tech. Finally, Jennifer Laplante (Government of Nova Scotia) suggests building the tech futures of the public sector by “doing the unglamorous work first: strengthening foundations, aligning systems, training people, and building governance that supports safe innovation.”

💭 Insights & Perspectives:

Recess: Your wrist, your data, their access: Are you trading convenience for control?

This piece is part of our Recess series, featuring university students from Encode’s Canadian chapter at McGill University. The series aims to promote insights from university students on current issues in the field of AI ethics. In this article, Kennedy O’Neil analyzes AI wearables and the ethical grey areas that arise regarding privacy and data collection.

To dive deeper, read the full article here.

❤️ Support Our Work

Help us keep The AI Ethics Brief free and accessible for everyone by becoming a paid subscriber on Substack or making a donation at montrealethics.ai/donate. Your support sustains our mission of democratizing AI ethics literacy and honours Abhishek Gupta’s legacy.

For corporate partnerships or larger contributions, please contact us at support@montrealethics.ai.

✅ Take Action:

Have an article, research paper, or news item we should feature? Leave us a comment below — we’d love to hear from you!