The AI Ethics Brief #182: When Guardrails Fracture

Why 2026 marks the end of "business as usual" for AI ethics.

Welcome to The AI Ethics Brief, a bi-weekly publication by the Montreal AI Ethics Institute. We publish every other Tuesday at 10 AM ET. Follow MAIEI on Bluesky and LinkedIn.

📌 Editor’s Note

In this Edition (TL;DR)

ELITE App: ICE’s new Palantir-built geospatial targeting tool turns surveillance research into operational deportation infrastructure.

Venezuela & Greenland: Two developments reshaping assumptions underlying AI governance frameworks.

Professional Purpose: Clarifying where AI ethics energy is best spent in the current moment.

Four Things to Watch in 2026: Synthetic content, critical AI literacy, agentic surveillance, and labour organizing.

AI Policy Corner: South Korea’s AI Framework Act takes effect January 22, 2026.

Recess: How algorithmic social media is fragmenting the public sphere and what Canada can learn from the EU’s Digital Services Act.

What connects these stories: As international norms fracture (see: Venezuela & Greenland), the operational tools of enforcement are sharpening (see: Palantir’s ELITE). We explore what this divergence means for the future of AI advocacy.

🚨 Recent Developments: What We’re Tracking

The Surveillance Infrastructure Is Now Operational

When we released SAIER Volume 7 in November 2025, we documented the growing integration of surveillance technologies into enforcement systems. In January 2026, we can now point to a specific application: ELITE (Enhanced Leads Identification & Targeting for Enforcement) by Palantir.

What ELITE Does

According to reporting by 404 Media, internal ICE materials, and court testimony from a deportation officer:

Geospatial targeting: The Palantir app populates a map with potential deportation targets, allowing agents to draw shapes around neighbourhoods or apartment complexes and query all known individuals within that area.

Dossier generation: Each target has a profile including name, date of birth, photograph, and Alien Registration Number.

Confidence scoring: The system calculates “address confidence scores” (0-100) based on data recency and source reliability, including utility bills, medical records, DMV updates, and court appearances.

Data sources: The app integrates information from the Department of Health and Human Services (including Medicaid), along with federal repositories, utility providers, and court records.

As one deportation officer testified, agents use it “kind of like Google Maps,” but instead of finding restaurants, they identify locations with high concentrations of potential targets.

Why This Matters

ELITE represents the operationalization of what SAIER Volume 7’s social justice section documented as an emerging risk. The shift is significant:

From individual targeting to neighbourhood-level analysis: The tool enables bulk identification rather than case-by-case investigation.

From human judgment to statistical prioritization: Confidence scores determine which addresses receive enforcement attention.

From pilot programs to permanent infrastructure: Palantir’s $29.9 million ICE contract extension signals ongoing investment.

This is not a theoretical harm. In Woodburn, Oregon, raids have already been conducted based on ELITE-generated intelligence. In Minneapolis, the infrastructure is actively supporting enforcement operations.

Two Developments Reshaping the Governance Landscape

AI governance frameworks, from the EU AI Act to voluntary corporate commitments, generally assume a baseline of international law, institutional constraints, and good-faith consultation. Two January developments warrant attention for what they suggest about that baseline.

Venezuela: On January 3, 2026, U.S. military forces captured Venezuelan President Nicolás Maduro in Caracas and transferred him to New York to face narco-terrorism charges from a 2020 federal indictment. The UN Secretary-General stated he remains “deeply concerned that rules of international law have not been respected.”

Greenland: President Trump has intensified efforts to acquire Greenland, with the White House confirming “all options are on the table,” including military action against a NATO ally. On January 17, Trump announced tariffs on eight European countries, to remain in place until a deal is reached. In turn, European leaders warned in a joint statement that these tariff threats “undermine transatlantic relations and risk a dangerous downward spiral,” raising concerns about the stability of transatlantic data flows and trade agreements that underpin frameworks like the EU AI Act.

What This Means for AI Governance

AI governance frameworks depend on states honouring agreements, submitting to shared oversight, and engaging in good-faith negotiation. The EU AI Act’s extraterritorial reach, for instance, assumes trading partners will comply rather than retaliate. Cross-border data governance assumes mutual recognition of legal processes. Multilateral AI safety commitments, like those from the AI Safety Summits, assume participants won’t simply exit when constraints become inconvenient.

When a major power demonstrates willingness to bypass these norms in other domains, it raises practical questions: Will AI-specific agreements hold? Can smaller nations negotiate meaningful terms, or will market access be leveraged to override local governance choices? These are questions we’ll be following closely in 2026.

🔎 Looking Ahead

Strategic Outlook: Clarifying Our Purpose in 2026

In light of this crumbling international consensus, the current moment requires honesty about what pathways remain open for AI ethics work.

Here are some questions and thoughts on our minds as we go into 2026.

The Reality Check

Many in this field, MAIEI included, entered believing that evidence-based advocacy could steer institutional policy, i.e., that documenting harms, proposing frameworks, and engaging regulators would bend the curve toward safety. That era appears to be closing.

We should be clear-eyed: some of these pathways have narrowed. When corporate AI ethics teams are restructured into “compliance” functions, when consultation periods become procedural checkboxes, and when international coordination faces fundamental obstacles, the theory of change shifts.

A Return to Roots

This is not despair, and it’s not retreat. It’s clarification. The work of the Montreal AI Ethics Institute was never primarily about influencing powerful institutions from above. It has always been about:

Literacy: Building the capacity for communities to understand algorithmic systems shaping their lives.

Documentation: Creating the evidentiary record of how AI systems operate and whom they affect.

Solidarity: Connecting practitioners, researchers, and affected communities across sectors and borders.

These remain viable. In some ways, they’ve become more urgent.

Where We Focus Now

As we move through 2026, we see particular value in:

Supporting community-based resistance: Organizations helping people contest and navigate automated systems affecting them directly.

Building critical AI literacy: Not just technical understanding, but the capacity to ask who benefits, who bears costs, and who decides.

Maintaining documentation: The evidentiary record matters, even when it doesn’t immediately change policy.

We are evolving to meet the moment, and this community is our strongest asset. How can we best support your work in 2026? Leave a comment below or email us at support@montrealethics.ai

Four Things to Watch in 2026

1. Synthetic Content in Governance Contexts

AI “slop”, the low-quality, AI-generated content flooding digital platforms, was Merriam-Webster’s 2025 Word of the Year. The concern for 2026 is its application in governance contexts.

What to watch: AI-generated content that obscures the line between authentic public response and manufactured narrative, particularly during crises or contested political moments. Researchers at Graphika found that major state-sponsored propaganda campaigns have “systematically integrated AI tools,” often producing low-quality content, but at unprecedented volume.

Why it matters: When it becomes harder to distinguish genuine public sentiment from synthetic content, the informational foundations of democratic governance erode.

2. Critical AI Literacy as Community Infrastructure

Academic research on “critical AI literacy” has been developing frameworks for years. In 2026, we expect to see this work translated into practical community resources.

What to watch: Community organizations building capacity to understand algorithmic systems, not just how they work technically, but how they’re entangled with broader systems of power. The Open University, for example, released a framework for critical AI literacy skills in Spring 2025. Similar resources are emerging across educational and community contexts.

Why it matters: In the absence of robust top-down governance, bottom-up literacy becomes essential for communities to navigate automated systems affecting their lives.

3. Expansion of Agentic Surveillance Tools

ELITE represents a specific instantiation of a broader trend: AI systems that operate with minimal human oversight, using statistical confidence scores to prioritize enforcement actions.

What to watch: The extension of this approach beyond immigration enforcement into other domestic contexts: predictive policing, benefits administration, and housing decisions.

Why it matters: These tools shift accountability from individual decision-makers to algorithmic systems, creating what researchers have called a “responsibility vacuum.”

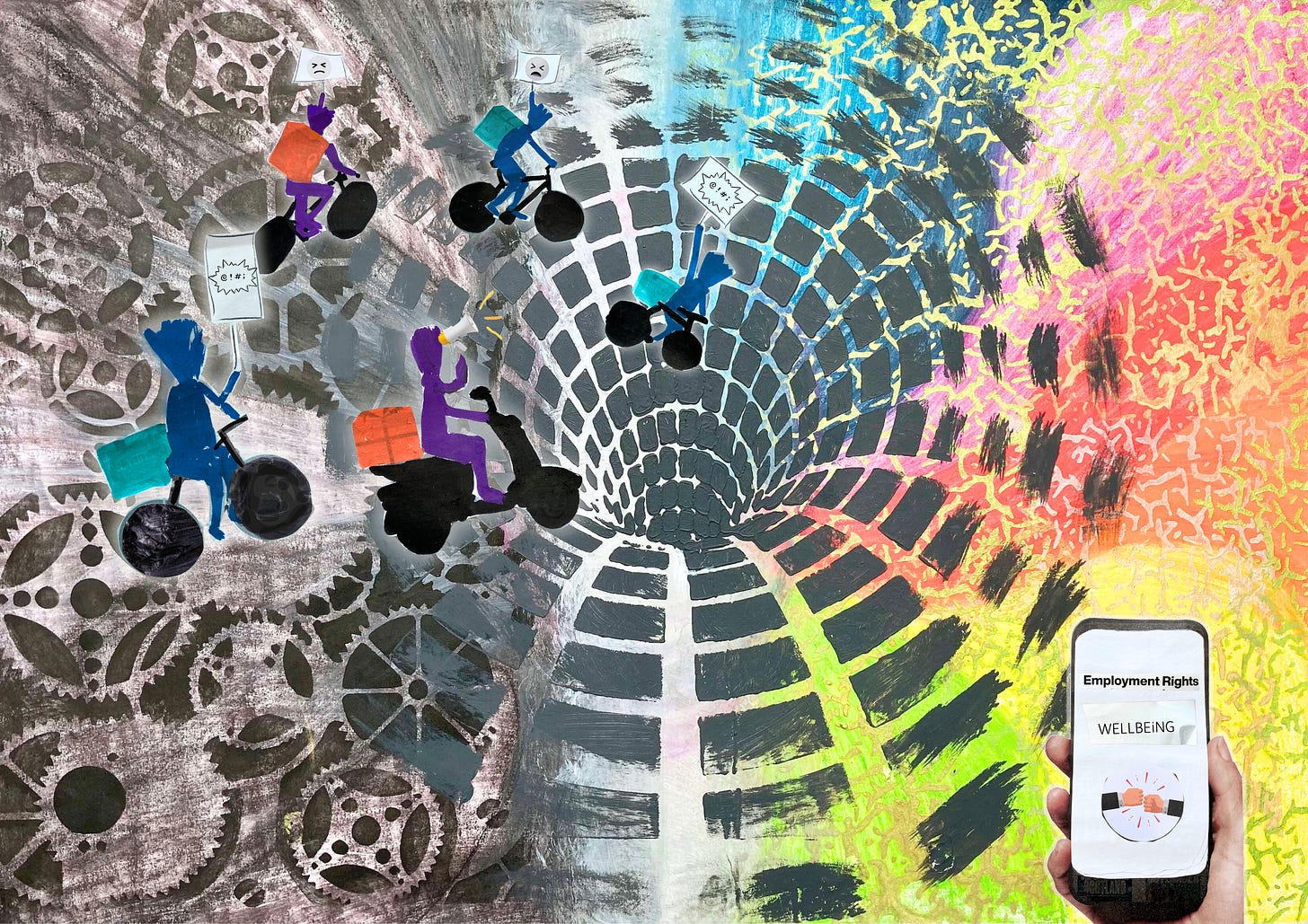

4. Labour Organizing as Ethical Guardrails

In October 2025, the AFL-CIO launched AI guidelines calling for transparency, human review of automated decisions, and the prohibition of AI as a surveillance tool. Major unions, including the WGA, SAG-AFTRA, and the International Longshoremen’s Association, have all won binding AI provisions.

In Canada, 2025 also marked a turning point: ACTRA’s Independent Production Agreement and British Columbia’s Master Production Agreement now include AI provisions built on consent, compensation, and control, requiring performer approval for digital replicas, payment for all uses, and safe data storage. The agreements also address “synthetic performers,” recognizing AI-generated assets as labour deserving compensation. (See SAIER Volume 7, Chapter 11.2, for full analysis by ACTRA’s Anna Sikorski and Kent Sikstrom.)

What to watch: Collective bargaining agreements establishing concrete AI constraints. ACTRA is also tracking Denmark’s copyright proposals, which would grant individuals copyright over their likeness and voice.

Why it matters: In the absence of comprehensive regulation, labour agreements provide sector-specific guardrails, though as ACTRA notes, unions cannot bear this issue alone.

💭 Insights & Perspectives:

AI Policy Corner: AI Governance in East Asia: Comparing the AI Acts of South Korea and Japan

This edition of our AI Policy Corner, produced in partnership with the Governance and Responsible AI Lab (GRAIL) at Purdue University, examines South Korea’s AI Framework Act and compares it with Japan’s AI Promotion Act. South Korea’s “Framework Act on the Development of Artificial Intelligence and Establishment of Trust Foundation” takes effect on January 22, 2026, making South Korea the first country to enforce a comprehensive AI regulatory framework. Both countries share the goal of national AI competitiveness, with Japan aspiring to be “the most AI-friendly country,” and South Korea aiming to become a “global top-three AI power.” However, South Korea’s framework addresses high-risk areas that Japan’s does not, while both remain more relaxed than the EU AI Act’s comprehensive obligations.

To dive deeper, read the full article here.

Recess: Reprogramming the Public Sphere: AI and News Visibility on Social Media

This piece is part of our Recess series, featuring university students from Encode’s Canadian chapter at McGill University. The series aims to promote insights from university students on current issues in the AI ethics space. In this article, Natalie Jenkins notes how 62% of young Canadians aged 15-24 get their news from social media. Natalie examines what this means for democracy when AI-powered recommendation systems, not journalists, decide what information reaches us.

Key insights:

Platforms have replaced journalists as primary news intermediaries, with algorithmic curation based on engagement rather than civic value

Facebook’s algorithm changes resulted in a 78% decline in news reactions between 2021 and 2024

Political influencers exploit engagement-based metrics, gaining visibility through outrage rather than accountability

TikTok’s recommender system can learn user vulnerabilities in under 40 minutes

Natalie argues Canada should look to the EU’s Digital Services Act as a model, particularly its requirements for algorithmic transparency and user control over recommendation systems.

To dive deeper, read the full article here.

❤️ Support Our Work

Help us keep The AI Ethics Brief free and accessible for everyone by becoming a paid subscriber on Substack or making a donation at montrealethics.ai/donate. Your support sustains our mission of democratizing AI ethics literacy and honours Abhishek Gupta’s legacy.

For corporate partnerships or larger contributions, please contact us at support@montrealethics.ai

✅ Take Action:

Have an article, research paper, or news item we should feature? Leave us a comment below — we’d love to hear from you!

This comprehensive analysis of AI governance frameworks really highlights the tension between technical capability and institutional accountability. The ELITE system example is particularly concerning - when surveillance tools become this automated, the question of who's accountable for targeting decisions becomes nearly impossible to answer. I appreciate how you're connecting these specific implementations back to broader governance challenges. The section on critical AI literacy as community infrastructure is spot-on; we need more bottom-up awareness alongside top-down policy work.

I'm past frowning and feeling concerned now and have moved on to downright anger that FB posts by respected individuals (who help Americans see, know and understand ICE and Trump's horrid actions) haven't informed people of the powers behind all the crap: the secretive and complicit Rockbridge Network of billionaire oligarchs and the Heritage Foundation's fascist playbook that guides Trump and his cohorts. They are the worst groups, although not the only ones. I wish your org would meet with the people behind the public influencers, like Robert Arnold, The Resistance, the Chris Hedges Report, Rachel Maddow, Robert Reich, Trevoe Poutine, perhaps CNN and Mother Jones, any unbiased news sites, etc., and update them on the most pressing AI concerns for American citizens right now, because they don't respond to my post comments but hopefully wouldn't dare ignore your group's crucial AI info concerning the ICE use of Palantir's ELITE app that targets neighborhoods versus individuals. The general public has no clue about how the dreaded ICE relies on AI or even how many AI-generated political fakes flood the news systems and affect and change the playing field. I'd like to see you add a bottom-up approach in addition to your wonderful reports. Somebody ought to tell the somebodies concerned with preventing the fall of democracy, somebody like the protesters or citizens, because that is the group that will effect the change that your AI reports address most. Thanks.