AI Ethics Brief #106: TikTok in Kenya, safe AI deployment, ethics of AI business practices, and more ...

How should we define organizational AI governance?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

⏰ This week’s Brief is a ~35-minute read.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can, to support us: become a paying subscriber for as little as $5/month. If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support the democratization of AI ethics literacy and to ensure we can continue to serve our community.

This week’s overview:

✍️ What we’re thinking:

AI Ethics During Warfare: An Evolving Paradox

Social Context of LLMs - the BigScience Approach, Part 2: Project Ethical and Legal Grounding

Masa’s review of “The Ethics of AI Business Practices: A Review of 47 AI Ethics Guidelines”

🔬 Research summaries:

From Dance App to Political Mercenary: How disinformation on TikTok gaslights political tensions in Kenya

Subreddit Links Drive Community Creation and User Engagement on Reddit

Structured access to AI capabilities: an emerging paradigm for safe AI deployment

Towards User-Centered Metrics for Trustworthy AI in Immersive Cyberspace

The Ethics of AI Business Practices: A Review of 47 AI Ethics Guidelines

Defining organizational AI governance

📰 Article summaries:

Why AI fairness tools might actually cause more problems

U.S. court will soon rule if AI can legally be an ‘inventor’

How DALL-E could power a creative revolution

📖 Living Dictionary:

What is the relevance of explainability to AI ethics?

🌐 From elsewhere on the web:

How does AI and ML Impact Climate Change?

The Africa Chatbot & Conversational AI Summit 2nd Edition

💡 ICYMI

Quantifying the Carbon Emissions of Machine Learning

But first, our call-to-action this week:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.

✍️ What we’re thinking:

AI Ethics During Warfare: An Evolving Paradox

There is a saying: “all is fair in love and war.” For war in particular, the ethical aspects have been puzzling for centuries. There remain some basic humane principles to uphold even during wartime, as defined by international laws such as the Geneva Conventions. However, we see those principles violated all too often, as we are observing now during the Russian invasion of Ukraine.

Simultaneously, wars boost the arms business, and in this era of automation the boost extends to parts of the AI industry as well. Unmanned Aerial Vehicles (UAVs), or drones, are widely used in the ongoing Russia-Ukraine war. While exact figures are not yet available, drone sales to the region are assumed to have increased almost exponentially, and they will likely continue to rise as many Western nations increase their military expenditure. In fact, drones have been a major catalyst in several recent wars (the Azerbaijan-Armenia war in 2020 is a solid example) [1].

Wars often play a driving role in advancing technologies; the two world wars are prime examples. So it would not be surprising to see certain AI mechanisms advance further as a side effect of the ongoing war. However, that can never count as an excuse or justification when set against the immense destruction and suffering this war is causing to so many people. Its far-reaching and long-lasting consequences for the entire world are alarming enough: we are now even hearing frequent talk of nuclear threats!

To delve deeper, read the full article here.

Social Context of LLMs – the BigScience Approach, Part 2: Project Ethical and Legal Grounding

The previous post in this series provided an overview of the BigScience Workshop’s approach to addressing the social context of Large Language Model (LLM) research and development across its project governance, data, and model release strategy. In the present article, we dive further into the first of these aspects, and particularly into the work that enabled a value-driven, consensus-based, and legally grounded research approach.

Our ability to promote the kind of open, accountable, and conscientious research we believe is necessary to steer the development of new language technology toward more beneficial and equitable outcomes hinges first on the implementation of these values within the project’s internal processes. Thus, we first apply the analysis outlined in the previous article to the project’s internal governance and ethical and legal grounding, as this aspect of the workshop defines the framework for all of the other research questions we aim to address:

People. Our work on this aspect of the workshop is focused on two main categories of stakeholders: the workshop participants themselves whose research will follow our proposed processes and be informed by its ethical and legal work, and the broader ML and NLP research community for whom we hope to showcase a working example of a large-scale value-grounded distributed research organization.

Ethical focus. Finding a common approach and shared values that can guide ethical discussions within the project while valuing the diversity of contexts and perspectives at play is instrumental to enabling value-grounded decision-making within the workshop. We organize our work to that end around the elaboration of a collaborative ethical charter.

Legal focus. We study the growing number of regulations relevant to our area that are emerging around the world, for two reasons: to allow all of our participants to fully engage in research without exposing themselves to sanctions, and to understand how different jurisdictions operationalize the values that are represented in our ethical charter.

Governance. A project’s internal governance processes can disrupt or entrench disparities and determine whose voice is welcomed to the table and taken into account when making decisions. We adopt decision processes guided by the ideal of consensus, as we see it as most consistent with our ethical charter.

This blog post outlines two main efforts that helped structure our work toward the goals outlined above: a multidisciplinary effort to gather input from all workshop participants in order to collaboratively build an ethical charter reflecting shared driving values for the project, and a week-long “hackathon” during which legal scholars from around the world worked, across 9 different jurisdictions, on answering questions that had come up during the preceding months of the workshop.

To delve deeper, read the full article here.

Masa’s review of “The Ethics of AI Business Practices: A Review of 47 AI Ethics Guidelines”

🔬 Research summaries:

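From Dance App to Political Mercenary: How disinformation on TikTok gaslights political tensions in Kenya

The upcoming Kenyan elections on August 9th will be one of the most closely watched events of 2022 in the African context. Yet disinformation is proving to play far too prominent a role on TikTok’s platform.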

To delve deeper, read the full summary here.

Subreddit Links Drive Community Creation and User Engagement on Reddit

On Reddit, subreddit links are often used to directly reference another community. This use potentially drives traffic or interest to the linked subreddit. To understand and explore this phenomenon, we performed an extensive observational study. We found that subreddit links not only drive activity within referenced subreddits, but also contribute to the creation of new communities. These links therefore give users the power to shape the organization of content online.

To delve deeper, read the full summary here.

Structured access to AI capabilities: an emerging paradigm for safe AI deployment

As AI models grow more powerful, how to provide safe access to them becomes an important question. One paradigm that addresses it is structured capability access (SCA). SCA aims to restrict model misuse by employing strategies that limit end users’ access to various parts of a given model.

To delve deeper, read the full summary here.

Towards User-Centered Metrics for Trustworthy AI in Immersive Cyberspace

AI plays a key role in current cyberspace and will drive future immersive ecosystems. Thus, the trustworthiness of such AI systems (TAI) is vital, as failures can hurt adoption and cause user harm, especially in user-centered ecosystems such as the metaverse. This paper gives an overview of the historical path of existing TAI approaches and proposes a research agenda towards systematic yet user-centered TAI in immersive ecosystems.

To delve deeper, read the full summary here.

The Ethics of AI Business Practices: A Review of 47 AI Ethics Guidelines

AI ethics guidelines tend to focus on issues of algorithmic decision-making rather than the ethics of business decision-making and business practices involved in developing and using AI systems. This paper presents the results of a review and thematic analysis of 47 AI ethics guidelines. We argue that in order for ethical AI to live up to its promise, future guidelines must better account for harmful AI business practices, such as overly speculative and competitive decision-making, ethics washing, and corporate secrecy.

To delve deeper, read the full summary here.

Defining organizational AI governance

Organizations can translate ethical AI principles into practice through AI governance. In this paper, we wish to facilitate the adoption of AI governance tools by defining and positioning organizational AI governance.

To delve deeper, read the full summary here.

📰 Article summaries:

Why AI fairness tools might actually cause more problems

What happened: The 80% rule, also known as the 4/5 rule, has been used by federal agencies to compare the hiring rate of protected groups and white people in an effort to determine whether hiring practices have led to discriminatory impacts. The goal of the rule is to encourage companies to hire protected groups at a rate that is at least 80% of that of white men. On the surface, this seems like a step in the right direction. However, harms that disparately affect some groups could be exacerbated as the rule is baked into tools used by machine-learning developers.

Why it matters: The potential harm of codifying the 4/5 rule into popular AI fairness software toolkits has been increasing. One of the main concerns pertains to the timing of application. The rule is usually applied in real-life scenarios as a first step in a longer process used to understand why disparate impact has occurred and how to fix it. However, fairness tools are typically used by engineers at the end of a development process, meaning that the “human element of decision-making gets lost.” The simplistic utilization of the rule also misses other factors that are weighed in traditional assessments, such as which subsections of applicant groups should be measured.

Between the lines: It is important to note that the incorporation of this rule in AI fairness tools was most likely well-intentioned. Developers are not incentivized in software development cycles to do slow, deeper work. “They need to collaborate with people trained to abstract and trained to understand those spaces that are being abstracted.”
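To make the arithmetic behind the 4/5 rule concrete, here is a minimal sketch of the kind of check a fairness toolkit might run. The function names and the applicant counts are hypothetical, chosen only for illustration, and are not taken from any specific toolkit or from the article. As the summary above notes, a check like this is at best a first screening step and not a full disparate impact assessment.

```python
def selection_rate(hired: int, applicants: int) -> float:
    """Fraction of applicants in a group who were hired."""
    if applicants <= 0:
        raise ValueError("applicants must be a positive number")
    return hired / applicants


def four_fifths_check(group_rate: float, reference_rate: float,
                      threshold: float = 0.8) -> tuple[float, bool]:
    """Return (impact ratio, whether the ratio meets the threshold).

    The impact ratio is the group's selection rate divided by the
    reference group's selection rate; the 4/5 rule flags ratios below 0.8.
    """
    if reference_rate <= 0:
        raise ValueError("reference_rate must be positive")
    ratio = group_rate / reference_rate
    return ratio, ratio >= threshold


# Hypothetical numbers for illustration only.
reference_rate = selection_rate(hired=40, applicants=80)  # 0.5
group_rate = selection_rate(hired=30, applicants=80)      # 0.375
ratio, passes = four_fifths_check(group_rate, reference_rate)
print(f"impact ratio = {ratio:.2f}, meets 4/5 threshold: {passes}")
```

With these made-up counts the ratio is 0.75, which falls below the 0.8 threshold. The single number says nothing about why the gap exists or which subsections of the applicant pool should have been compared, which is exactly the context the article worries gets lost.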

U.S. court will soon rule if AI can legally be an ‘inventor’

What happened: Current intellectual property controls call for a human inventor to file for a patent. With the rapid technological evolution we are currently going through, can AI be legally treated as an inventor? This is a question that the U.S. Court of Appeals heard again last week, and the ruling could affect the pace of AI technology development, especially within the pharmaceutical and life science industries.

Why it matters: A ruling in favor would impact healthcare companies that use the technology to create tools that find new treatments for existing drugs. The main argument pushing for this outcome is that it is critical to interpret the patent act consistently with its purpose, which is to promote innovation. If the judges rule in favor of AI, one of the expected results would be many more patent filings. It would also be an incentive for healthcare companies to use AI to develop new drugs and repurpose existing drugs.

Between the lines: This topic is particularly interesting in the context of healthcare, since “AI approaches for accelerating drug repurposing or repositioning are not just formidable but are also necessary.” In the case that businesses cannot profit from a patent, they could choose to reduce their investment in AI or even be incentivised to keep their inventions as trade secrets, which would ultimately deprive society of the benefits of new technologies.

How DALL-E could power a creative revolution

What happened: DALL-E, which was created by OpenAI and whose name combines surrealist Salvador Dalí and Pixar’s WALL-E, takes text prompts and generates images from them. The images are now 1,024 by 1,024 pixels and can incorporate techniques such as “inpainting”, which refers to the replacement of one or more elements in an image with another. Only a few thousand people have access, but the company is hoping to add 1,000 people a week. It should be noted that DALL-E’s content policy is designed to prevent most potential abuses of the platform, such as content involving hate, harassment, violence, sex, or nudity.

Why it matters: DALL-E has an impressive ability to capture emotion and express a concept in various ways. There are several interesting use cases popping up, such as artists creating augmented reality filters for social apps or chefs getting new ideas for how to plate dishes. However, there are also a couple of concerns that stand out. What will this sort of automation do to professional illustrators? Moreover, there will likely be harmful applications of this tool as well, no matter how robust OpenAI designs its content moderation policies to be.

Between the lines: This tool highlights the nuances of creativity. The algorithm is simply making probabilistic guesses when provided with a text prompt, yet the results can evoke emotions similar to those evoked by real art. Although there is a lot of uncertainty around how this tool will be applied in the future, it will be interesting to see how the evolution of content policies affects the use of a tool such as DALL-E.

📖 From our Living Dictionary:

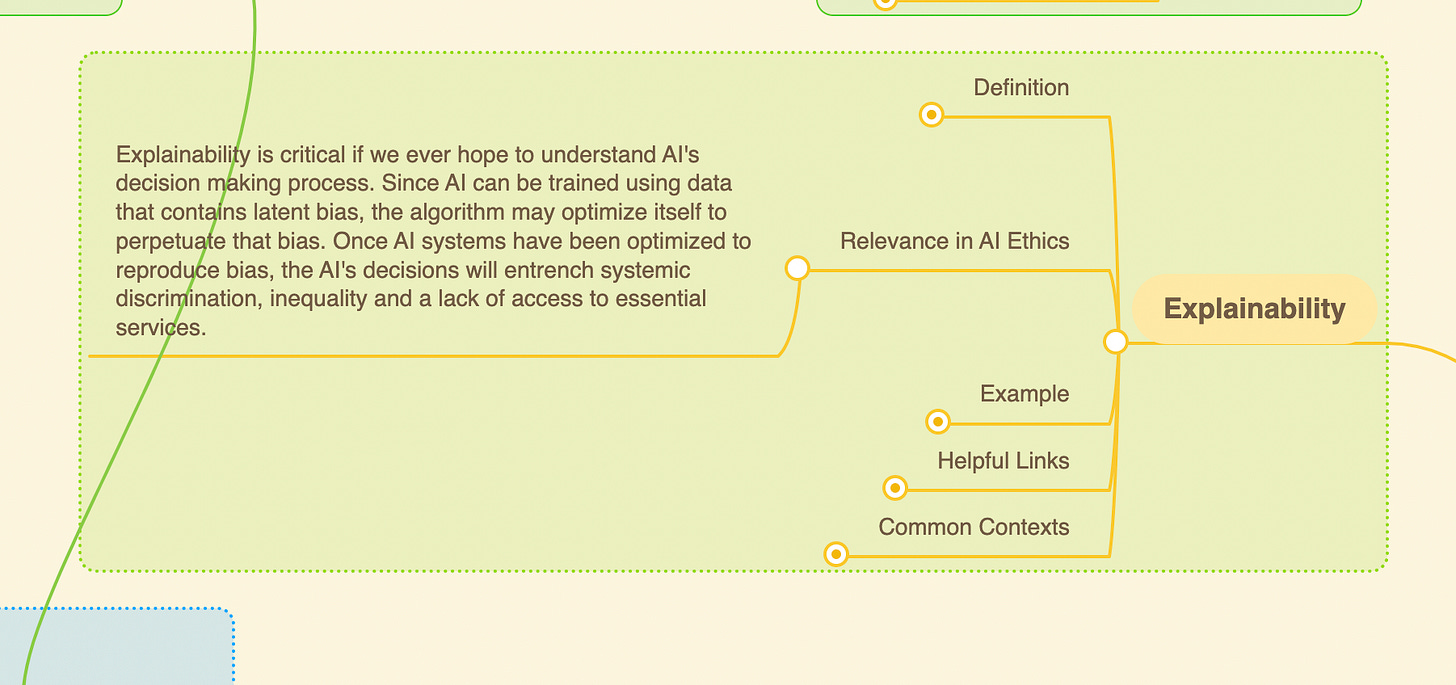

What is the relevance of explainability to AI ethics?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

How does AI and ML Impact Climate Change?

Our founder, Abhishek Gupta, spoke on the Environment Variables podcast about his work as the Chair of the Standards Working Group at the Green Software Foundation on the impact that AI and ML are having on climate change.

This week Chris Adams takes over the reins from Asim Hussain to discuss how artificial intelligence and machine learning impact climate change. He is joined by Will Buchanan of Azure ML (Microsoft); Abhishek Gupta, the chair of the Standards Working Group for the Green Software Foundation; and Lynn Kaack, assistant professor at the Hertie School in Berlin. They discuss boundaries, Jevons paradox, the EU AI Act, and inferencing, and supply us with a plethora of materials regarding ML, AI, and the climate!

The Africa Chatbot & Conversational AI Summit 2nd Edition

On the 22nd of June, our partnerships manager, Connor Wright, will be presenting on viewing chatbots from an Ubuntu perspective, with particular reference to the presence of anthropomorphism within the space. Check out the link to see the list of other speakers, the agenda, and ticket information!

💡 In case you missed it:

Quantifying the Carbon Emissions of Machine Learning

As discussions on the environmental impacts of AI heat up, what are some of the core metrics that we should look at to make this assessment? This paper proposes the location of the training server, the energy grid that the server uses, the training duration, and the make and model of the hardware as key metrics. It also describes the features offered by the ML CO2 calculator tool that the authors have built to aid practitioners in making assessments using these metrics.

To delve deeper, read the full summary here.
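As a rough illustration of how the metrics named in this summary could combine into a single estimate, here is a back-of-the-envelope sketch. It is a simplification under stated assumptions and not the formula used by the ML CO2 calculator: the function name, the power draw, and the grid carbon intensity are all hypothetical values picked for the example, and it ignores factors such as data center overhead.

```python
def estimate_training_emissions_kg(
    hardware_power_watts: float,      # assumed average draw per device (depends on make and model)
    training_hours: float,            # training duration
    grid_kg_co2_per_kwh: float,       # carbon intensity of the grid at the server's location
    num_devices: int = 1,
) -> float:
    """Estimate emissions as energy used (kWh) times grid carbon intensity."""
    if min(hardware_power_watts, training_hours, grid_kg_co2_per_kwh) < 0:
        raise ValueError("inputs must be non-negative")
    if num_devices < 1:
        raise ValueError("num_devices must be at least 1")
    energy_kwh = hardware_power_watts / 1000 * training_hours * num_devices
    return energy_kwh * grid_kg_co2_per_kwh


# Hypothetical run: 4 devices drawing 250 W each for 100 hours
# on a grid emitting 0.5 kg CO2 per kWh (illustrative value only).
emissions = estimate_training_emissions_kg(
    hardware_power_watts=250,
    training_hours=100,
    grid_kg_co2_per_kwh=0.5,
    num_devices=4,
)
print(f"Estimated emissions: {emissions:.1f} kg CO2")
```

Even in this simplified form, the grid term shows why server location matters: the same training run on a cleaner grid with a lower carbon intensity yields proportionally lower estimated emissions.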
