AI Ethics Brief #77: US-EU Trade and Tech Council, public strategies for AI, Chinese AI ethics guidelines, and more ...

What is the Meaning of “Explainability Fosters Trust in AI”?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

⏰ This week’s Brief is a ~17-minute read.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can, to support us: become a paying subscriber for as little as $5/month. If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support the democratization of AI ethics literacy and to ensure we can continue to serve our community.


This week’s overview:

✍️ What we’re thinking:

U.S.-EU Trade and Technology Council Inaugural Joint Statement – A look into what’s in store for AI?

🔬 Research summaries:

The Meaning of “Explainability Fosters Trust in AI”

Public Strategies for Artificial Intelligence: Which Value Drivers?

Artificial Intelligence: the global landscape of ethics guidelines

📰 Article summaries:

Chinese AI gets ethical guidelines for the first time, aligning with Beijing’s goal of reining in Big Tech

Leaked Documents Show How Amazon's Astro Robot Tracks Everything You Do

The limitations of AI safety tools

The Facebook whistleblower says its algorithms are dangerous. Here’s why.

📖 Living Dictionary:

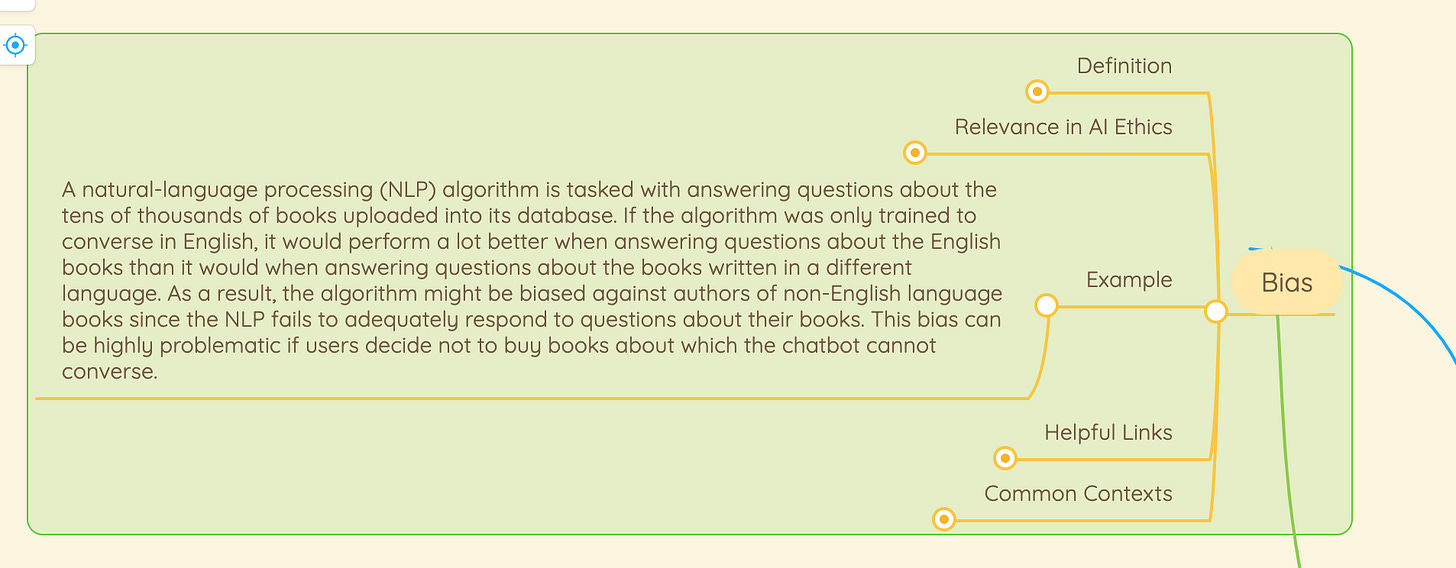

Bias

🌐 From elsewhere on the web:

Abhishek Gupta awarded the inaugural Gradient Prize for his research article on building more sustainable AI systems!

💡 ICYMI

On the Edge of Tomorrow: Canada’s AI Augmented Workforce

But first, our call-to-action this week:

The Montreal AI Ethics Institute is committed to democratizing AI Ethics literacy. But we can’t do it alone.

Every dollar you donate helps us pay for our staff and tech stack, which make everything we do possible.

With your support, we’ll be able to:

Run more events and create more content

Use software that respects our readers’ data privacy

Build the most engaged AI Ethics community in the world

Please make a donation today.

✍️ What we’re thinking:

U.S.-EU Trade and Technology Council Inaugural Joint Statement – A look into what’s in store for AI?

This write-up focuses on the conclusions reached at the inaugural meeting of the U.S.-EU Trade and Technology Council (“TTC”) held in Pittsburgh, Pennsylvania on September 29, 2021. It concerns only those aspects in the meeting that deal with the use of AI, its effects, and the areas of cooperation envisioned, going forward.

To delve deeper, read the full article here.

🔬 Research summaries:

The Meaning of “Explainability Fosters Trust in AI”

Can we trust AI? When is AI trustworthy? Is it true that “explainability fosters trust in AI”? These are some of the questions tackled in this paper. The authors provide an account of when it is rational or permissible to trust AI by focusing on the use of AI in healthcare, and they conclude that explainability justifies trust in AI only in a very limited number of “physician-medical AI interactions in clinical practice.”

To delve deeper, read the full summary here.

Public Strategies for Artificial Intelligence: Which Value Drivers?

Different nations are now catching on to the need for national AI strategies to secure their futures. However, what really drives these strategies, and whether those drivers are in line with the fundamental values at the heart of each nation, is a different question.

To delve deeper, read the full summary here.

Artificial Intelligence: the global landscape of ethics guidelines

Many private companies, research institutions, and public-sector organizations have formulated guidelines for ethical AI. But what constitutes “ethical AI,” and which ethical requirements, standards, and best practices are required for its realization? This paper investigates whether a global agreement is emerging on these questions. Further, it analyzes the current corpus of principles and guidelines on ethical AI.

To delve deeper, read the full summary here.

📰 Article summaries:

Chinese AI gets ethical guidelines for the first time, aligning with Beijing’s goal of reining in Big Tech

What happened: The Chinese Ministry of Science and Technology released more focused guidelines on AI ethics that place human control over the technology at their center. They bring the broader Beijing AI Principles published earlier much more in line with the emphasis that the Chinese Government has placed on reining in Big Tech. Some of the other values emphasized in the document include improving human well-being, promoting fairness and justice, protecting privacy and safety, and raising ethical literacy.

Why it matters: With the emphasis on human control, the guidelines set a strong example in terms of how the interaction between humans and machines will take place. In particular, the provisions that let humans choose whether to interact with an AI system in the first place, exit the interaction at any time, and discontinue the AI system will have significant consequences for the large number of AI-infused products and services that are used daily across the most popular apps in China. How this comes into effect and how strict the enforcement is will determine to what extent the guidelines achieve their intended goals.

Between the lines: Given the mandate at the Montreal AI Ethics Institute, it is very interesting to see “raising ethical literacy” included as a core consideration in the AI ethics guidelines. We believe that achieving AI ethics in practice will require education and empowerment of all stakeholders, not just having guidelines and enforcing regulations for those who develop and deploy AI systems. Perhaps this is a harbinger of other countries adopting this as a core consideration as well.

Leaked Documents Show How Amazon's Astro Robot Tracks Everything You Do

What happened: Amazon has unveiled a new robot dubbed Astro that integrates with Alexa Guard and Ring (other products from Amazon) to provide automated home security. The $999 robot patrols the user’s home, constantly surveilling it for unusual activity that warrants the owner’s attention and monitoring strangers inside the house. If this doesn’t already spook you, the article mentions a leaked memo that details numerous flaws in the system that run counter to Amazon’s marketing effort.

Why it matters: Constant surveillance, data privacy, targeted advertising: the list of concerns goes on when a persistent sentry moves around the most private spaces of our lives, our homes. What is even more problematic is that people who have worked on Astro point out that the system is flawed in its person recognition capabilities and struggles to navigate spaces. It is also sold as an accessibility enhancer within the home even though it has notable failure modes in which it is known to get into jams. What’s even more interesting is that, according to the article referencing the leaked memo, Amazon doesn’t have a policy that allows for returning broken Astros.

Between the lines: From a practical and technical standpoint, there are many challenges in getting social robots right: above all, getting them to operate in line with human expectations most of the time so that they are welcome in people’s most private spaces. The demonstrated failures of Astro, along with the almost insurmountable combination of challenges (responding to myriad voice commands from the owner, navigating a complex, dynamic, and uncertain environment, and interacting with both live and static elements in that environment), make it highly unlikely that Astro will succeed in winning a place in people’s homes. As consumers become savvier about privacy and other ethical concerns regarding some of the tech that is required to power the Astro, Amazon will have to provide very robust guarantees before people bring one home.

The limitations of AI safety tools

What happened: With the inclusion of the word “safety” in various trustworthy AI proposals from the EU HLEG and NIST, this article talks about the role something like the Safety Gym from OpenAI can play in achieving safety in AI systems. It provides an environment to test reinforcement learning systems in a constrained setting to evaluate their performance and assess them for various safety concerns. The article features interviews with some researchers in the field who mention how such a gym might be inadequate since it still depends on specifying rules to qualify what constitutes safe behaviour. And such rules will have to constantly grow and adapt as the systems “explore” new ways of achieving the specified tasks.

Why it matters: Environments like the Safety Gym provide a sandbox to test digital twins of systems that will be deployed in production, especially when such testing might be prohibitively costly or too risky in the real world. This applies to autonomous driving, industrial robots working alongside humans, and other use cases with humans and machines operating in a shared environment.

Between the lines: A single environment with a specific modality of operation can never comprehensively identify all the places where alignment problems might arise for an AI system, but it does provide a diagnostic test to at least identify how the system can misbehave or deviate from expectations. Using that information to iterate is a useful outcome from the use of such an environment. Moreover, such environments offer a much more practical way to go about safety testing than purely theoretical formulations, which to a certain extent are limited by the ability of testers and developers to imagine how an AI system might behave.

The Facebook whistleblower says its algorithms are dangerous. Here’s why.

What happened: Frances Haugen, the primary source for The Facebook Files, the WSJ investigative series on the company, testified in a Senate hearing, confirming much of what people assumed about how Facebook operates and where it falls short in terms of practically addressing problems on its platform. One of the main arguments put forward by Haugen in the Senate hearing was that the company knew about the problems and didn’t act on them. Moreover, the emphasis on content moderation as a tool for creating a healthier information ecosystem is inherently flawed; instead, we should be focusing on the design of the algorithms powering the platform to address the root causes of the problems plaguing it.

Why it matters: The testimony was scathing in its criticism of the platform and of what it is doing to address challenges including misinformation, polarization, addictive engagement, data misuse for targeted advertising, and others. The fact that existing mechanisms like content moderation, because of limitations in their language and context capabilities, are just proverbial band-aids on a broken dam is the call-to-action that should spur Facebook to invest more in reshaping the platform to mitigate the emergence of such problems in the first place. It also shows that, when presented with evidence of investments in content moderation, we should be more cognizant of the actual impact that such measures will have in solving the root problems at the heart of the platform.

Between the lines: At the center of all the problems, proposed mechanisms, proposed regulations, and everything else meant to create a healthier ecosystem is a fundamental tension: the business incentives of the platform in realizing profits are stacked against the interests of the users of the platform. Yes, there might be ways of giving one side more of an edge, but the tension remains because of the business model, which ultimately drives much of the platform’s design, development, and deployment. Without acknowledging that more fully, and working towards resolving that tension, the solutions will only address the root problems in a piecemeal fashion.

From our Living Dictionary:

‘Bias’

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

From elsewhere on the web:

Abhishek Gupta awarded the inaugural Gradient Prize for his research article on building more sustainable AI systems!

Earlier this year, The Gradient announced the inaugural Gradient Prize to reward their most outstanding contributions from the past several months. Gradient editors picked five finalists, which they sent to their three guest judges: Chip Huyen, Shreya Shankar, and Sebastian Ruder.

Our founder, Abhishek Gupta, was awarded the top prize for his research article titled “The Imperative for Sustainable AI Systems”!

In case you missed it:

On the Edge of Tomorrow: Canada’s AI Augmented Workforce

Following the 2008 financial crisis, the pursuit of economic growth and prosperity led many companies to pivot from labor-intensive to capital-intensive business models by adopting AI technology. The capitalist’s case for AI centered on potential gains in labor productivity and labor supply. The demand for AI grew with the increased affordability of sensors, the accessibility of big data, and growth in computational power. Although the technology has already augmented various industries, it has also adversely impacted the workforce. Moreover, the data that powers AI can be collected and used in ways that put fundamental civil liberties at risk. Due to Canada’s global reputation and extensive AI talent, the ICTC recommends Canada take a leadership role in the ethical deployment of this technology.

To delve deeper, read the full summary here.
