AI Ethics Brief #134: AI's carbon footprint, FTC changes, military human-machine teams, generative elections, and more.

What can history teach us about regulating AI systems?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week, we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can, to support us: become a paying subscriber for the price of a couple of ☕.

If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

This week’s overview:

🙋 Ask an AI Ethicist:

What are the advantages and implications of the recent changes enacted by the FTC to empower its staff to pursue AI-related investigations?

✍️ What we’re thinking:

Engaging the Public in AI’s Journey: Lessons from the UK AI Safety Summit on Standards, Policy, and Contextual Awareness

🤔 One question we’re pondering:

How should a Board be better structured to avoid mishaps, like the OpenAI saga, for companies that play a critical role in the AI ecosystem?

🪛 AI Ethics Praxis: From Rhetoric to Reality

How to avoid negative urgency within an organization that can harm the implementation of a Responsible AI program?

🔬 Research summaries:

Exploring the Carbon Footprint of Hugging Face’s ML Models: A Repository Mining Study

Designing for Meaningful Human Control in Military Human-Machine Teams

The Role of Relevance in Fair Ranking

📰 Article summaries:

The Generative Elections: How AI could influence the 2024 US presidential race (and beyond)

Workers could be the ones to regulate AI | Financial Times

AI regulation and the imperative to learn from history | Ada Lovelace Institute

📖 Living Dictionary:

What is the relevance of underfitting and overfitting to AI ethics?

🌐 From elsewhere on the web:

OpenAI's meltdown prompts further questions around the future of AI safety surveillance

💡 ICYMI

Rethink reporting of evaluation results in AI

🚨 AI Ethics Praxis: From Rhetoric to Reality

Aligning with our mission to democratize AI ethics literacy, we bring you a new segment in our newsletter that will focus on going beyond blame to solutions!

We see a lot of news articles, reporting, and research work that stop just shy of providing concrete solutions and approaches (sociotechnical or otherwise) that are (1) reasonable, (2) actionable, and (3) practical. These are much needed so that we can start to solve the problems that the domain of AI faces, rather than just continuing to point them out and pontificate about them.

In this week’s edition, you’ll see us work through a solution proposed by our team to a recent problem. We then invite you, our readers, to share in the comments (or via email if you prefer) your own proposed solution, one that is likewise (1) reasonable, (2) actionable, and (3) practical.

🙋 Ask an AI Ethicist:

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours, and we’ll answer it in the upcoming editions.

This week, one of our readers wrote in to ask about the FTC's recent changes relating to investigations of AI systems, and what their advantages and implications will be.

📃 The FTC's new approach of streamlining the issuance of civil investigative demands (CIDs) in AI-related investigations offers several core benefits. First, it enhances the FTC's ability to protect consumers and maintain fair competition in the rapidly evolving AI market. By obtaining documents, information, and testimony more efficiently, the FTC can more effectively investigate potential misuse of AI, such as fraud, deception, privacy infringements, and unfair practices.

📟 Second, this approach can help address concerns about companies overstating the capabilities of their AI systems or using AI as a buzzword in advertising without substantial backing. The FTC's new powers could deter such practices, ensuring that companies provide truthful, non-deceptive information backed by evidence.

🏛️ This approach aligns with the Biden Executive Order on AI, which emphasizes the need for safe, secure, and trustworthy AI. The Executive Order establishes new standards for AI safety and security, protects Americans' privacy, and advances equity and civil rights. It also encourages international cooperation to support the responsible deployment and use of AI worldwide.

The FTC's resolution complements these goals by providing a mechanism to investigate and address potential risks and violations associated with AI, thereby promoting the responsible use and development of AI technologies.

And in a slightly different vein:

How would you compose the Board at OpenAI? Share your thoughts with the MAIEI community:

✍️ What we’re thinking:

The Montreal AI Ethics Institute is a partner organization of Partnership on AI (PAI). Our Partnerships Manager, Connor, attended their UK AI Safety Summit fringe event in London on the 24th and 25th of October, 2023. Impressed by the variety of both speakers and ideas, he came away with one main takeaway: the importance of public engagement when deploying AI systems. That aligns strongly with MAIEI's mission of democratizing AI ethics literacy, which aims to build civic competence so that the public is better informed about the nuances of AI systems and better prepared to engage in how they are governed, from both a technical and a policy perspective.

To delve deeper, read the full article here.

🤔 One question we’re pondering:

After everything we saw with the OpenAI saga recently, namely the ouster of Sam Altman (CEO) and Greg Brockman (co-founder) and their return to the company, alongside all the back-and-forth with Microsoft and other stakeholders to help allay concerns of the entire company falling apart (~95% of the staff was willing to leave the company as well) - how should a Board be better structured to avoid such mishaps for companies that play a critical role in the AI ecosystem?

We’d love to hear from you and share your thoughts with everyone in the next edition:

🪛 AI Ethics Praxis: From Rhetoric to Reality

Negative urgency arises within an organization when arbitrary deadlines and incentive structures push staff towards outcomes that aren’t aligned with the organization’s stated purpose and values. It undermines the successful implementation of Responsible AI (RAI) programs, and it can be avoided by following the ideas outlined below, which draw from our work advising organizations on how to implement RAI programs without falling into the traps of negative urgency:

📋 Design for Transparency

In the context of negative urgency, transparency plays a pivotal role in fostering ethical AI practices. For designers, this means clearly communicating the purpose and functioning of AI systems without obfuscation or misleading interfaces. On the organizational level, transparency about the reasons for urgency in AI projects is crucial. This involves openly discussing timelines, expectations, and the potential impact of these urgencies on the quality and ethical considerations of AI development. By doing so, organizations can avoid scenarios where teams are rushed into compromising ethical standards for the sake of expediency.

🌐 Ethical Standards

The principle of adhering to ethical standards is essential in combating deceptive patterns like negative urgency. Designers must prioritize ethical considerations in their AI systems, eschewing shortcuts that compromise these values. For organizations, this means cultivating a culture that actively rejects the notion of negative urgency. Policies and hiring practices should reflect a commitment to ethical AI development, ensuring that deadlines and targets do not overshadow the importance of responsible AI design and implementation. This approach helps in aligning business practices with ethical AI frameworks, thereby ensuring long-term sustainability and trust.

🤲 Empathy as the Cornerstone

Empathy is a crucial element in ethical AI design, particularly in the context of negative urgency. Designers must empathize with the end-users, ensuring that AI systems are user-centric and free from deceptive elements. For organizations, fostering empathy means understanding and addressing the concerns of all stakeholders, including employees, customers, and the broader community. Recognizing the human impact of rushed decisions and the potential ethical pitfalls they can create is crucial. This approach ensures that the organization values people over processes, leading to more thoughtful and responsible AI implementations.

⚖️ Balancing User Needs and Business Goals

Balancing user needs with business objectives is a delicate yet essential aspect of ethical AI design, particularly when negative urgency is in play. Designers must collaborate with various stakeholders to ensure that the AI systems they create are beneficial to all parties involved. This involves a careful consideration of user experience and ethical implications. Organizations, on the other hand, must clearly communicate their business goals and how these align with individual tasks and responsibilities. By doing so, they can prevent the misalignment of priorities that often leads to negative urgency, ensuring that business objectives do not compromise ethical standards or user welfare.

🔄 Overall Integration for Ethical AI

Addressing deceptive organizational patterns like negative urgency is crucial for businesses committed to ethical practices. By integrating these principles into every aspect of AI program implementation, organizations can ensure sustainable success and a positive reputation. This involves a holistic approach where transparency, ethical standards, empathy, and balanced priorities are not just ideals but practiced realities. Such an approach not only improves staff retention and brand reputation but also contributes to the broader goal of responsible and ethical AI development in the industry.

You can either click the “Leave a comment” button below or send us an email! We’ll feature the best response next week in this section.

🔬 Research summaries:

Exploring the Carbon Footprint of Hugging Face’s ML Models: A Repository Mining Study

The paper explores insights regarding the carbon emissions of machine learning models on Hugging Face, uncovers current shortcomings in carbon consumption reporting, and introduces a carbon efficiency classification system. It emphasizes the need for sustainable AI practices and improved carbon reporting standards.

To delve deeper, read the full summary here.

Designing for Meaningful Human Control in Military Human-Machine Teams

Ethical principles of responsible AI in the military state that moral decision-making must be under meaningful human control. This paper offers a way to operationalize this principle by proposing methods for analysis, design, and evaluation.

To delve deeper, read the full summary here.

The Role of Relevance in Fair Ranking

Online platforms generate rankings and allocate search exposure, thus mediating access to economic opportunity. Fairness measures proposed to avoid unfair outcomes of such rankings often aim to (re-)distribute exposure in proportion to ranking worthiness or merit. In practice, relevance is frequently used as a proxy for worthiness. In our paper, we probe this choice and propose desiderata for relevance as a valid and reliable proxy for worthiness from an interdisciplinary perspective. Then, with a case-study-based approach, we assess if these desired properties are empirically met in practice. Our analyses and results surface the pressing need for novel approaches to collect worthiness scores at scale for fair ranking.

To delve deeper, read the full summary here.

📰 Article summaries:

The Generative Elections: How AI could influence the 2024 US presidential race (and beyond)

What happened: Over the past three years, there has been a global technological revolution that has transformed the way we create and consume media content, including images, audio, and text. The 2024 U.S. presidential campaign season recently began, and AI-generated images and audio have already made their presence felt in campaign ads. While there are concerns about AI's potential to spread misinformation, experts believe it could also be used positively to inform voters. However, this transformation may not occur in time for the 2024 elections.

Why it matters: The impact of AI-generated misinformation extends beyond just voters and candidates; online platforms and social media sites will play a significant role in this landscape. Although the Federal Election Commission is considering regulations on AI-generated deepfakes in campaign ads, Congress must take comprehensive action on AI regulation. Online platforms will face challenges in governing AI use and distribution. Election administrators expect a resurgence of disinformation tactics in 2023, potentially spreading more convincingly and rapidly, with strategies to combat AI-powered disinformation depending on the platform used.

Between the lines: While AI-generated misinformation poses challenges, there is potential for campaigns to use AI to enhance voter engagement through precise targeting. AI could make complex voter information more accessible, allowing voters to interact with AI bots about political issues. People are not powerless against this technology and should actively engage with it, verify information from multiple sources, and be aware of targeted messaging. Putting extra effort into fact-checking and informed discussions can lead to a more informed public.

Workers could be the ones to regulate AI | Financial Times

What happened: The debate on regulating AI has predominantly been driven by tech giants, who claim they want elected officials to establish limits. However, the complexities of AI, combined with the desire to maintain legal protection for innovation, have made it challenging to determine the right regulatory approach. In a significant development, the Writers Guild of America, representing Hollywood writers, negotiated new rules around AI use in the entertainment industry. These rules give union writers the choice of using AI and require studios to disclose whether AI-generated materials are used without infringing on a writer's intellectual property.

Why it matters: This development demonstrates that AI can indeed be regulated, challenging the notion that the tech industry desires Washington's intervention solely for protection. Technology companies aim to craft regulations that provide legal cover for potential issues while allowing innovation to continue. This achievement is also significant because the rules were not imposed from the top down but were established through negotiations with workers who have firsthand experience with AI technology. Unions have historically played a vital role in shaping regulations for transformative technologies, and this bottom-up approach can ensure AI benefits workers and productivity, avoiding contentious relations between labor and management.

Between the lines: While labor relations in the US can be contentious, there is an opportunity for a more collaborative approach to AI technology. Management should engage workers in discussions about AI to understand its impact on productivity, privacy, and new opportunities. This could lead to a continuous improvement process, where workers and management incrementally enhance their understanding of AI together. The bottom-up approach is essential because AI must be human-centered and labor-enhancing, as AI-driven changes to tasks will affect a significant portion of the workforce. This union-led AI regulation is likely to spread to other labor organizations. It could lead to unions acting as data stewards, safeguarding worker and citizen interests, and counterbalancing Big Tech and government.

AI regulation and the imperative to learn from history | Ada Lovelace Institute

What happened: The idea of conducting model evaluations or audits to ensure the safety of cutting-edge AI models has gained traction, especially in parallel with regulatory initiatives. For example, the UK Government has identified the evaluation of AI model capabilities as a priority for the Global Summit on AI safety. Evaluations are meant to determine whether these models have the potential to cause harm or possess dangerous capabilities and are often advised to be carried out independently by third parties to ensure impartial risk assessment. While proposals for evaluation are a positive step, they are still in the early stages and require further development through lessons learned from past policy and regulatory failures.

Why it matters: The complexity of emerging technology policy poses inherent challenges. Technology can evolve much faster than the human systems designed to regulate it. New institutions and regulations must be created to address this gap, even if they are imperfect and potentially outdated responses to the rapidly changing landscape. Furthermore, government agencies face unique pressures, like the need to spend budgets within the fiscal year, that can hinder effective regulation. The slow progress in understanding how AI works and ensuring AI safety exacerbates these challenges, as there has been insufficient investment in AI safety research and understanding how AI technologies make decisions. In summary, there needs to be more understanding of what frontier AI can do, its inherent risks, and how it should be regulated, emphasizing the need for both reflection and agile regulatory responses.

Between the lines: Policymakers, particularly in the UK, are determined to comprehend and mitigate various AI risks, from immediate concerns for marginalized groups and exploited workers to existential threats. However, the article highlights the potential for history to repeat itself with future AI regulation. It emphasizes the importance of learning from past mistakes and urges policymakers and regulators to better manage the unique challenges presented by new technology frontiers. The quote by George Santayana, "Those who cannot remember the past are condemned to repeat it," serves as both a warning and an opportunity to make more informed mistakes in AI regulation.

📖 From our Living Dictionary:

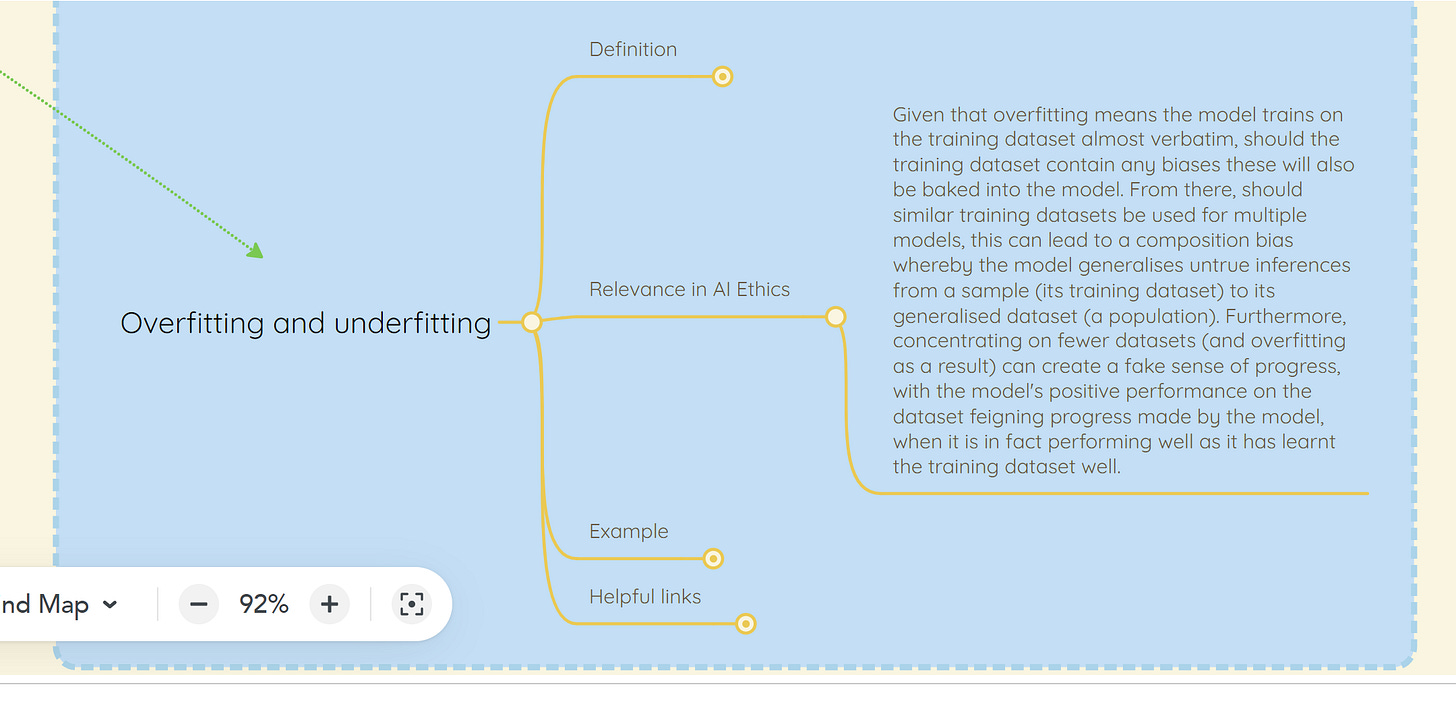

What is the relevance of underfitting and overfitting to AI ethics?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

OpenAI's meltdown prompts further questions around the future of AI safety surveillance

Efforts to regulate AI safety are still a work in progress. Governments are mostly leaving it up to AI companies since the risks are poorly understood, and they don’t want to slow down innovations. Lessons from other kinds of post-market surveillance could inform the future of these efforts.

To delve deeper, read the full article here.

💡 In case you missed it:

Rethink reporting of evaluation results in AI

In order to make informed decisions about where AI systems are safe and useful to deploy, we need to understand the capabilities and limitations of these systems. Yet current approaches to AI evaluation make it exceedingly difficult to build this understanding. This paper details several key problems with common AI evaluation methods and suggests a broad range of solutions to help address them.

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.