AI Ethics Brief #117: Algorithmic recourse, 4chan's content moderation approach, ethical AI startup ecosystem, and more.

Are developers necessarily the right stakeholders to do documentation of ML systems?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for as little as $8/month. If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

This week’s overview:

🙋 Ask an AI Ethicist:

What do we do when faced with the difficulty of separating the signal from noise in recent AI developments?

✍️ What we’re thinking:

Be careful with ChatGPT

The Ethical AI Startup Ecosystem 05: Governance, Risk, and Compliance (GRC)

🤔 One question we’re pondering:

Are developers necessarily the right stakeholders to do documentation of ML systems?

🔬 Research summaries:

Humans are not Boltzmann Distributions: Challenges and Opportunities for Modelling Human Feedback and Interaction in Reinforcement Learning

Looking before we leap: Expanding ethical review processes for AI and data science research

The philosophical basis of algorithmic recourse

📰 Article summaries:

How the media is covering ChatGPT

Inside 4chan’s Top-Secret Moderation Machine

‘Fundamentally dangerous’: reversal of social media guardrails could prove disastrous for 2024 elections

📖 Living Dictionary:

A simplified explanation of LLMs

🌐 From elsewhere on the web:

Why ethical AI requires a future-ready and inclusive education system

💡 ICYMI

System Safety and Artificial Intelligence

But first, our call to action this week:

Generated using DALL-E 2 with the prompt “isometric scene with high resolution in pastel colors with flat icon imagery depicting researchers reading books in a university library”

We are looking for summer book recommendations: books on Responsible AI published in the last 6 to 12 months, especially those that highlight less-discussed aspects of the Responsible AI conversation, such as the environmental impacts of AI, which receive far less attention than issues like bias and privacy.

🙋 Ask an AI Ethicist:

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours, and we’ll answer it in an upcoming edition.

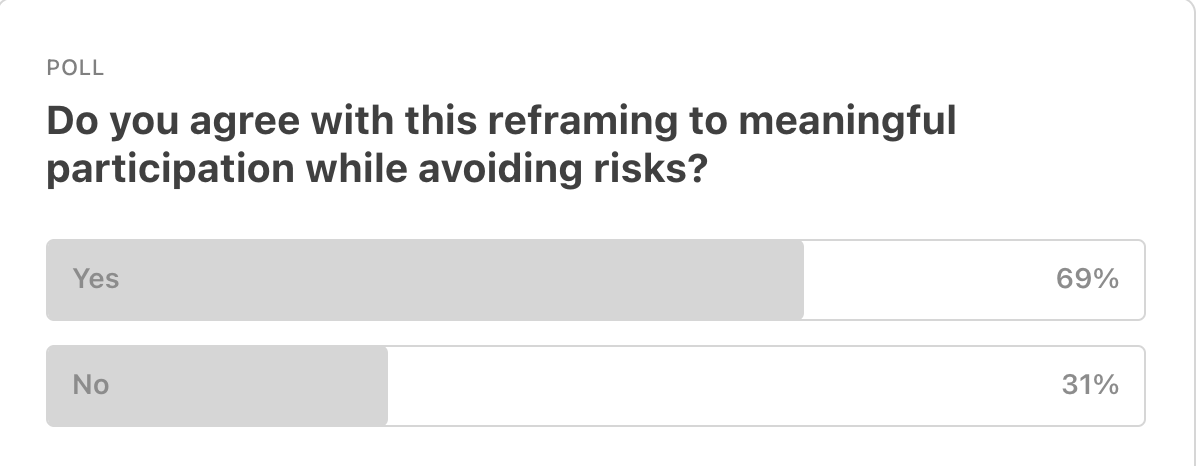

In last week’s edition, where we discussed “Should BigTech CEOs be involved with AI regulations?”, poll results indicated that a majority of readers agree with a reframing that meaningfully brings BigTech CEOs into the AI regulation conversation rather than shunning them outright.

One of our readers, Louise, shared the following in response to this discussion:

🚨 This week, our community asked “What do we do when faced with the difficulty of separating the signal from noise in recent AI developments?”:

This is an interesting question, and our reader may have several intents behind it. In particular, we see the following two:

Signal vs. noise in terms of capabilities and limitations of AI systems

Signal vs. noise in terms of seriousness of issues related to AI ethics

#1 - Signal vs. noise in terms of capabilities and limitations of AI systems

In the heated race to make announcements, demo products, and ship releases, it is difficult to ascertain whether a product or service actually delivers on all the claims it makes. In particular, when systems are closed-source, proprietary, or rolled out to a limited set of users, exploring the full extent of what they can do relies on second- or third-hand information.

There is also a strong incentive to downplay any severe (read: funding-threatening) limitations that surface in everyday (and adversarial) uses of the system.

And let’s not forget that the financial stakes for many of these startups and companies are very high, so there is a risk that they will exaggerate their capability claims to outdo competitors while limiting discussion and examination of their systems’ limitations in technical performance.

You can counter this by speaking with a wider set of people who have research or industry expertise in that specific area. For example, if an NLP startup claims state-of-the-art (SOTA) speech-to-text (STT) performance on an IoT device, speak with someone experienced in NLP and TinyML to validate the capability claims and explore which limitations have gone unmentioned.

#2 - Signal vs. noise in terms of seriousness of issues related to AI ethics

Expanding on the point above, the seriousness of AI ethics-related issues comes down to two axes: severity and frequency. This framework is commonly used in cybersecurity to prioritize how resources are allocated to address issues.
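The two axes can be combined into a single priority score. What follows is a minimal sketch, assuming a 1-to-5 ordinal scale for each axis and a multiplicative score (a common risk-matrix convention, though not the only one). The issue names and scores are hypothetical and chosen only for illustration:

```python
from dataclasses import dataclass


@dataclass
class Issue:
    name: str
    severity: int   # assumed ordinal scale: 1 (minor) to 5 (critical)
    frequency: int  # assumed ordinal scale: 1 (rare) to 5 (constant)

    @property
    def priority(self) -> int:
        # Multiplicative score, a common risk-matrix convention (an assumption here).
        return self.severity * self.frequency


def prioritize(issues: list[Issue]) -> list[Issue]:
    """Return issues sorted from highest to lowest priority score."""
    return sorted(issues, key=lambda issue: issue.priority, reverse=True)


# Hypothetical issues with made-up scores, for illustration only.
issues = [
    Issue("convincing scientific misinformation", severity=4, frequency=3),
    Issue("jailbreak exposing unsafe instructions", severity=5, frequency=2),
    Issue("stereotyped avatar generation", severity=3, frequency=4),
]

for issue in prioritize(issues):
    print(f"{issue.priority:>2}  {issue.name}")
```

Because the score is a product, a frequent but moderate issue can tie with or outrank a rare but severe one. Teams that want severe harms to dominate regardless of frequency might weight severity more heavily, or triage by severity first. Ties keep their input order because Python's `sorted` is stable.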

Here, we often find that how a product or service might be used can be partly anticipated ahead of time, but unfortunately not to its full extent. This is due to capability overhang: many hidden capabilities and limitations only surface once the product or service is deployed in the real world. Take a look at JailbreakChat for many (creative) examples of how systems can fail and the ethics issues that can arise as a result.

Keeping tabs on resources like JailbreakChat and the AI Incident Database can help you stay abreast of (and, through lateral thinking, discover) specific ethics issues that might fall outside the canon of current AI ethics discourse.

What are some strategies that you’ve used in your research and reading to separate the wheat from the chaff? Share your thoughts with the MAIEI community:

✍️ What we’re thinking:

Be careful with ChatGPT

Existing Responsible AI approaches leave unmitigated risks in ChatGPT and other Generative AI systems. We need to evolve our approaches and refine our thinking.

Ethical challenges are only intensifying as experimentation increases. We are unearthing issues like the generation of very convincing scientific misinformation, biased images and avatars, hate speech, and more. How we integrate these systems into human organizations, and empower ourselves to take action, will be critical in determining whether we get ethical, safe, and inclusive uses out of them.

The full article examines each of these areas and explains why we must act now.

To delve deeper, read the full article here.

The Ethical AI Startup Ecosystem 05: Governance, Risk, and Compliance (GRC)

In our last issue, we talked about the “Targeted Solutions and Technologies” space. We now move to EAIDB’s last (but not least) category: AI governance, risk, and compliance (GRC). We consider these companies the “brakes” of the industry because they provide deliberate checks on the limits of AI/ML. We particularly like this metaphor because brakes are essential to accelerating safely.

To delve deeper, read the full article here.

🤔 One question we’re pondering:

Are developers necessarily the right stakeholders to do documentation of ML systems?

A rich body of work, including Datasheets for Datasets, Model Cards for Model Reporting, Dataset Nutrition Labels, Reward Reports, and FactSheets, proposes documentation to be completed by those building ML systems. Builders may well be the stakeholders with the most information about how a system works, but are they the most suitable stakeholders to take on this task?

In particular, we wonder what developers can learn from historians, archivists, and other knowledge management and documentation specialists (or whether they should hire them!) to improve the documentation of ML systems. At the moment, without further training in how to document well, we believe developers are either (1) not the most suitable stakeholders or (2) in need of targeted training before their documentation can achieve its intended goals.

We’d love to hear from you and share your thoughts back with everyone in the next edition:

🔬 Research summaries:

Humans are not Boltzmann Distributions: Challenges and Opportunities for Modelling Human Feedback and Interaction in Reinforcement Learning

Current work in human-in-the-loop (HITL) reinforcement learning (RL) often assumes humans are noisily rational and unbiased. This paper argues that this assumption is too simplistic and calls for more realistic models that account for the personal, contextual, and dynamic nature of human feedback. The paper encourages interdisciplinary approaches to address these open questions and make HITL RL more robust.
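The “noisily rational” assumption is usually formalised as Boltzmann rationality: the probability that a human picks an option grows exponentially with its reward, scaled by a rationality parameter β. What follows is a minimal sketch, assuming a known scalar reward per option and a single fixed β (both simplifications of the kind the paper questions). The function names and reward values are hypothetical:

```python
import math


def boltzmann_choice_probs(rewards: list[float], beta: float) -> list[float]:
    """Boltzmann-rational choice: P(option i) is proportional to exp(beta * reward_i).

    beta = 0 gives uniform random choice; a large beta approaches always
    picking the highest-reward option.
    """
    scaled = [beta * r for r in rewards]
    # Shift by the maximum before exponentiating to avoid overflow.
    shift = max(scaled)
    weights = [math.exp(s - shift) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def preference_prob(reward_a: float, reward_b: float, beta: float) -> float:
    """Probability that a Boltzmann-rational human prefers A over B in a
    pairwise comparison (the two-option case, equivalent to a logistic model)."""
    return boltzmann_choice_probs([reward_a, reward_b], beta)[0]


# Hypothetical rewards for three candidate responses.
rewards = [1.0, 2.0, 3.0]
for beta in (0.0, 1.0, 10.0):
    probs = boltzmann_choice_probs(rewards, beta)
    print(beta, [round(p, 3) for p in probs])
```

Note what the model leaves out: one β and one fixed reward function for every person, context, and moment. These are exactly the personal, contextual, and dynamic aspects of feedback that the paper argues more realistic models should capture.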

To delve deeper, read the full summary here.

Looking before we leap: Expanding ethical review processes for AI and data science research

Products and services built through AI and data science research can substantially affect people’s lives, so that research must be conducted responsibly. In many corporate and academic research institutions, a primary mechanism for assessing and mitigating risks is the Research Ethics Committee (REC), known in some regions as an Institutional Review Board (IRB). This report explores the role of academic and corporate RECs in evaluating AI and data science research and offers recommendations to businesses, academia, policymakers, and funders working in this context.

To delve deeper, read the full summary here.

The philosophical basis of algorithmic recourse

Many things we care most about are modally robust because they systematically deliver correlative benefits across various counterfactual scenarios. We contend that recourse — the systematic capacity to reverse unfavorable decisions by algorithms and bureaucracies — is a modally robust good. In particular, we argue that two essential components of a good life — temporally extended agency and trust — are underwritten by recourse, and we offer a novel account of how to implement it in a morally and politically acceptable way.
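To make the notion of recourse concrete, the sketch below uses one standard technical formalisation: the smallest change to a person's features that flips a linear classifier's decision, restricted to features the person can actually act on. This is our illustrative choice, not the paper's own account, which is philosophical. The feature names, weights, and the choice of which features count as mutable are all hypothetical assumptions:

```python
from typing import Optional, Sequence


def linear_recourse(
    weights: Sequence[float],
    bias: float,
    x: Sequence[float],
    mutable: Sequence[bool],
    margin: float = 1e-6,
) -> Optional[list[float]]:
    """Smallest (Euclidean) change to the mutable features of x that raises
    the linear score w.x + b to `margin`, i.e. reverses an unfavorable decision.

    Returns a list of per-feature changes, or None if no recourse exists.
    """
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    if score >= margin:
        return [0.0] * len(x)  # decision is already favorable
    # Immutable features (e.g. age) get zero weight in the search direction.
    direction = [w if m else 0.0 for w, m in zip(weights, mutable)]
    norm_sq = sum(d * d for d in direction)
    if norm_sq == 0.0:
        return None  # nothing the person can change affects the decision
    # Moving along `direction` by this step raises the score exactly to `margin`.
    step = (margin - score) / norm_sq
    return [step * d for d in direction]


# Hypothetical loan model: features are [income in $10k, age in years].
weights, bias = [1.0, 0.1], -8.0
applicant = [4.0, 30.0]  # score = 4.0 + 3.0 - 8.0 = -1.0, so denied
print(linear_recourse(weights, bias, applicant, mutable=[True, False]))
print(linear_recourse(weights, bias, applicant, mutable=[False, False]))
```

The `None` branch is where the summary's concern becomes tangible: if only immutable features affect the score, the person has no way to reverse the decision through their own actions. Practical recourse methods also weigh the cost of different actions and causal links between features, both of which this sketch ignores.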

To delve deeper, read the full summary here.

📰 Article summaries:

How the media is covering ChatGPT

What happened: The article examines media coverage of generative AI chatbots like ChatGPT and the pattern of hype and sensationalism often associated with reporting on new technologies. It emphasizes a structural problem: coverage driven by companies' claims and by CEOs' efforts to control the narrative. The volume of coverage for ChatGPT has surpassed that of other technologies, possibly because of its direct implications for journalism and its potential to disrupt various industries.

Why it matters: The coverage of generative AI chatbots raises concerns about a lack of clarity and underscores the need to combat outrageous hype. Framing these technologies as sentient beings, or as having human-like attributes, can mislead the public and hinder important discussions on ethics, usage, and the future of work. There is a call for more sober reporting that addresses the consolidation of power and wealth among a small group of people and focuses on these tools' actual capabilities and limitations.

Between the lines: The media's sensationalized coverage of generative AI chatbots diverts attention from critical questions that should be addressed, such as newsrooms' potential dependence on big tech companies, governance decisions, ethics, and bias concerns, and the environmental impact of these tools. There is a need for better media representations that provide clarity on how these technologies work and their implications. Ground rules and style guides for newsrooms could help temper the hype cycle and promote more responsible coverage.

Inside 4chan’s Top-Secret Moderation Machine

What happened: The US House of Representatives’ January 6 committee subpoenaed 4chan, and the New York Attorney General's Office ordered the company to provide records, as part of investigations into its role in the Capitol assault and the Gendron terrorist attack. Internal 4chan documents obtained by WIRED reveal how the site's moderators, known as janitors, have used their power to shape and promote edgelord white supremacy, embracing its toxic influence by deliberate design.

Why it matters: While many social media platforms try to remove extremist content, 4chan operates with distinct rules and moderation practices that entrench its politics and culture, allowing and encouraging racism and extremist ideologies. The arbitrary application of rules and the permissive attitude towards racism on certain boards contribute to the platform's dangerous environment, where violence and hatred thrive.

Between the lines: In the past, 4chan followed a different trajectory under its founder's management, actively resisting racism and political extremism. As the site developed, however, it shifted towards a harder edge. By contrast, platforms like Reddit and Discord have taken steps to moderate and ban communities advocating racism and violence. 4chan appears reluctant to make significant changes, potentially enabling further acts of domestic terrorism by its users.

‘Fundamentally dangerous’: reversal of social media guardrails could prove disastrous for 2024 elections

What happened: Platforms like YouTube, Meta, and Twitter have made controversial decisions regarding content moderation, allowing the spread of misinformation and reinstating accounts known for disseminating false information. Experts warn that the convergence of increasing misinformation, scaled-back content moderation, and the rise of AI poses a significant risk to democracy, particularly in the context of the upcoming 2024 elections.

Why it matters: The decisions made by these platforms raise concerns about accountability and the potential impact on election integrity. The lack of transparency around moderation decisions and the algorithms' tendency to amplify and recommend misinformation further exacerbate the problem. The platforms' profit-driven motives and the use of generative AI to create and spread manipulated content add another layer of alarm, eroding trust in online information.

Between the lines: The precedence platforms give to engagement and revenue may help explain their reluctance to combat harmful political speech and misinformation effectively. The economic implications of enforcing rules and monetizing content create a disincentive for platforms to take stronger action. Additionally, generative AI raises concerns about further manipulation and distortion of public opinion, destabilizing the information ecosystem.

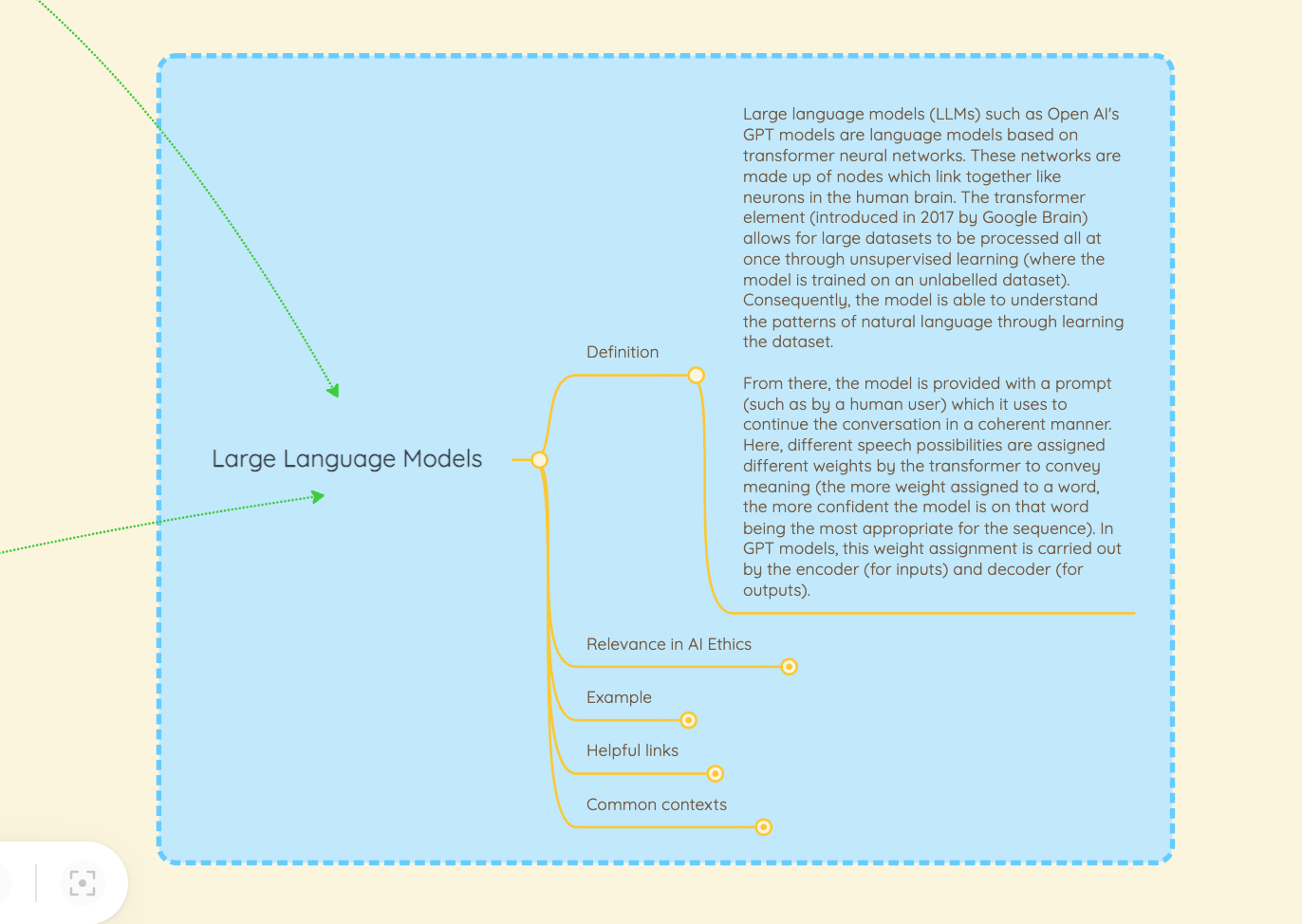

📖 From our Living Dictionary:

A simplified explanation of LLMs

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

Why ethical AI requires a future-ready and inclusive education system (World Economic Forum)

Research shows that marginalised groups are particularly susceptible to job loss or displacement due to automation.

We must address the fundamental issue of AI education and upskilling for these underrepresented groups.

Public and private sector stakeholders can collaborate to ensure an equitable future for all.

To delve deeper, read the full article here.

💡 In case you missed it:

System Safety and Artificial Intelligence

The governance of Artificial Intelligence (AI) systems is increasingly important to prevent emerging forms of harm and injustice. This paper presents an integrated system perspective based on lessons from the field of system safety, inspired by the work of system safety pioneer Nancy Leveson. For decades, this field has grappled with ensuring safety in systems subject to various forms of software-based automation. This tradition offers tangible methods and tools to assess and improve safeguards for designing and governing current-day AI systems and to point out open research, policy, and advocacy questions.

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.