AI Ethics Brief #116: Instagram Effect in ChatGPT, AI talent gap, many meanings of democratization of AI, and more ...

Should BigTech CEOs be involved in AI regulations?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can, to support us: become a paying subscriber for as little as $8/month. If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

This week’s overview:

🙋 Ask an AI Ethicist:

Should BigTech CEOs be involved with AI regulations?

✍️ What we’re thinking:

The Instagram Effect in ChatGPT

Can ChatGPT replace a Spanish or philosophy tutor?

🤔 One question we’re pondering:

What would games designed for AI systems look like?

🔬 Research summaries:

Beyond Bias and Compliance: Towards Individual Agency and Plurality of Ethics in AI

Towards Responsible AI in the Era of ChatGPT: A Reference Architecture for Designing Foundation Model based AI Systems

Democratising AI: Multiple Meanings, Goals, and Methods

📰 Article summaries:

We could run out of data to train AI language programs

When AI can make art – what does it mean for creativity?

The AI talent gap

📖 Living Dictionary:

What is the relevance of differential privacy to AI ethics?

🌐 From elsewhere on the web:

Emerging AI Governance is an Opportunity for Business Leaders to Accelerate Innovation and Profitability

💡 ICYMI

Can LLMs Enhance the Conversational AI Experience?

But first, a short message:

Hello everyone! It’s good to be back in your inboxes.

We’ve been away for a bit and a lot has changed. Generative AI has taken the world by storm (but you already knew that!)

It is now easier than ever to interact with articles and research papers in a Q&A format too. So, we’ve been examining our approach to this newsletter, keeping in mind your precious time (and generous support), so that we can still deliver value in an era with these monumental changes.

We’re doing the following to realize our mission of Democratizing AI Ethics Literacy:

All our content will remain human-written.

We do this with a view of bringing in original insights on research and reporting in the field and to honor the time you spend reading our work.

We are bringing in a couple of new sections (and supplementing existing sections) to increase original content for you:

(🚨 new) 🙋 Ask an AI Ethicist - this will feature and answer a question that is burning on the minds of our community.

(🚨 new) 🤔 One question we’re pondering - this will turn the tables and lean on you to help us better explore a question that is burning on our minds.

✍️ What we’re thinking - our long-running segment with op-ed, commentary, columns, and other insights to refine and elevate our thinking on Responsible AI.

We also invite you to share your thoughts with us using the blue buttons next to key pieces of content in this newsletter.

It is an opportunity for us to learn more from you!

🌐 And, an opportunity for you to exchange with the other 10k+ like-minded readers from 124 countries!

Finally, our most beloved content, the research and article summaries, will remain a staple of this newsletter.

🔬 Research summaries come to you from either (1) the authors of the original papers themselves or (2) a trusted group of researchers and writers who support the work of making scientific knowledge more accessible for all.

📰 Article summaries are curated by our staff to separate the signal from the noise in the current AI research and reporting landscape, helping you make better-informed decisions on all topics related to AI ethics.

🗓️ Expect to see us every week on Wednesdays in your inbox!

We’re glad to have your support and welcome any feedback on this approach. Thanks!!

🙋 Ask an AI Ethicist:

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours and we’ll answer it in the upcoming editions.

This week, our community asked: Should BigTech CEOs be involved with AI regulations?

A recent article in the Financial Times titled We need to keep CEOs away from AI regulation prompted our community members to reach out and ask whether BigTech CEOs should be involved in the formulation of AI regulations.

We believe the current framing creates an antagonistic narrative and potentially loses out on valuable insights that BigTech CEOs can bring to the conversation. The strongest argument against involving CEOs in the formulation of AI regulations is that they might skew the conversations toward directions that serve their business purposes. There is also the risk that they accelerate the adoption of AI in high-risk use cases by having those use cases downgraded to a category that doesn’t reflect the real risk.

Instead of outright exclusion, we should ask how we can involve CEOs in the AI regulations discussion while managing the risks identified above, and still benefit from insights they could bring, such as:

Latest developments and capabilities of AI systems that their firms are working on

Limitations of current AI systems as they’ve deployed them in their products and services

Failure modes that they’ve experienced along with frequencies and severities of those failures that can help make regulations more targeted and effective

Business constraints that will have economic impacts, such as internal firm incentives and employee structures and governance

Planned development efforts that can help make regulations more robust to capabilities and limitations changes

Ultimately, given core tenets of the Responsible AI community, such as inclusivity, reframing the narrative from hard-line exclusion to meaningful participation can help us arrive at better solutions for our shared purpose of using AI systems (or not using them at all when applicable) for human flourishing.

How can we minimize the risks identified above from deeper CEO involvement in AI regulations development? Share your thoughts with the MAIEI community:

✍️ What we’re thinking:

The Instagram Effect in ChatGPT

“We only see the final, picture-perfect outputs from ChatGPT (and other Generative AI systems), which skews our understanding of its real capabilities and limitations.”

A lot is required to achieve those shareable trophies of taming ChatGPT into producing what you want: tinkering, rejected drafts, invocations of the right spells (we mean prompts!), and learning from observing Twitter threads and Reddit forums. But, those early efforts remain hidden, a kind of survivorship bias, and we are lulled into a false sense of confidence in these systems being all-powerful.

To delve deeper, read the full article here.

Can ChatGPT replace a Spanish or philosophy tutor?

ChatGPT has been the language model on everyone’s lips since its launch. Claims have been made that it will revolutionize the education sector, potentially spelling the end of students writing their papers. So, as a philosophy and Spanish tutor, Connor Wright decided to see if ChatGPT would nullify his services.

To delve deeper, read the full article here.

🤔 One question we’re pondering:

What would games designed for AI systems look like?

A recent paper titled Voyager: An Open-Ended Embodied Agent with Large Language Models prompted an interesting discussion among our MAIEI staff: what might happen to the skill acquisition and development of AI agents if we built games and simulation environments optimized for their learning, rather than injecting them into existing games and simulation environments that were built for another purpose? In addition to “What would games designed for AI systems look like?”, we’re thinking about the following questions:

Would this lead to faster skill acquisition?

Would this lead to the acquisition of different skills that we can’t envision?

What would be the utility of those skills in the real world (if any)?

And what risks could such targeted games pose to the development trajectory of more capable AI systems?

We’d love to hear from you and share your thoughts back with everyone in the next edition:

🔬 Research summaries:

Beyond Bias and Compliance: Towards Individual Agency and Plurality of Ethics in AI

The fixation on bias and compliance within the AI ethics market leaves out actual ethics: leveraging moral principles to decide how to act. This paper examines the current AI ethics solutions and proposes an alternative method focused on ground truth for machines: data labeling. The paper builds a foundation for how machines understand reality, unlocking the means to integrate freedom of individual expression within AI systems.

To delve deeper, read the full summary here.

Towards Responsible AI in the Era of ChatGPT: A Reference Architecture for Designing Foundation Model based AI Systems

Incorporating foundation models into software products like ChatGPT raises significant concerns regarding responsible AI. This is primarily due to their opaque nature and rapid advances. Moreover, as foundation models’ capabilities are growing fast, they may eventually absorb other components of AI systems, which introduces challenges in architecture design, such as shifting boundaries and evolving interfaces. This paper proposes a responsible-AI-by-design reference architecture to tackle these challenges when designing foundation model-based AI systems.

To delve deeper, read the full summary here.

Democratising AI: Multiple Meanings, Goals, and Methods

Numerous parties are calling for “the democratization of AI,” but the phrase refers to various goals that sometimes conflict with one another. This paper identifies four kinds of “AI democratization” that are commonly discussed: (1) the democratization of AI use, (2) the democratization of AI development, (3) the democratization of AI profits, and (4) the democratization of AI governance. It then explores the goals and methods of achieving each. This paper provides a foundation for a more productive conversation about AI democratization efforts and highlights that AI democratization cannot and should not be equated with AI model dissemination.

To delve deeper, read the full summary here.

📰 Article summaries:

We could run out of data to train AI language programs

What happened: Text online from various sources, such as Wikipedia, articles, and books, has been and continues to be used to train language models. The goal has recently been to train these models on more data to make them more accurate, but AI forecasters are warning that we might run out of data to train them on by as early as 2026.

Why it matters: Language AI researchers filter the data used to train models into high-quality and low-quality, though the line between the two categories can sometimes be unclear. Researchers typically only train models using high-quality data because that is the type of language they want the models to reproduce, and this has led to impressive results for large language models such as GPT-4.

Between the lines: Reassessing the definitions of high- and low-quality could mean that AI researchers incorporate more diverse datasets into the training process. Moreover, large language models are currently trained on the same data just once due to performance and cost constraints. However, it may be possible to extend the life of data and train a model several times using the same data.
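To make the idea of "extending the life of data" concrete, here is a minimal sketch of the arithmetic behind training for several passes (epochs) over the same corpus. The function name `tokens_seen` and the corpus size are hypothetical, chosen only for illustration; they are not figures from the article.

```python
def tokens_seen(dataset_tokens: int, epochs: int) -> int:
    """Total number of training tokens processed over `epochs` passes."""
    if epochs < 1:
        raise ValueError("epochs must be at least 1")
    return dataset_tokens * epochs


# Hypothetical corpus size, used only for illustration.
DATASET_TOKENS = 1_000_000_000_000  # one trillion tokens of "high-quality" text

single_pass = tokens_seen(DATASET_TOKENS, epochs=1)  # the single-pass practice described above
four_passes = tokens_seen(DATASET_TOKENS, epochs=4)  # reusing the same data four times

print(f"1 epoch:  {single_pass:,} tokens processed")
print(f"4 epochs: {four_passes:,} tokens processed")
```

This only counts tokens processed. It does not show that a repeated token is worth as much as a fresh one.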

When AI can make art – what does it mean for creativity?

What happened: Reactions to the photorealism of compositions produced by AI image generator DALL-E 2 have ranged from unease to excitement. AI image generators turn written phrases into pictures through a process known as diffusion: large datasets are scraped together to train the AI so that it can concoct new content that resembles the training data but isn’t identical. For example, once it has seen millions of pictures of dogs, it can lay down pixels in the shape of a new dog that closely resembles those in the dataset.

Why it matters: Some artists are upset about the “theft of their artistic trademark” because the tools have already been trained on artists’ work without their consent. This phenomenon extends beyond artworks. Analysis of the training database for Stable Diffusion, one of DALL-E’s rivals, whose developers have chosen to open-source its code and share details of its image database, has revealed that it includes private medical photography, photos of members of the public, and pornography.

Between the lines: “Do these tools put an entire class of creatives at risk?” Artwork such as illustrations for books or album covers may face competition from AI, though the owners of AI image generators argue that these tools democratize art. These issues tie into the debate about how much we can credit AI with creativity. According to Marcus du Sautoy, an Oxford University mathematician, image generators come closest to replicating “combinational” creativity because the algorithms are taught to create new images in the same style as millions of others in the training data.

The AI talent gap

What happened: One of the main goals in U.S. academia and corporate tech is to ensure that more computer scientists and AI engineers come to study and work in the U.S. This article discusses how the U.S. is trying to attract Chinese AI talent. Recently, Big Tech and AI investors have been trying to entice China’s top computer scientists to the U.S., but this mission may backfire by actually alienating those Chinese researchers.

Why it matters: Survey data suggest that Chinese scientists in the U.S. feel estranged by the Trump administration’s China Initiative, which was perpetuated by anti-China national security voices. “A survey by the Asian American Scholar Forum of roughly 1,300 Chinese American scientific researchers in the U.S. involved in computer science and engineering, math, and other sciences found that 72% did not feel safe as an academic researcher.”

Between the lines: Although AI researchers in the U.S. and China have worked together for decades, things are starting to change. For example, at this year’s Association for Computing Machinery (ACM) MobiSys conference held in Oregon, work involving on-device machine learning by researchers from various universities and institutes in China was presented by proxies rather than the researchers themselves. Many are examining how the potential for more blockades may affect the pace of research in this field.

📖 From our Living Dictionary:

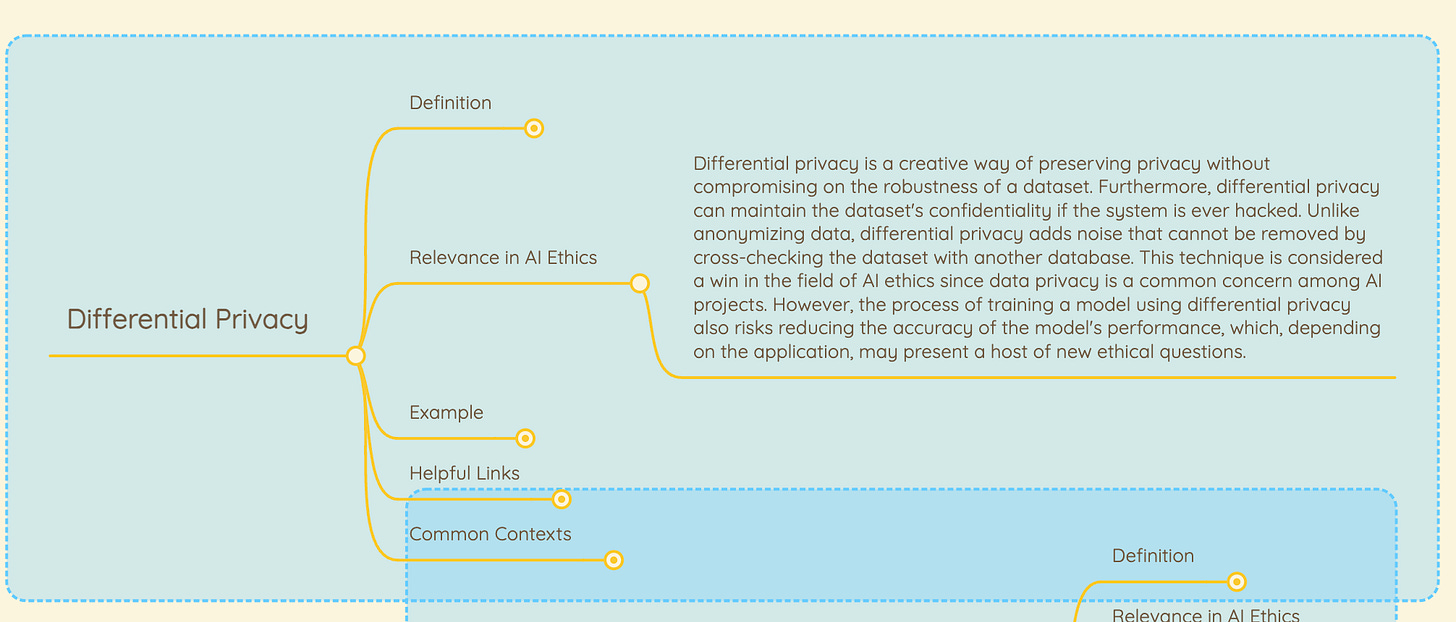

What is the relevance of differential privacy to AI ethics?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

Emerging AI Governance is an Opportunity for Business Leaders to Accelerate Innovation and Profitability

As AI capabilities rapidly advance, especially in generative AI, there is a growing need for systems of governance to ensure we develop AI responsibly in a way that is beneficial for society. Much of the current Responsible AI (RAI) discussion focuses on risk mitigation. Although important, this precautionary narrative overlooks the means through which regulation and governance can promote innovation.

If companies across industries take a proactive approach to corporate governance, we argue that this could boost innovation (similar to the whitepaper from the UK Government on a pro-innovation approach to AI regulation) and profitability for individual companies as well as for the entire industry that designs, develops, and deploys AI. This can be achieved through a variety of mechanisms we outline below, including increased quality of systems, project viability, a safety race to the top, usage feedback, and increased funding and signaling from governments.

Organizations that recognize this early can not only boost innovation and profitability sooner but also potentially benefit from a first-mover advantage.

To delve deeper, read the full article here.

💡 In case you missed it:

Can LLMs Enhance the Conversational AI Experience?

During conversations, people sometimes finish each other’s sentences. With the advent of large language models (LLMs), that ability is now available to machines. Large language models are distinct from other language models due to their size: they are typically several gigabytes and billions of parameters larger than their predecessors. By learning from colossal chunks of data, which could come as text, image, or video, these LLMs are poised to notice a thing or two about how people communicate.
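As a rough illustration of how parameter counts relate to gigabytes, the sketch below multiplies the number of parameters by the bytes used to store each one. The function name `model_size_gb`, the 2-bytes-per-parameter default (16-bit numbers), and the parameter counts are assumptions made for this example, not figures from the article.

```python
def model_size_gb(num_parameters: int, bytes_per_parameter: int = 2) -> float:
    """Approximate storage for a model's weights, in gigabytes (1 GB = 1e9 bytes).

    Assumes every parameter is stored at the same precision; the default of
    2 bytes corresponds to 16-bit numbers.
    """
    return num_parameters * bytes_per_parameter / 1e9


# Hypothetical parameter counts, used only for illustration.
for label, params in [("100 million", 100_000_000),
                      ("1 billion", 1_000_000_000),
                      ("100 billion", 100_000_000_000)]:
    print(f"{label} parameters ≈ {model_size_gb(params):g} GB of weights")
```

Under these assumptions, every additional billion parameters adds about two gigabytes of weights. Actual file sizes depend on the storage precision a given model uses.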

Conversational AI products, such as chatbots and voice assistants, are the prime beneficiaries of this technology. OpenAI’s GPT-3, for example, can generate text or code from short prompts entered by users. OpenAI recently released ChatGPT, a version optimized for dialogue. This is one of many models driving the field of generative AI, where the “text2anything” phenomenon is letting people describe an image or idea in a few words and letting AI output its best guesses. Using this capability, bots and assistants could generate creative, useful responses to anyone conversing.

However, LLMs have their faults. Beyond the lack of transparency in training these models, the costs are typically exorbitant for all but massive enterprises. There are also several instances of them fabricating scientific knowledge and promoting discriminatory ideals. While this technology is promising, designers of conversational AI products must carefully assess what LLMs can do and whether that creates a beneficial user experience.

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.

Your readers may be interested in the following paper that discusses the impact of generative AI on the justice system. See https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4460184. Thanks.

Q: Are there any good resources that distill the system-level view of LLM-based AI systems that you are aware of?

A: A useful source is this explainer from the Ada Lovelace Institute; see the 'foundation model supply chain' graphic: https://www.adalovelaceinstitute.org/resource/foundation-models-explainer/