AI Ethics Brief #131: Auditing for human expertise, Waymo's approach to safety, moral machines or tyranny of the majority, and more.

Are we ready for a multispecies Westworld?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week, we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for the price of a couple of ☕.

If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

This week’s overview:

🙋 Ask an AI Ethicist:

What are some key considerations in designing a policy around responsible AI use within an organization?

✍️ What we’re thinking:

Are we ready for a multispecies Westworld?

🤔 One question we’re pondering:

Does a global or local governance and lawmaking approach better accommodate the appropriate context for social benefit determination?

🔬 Research summaries:

REAL ML: Recognizing, Exploring, and Articulating Limitations of Machine Learning Research

Auditing for Human Expertise

Building a Credible Case for Safety: Waymo’s Approach for the Determination of Absence of Unreasonable Risk

📰 Article summaries:

How Silicon Valley doomers are shaping Rishi Sunak’s AI plans – POLITICO

AI incident response plans: Not just for security anymore

Google’s Bard Just Got More Powerful. It’s Still Erratic. - The New York Times

📖 Living Dictionary:

What are AI text-detection tools?

🌐 From elsewhere on the web:

Wyden, Booker, and Clarke Introduce Bill to Regulate Use of Artificial Intelligence to Make Critical Decisions like Housing, Employment and Education

💡 ICYMI

Moral Machine or Tyranny of the Majority?

🚨 The Responsible AI Bulletin

We’ve restarted our sister publication, The Responsible AI Bulletin, as a fast-digest every Sunday for those who want even more content beyond The AI Ethics Brief. (Our lovely power readers 🏋🏽, thank you for writing in and requesting it!)

The focus of the Bulletin is to give you a quick dose of the latest research papers that caught our attention in addition to the ones covered here.

🙋 Ask an AI Ethicist:

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours, and we’ll answer it in the upcoming editions.

Here are the results from the previous edition for this segment:

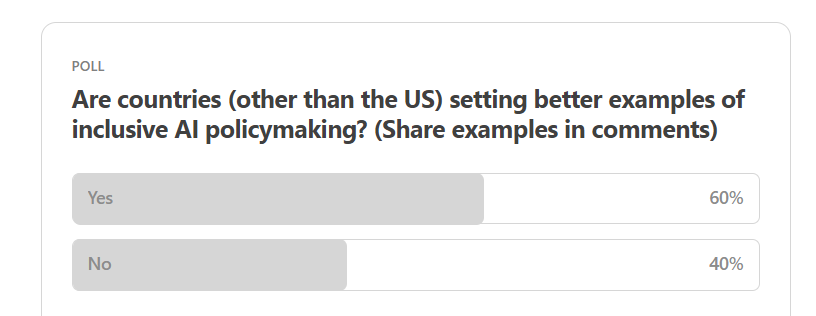

An interesting outcome from last week’s poll, given the flurry of AI policymaking activity in the US. We will have to wait and see how other parts of the world approach the thorny issue of balancing AI’s innovation potential against the risks and harms posed by unmitigated deployment.

This week, one of our readers shared a very relevant question that senior leadership in their organization had asked them while creating a new policy for AI use: “What are some key considerations in designing a policy around responsible AI use within an organization?”

- Align with organizational values and goals. The policy should reflect the company's core values, principles, and objectives. It can help guide ethical AI practices that align with the organization's mission.

- Prioritize fairness, transparency, and accountability. The policy should promote developing AI systems that are fair, transparent, and accountable. It should help mitigate bias, address gaps in explainability, and reduce potential harms.

- Establish oversight procedures. The policy could establish procedures for reviewing and approving AI systems before deployment. This provides governance and ensures that AI systems meet the organization's standards.

- Address data practices. Detail proper data collection, storage, usage, and deletion protocols. Include considerations around consent, privacy, security, and data biases.

- Outline impact assessments. Require impact assessments for high-risk AI systems to evaluate their implications for fairness, bias, privacy, security, and other areas. These assessments can follow a risk-tiered approach like the one set out in the EU AI Act.

- Create grievance redressal mechanisms. Implement channels for feedback and redressal for those negatively impacted by the organization's AI systems.

- Mandate documentation and reporting. Require documentation of each AI system's development, functionality, and limitations. Mandate regular reporting on responsible AI practices.

- Assign responsibility. Designate roles to oversee policy implementation, compliance, and reporting. Provide resources and training to build organizational capacity.

- Review and update continually. Set procedures for regularly reviewing the policy's effectiveness and updating it based on learnings, stakeholder feedback, and evolving best practices.

Are there any items that we’ve missed? Share your thoughts with the MAIEI community:

✍️ What we’re thinking:

Are we ready for a multispecies Westworld?

This column discusses the ethical implications of creating artificial animals to replace real animals held in captivity for entertainment and education. While this technology could reduce the suffering of biological animals in captivity, artificial animals may undermine the purpose of zoos and could eventually become sentient beings that are themselves capable of being harmed. The authors conclude that we should pursue this technology cautiously, limiting risks such as those arising from moral uncertainty, and should not treat artificial animals as an adequate alternative to phasing out zoos and aquariums.

To delve deeper, read the full article here.

🤔 One question we’re pondering:

The fast pace of AI progress requires flexible but principled governance. Laws should focus on managing risks while encouraging AI innovation for social benefit. Technical expertise and multistakeholder perspectives are essential for balanced AI policymaking. Does a global or local governance and lawmaking approach better accommodate the appropriate context for social benefit determination?

We’d love to hear from you and share your thoughts with everyone in the next edition:

🔬 Research summaries:

REAL ML: Recognizing, Exploring, and Articulating Limitations of Machine Learning Research

Limitations are inherent in conducting research; transparency about these limitations can improve scientific rigor, help ensure appropriate interpretation of research findings, and make research claims more credible. However, the machine learning (ML) research community lacks well-developed norms around disclosing and discussing limitations. This paper introduces REAL ML, a set of guided activities to help ML researchers recognize, explore, and articulate the limitations of their research—co-designed with 30 researchers from the ML community.

To delve deeper, read the full summary here.

Auditing for Human Expertise

In this work, we develop a statistical framework to test whether an expert tasked with making predictions (e.g., a doctor making patient diagnoses) incorporates information unavailable to any competing predictive algorithm. This ‘information’ may be implicit; for example, experts often exercise judgment or rely on intuition, which is difficult to model with an algorithmic prediction rule. Rejecting the null hypothesis of our test thus suggests that human experts may add value to any algorithm trained on the available data. This implies that optimal performance for the given prediction task will require incorporating expert feedback.
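To make the idea of such a test more concrete, here is a minimal Python sketch of one way to probe it: a paired permutation test. It is a hypothetical simplification, not the paper's exact procedure. We assume one-dimensional features, pair units that sit next to each other when sorted by feature value (treating each pair as indistinguishable to any algorithm trained on those features), and use squared error as the loss. The function name `paired_swap_test` and its parameters are illustrative.

```python
import random
from typing import List, Sequence


def paired_swap_test(
    features: Sequence[float],
    expert_preds: Sequence[float],
    outcomes: Sequence[float],
    num_resamples: int = 1000,
    seed: int = 0,
) -> float:
    """Permutation p-value for H0: the expert adds nothing beyond the features.

    Hypothetical simplification: features are 1-D, and units are paired with
    their neighbor after sorting by feature value, so each pair is treated as
    indistinguishable to any algorithm trained on these features.
    """
    n = len(features)
    if not (n == len(expert_preds) == len(outcomes)) or n < 2:
        raise ValueError("inputs must have equal length >= 2")
    order = sorted(range(n), key=lambda i: features[i])
    # Pair adjacent units in sorted order; the last unit is dropped if n is odd.
    pairs = [(order[k], order[k + 1]) for k in range(0, n - 1, 2)]
    paired_idx = [i for pair in pairs for i in pair]

    def loss(preds: List[float]) -> float:
        # Mean squared error over the paired units only.
        return sum((preds[i] - outcomes[i]) ** 2 for i in paired_idx) / len(paired_idx)

    observed = loss(list(expert_preds))
    rng = random.Random(seed)
    at_least_as_good = 0
    for _ in range(num_resamples):
        swapped = list(expert_preds)
        for i, j in pairs:
            # Under H0, swapping predictions within a pair should not matter.
            if rng.random() < 0.5:
                swapped[i], swapped[j] = swapped[j], swapped[i]
        if loss(swapped) <= observed:
            at_least_as_good += 1
    # A small p-value means the expert's predictions fit the outcomes better
    # than swapping between near-identical units would, hinting at extra information.
    return (1 + at_least_as_good) / (1 + num_resamples)
```

If the expert's predictions depend only on the features and paired units share the same features, swapping changes nothing and the p-value is 1; a very small p-value is the kind of rejection the summary describes.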

To delve deeper, read the full summary here.

Building a Credible Case for Safety: Waymo’s Approach for the Determination of Absence of Unreasonable Risk

Autonomous driving technology holds the potential to revolutionize on-road transportation. It can break down barriers to mobility access for disabled individuals, improve the safety of all road users, and transform the way we travel from point A to point B. But how do we assess the safety of an autonomous vehicle and judge that a company has done sufficient due diligence to field a product on public roads? A safety case helps answer those questions, and this paper unpacks the approach taken by Waymo (formerly the Google Self-Driving Car Project).

To delve deeper, read the full summary here.

📰 Article summaries:

How Silicon Valley doomers are shaping Rishi Sunak’s AI plans – POLITICO

What happened: Initially, the UK government took a relatively relaxed stance on the rise of AI, briefly addressing its "existential risks" in a white paper that described them as "high impact, low probability." Within six months, however, Prime Minister Rishi Sunak has voiced greater concern about the potential dangers posed by AI. He has announced an international AI Safety Summit, established an AI safety taskforce, and started talking about "existential risk." This shift is partly due to the influence of the effective altruism (EA) movement, which argues that super-intelligent AI could lead either to utopia or to annihilation.

Why it matters: While the focus on existential risks from super-intelligent AI has gained prominence, some researchers worry that it has overshadowed more immediate concerns about today's AI models, including bias, data privacy, and copyright. Critics argue that the EA movement's claims, which are especially influential in Silicon Valley, lack evidence and can be alarmist. They also raise concerns about potential conflicts of interest when EA principles are intertwined with AI companies, which could lead to regulatory capture.

Between the lines: The EA movement's influence on AI policy in the UK has prompted discussions about regulating advanced AI models, including proposals to license the most powerful ones. Such ideas are gaining traction, as seen in the formation of the "Frontier AI Taskforce" and the government's focus on regulating "frontier AI." However, perspectives differ on whether the government's shift in AI policy is driven primarily by external circumstances or by the effectiveness of the EA lobby. Some argue that the world's challenges have intensified, making existential risk considerations more relevant. Others point out that the EA movement has campaigned on various risks, including AI, for years, and that the government is now more receptive to its concerns.

AI incident response plans: Not just for security anymore

What happened: The article highlights the importance of preparing for failures in AI systems, emphasizing that organizations building or deploying AI need to anticipate these failures and manage them effectively. It discusses various types of AI failures, including security breaches, discriminatory outcomes, privacy violations, and lack of transparency, and draws attention to their potential legal, financial, and reputational consequences. It also underscores the importance of maintaining accurate data for AI systems.

Why it matters: Keeping AI models accurate over time is a significant concern, particularly when circumstances change abruptly, as they did during the COVID-19 pandemic. Complex AI systems, such as deep neural networks, can be difficult to troubleshoot when errors occur because their outputs are probabilistic and uncertain. The article also highlights security risks specific to AI systems, such as model extraction and adversarial attacks. To address these challenges, it recommends inventorying AI systems, establishing baselines for model operations, and adapting traditional incident response processes.

Between the lines: In the realm of AI, failures are inevitable but should not lead to catastrophic outcomes. Organizations must proactively prepare for AI failures to ensure controlled and timely responses. These failures can range from technical issues to ethical concerns, affecting users, consumers, and society. The complexity of AI systems makes it difficult to pinpoint errors, and there is a need for continuous monitoring, recalibration, and a focus on critical models. Building an incident response plan based on AI inventory and model performance baselines is recommended to address these unique challenges effectively.
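The two building blocks the article recommends, an inventory of AI systems and performance baselines, can be sketched together in a few lines of Python. This is a minimal illustration under our own assumptions: the `ModelRecord` fields, the use of a single daily metric per model, and the rule of flagging a model when its metric drops more than `k` standard deviations below its baseline mean are all hypothetical, not taken from the article.

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import Dict, List, Sequence


@dataclass
class ModelRecord:
    """Hypothetical inventory entry: one row per deployed AI system."""
    name: str
    owner: str
    criticality: str  # illustrative labels, e.g. "high" or "low"
    baseline: Sequence[float]  # historical values of a monitored metric, e.g. daily accuracy


def needs_incident_review(record: ModelRecord, current: float, k: float = 3.0) -> bool:
    """Assumed trigger rule: flag the model when today's metric falls more than
    k standard deviations below its baseline mean (needs >= 2 baseline values)."""
    mu = mean(record.baseline)
    sigma = stdev(record.baseline)
    return current < mu - k * sigma


def triage(inventory: List[ModelRecord], today: Dict[str, float]) -> List[str]:
    """Return the names of flagged models, high-criticality models first."""
    flagged = [
        r for r in inventory
        if r.name in today and needs_incident_review(r, today[r.name])
    ]
    # False sorts before True, so "high" models come first; the sort is stable.
    flagged.sort(key=lambda r: r.criticality != "high")
    return [r.name for r in flagged]
```

Ordering flagged models by criticality reflects the article's advice to focus on critical models; in practice, each inventory entry would also need an owner-specific escalation path and response playbook.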

Google’s Bard Just Got More Powerful. It’s Still Erratic. - The New York Times

What happened: This week, Bard, Google's competitor to ChatGPT, received an upgrade introducing a new feature called Bard Extensions. This feature enables the AI chatbot to connect with a user's Gmail, Google Docs, and Google Drive accounts. It addresses a common limitation of AI chatbots, which usually function in isolation, unable to access users' calendars, emails, or other relevant information necessary to provide comprehensive assistance.

Why it matters: Bard Extensions is currently available only for personal Google accounts, and users must activate it in the app's settings. It is also limited to English for now. Although Google says it does not use this personal data to train Bard or show it to the employees who review Bard's responses, users are advised to be cautious about sharing sensitive data.

Between the lines: Bard demonstrated competence in simple tasks, such as summarizing recent emails or responding to specific email-related queries. However, it faced challenges with more complex tasks. For instance, when asked to summarize the 20 most important emails, it included seemingly random ones. It also struggled with specific requests like drafting responses and generating a list of the user's most-emailed contacts. Despite these initial hiccups, Google expects Bard to improve over time and envisions AI assistants evolving into collaborative tools that enhance users' lives through data-driven tasks.

📖 From our Living Dictionary:

What are AI text-detection tools?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

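Wyden, Booker, and Clarke Introduce Bill to Regulate Use of Artificial Intelligence to Make Critical Decisions like Housing, Employment and Education

Algorithmic Accountability Act Requires Assessment of Critical Algorithms and New Transparency About When and How AI is Used; Bill Endorsed by AI Experts and Advocates; Sets the Stage For Future Oversight and Legislation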

To delve deeper, read the full article here.

💡 In case you missed it:

Moral Machine or Tyranny of the Majority?

Given the increased application of AI and ML systems in situations that may involve tradeoffs between undesirable outcomes (e.g., whose lives to prioritize in the face of an autonomous vehicle accident, which loan applicant(s) can receive a life-changing loan), some researchers have turned to stakeholder preference elicitation strategies. By eliciting and aggregating preferences to derive ML models that agree with stakeholders’ input, these researchers hope to create systems that navigate ethical dilemmas in accordance with stakeholders’ beliefs. However, in our paper, we demonstrate via a case study of a popular setup that preference aggregation is a nontrivial problem, and if a proposed aggregation method is not robust, applying it could yield results such as the tyranny of the majority that one may want to avoid.
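The failure mode the paper warns about can be illustrated with the simplest aggregation rule of all. The Python sketch below is not the aggregation method studied in the paper; it assumes plain per-dilemma majority voting over a hypothetical set of stakeholders. When one group forms a consistent majority, the aggregate sides with it in every dilemma, and the minority's preferences are never reflected.

```python
from collections import Counter
from typing import Dict, List


def majority_aggregate(votes: Dict[str, List[str]]) -> List[str]:
    """Pick, for each dilemma, the option chosen by the most stakeholders.

    votes maps a stakeholder id to that stakeholder's choice in each dilemma.
    Ties go to the option encountered first (Counter.most_common ordering).
    """
    if not votes:
        return []
    num_dilemmas = len(next(iter(votes.values())))
    decisions = []
    for d in range(num_dilemmas):
        tally = Counter(choices[d] for choices in votes.values())
        decisions.append(tally.most_common(1)[0][0])
    return decisions


def agreement_rate(choices: List[str], decisions: List[str]) -> float:
    """Fraction of dilemmas in which the aggregate matches one stakeholder."""
    return sum(c == d for c, d in zip(choices, decisions)) / len(decisions)


if __name__ == "__main__":
    # Hypothetical electorate: six stakeholders in group A and four in group B
    # who disagree on every one of five dilemmas.
    votes = {f"a{i}": ["swerve"] * 5 for i in range(6)}
    votes.update({f"b{i}": ["stay"] * 5 for i in range(4)})
    decisions = majority_aggregate(votes)
    print(decisions)  # ['swerve', 'swerve', 'swerve', 'swerve', 'swerve']
    print(agreement_rate(votes["b0"], decisions))  # 0.0
```

Here 40% of the stakeholders agree with none of the aggregate decisions, which is the tyranny-of-the-majority outcome in its starkest form; more sophisticated aggregation methods aim to avoid it, but the paper's point is that they must be checked for robustness.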

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.