AI Ethics Brief #132: AI's carbon conundrum, attacking fake news detectors, automating extremism, ethics of generative audio models, and more.

What are some safe piloting strategies that can help organizations navigate this trade-off?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week, we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for the price of a couple of ☕.

If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

This week’s overview:

🙋 Ask an AI Ethicist:

In cases where organizations can truly benefit from using AI, how do we make a case for AI adoption while still emphasizing the need for dedicated investments in fundamentals to meet responsible AI requirements?

✍️ What we’re thinking:

The Carbon Conundrum and Ethical Quandaries in the Expanding Realm of AI

🤔 One question we’re pondering:

What are some safe piloting strategies that can help organizations navigate this trade-off?

🔬 Research summaries:

Automating Extremism: Mapping the Affective Roles of Artificial Agents in Online Radicalization

Attacking Fake News Detectors via Manipulating News Social Engagement

Impacts of Differential Privacy on Fostering More Racially and Ethnically Diverse Elementary Schools

📰 Article summaries:

AI-Generated 'Subliminal Messages' Are Going Viral. Here's What's Really Going On

‘We have a bias problem’: California bill addresses race and gender in venture capital funding

I asked Meta's A.I. chatbot what it thought of my books. What I learned was deeply worrying.

📖 Living Dictionary:

What is the relevance of AI text-detection tools in AI ethics?

🌐 From elsewhere on the web:

AI chatbots let you 'interview' historical figures like Harriet Tubman. That's probably not a good idea.

Open-Sourcing Highly Capable Foundation Models

💡 ICYMI

The Ethical Implications of Generative Audio Models: A Systematic Literature Review

🚨 The Responsible AI Bulletin

We’ve restarted our sister publication, The Responsible AI Bulletin, as a fast-digest every Sunday for those who want even more content beyond The AI Ethics Brief. (Our lovely power readers 🏋🏽, thank you for writing in and requesting it!)

The focus of the Bulletin is to give you a quick dose of the latest research papers that caught our attention in addition to the ones covered here.

🙋 Ask an AI Ethicist:

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours, and we’ll answer it in the upcoming editions.

We missed including a poll for last week’s edition. Still, we had some great email exchanges from our readers on the core question: “What are some key considerations in designing a policy around responsible AI use within an organization?”

One of our readers wrote to us asking about a challenge that they’re facing at a Fortune 500 company: In cases where organizations can truly benefit from using AI, how do we make a case for AI adoption while still emphasizing the need for dedicated investments in fundamentals to meet responsible AI requirements?

Some recommendations would include:

1. Financial incentives - Seek tax credits, grants, and subsidies to offset the costs of procuring AI solutions and training employees. This reduces barriers to adoption for businesses.

2. Awareness programs - Seek out ecosystem-wide campaigns showcasing AI success stories and benefits to make businesses more receptive to adoption. Also, educate on risks to mitigate concerns.

3. Support networks - Facilitate peer learning by creating spaces/channels for companies to share AI adoption experiences and best practices.

4. Accessible AI infrastructure - Invest in shared data repositories, computing resources, tools, and sandboxes that provide affordable access for organizations with limited in-house AI capacity.

5. Talent development - Expand educational initiatives like vocational training, apprenticeships, and incentives for re-skilling employees to work alongside AI.

What are some recommendations that have worked in your organization? Share your thoughts with the MAIEI community.

✍️ What we’re thinking:

The Carbon Conundrum and Ethical Quandaries in the Expanding Realm of AI

AI systems require significant computational resources for various tasks such as training, hyperparameter optimization, and inference. They rely on vast amounts of data, often requiring specialized hardware and data center facilities, consuming electricity and contributing to carbon emissions. The substantial carbon footprint raises concerns about environmental sustainability and social justice implications.

As AI models continue to grow in size, the pace of compute consumption is increasing exponentially. For instance, high-compute AI systems have doubled their consumption every 3.4 months, and this rate may even be faster now. Despite the rapid increase in computational requirements, the improvements in performance achieved by these large-scale AI models are only marginal. Moreover, large-scale AI models introduce challenges such as biases, privacy concerns, vulnerability to attacks, and high training costs.
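
To make the doubling figure above concrete, here is a minimal sketch of the arithmetic. It assumes the 3.4-month doubling time quoted in the text holds constant over the period, which is a simplification; the function name `growth_factor` is ours, for illustration only.

```python
def growth_factor(doubling_months: float, elapsed_months: float) -> float:
    """Multiplicative growth after elapsed_months, assuming a fixed doubling time."""
    return 2.0 ** (elapsed_months / doubling_months)


DOUBLING_MONTHS = 3.4  # figure quoted in the text; assumed constant here

one_year = growth_factor(DOUBLING_MONTHS, 12)
two_years = growth_factor(DOUBLING_MONTHS, 24)

print(f"Growth after 1 year:  ~{one_year:.1f}x")
print(f"Growth after 2 years: ~{two_years:.0f}x")
```

Under that assumption, compute consumption grows by a factor of roughly 11 to 12 in a single year, and that factor compounds each year after.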

These challenges are compounded by the fact that the models are widely accessible through public application programming interfaces (APIs), which limits the ability to address problems downstream. Because these models are used so extensively and give users little control, public API access amplifies the risk of widespread bias, errors, and potential privacy breaches. It also raises concerns about security vulnerabilities, scalability costs, and ethical challenges across diverse applications and user groups.

To delve deeper, read the full article here.

🤔 One question we’re pondering:

Many organizations are struggling with the overwhelming demand from top-level executives to start using Generative AI. At the same time, many risks need to be addressed. What are some safe piloting strategies that can help organizations navigate this trade-off?

We’d love to hear from you and share your thoughts with everyone in the next edition.

🔬 Research summaries:

Automating Extremism: Mapping the Affective Roles of Artificial Agents in Online Radicalization

Conversational AI bots are now augmenting and substituting human efforts across various fields, including advertising, finance, mental health counseling, dating, and wellness. This paper suggests that even Jihadis and neo-Nazis may not be safe from the AI job takeover. As conversational bots continue to evolve, the authors warn that they have become a vital radicalization and recruitment tool for extremist organizations.

To delve deeper, read the full summary here.

Attacking Fake News Detectors via Manipulating News Social Engagement

Although recent works have exploited the vulnerability of text-based misinformation detectors, the robustness of social-context-based detectors has not yet been extensively studied. In light of this, we propose a multi-agent reinforcement learning framework to probe the robustness of existing social-context-based detectors. We offer valuable insights to enhance misinformation detectors’ reliability and trustworthiness by evaluating our method on two real-world misinformation datasets.

To delve deeper, read the full summary here.

Impacts of Differential Privacy on Fostering More Racially and Ethnically Diverse Elementary Schools

In the past decade, differential privacy, the de facto standard for privacy protection, has gained prevalence among statistical agencies (e.g., US Census Bureau) and corporations (e.g., Apple and Google), particularly in releasing sensitive information. This paper studies one under-explored yet important application based on demographic information—the redrawing of elementary school attendance boundaries (“redistricting”) for racial and ethnic diversity—and evaluates how and to what extent privacy-protected demographic information might impact racial and socioeconomic integration efforts across US public schools.
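
For readers unfamiliar with how differential privacy perturbs demographic counts, the sketch below shows the textbook Laplace mechanism on a small histogram. It is an illustration only: it is not the method used in the paper or by any statistical agency, the enrollment numbers are made up, and the names `laplace_noise` and `privatize_counts` are hypothetical.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def privatize_counts(counts, epsilon, rng, sensitivity=1.0):
    """Add Laplace noise with scale = sensitivity / epsilon to each count.

    Assumes each person appears in exactly one group, so adding or
    removing one person changes the histogram by at most 1.
    """
    scale = sensitivity / epsilon
    return {group: n + laplace_noise(scale, rng) for group, n in counts.items()}


# Hypothetical enrollment counts for one attendance zone (made-up numbers).
true_counts = {"group_a": 120, "group_b": 45, "group_c": 8}

rng = random.Random(0)  # fixed seed so the illustration is repeatable
for epsilon in (0.1, 1.0):
    noisy = privatize_counts(true_counts, epsilon, rng)
    print(epsilon, {group: round(value, 1) for group, value in noisy.items()})
```

A smaller epsilon means stronger privacy but larger noise. Since the noise scale is the same for every group, small groups are distorted proportionally more than large ones.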

To delve deeper, read the full summary here.

📰 Article summaries:

AI-Generated 'Subliminal Messages' Are Going Viral. Here's What's Really Going On

What happened: A new generative AI technique called ControlNet has gained attention on social media, allowing users to create optical illusions and incorporate images or words within other images. Some users have referred to this as a way to create "hidden messages" within generated images, such as embedding a McDonald's "M" logo within a movie poster outline.

Why it matters: ControlNet works with the AI image-generation tool Stable Diffusion, and the technique was initially used to generate intricate QR codes. Users have expanded upon this by developing a process that lets them specify any image or text as a black-and-white mask, integrating it into the generated image, similar to Photoshop's masking tool. This raises questions about the potential applications of this technology, particularly in advertising and visual messaging.

Between the lines: While ControlNet has sparked excitement, it's essential to recognize that its real-world impact may not be as extreme as the initial hype suggests. It is unlikely to lead to brands hiding their logos within seemingly innocent images to manipulate viewers subliminally. However, it may still find practical use in advertising and generate discussions about double meanings and visual intrigue in ads.

‘We have a bias problem’: California bill addresses race and gender in venture capital funding

What happened: A California bill awaiting Governor Gavin Newsom's signature would require venture capital firms to disclose the race and gender of the founders of the companies they fund. The legislation has faced opposition from the business community, but civil rights groups and female entrepreneurs support it as a way to address the significant gender and racial disparities in startup funding, particularly in Silicon Valley.

Why it matters: This legislation is significant in the context of the ongoing discussions about racial and gender bias in AI products, which have received substantial venture capital investment. It aligns with the Biden administration's efforts to combat algorithmic discrimination and promote equity. If the bill becomes law, venture firms must provide demographic data by March 1, 2025, with potential penalties for non-compliance.

Between the lines: The California legislation emerges amid a broader national debate on government initiatives aimed at promoting diversity and equity. It comes after the US Supreme Court's ruling against racial and gender preferences in college admissions and legal challenges to similar preferences in various areas. The proposed California law does not involve preferences but focuses on transparency and data collection. While affirmative action has been banned in California for years, this legislation could enhance public accountability and encourage firms to adjust their practices to address disparities.

I asked Meta's A.I. chatbot what it thought of my books. What I learned was deeply worrying.

What happened: The author discovered that Meta, the parent company of Facebook, had downloaded 183,000 books to teach its AI machines how to write. Curious about whether his own books were included, he used a search tool and found three books were part of this database while three others were not. Additionally, there was confusion between the author and another person with the same name.

Why it matters: The situation raises concerns about how AI systems manage and categorize information. The author suggests that AI software often tries to provide answers that cater to user expectations rather than accurately assessing complex situations. This raises questions about the AI's ability to create literature, technical manuals, or content requiring nuanced understanding from a vast database. The criteria used to select books for this AI database, such as cost and availability, also prompt questions about Meta's intentions.

Between the lines: The author questions the AI's ability to discern subtle differences, as it failed to distinguish between two individuals with the same name despite their different backgrounds and professions. The author also points out that criteria like cost and availability seem irrelevant for a database meant to teach machines how to write. This suggests that Meta may have additional purposes, potentially involving cataloging, reproducing, or selling existing books, which raises important ethical and copyright considerations.

📖 From our Living Dictionary:

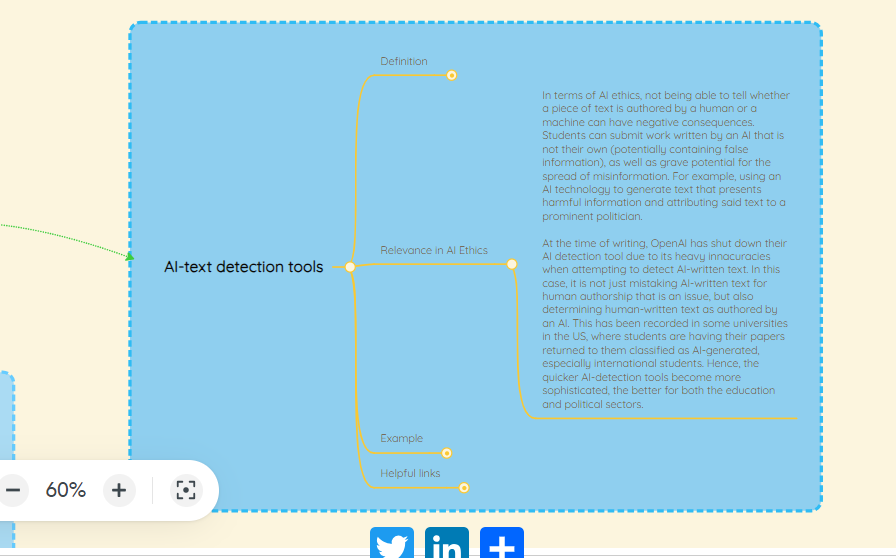

What is the relevance of AI text-detection tools in AI ethics?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

AI chatbots let you 'interview' historical figures like Harriet Tubman. That's probably not a good idea.

But the bots present a major problem for teachers and students alike, as they "often provide a poor representation and imitation of a person's true identity," Abhishek Gupta, the founder and a principal researcher at Montreal AI Ethics Institute, told Insider by email.

Gupta said that to use the bots in an ethical manner, they would at least need defined inputs and a "retrieval-augmented approach," which could "help ensure that the conversations remain within historically accurate boundaries."

It's also "crucial to have extensive and detailed data in order to capture the relevant tone and authentic views of the person being represented," Gupta said.

Gupta also pointed to a deeper issue with using bots as educational tools.

He said that overreliance on the bots could lead to "a decline in critical-reading skills" and affect our abilities "to assimilate, synthesize, and create new ideas" as students may start to engage less with original source materials.

"Instead of actively engaging with the text to develop their own understanding and placing it within the context of other literature and references, individuals may simply rely on the chatbot for answers," he wrote.

To delve deeper, read the full article here.

Open-Sourcing Highly Capable Foundation Models

Recent decisions by leading AI labs to either open-source their models or to restrict access to their models have sparked debate about whether, and how, increasingly capable AI models should be shared. Open-sourcing in AI typically refers to making model architecture and weights freely and publicly accessible for anyone to modify, study, build on, and use. This offers advantages such as enabling external oversight, accelerating progress, and decentralizing control over AI development and use. However, it also presents a growing potential for misuse and unintended consequences. This paper offers an examination of the risks and benefits of open-sourcing highly capable foundation models. While open-sourcing has historically provided substantial net benefits for most software and AI development processes, we argue that for some highly capable foundation models likely to be developed in the near future, open-sourcing may pose sufficiently extreme risks to outweigh the benefits. In such a case, highly capable foundation models should not be open-sourced, at least not initially. Alternative strategies, including non-open-source model sharing options, are explored. The paper concludes with recommendations for developers, standard-setting bodies, and governments for establishing safe and responsible model sharing practices and preserving open-source benefits where safe.

To delve deeper, read the full paper here.

💡 In case you missed it:

The Ethical Implications of Generative Audio Models: A Systematic Literature Review

This paper analyzes an exhaustive set of 884 papers in the generative audio domain to quantify how generative audio researchers discuss potential negative impacts of their work and catalog the types of impacts being considered. Jarringly, less than 10% of works discuss any potential negative impacts—particularly worrying because the papers that do so raise serious ethical implications and concerns relevant to the broader field, such as the potential for fraud, deep-fakes, and copyright infringement.

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.