AI Ethics Brief #80: Ethics-based audits, a new AI patent model, critical examination of interpretability, and more ...

What do we need to build more sustainable AI systems?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

⏰ This week’s Brief is a ~20-minute read.



This week’s overview:

✍️ What we’re thinking:

What do we need to build more sustainable AI systems?

🔬 Research summaries:

Against Interpretability: a Critical Examination

Transparency as design publicity: explaining and justifying inscrutable algorithms

Ethics-based auditing of automated decision-making systems: intervention points and policy implications

Summoning a New Artificial Intelligence Patent Model: In the Age of Pandemic

📰 Article summaries:

How Big Tech Is Pitching Digital Elder Care to Families

Facebook, Citing Societal Concerns, Plans to Shut Down Facial Recognition System

How Alibaba tracks China’s delivery drivers

📅 Event

Value Sensitive Design and the Future of Value Alignment in AI

📖 Living Dictionary:

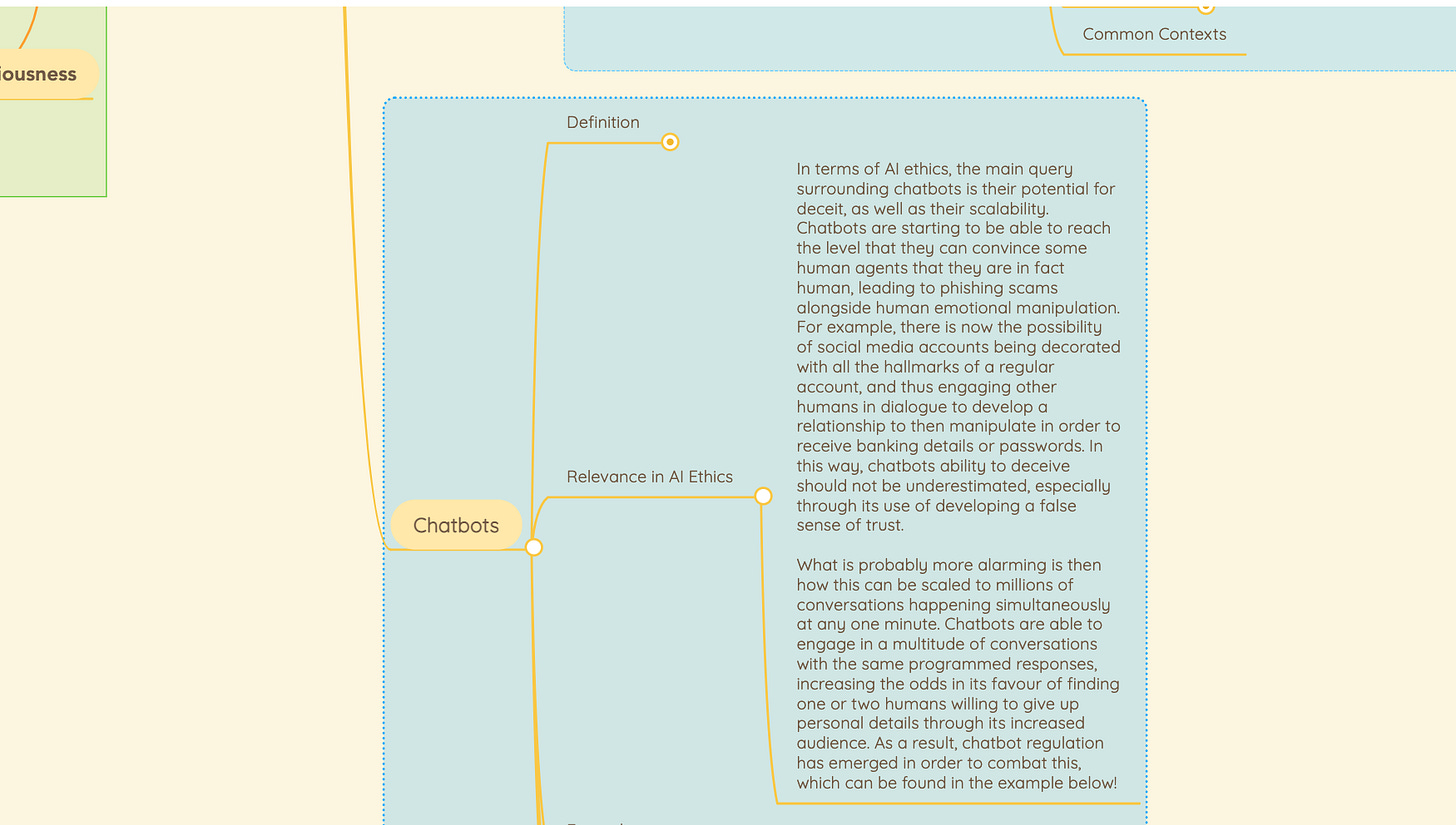

Chatbots

💡 ICYMI

Breaking Your Neural Network with Adversarial Examples

But first, our call-to-action this week:

Value Sensitive Design and the Future of Value Alignment in AI

There is ample discussion of the risks, benefits, and impacts of Artificial Intelligence (AI). Although the exact effects of AI on society are neither clear nor certain, AI is doubtlessly having a profound effect on overall human development and progress, and it will continue to do so in the future. An important line of work in AI and ethics seeks to identify the values or norms that AI ought to be aligned with.

However, it is far from clear what appropriate targets for alignment would look like and how they could be identified in light of persistent philosophical challenges about the nature and epistemic accessibility of values. Value sensitive design (VSD) is a prominent and influential approach to ethical design that pre-dates current concerns with the alignment of AI. VSD has been applied to specific technologies such as energy systems, mobile phone usage, architecture projects, manufacturing, and augmented reality systems, to name a few. VSD has also been proposed as a suitable design framework for technologies emerging in the near- and long-term future. VSD might therefore be a practical methodology to tackle the problem of value alignment for AI.

This online roundtable will explore whether and how VSD can advance AI ethics in theory and practice. We expect a large audience of AI ethics practitioners and researchers. We will discuss the promises, challenges, and potential of VSD as a methodology for AI ethics and value alignment specifically.

The panelists are as follows:

Idoia Salazar: President & co-founder of OdiseIA and expert in the European Parliament’s Artificial Intelligence Observatory.

Batya Friedman: Professor in the Information School, University of Washington, inventor and leading proponent of VSD.

Brian Christian: Journalist, UC Berkeley Science Communicator in Residence, NYT best-selling author of ‘The Alignment Problem’, a finalist for the LA Times Best Science & Technology Book.

Geert-Jan Houben: Pro Vice-Rector Magnificus Artificial Intelligence, Data and Digitalisation, TU Delft, with opening remarks.

Jeroen van den Hoven: Professor of Ethics and Philosophy of Technology, TU Delft, as moderator.

✍️ What we’re thinking:

What do we need to build more sustainable AI systems?

You’ve probably heard about the intersection of artificial intelligence (AI) and the environment. Yes, we can build AI solutions to address climate change and other environmental issues. But AI systems themselves have an environmental impact. In this article, we’ll talk about the latter: the scale of AI systems is growing tremendously, and we risk severe environmental and social harm if we don’t figure out a way to make these systems greener. Not all hope is lost in the relentless pursuit of building more state-of-the-art (SOTA) systems; we can make changes to help mitigate environmental impacts. Specifically, carbon accounting can help guide our actions. In fact, there are things that we can start doing this week that can help!

To delve deeper, read the full article here.
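As a rough illustration of what carbon accounting can look like in practice, here is a minimal sketch of a common back-of-the-envelope estimate: energy used, scaled by data-center overhead (PUE), multiplied by the carbon intensity of the electricity grid. The formula choice, the function name, and every number below are illustrative assumptions, not figures or methods taken from the article.

```python
def training_emissions_kg(power_kw, hours, pue, grid_kg_co2e_per_kwh):
    """Estimate the operational emissions (kg CO2e) of a compute job."""
    # Energy drawn by the hardware, scaled by data-center overhead (PUE).
    energy_kwh = power_kw * hours * pue
    # Convert energy to emissions using the grid's carbon intensity.
    return energy_kwh * grid_kg_co2e_per_kwh


# Hypothetical job: 2 kW of accelerators running for 100 hours, PUE of 1.5.
JOB = {"power_kw": 2.0, "hours": 100.0, "pue": 1.5}

# Hypothetical grid intensities (kg CO2e per kWh) for two regions.
carbon_heavy = training_emissions_kg(**JOB, grid_kg_co2e_per_kwh=0.5)
lower_carbon = training_emissions_kg(**JOB, grid_kg_co2e_per_kwh=0.25)

print(f"carbon-heavy grid: {carbon_heavy:.1f} kg CO2e")
print(f"lower-carbon grid: {lower_carbon:.1f} kg CO2e")
```

Even this crude estimate shows why where and when a job runs can matter as much as how long it runs: the same workload on a grid with half the carbon intensity produces half the emissions.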

🔬 Research summaries:

Against Interpretability: a Critical Examination

“Explainability”, “transparency”, “interpretability” … these are all terms that are employed in different ways in the AI ecosystem. Indeed, we often hear that we should make AI more “interpretable” and/or more “explainable”. In contrast, the author of this paper challenges the idea that “interpretability” and the like should be values or requirements for AI. First, it seems that these concepts are not really intuitively clear or technically implementable. Second, they are mostly proposed not as values in themselves but as means to reach some other valuable goals (e.g. fairness, respect for users’ privacy). And so the author argues that rather than directing our attention to “interpretability” or “explainability” per se, we should focus on the ethical and epistemic goals we set for AI while also making sure we can adopt a variety of solutions and tools to reach those goals.

To delve deeper, read the full summary here.

Transparency as design publicity: explaining and justifying inscrutable algorithms

It is often said that trustworthy AI requires systems to be transparent and/or explainable. The goal is to make sure that these systems are epistemically and ethically reliable, while also giving people the chance to understand the outcomes of those systems and the decisions made based on those outcomes. In this paper, the solution proposed stems from the relationship between “design explanations” and transparency: if we have access to the goals, the values, and the built-in priorities of an algorithmic system, we will be in a better position to evaluate its outcomes.

To delve deeper, read the full summary here.

Ethics-based auditing of automated decision-making systems: intervention points and policy implications

The governance mechanisms currently used to oversee human decision-making often fail when applied to automated decision-making systems (“ADMS”). In this paper, the researchers examine the feasibility and effectiveness of ethics-based auditing (“EBA”) as a ‘soft’ yet ‘formal’ governance mechanism to regulate ADMS and also discuss the policy implications of their findings.

To delve deeper, read the full summary here.

Summoning a New Artificial Intelligence Patent Model: In the Age of Pandemic

The article analyzes the challenges posed by the current patent law regime when applied to Artificial Intelligence (AI) in general, and especially during the COVID-19 pandemic. The article also proposes creative solutions to the hurdles of patenting AI technology by establishing a new patent track model for AI inventions.

To delve deeper, read the full summary here.

📰 Article summaries:

How Big Tech Is Pitching Digital Elder Care to Families

What happened: As the pandemic rolled on through all parts of the world, elder care facilities felt a particular twinge of isolation. In a woefully underprepared ecosystem, with constant understaffing, the elder population remained isolated and family members turned to consumer devices like Apple Watches and Alexas to partially take over caregiver responsibilities, especially around monitoring and alerting in case of accidents. But this comes with a slew of privacy and consent problems, given that elders are less likely to understand the implications of using such technologies.

Why it matters: As an example, for elders with dementia, consent becomes problematic as their state of mind may not be such that they are fully able to grasp what it means to be monitored via an audio or visual device. In addition, their ability to withdraw consent also becomes limited if circumstances change. The deployment of such technologies also has second-order effects: for example, the conversations of those around such monitoring devices are also captured, not necessarily with their consent.

Between the lines: It is not surprising that such technology has taken off. There is an untapped market for technology in elder care and companies are trying to dive into this sector (as also covered in AI Ethics Brief #43). Also, caregivers tend to have a fair bit of power over elders and can, even through “benevolent coercion,” nudge them into using technology that they might not otherwise be comfortable with. Finally, and most importantly, technology cannot serve as a replacement for human warmth and care. The rapid deployment of technology as a replacement for functions that are provided by human caregivers will only shift ecosystem investment away from what actually needs to be done (training, hiring, and paying human caregivers well) towards technological solutions.

Facebook, Citing Societal Concerns, Plans to Shut Down Facial Recognition System

What happened: In a move to “find the right balance,” Facebook is going to be deactivating facial recognition technology within its ecosystem citing concerns with how this technology is used and what it powers. With the recent rebrand to Meta, Facebook is on a warpath to set its public image right. The feature was used to power automatic tagging of people in uploaded pictures and videos, something that would help the network deepen connections and make it more frictionless for users to associate their account with visual assets on the site. The technology was also used to power capabilities to detect if someone might be impersonating you on the site and to provide accessibility features like reading out descriptions of photos for blind users.

Why it matters: Given the fines that the company faced from the FTC and the state of Illinois citing violations of privacy, getting Meta to shut down this feature is a win for privacy advocates. Approximately 1 billion facial recognition templates will also be deleted from the site, and there are talks about controlling pictures’ visibility as well to limit how external companies like PimEyes and Clearview AI can use these assets to train facial recognition technology.

Between the lines: Despite this announcement, something of note in the article is that Meta has not completely ruled out the use of facial recognition technology in future products. Though the recently released glasses in partnership with Ray-Ban don’t have it, this doesn’t mean that future products will never have facial recognition technology. We also need to continue to pay attention to how the data that has been collected will be removed and how other policies change on Meta and related sites like Instagram, which continue to be the largest repositories of facial images in the world.

How Alibaba tracks China’s delivery drivers

What happened: Getting meals delivered on time requires a coordinated effort across restaurants, service providers, and delivery drivers. With mounting pressure from consumers to get their deliveries on time, and a highly competitive landscape with many service providers trying to snatch up market share, innovation in tracking and estimating delivery times can offer an edge. In China, companies like Eleme, owned by Alibaba, with over 83 million monthly active users, have deployed more than 12,000 Bluetooth beacons to enable indoor tracking and figure out how long the driver waits for orders and when they enter and leave a restaurant.

Why it matters: The stated reason behind these deployments is that they will make the job of delivery drivers more efficient, since they won’t have to pull out their phones every few minutes to “check in” with the system on their status. Automatically doing so through Bluetooth beacons and proximity will alleviate this. But more accurate location data, and using that data to tighten up delivery times, will also increase pressure in an already under-compensated and strenuous job. Gig workers with few rights will be forced to operate under even more draconian circumstances.

Between the lines: In addition to the many labor rights problems with such a technology, including the well-being of workers and stress concerns, having so many Bluetooth beacons, both virtual and physical, poses unexplored challenges when it comes to exchanging so much location-based information constantly throughout the day. Perhaps tempering our expectations as consumers on delivery times and aiding workers in getting better rights is a more fruitful investment of resources than enabling more stringent technology to micromanage every aspect of their job.

From our Living Dictionary:

“Chatbots”

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

In case you missed it:

Breaking Your Neural Network with Adversarial Examples

Fundamentally, a machine learning model is just a software program: it takes an input, steps through a series of computations, and produces an output. In fact, all software has bugs and vulnerabilities, and machine learning is no exception.

One prominent bug – and security vulnerability – in current machine learning systems is the existence of adversarial examples. An attacker can carefully craft an input to the system to make it predict anything the attacker wants.

To delve deeper, read the full summary here.
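To make the idea concrete, here is a minimal sketch of one well-known way to craft an adversarial input, the fast gradient sign method (FGSM), applied to a toy logistic-regression classifier. The blurb above does not say which attack the original piece uses, so the choice of FGSM, the model, its weights, and the input values are all illustrative assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, x):
    """Probability that x belongs to class 1 under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(weights, bias, x, label, epsilon):
    """Shift each feature by +/- epsilon in the direction that increases the loss."""
    p = predict_proba(weights, bias, x)
    # Gradient of the cross-entropy loss with respect to the input x.
    grad = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Hypothetical toy model and input, chosen only for illustration.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = 0.0
x = [0.5, 0.2, 0.4]  # the model assigns this input to class 1
EPSILON = 0.4        # maximum change allowed per feature

x_adv = fgsm(WEIGHTS, BIAS, x, label=1, epsilon=EPSILON)

print("original  P(class 1):", round(predict_proba(WEIGHTS, BIAS, x), 3))
print("perturbed P(class 1):", round(predict_proba(WEIGHTS, BIAS, x_adv), 3))
```

In this toy setup, a bounded change to each feature is enough to push the prediction across the decision boundary. Attacks on real neural networks follow the same principle, but typically need far smaller perturbations relative to the input, often ones a human would not notice.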
