AI Ethics Brief #125: Auditing for human expertise, relative behavioral attributes, productive interdisciplinary dialogue, and more.

What are the emerging trends in the development of AI systems today?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can, to support us: become a paying subscriber for the price of a couple of ☕.

If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

This week’s overview:

🙋 Ask an AI Ethicist:

What will be the impact of the declining use of Q&A websites like Stack Overflow in favor of using AI assistants like Copilot?

✍️ What we’re thinking:

Poor facsimile: The problem in chatbot conversations with historical figures

Regulating Artificial Intelligence: The EU AI Act – Part 1 (i)

Experience at AIES Montreal 2023

🤔 One question we’re pondering:

What are the new frontiers of AI development that have caught your attention?

🔬 Research summaries:

Relative Behavioral Attributes: Filling the Gap between Symbolic Goal Specification and Reward Learning from Human Preferences

Atomist or holist? A diagnosis and vision for more productive interdisciplinary AI ethics dialogue

Emerging trends: Unfair, biased, addictive, dangerous, deadly, and insanely profitable

📰 Article summaries:

How AI-powered software development may affect labor markets | Brookings

The Senate’s AI Future Is Haunted by the Ghost of Privacy Past | WIRED

Why Generative AI Won’t Disrupt Books | WIRED

📖 Living Dictionary:

What is an example of diffusion models’ impact?

🌐 From elsewhere on the web:

How AI companies can avoid the mistakes of social media

💡 ICYMI

Auditing for Human Expertise

🤝 You can now refer your friends to The AI Ethics Brief!

Thank you for reading The AI Ethics Brief — your support allows us to keep doing this work. If you enjoy The AI Ethics Brief, it would mean the world to us if you invited friends to subscribe and read with us. If you refer friends, you will receive benefits that give you special access to The AI Ethics Brief.

How to participate

1. Share The AI Ethics Brief. When you use the referral link below, or the “Share” button on any post, you'll get credit for any new subscribers. Simply send the link in a text, email, or share it on social media with friends.

2. Earn benefits. When more friends use your referral link to subscribe (free or paid), you’ll receive special benefits.

Get a 3-month comp for 25 referrals

Get a 6-month comp for 75 referrals

Get a 12-month comp for 150 referrals

🤗 Thank you for helping get the word out about The AI Ethics Brief!

🚨 The Responsible AI Bulletin

We’ve restarted our sister publication, The Responsible AI Bulletin, as a fast digest every Sunday for those who want even more content beyond The AI Ethics Brief. (Thank you to our lovely power readers 🏋🏽 for writing in and requesting it!)

The focus of the Bulletin is to give you a quick dose of the latest research papers that caught our attention in addition to the ones covered here.

🙋 Ask an AI Ethicist:

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours and we’ll answer it in the upcoming editions.

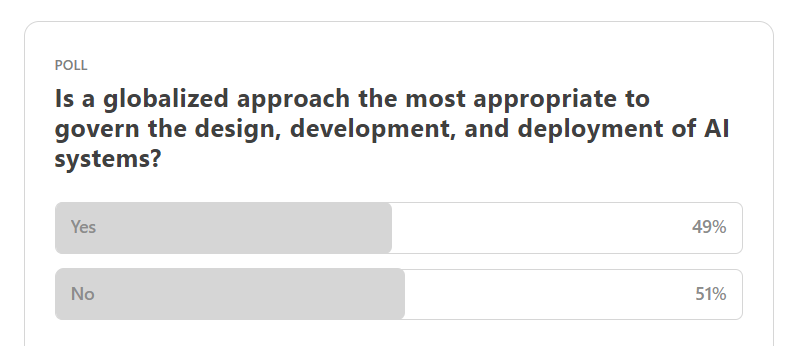

Here are the results from the previous edition for this segment:

Pointing to a recent article, one of our readers wrote in to ask, “What will be the impact of the declining use of Q&A websites like Stack Overflow in favor of using AI assistants like Copilot?”

The biggest loss is camaraderie between community members. As much as there is hate speech and trolling, there are also good conversations and connections made on community forums, and these often lead to future projects and collaborations. For example, the open-source community thrives on exchanges on forums like Stack Overflow.

AI assistants often cobble together an answer, dropping nuance and context. This hampers the quality of answers, especially on topics that benefit from lengthy exchanges to tease out the specific reasons for one approach over another (often the case with security-related programming discussions on Stack Overflow).

An extension of the above is an overreliance on AI systems, called automation bias, whereby we miss out on opportunities for deeper thinking and development when engaging in more complex topics.

And finally, given our current penchant for scooping up all internet data to build bigger and more powerful AI systems, the loss of new human-generated content may degrade the quality of training datasets for future AI systems, especially since these systems tend not to do well when trained on AI-generated data (see: Self-consuming Generative Models go MAD).

Based on how you answered the above poll, what do you believe tilts usage in favor of one over the other? Share your thoughts with the MAIEI community:

✍️ What we’re thinking:

Poor facsimile: The problem in chatbot conversations with historical figures

WHAT HAPPENED: A recent article in the Washington Post had a journalist interview a chatbot imitation of Harriet Tubman, anthropomorphizing the chatbot and faulting it for not engaging with critical race theory (CRT). The article faced backlash from the community, who were (rightfully) enraged that this was in poor taste, especially when there is plenty of good literature available about this very important historical figure.

FOGGY VISION: It is important to recognize that AI systems often provide a poor representation and imitation of a person's true identity. As a reference, it can be compared to a blurry JPEG image, lacking depth and accuracy. AI systems are also limited by the information that has been published and captured in their training datasets. The responses they provide can only be as accurate as the data they have been trained on. It is crucial to have extensive and detailed data in order to capture the relevant tone and authentic views of the person being represented.

EROSION OF THINKING: While accuracy is an important measure, relying solely on Q&A with an AI chatbot version of an article can lead to a decline in critical reading skills. Instead of actively engaging with the text to develop their own understanding and placing it within the context of other literature and references, individuals may simply rely on the chatbot for answers.

NOT HUMAN: Additionally, the anthropomorphization of AI systems can exacerbate ethical issues. Referring to a bot as "she" or "her" can create a false sense of interaction and human-like qualities, blurring the lines between technology and humanity. This raises concerns about the appropriate use and ethical implications of AI technology.

It is crucial for media outlets and society as a whole to critically examine the ethics of AI and consider its limitations, potential impact on critical thinking, and the importance of preserving a clear distinction between human and artificial intelligence.

To delve deeper, read the full article here.

Regulating Artificial Intelligence: The EU AI Act – Part 1 (i)

Since the EU AI Act (the Act) was first formulated and published, the Commission has introduced certain amendments to it. The Commission’s proposal aims to ensure that AI placed on the market and used is safe and respects the law and fundamental rights, while providing the legal certainty needed to facilitate innovation and investment in AI.

To delve deeper, read the full article here.

Experience at AIES Montreal 2023

A delightful conference that took place in our home city, covering everything related to Responsible AI and bringing together researchers and practitioners from around the world to discuss, debate, and move the field forward in thinking and practicing the science and art of Responsible AI. Emily Dardaman, from my staff at the BCG Henderson Institute, shared her experience at the conference.

Deciding Who Decides: AIES 2023 - Day 1

Democratizing AI: AIES 2023 - Day 2

Seeing the invisible: AIES 2023 - Day 3

To delve deeper, read the full article series here.

🤔 One question we’re pondering:

Building on the closing thought in the “Ask an AI Ethicist” segment, we’ve been thinking about which new techniques and sources of data will move us beyond the current generation of AI systems, which have exhausted most human-generated data on the internet and suffer when trained on AI-generated data. What are the new frontiers of AI development that have caught your attention?

We’d love to hear from you and share your thoughts back with everyone in the next edition:

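🔬 Research summaries:

Relative Behavioral Attributes: Filling the Gap between Symbolic Goal Specification and Reward Learning from Human Preferences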

Reinforcement learning or reward learning from human feedback (e.g., preferences) is a powerful tool for humans to advise or control AI agents. Unfortunately, it is also expensive since it usually requires a prohibitively large number of human preference labels. In this paper, we go beyond binary preference labels and introduce Relative Behavioral Attributes, which allow humans to efficiently tweak the agent’s behavior through judicious use of symbolic concepts (e.g., increasing the softness or speed of the agent’s movement).

To delve deeper, read the full summary here.
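To make the baseline concrete, here is a minimal sketch of reward learning from binary preference labels, the approach the paper seeks to move beyond. It assumes a linear reward over hand-picked behavior features and a Bradley-Terry preference model; the summary specifies neither, and the function name `fit_reward_weights` and the (speed, softness) features are hypothetical, chosen only for illustration. It is not the paper's method.

```python
import math


def fit_reward_weights(preferences, n_features, lr=0.5, epochs=200):
    """Fit linear reward weights from pairwise preference labels.

    preferences: list of (preferred_features, rejected_features) tuples.
    Assumes a Bradley-Terry model (an illustrative choice):
        P(preferred beats rejected) = sigmoid(w . (preferred - rejected))
    """
    w = [0.0] * n_features
    for _ in range(epochs):
        grad = [0.0] * n_features
        for better, worse in preferences:
            diff = [b - c for b, c in zip(better, worse)]
            margin = sum(wi * di for wi, di in zip(w, diff))
            p_better = 1.0 / (1.0 + math.exp(-margin))
            # Gradient of the log-likelihood with respect to each weight.
            for j, dj in enumerate(diff):
                grad[j] += (1.0 - p_better) * dj
        # Gradient ascent step on the average log-likelihood.
        w = [wi + lr * gj / len(preferences) for wi, gj in zip(w, grad)]
    return w


# Hypothetical behavior features: (speed, softness).
# In every pair the human prefers the faster behavior; softness is held equal.
labels = [
    ((1.0, 0.2), (0.5, 0.2)),
    ((0.9, 0.8), (0.3, 0.8)),
    ((0.7, 0.1), (0.2, 0.1)),
]
weights = fit_reward_weights(labels, n_features=2)
```

Each label here carries only one bit of information ("this one is better"), which hints at why this style of feedback can require so many labels. Attribute-level feedback such as "make it faster" tells the agent which dimension to change directly.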

Atomist or holist? A diagnosis and vision for more productive interdisciplinary AI ethics dialogue

The role of ethics in AI research has sparked fierce debate among AI researchers on social media, at times devolving into counter-productive name-calling and threats of “cancellation.” We diagnose the growing polarization around AI ethics issues within the AI community, arguing that many of these ethical disagreements stem from conflicting ideologies we call atomism and holism. We examine the fundamental political, social, and philosophical foundations of atomism and holism. We suggest four strategies to improve communication and empathy when discussing contentious AI ethics issues across disciplinary divides.

To delve deeper, read the full summary here.

Emerging trends: Unfair, biased, addictive, dangerous, deadly, and insanely profitable

A survey of the literature suggests social media has created a Frankenstein Monster that is exploiting human weaknesses. We cannot put our phones down, even though we know it is bad for us (and society). Just as we cannot expect tobacco companies to sell fewer cigarettes and prioritize public health ahead of profits, so too, it may be asking too much of companies (and countries) to stop trafficking in misinformation, given that it is so effective and so insanely profitable (at least in the short term).

To delve deeper, read the full summary here.

📰 Article summaries:

How AI-powered software development may affect labor markets | Brookings

What happened: Generative AI tools are being integrated into professional workflows and business applications, raising questions about their impact on worker productivity and labor demand. These tools, driven by deep learning models trained on extensive datasets, can generate content, perform preliminary reasoning, and answer questions. Notable examples like GitHub Copilot and Amazon CodeWhisperer have shown potential across the software development life cycle, including code suggestion and documentation writing.

Why it matters: Unlike previous AI technologies that mainly affected routine tasks, generative AI tools have the potential to impact non-routine jobs like teaching and design. The example of software development illustrates how these tools can reshape knowledge work. While there's potential for increased developer productivity through generative AI, this doesn't necessarily translate to fewer developers. Past technological innovations in software development, like compilers and high-level languages, have led to greater demand and more developers, suggesting a complex relationship between technology and labor.

Between the lines: There's hope that generative AI tools can enhance developers' performance and narrow performance gaps. If preliminary findings hold, these tools could attract more individuals to tech careers, addressing the growing demand for software developers. The broader adoption of generative AI underscores the importance of education in using these tools responsibly and effectively. Policymakers and firms developing AI tools should consider their potential impact on employment and inequality. They should explore incentives like a universal basic income that scales with non-labor income to address potential job shifts. Effective policy is needed to ensure that the economic benefits of generative AI are harnessed while preparing the workforce for these advancements.

The Senate’s AI Future Is Haunted by the Ghost of Privacy Past | WIRED

What happened: The rise of generative artificial intelligence has prompted the US Senate to finally address long-postponed privacy reform discussions. The debate centers around whether the personal data of Americans, constantly bought and sold, should be treated separately from the AI advancements pursued by companies like OpenAI and Google. While some senators like Marco Rubio and Ted Cruz believe in minimal regulation to foster innovation, concerns about AI's transformative potential and privacy implications have pushed lawmakers to consider action.

Why it matters: Senators like Rubio and Cruz advocate for laissez-faire regulation, asserting that AI innovation should proceed unhampered. However, history reveals that unbridled growth in the tech industry has led to issues like data collection by Google and content control by Facebook and Twitter. As the AI revolution further empowers tech companies, some senators prioritize ensuring American AI leadership over stricter regulations, which could restrict industry growth and international competitiveness.

Between the lines: The urgency for action on AI is finally pushing senators into action, albeit at a slow pace. Recent AI briefings have heightened awareness about the potential risks and rewards of AI proliferation. Senators have attached generative AI amendments to the National Defense Authorization Act (NDAA), reflecting growing concern over AI's security implications. Besides technological debates, there's also a focus on AI's impact on democracy, prompting discussions about political disclosure requirements and potential safeguards against political manipulation.

Why Generative AI Won’t Disrupt Books | WIRED

What happened: In early 2023, as concerns about AI tools like ChatGPT were growing, a tweet by Gaurav Munjal, co-founder of Unacademy, suggested that AI could make books more engaging by animating them. While this idea might make sense in the context of educational tools, it drew criticism for assuming that traditional reading lacked engagement. Munjal was among several tech entrepreneurs promoting AI solutions for writing, including Sudowrite's Story Engine, which faced backlash for trivializing writing challenges.

Why it matters: The tech industry has repeatedly tried to disrupt the book world, often claiming to understand readers' needs better than they do. Despite the technological impact on various industries, attempts to revolutionize books haven't been successful, as people continue to buy and enjoy traditional books. Many of these disruptions were based on misperceptions of what was broken or needed fixing in the industry, revealing a lack of understanding.

Between the lines: AI's role in the book world isn't new, with projects like electronic literature experiments existing for years. However, the tech industry's attempts to overhaul reading with AI-generated content fall flat, as these approaches often misunderstand the essence of traditional books. While AI can create new mediums, it's unlikely to replace or revolutionize established forms like novels. As tech proponents make grand promises, it's important to remember the many unsuccessful disruptors that have come and gone, while reading remains a cherished and enduring pastime for many.

📖 From our Living Dictionary:

What is an example of diffusion models’ impact?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

How AI companies can avoid the mistakes of social media

Abhishek Gupta, founder and principal researcher at the Montreal AI Ethics Institute, said “this is one of the biggest issues that is causing a lot of strife within many companies” when it comes to moving forward with generative A.I. plans, specifically citing strife between the business and privacy/legal functions.

“We are struggling with similar, if not identical, issues around governance approaches to a powerful general-purpose technology that is even more distributed and deep-impacting than other technological waves,” he said when asked if he fears we’ll repeat the same mistakes made with social media platforms in terms of not properly mitigating potential harms prior to product release and widespread use.

The nature of the complexity of A.I. systems adds a complicating factor, Gupta said, but he argues that we need to think ahead on how the landscape of problems and solutions is going to evolve.

To delve deeper, read the full article here.

💡 In case you missed it:

Auditing for Human Expertise

In this work, we develop a statistical framework to test whether an expert tasked with making predictions (e.g., a doctor making patient diagnoses) incorporates information unavailable to any competing predictive algorithm. This ‘information’ may be implicit; for example, experts often exercise judgment or rely on intuition which is difficult to model with an algorithmic prediction rule. A rejection of our test thus suggests that human experts may add value to any algorithm trained on the available data. This implies that optimal performance for the given prediction task will require incorporating expert feedback.

To delve deeper, read the full article here.
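As a rough illustration of the idea in this summary, the sketch below runs a within-group permutation test: among cases that look identical to an algorithm (same features), it shuffles the expert's predictions and checks whether the real assignment fits the outcomes better than the shuffled ones. This is a simplified stand-in under stated assumptions (exactly matching feature groups, squared-error loss), not the paper's exact procedure, and the function name `expertise_permutation_test` is hypothetical.

```python
import random
from collections import defaultdict


def expertise_permutation_test(features, expert_preds, outcomes,
                               n_permutations=200, seed=0):
    """Return a p-value for the null hypothesis that expert predictions
    carry no information about outcomes beyond the observed features.

    features: list of hashable feature tuples (one per case).
    Assumption: cases with identical features are exchangeable under the null.
    """
    rng = random.Random(seed)

    # Group case indices by their (identical) feature values.
    groups = defaultdict(list)
    for i, x in enumerate(features):
        groups[x].append(i)

    def loss(preds):
        # Mean squared error between predictions and outcomes.
        return sum((p - y) ** 2 for p, y in zip(preds, outcomes)) / len(outcomes)

    observed = loss(expert_preds)
    at_least_as_good = 0
    for _ in range(n_permutations):
        shuffled = list(expert_preds)
        # Shuffle expert predictions only among cases with the same features.
        for idx in groups.values():
            vals = [expert_preds[i] for i in idx]
            rng.shuffle(vals)
            for i, v in zip(idx, vals):
                shuffled[i] = v
        if loss(shuffled) <= observed:
            at_least_as_good += 1
    # Standard permutation p-value with a +1 correction.
    return (1 + at_least_as_good) / (1 + n_permutations)


# Synthetic data: two feature groups, with a hidden signal the features miss.
features = [(0,)] * 20 + [(1,)] * 20
hidden = [i % 2 for i in range(40)]
outcomes = hidden
informed_expert = list(hidden)      # expert "sees" the hidden signal
uninformed_expert = [0.5] * 40      # expert uses nothing beyond the features

p_informed = expertise_permutation_test(features, informed_expert, outcomes)
p_uninformed = expertise_permutation_test(features, uninformed_expert, outcomes)
```

A small p-value for the informed expert corresponds to the "rejection" described above: the expert's predictions track outcomes in a way the observed features alone cannot explain.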

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.

The combination of expert systems or knowledge-based systems and inference systems (ML) to produce smaller but more capable systems... best of both worlds - Transformer networks can produce knowledge but require huge amounts of training and store it wastefully, spread across the network.