AI Ethics Brief #133: Intersectional fairness, private training set inspection, WH EO, AI Ethics Praxis, and more.

We're back! Apologies for being away these last few weeks. We've got a new segment that will delight readers who want to move from principles to practice.

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week, we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for the price of a couple of ☕.

If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

This week’s overview:

🙋 Ask an AI Ethicist:

What should AI ethics practitioners do to prepare their organizations for the requirements coming out of the recent White House Executive Order?

✍️ What we’re thinking:

The Future of AI in Quebec: Bridging Gaps to Drive Innovation, Growth and Social Good

🤔 One question we’re pondering:

What would be the best way to inject the study of bright patterns, as opposed to dark patterns, in design curricula?

🪛 AI Ethics Praxis: From Rhetoric to Reality

Facebook goes ad-free in the EU, for a price!

🔬 Research summaries:

Consequences of Recourse In Binary Classification

A Survey on Intersectional Fairness in Machine Learning: Notions, Mitigation and Challenges

Private Training Set Inspection in MLaaS

📰 Article summaries:

AI-Powered ‘Thought Decoders’ Won’t Just Read Your Mind—They’ll Change It | WIRED

Predictive Policing Software Terrible At Predicting Crimes – The Markup

How AI and brain science are helping perfumiers create fragrances

📖 Living Dictionary:

What is overfitting and underfitting in machine learning?

🌐 From elsewhere on the web:

Trustworthy AI Alone is Not Enough

💡 ICYMI

Understanding the Effect of Counterfactual Explanations on Trust and Reliance on AI for Human-AI Collaborative Clinical Decision Making

🚨 AI Ethics Praxis: From Rhetoric to Reality

Aligning with our mission to democratize AI ethics literacy, we bring you a new segment in our newsletter that will focus on going beyond blame to solutions!

We see a lot of news articles, reporting, and research that stop just short of providing concrete solutions and approaches (sociotechnical or otherwise) that are (1) reasonable, (2) actionable, and (3) practical. Such solutions are much needed if we are to start solving the problems the AI domain faces, rather than just continuing to point them out and pontificate about them.

In this week’s edition, you’ll see us work through a solution our team proposed to a recent problem. We then invite you, our readers, to share your own proposed solution in the comments (or via email, if you prefer), one that meets the same criteria of being (1) reasonable, (2) actionable, and (3) practical.

🙋 Ask an AI Ethicist:

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours, and we’ll answer it in the upcoming editions.

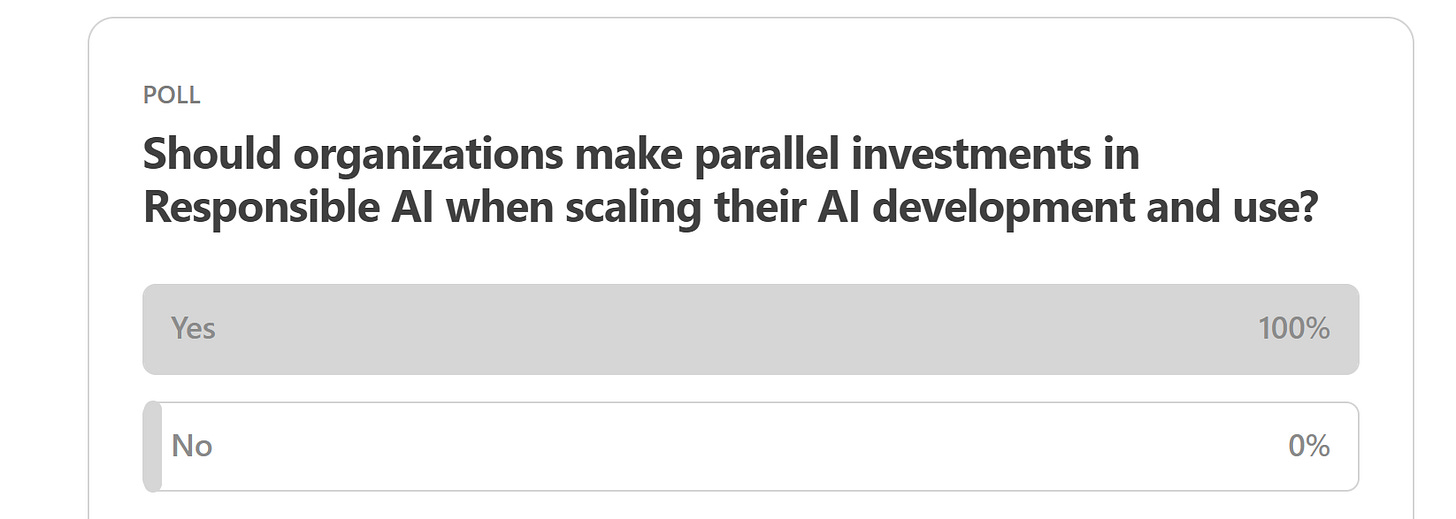

Here are the results from the previous edition for this segment:

No ambiguity in our readers’ priority here!

Moving on to a question one of our readers emailed us a couple of weeks ago: “What should AI ethics practitioners do to prepare their organizations for the requirements coming out of the recent White House Executive Order?”

Here is what we would recommend as the first steps in preparing your organization:

🛡️ Enhance AI System Evaluation and Security: Develop and implement standardized procedures for evaluating and monitoring AI systems. Focus on making AI systems resilient against misuse and ensuring they are ethically developed and operated in a secure manner.

🔨 Foster Responsible AI Development: Encourage innovation within the framework of responsible AI principles. This involves addressing intellectual property challenges and ensuring AI development does not lead to anti-competitive practices.

🧮 Workforce Training and Inclusion: Develop strategies to retrain the workforce and integrate AI systems in a way that supports and augments human work rather than replacing it. Ensure that AI deployment in the workplace respects workers' rights and promotes job quality.

🏛️ Ensure Equity and Civil Rights Compliance: Integrate equity and civil rights considerations into AI development and deployment processes. This includes preventing AI systems from perpetuating biases and discrimination, and complying with all applicable federal laws in this regard.

🔒 Consumer Protection and Transparency: Implement measures to protect consumers from AI-related harms and ensure transparency in AI operations, especially in critical sectors like healthcare and finance.

📝 Engagement with Policy Developments: AI ethics practitioners should closely monitor the implementation of the executive order, engage with implementing agencies, and contribute to the development of AI-related policies and legislation.

Are there steps that you have begun taking in your work? Share your thoughts with the MAIEI community:

✍️ What we’re thinking:

The Future of AI in Quebec: Bridging Gaps to Drive Innovation, Growth and Social Good

Artificial intelligence (AI) is transforming societies and economies around the world at a rapid pace. However, Quebec risks falling behind in leveraging the opportunities of AI due to several gaps in its ecosystem. In this blog post, I analyze the current limitations around AI development, adoption, and governance in Quebec across the public, private, and academic sectors. Based on this diagnosis, I then provide targeted, actionable recommendations on how Quebec can build understanding, expertise, collaboration, and oversight to unlock the full potential of AI as a force for economic and social good. Read on for insights into the seven key areas requiring intervention and proposed solutions to propel Quebec into a leadership position in the global AI landscape.

To delve deeper, read the full article here.

🤔 One question we’re pondering:

The opposite of dark design patterns is the idea of bright patterns. This prompted a discussion at MAIEI about how to incorporate bright patterns into HCI and other design curricula, so that the upcoming generation of technology designers explicitly keeps these ideas at the center of all their work. What would be the best way to inject this into existing and new curricula being developed for university degree programs?

We’d love to hear from you and share your thoughts with everyone in the next edition:

🪛 AI Ethics Praxis: From Rhetoric to Reality

Facebook goes ad-free in the EU, for a price!

For our EU-based readers, you were likely presented with a (coerced) choice about how to keep using the platform: either continue with ads enabled or pay a subscription fee (as highlighted in the article from Meta) for an ad-free experience. What we want to discuss here is how the choice was presented and how it represented a dark design pattern.

In particular, the choice gave users limited agency. You had to decide on the spot which option you wanted. There was no “escape hatch” to defer the choice, or to let your network know in case you didn’t want either of the options presented. If you wanted to delete your account, you could not tell your network that you were leaving, nor could you change (or even access) your account settings. But enough pointing out the problem. How can we avoid this dark design pattern that limits user agency?

Here’s how we are thinking about approaching it:

To address the issue of limited user agency in the context of Meta's new subscription model for an ad-free experience on Facebook and Instagram, it is essential to consider the underlying principles of ethical AI and user-centered design.

The rollout, as described, exhibits characteristics of a dark design pattern by restricting user choices and forcing an immediate decision without alternatives or clear avenues for feedback.

🔎 Transparent and Informed Choice: Users should be provided with transparent information about the implications of both choices (with ads or subscription-based ad-free usage). This includes not only the financial cost but also how their data will be used in each scenario. The decision-making process should be free from coercive elements, allowing users the time and space to make an informed choice.

🫸 Providing an 'Opt-Out' or Delay Option: Incorporating an option to delay the decision or to opt out of immediate action can relieve the pressure of on-the-spot decision-making. This could take the form of a temporary continuation of service as usual, with periodic reminders that a decision is needed.

⚙️ Accessible Account Management: Users should retain the ability to access and modify their account settings at any time, regardless of their choice. This ensures they can manage their preferences, understand the implications of their choices, and change their decision if needed.

💬 Feedback Mechanisms: Implementing channels for user feedback on the process allows the company to understand user concerns and adjust its approach accordingly. This could include surveys, feedback forms, or forums where users can express their views and suggestions.

🎚️ Alternative Communication Channels: For users opting to delete their accounts, provide mechanisms to communicate their decision to their network or export their data. This respects the user's right to discontinue the service while maintaining their social connections and personal content.

⚖️ Compliance with GDPR and Ethical Standards: The company should ensure that all actions comply with the General Data Protection Regulation (GDPR) and ethical standards for user privacy and data security. This includes transparently communicating how user data is processed under each option and ensuring that user consent is freely given, specific, informed, and unambiguous.

By implementing these strategies, Meta can enhance user agency, respect user choice, and align their practices with the principles of ethical AI and user-centered design.

Given our reader community’s shared ambition, commitment, and intelligence around moving from principles to practice, let’s help the broader community: comment with how you would solve this problem, proposing a solution that is (1) reasonable, (2) actionable, and (3) practical.

You can either click the “Leave a comment” button below or send us an email! We’ll feature the best response next week in this section.

🔬 Research summaries:

Private Training Set Inspection in MLaaS

There is an emerging service in the ML market offering custom training datasets for customers with budget constraints. The datasets are tailored to meet specific requirements and regulations, but customers have no access to the datasets themselves, only to the final ML models trained on them. This paper provides a solution that lets customers inspect the fairness and diversity of such an inaccessible dataset.

To delve deeper, read the full summary here.

A Survey on Intersectional Fairness in Machine Learning: Notions, Mitigation and Challenges

The increasing use of ML/AI in critical domains like criminal sentencing has sparked heightened concerns regarding fairness and ethical considerations. Many works have established metrics and mitigation techniques to handle such discrimination. However, a more nuanced form of discrimination, called intersectional bias, has recently been identified, which spans multiple axes of identity and poses unique challenges. In this work, we review recent advances in the fairness of ML systems from an intersectional perspective, identify key challenges, and provide researchers with clear future directions.

To delve deeper, read the full summary here.
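To make the idea of intersectional bias concrete, here is a minimal Python sketch using one simple notion of fairness: the largest gap in positive-prediction rates between groups (a demographic-parity-style measure). The survey covers many notions; this is only one, and the attribute names, group labels, and data below are hypothetical toy values. The data are constructed so that every single-axis comparison looks perfectly fair while the intersectional subgroups are treated completely differently.

```python
from collections import defaultdict
from itertools import product


def positive_rates(records, keys):
    """Positive-prediction rate for each group defined by the given attribute keys."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for r in records:
        group = tuple(r[k] for k in keys)
        counts[group][0] += r["pred"]
        counts[group][1] += 1
    return {g: pos / total for g, (pos, total) in counts.items()}


def max_gap(rates):
    """Largest difference in positive rates between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 10 people in each (sex, race) cell. The model approves
# everyone in (M, A) and (F, B) and rejects everyone in (M, B) and (F, A).
records = [
    {"sex": sex, "race": race, "pred": int((sex == "M") == (race == "A"))}
    for sex, race in product(["F", "M"], ["A", "B"])
    for _ in range(10)
]

gap_sex = max_gap(positive_rates(records, ["sex"]))
gap_race = max_gap(positive_rates(records, ["race"]))
gap_intersectional = max_gap(positive_rates(records, ["sex", "race"]))

print(f"gap by sex: {gap_sex:.2f}")                    # 0.00
print(f"gap by race: {gap_race:.2f}")                  # 0.00
print(f"gap by sex x race: {gap_intersectional:.2f}")  # 1.00
```

An audit of the marginal groups alone would report no disparity here; only checking the cross-product of attributes surfaces it. In practice, the number of subgroups grows multiplicatively with each added attribute, so small subgroups make rate estimates noisy, a well-known practical difficulty for intersectional audits.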

Consequences of Recourse In Binary Classification

Generating counterfactual explanations is a popular method of providing users with an explanation of a decision made by a machine learning model. However, in our paper, we show that providing many users with such an explanation can adversely affect the predictive accuracy of the model. We characterize where this drop in accuracy can happen and show the consequences of possible solutions.

To delve deeper, read the full summary here.
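The summary does not say which model or explanation method the paper uses. As a generic illustration of what a counterfactual explanation is, here is a minimal sketch assuming a linear classifier that approves when w·x + b ≥ 0 and an L2 notion of the "smallest change". Under those assumptions, the closest approved point is found by moving x along w until it just crosses the decision boundary. The function names, weights, and feature meanings are hypothetical.

```python
def score(w, b, x):
    """Linear decision score; the model approves when the score is >= 0."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def counterfactual_linear(w, b, x, margin=1e-6):
    """Smallest L2 change to x that gives a positive score (by a tiny margin).

    Returns a copy of x unchanged if it is already approved.
    """
    s = score(w, b, x)
    if s >= 0:
        return list(x)
    norm_sq = sum(wi * wi for wi in w)
    # Step along w just far enough that the new score equals `margin`.
    step = (margin - s) / norm_sq
    return [xi + step * wi for wi, xi in zip(w, x)]


# Hypothetical model: two features (e.g. income and years of credit history,
# both in arbitrary units), with the approval threshold folded into the bias b.
w, b = [0.5, 0.25], -4.0
applicant = [4.0, 4.0]            # score = 2.0 + 1.0 - 4.0 = -1.0 (rejected)
cf = counterfactual_linear(w, b, applicant)
print([round(v, 3) for v in cf])  # [5.6, 4.8]
print(score(w, b, cf) > 0)        # True
```

Because each counterfactual lands just past the decision boundary, it hints at why acting on explanations at scale can shift the data a model sees; the summary above does not detail the authors' exact setting, so treat this only as intuition.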

📰 Article summaries:

AI-Powered ‘Thought Decoders’ Won’t Just Read Your Mind—They’ll Change It | WIRED

What happened: Neuroscientists have been developing technologies that can decode our thoughts, revealing the hidden contents of our minds. Recent studies have gained attention for their ability to reconstruct songs and sentences from brain activity data. However, these technologies are not all-powerful mind readers and require active participant involvement. Critics are concerned about privacy and the potential for businesses to exploit these tools for profit.

Why it matters: The conventional idea of a completely private and self-contained mind is being challenged. Philosophers like Saul Kripke argue that thoughts become unintelligible if entirely private. Thoughts gain meaning and substance when connected to the external world. This challenges the notion of thoughts as static entities and emphasizes their dynamic interaction with the environment. We must consider the ethical implications of thought-decoding technologies and how they may shape our thoughts and language.

Between the lines: Misusing thought-decoding technologies could lead to disastrous consequences, as seen with lie detectors. Blind faith in these tools can make people vulnerable to manipulation. While these technologies have potential benefits, we must carefully consider their design and the terms they include or exclude. Thought-decoding tools could shape our thinking and language, so ensuring they reflect a diverse and inclusive perspective is crucial. Understanding how external factors influence our thoughts can help us take a more proactive role in shaping our own minds.

Predictive Policing Software Terrible At Predicting Crimes – The Markup

What happened: An analysis by The Markup found that crime predictions generated by Geolitica (formerly PredPol) for the Plainfield Police Department rarely matched actual reported crimes. The software takes in data from crime reports to predict where and when crimes are likely to occur. Of the 23,631 predictions examined, fewer than half a percent were accurate, with fewer than 100 predictions aligning with actual reported crimes. The success rate was even lower for specific crime types, such as robberies and burglaries.

Why it matters: In 2021, The Markup's investigation revealed that Geolitica's software disproportionately targeted low-income, Black, and Latino neighborhoods in various cities nationwide. However, assessing the software's effectiveness in predicting crimes has been challenging because data on police officers' responses to prediction locations has not been widely available. Predictive policing has raised concerns about its impact on communities, with studies showing potential negative consequences, including emotional distress for Black and Latino boys stopped by the police and increased use of force leading to reduced usage of city services.

Between the lines: Geolitica is shifting its focus from crime predictions to a broader platform for managing police department data. Critics argue that the problem with predictive policing lies in the policing aspect itself and that addressing the root causes of crime may be a more effective solution. They emphasize the need for a larger dialogue involving police commanders and community members to better understand the factors contributing to crime hot spots.
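As a quick consistency check on the two figures reported in The Markup's analysis, fewer than 100 matches out of 23,631 predictions does correspond to a success rate below half a percent:

```latex
\frac{100}{23{,}631} \approx 0.0042 = 0.42\% < 0.5\%,
\qquad 0.5\% \times 23{,}631 \approx 118
```

In other words, half a percent would have meant roughly 118 correct predictions, so "fewer than 100" is the tighter of the two figures.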

How AI and brain science are helping perfumiers create fragrances

What happened: Olfaction, the sense of smell, is complex, involving various receptors and connections in the brain. Modern perfumers are embracing AI to design fragrances that trigger specific emotional responses. They use “neuroscents”: ingredients that, based on biometric measurements, are associated with positive feelings such as calmness, euphoria, or sleepiness.

Why it matters: Beauty brands like L'Oréal and Puig invest in neuroscent research and technology to create scents that make consumers feel good. Customers at Yves Saint Laurent stores have used EEG headsets to discover scents that appeal to them, with a 95% success rate. Niche perfumiers are also using algorithms to create personalized fragrances. However, some argue that the magic of discovering new fragrances should be left to human creativity and serendipity.

Between the lines: The intersection of science and art in the world of fragrance continues to evolve. While AI-driven scent design offers exciting possibilities, some, like perfume writer Katie Puckrik, believe that computers should not replace the personal and artistic aspects of perfume creation. The potential therapeutic benefits of odorant molecules in scent modulation for health are still an area of exploration and study.

📖 From our Living Dictionary:

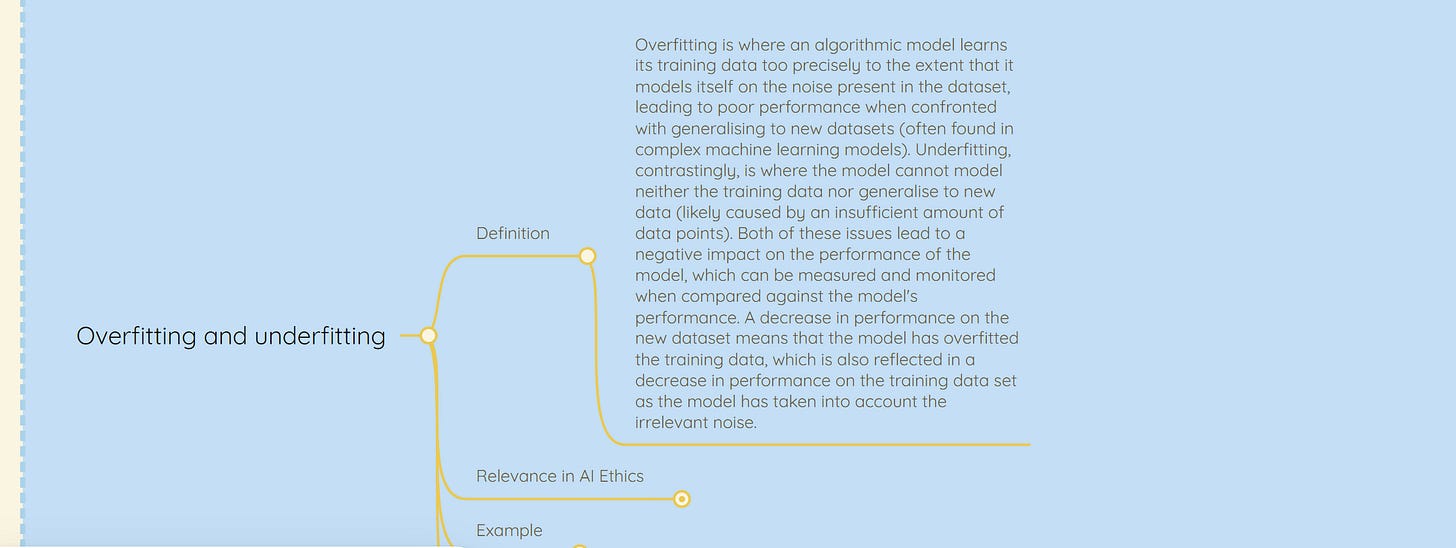

What is overfitting and underfitting in machine learning?
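As a rough illustration of the two failure modes, here is a minimal, self-contained Python sketch on synthetic data (a hypothetical ground truth of y = 2x plus Gaussian noise). A constant model underfits: it is too simple to capture the trend, so both training and test error stay high. A nearest-neighbour "memorizer" overfits: it reproduces the training noise exactly, reaching zero training error, but does worse on new data. A least-squares line sits in between. The models and data are illustrative choices, not taken from the Living Dictionary entry.

```python
import random


def mse(model, xs, ys):
    """Mean squared error of a model over a dataset."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_constant(xs, ys):
    # Underfits: ignores the input and always predicts the training mean.
    mean_y = sum(ys) / len(ys)
    return lambda x: mean_y


def fit_line(xs, ys):
    # Ordinary least squares for y = a*x + b.
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = my - a * mx
    return lambda x: a * x + b


def fit_memorizer(xs, ys):
    # Overfits: returns the label of the nearest training point (1-nearest
    # neighbour), so it reproduces the training noise exactly.
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


def make_data(rng, n):
    # Hypothetical ground truth: y = 2x + Gaussian noise (standard deviation 1).
    xs = [rng.uniform(0, 10) for _ in range(n)]
    ys = [2 * x + rng.gauss(0, 1) for x in xs]
    return xs, ys


rng = random.Random(0)
train_x, train_y = make_data(rng, 300)
test_x, test_y = make_data(rng, 300)

results = {}
for name, fit in [("underfit (constant)", fit_constant),
                  ("good fit (line)", fit_line),
                  ("overfit (memorizer)", fit_memorizer)]:
    model = fit(train_x, train_y)
    train_err = mse(model, train_x, train_y)
    test_err = mse(model, test_x, test_y)
    results[name] = (train_err, test_err)
    print(f"{name:22s} train MSE={train_err:.2f}  test MSE={test_err:.2f}")
```

The telltale sign of overfitting is the gap between low training error and higher test error; underfitting shows up as high error on both.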

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

Trustworthy AI Alone is Not Enough

Our Partnerships Manager, Connor Wright, has co-edited a book on the subject of AI ethics. Take a look below at what the book is about and visit the link to grab a copy for yourself!

The aim of this book is to make accessible to both a general audience and policymakers the intricacies involved in the concept of trustworthy AI. In this book, we address the issue from philosophical, technical, social, and practical points of view. We conclude our work by advocating for the virtue ethics approach to AI, which we view as a humane and comprehensive approach to trustworthy AI that can accommodate the pace of technological change.

To delve deeper, grab a copy here.

💡 In case you missed it:

Understanding the Effect of Counterfactual Explanations on Trust and Reliance on AI for Human-AI Collaborative Clinical Decision Making

Although advanced artificial intelligence (AI) and machine learning (ML) models are increasingly being explored to assist various decision-making tasks (e.g., health, bail decisions), users might place too much trust in AI outputs, even wrong ones. This paper explores the effect of counterfactual explanations on users’ trust and reliance on AI during a clinical decision-making task.

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.