AI Ethics Brief #143: Managing AI ethics staff, tackling anthropomorphization, tyranny of the majority, plagiarism detection tools, and more.

What could X/Twitter have done better from a platform governance perspective to limit the spread of Taylor Swift deepfakes?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week, we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for the price of a couple of ☕.

If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

This week’s overview:

🙋 Ask an AI Ethicist:

How to empower newly hired staff members in AI ethics who have thus far struggled to integrate well with the rest of the product and design teams?

✍️ What we’re thinking:

Normal accidents, artificial life, and meaningful human control

🤔 One question we’re pondering:

What could X/Twitter have done better from a platform governance perspective to limit the spread of Taylor Swift deepfakes?

🪛 AI Ethics Praxis: From Rhetoric to Reality

How can we minimize churn and retain staff in AI ethics teams?

🔬 Research summaries:

Moral Machine or Tyranny of the Majority?

Transferring Fairness under Distribution Shifts via Fair Consistency Regularization

Artificial intelligence and biological misuse: Differentiating risks of language models and biological design tools

📰 Article summaries:

Plagiarism Detection Tools Offer a False Sense of Accuracy – The Markup

AI will make scam emails look genuine, UK cybersecurity agency warns

Measuring Justice: Field Notes on Algorithmic Impact Assessments | by Tamara Kneese | Data & Society: Points | Jan, 2024 | Medium

📖 Living Dictionary:

What is GIGO?

🌐 From elsewhere on the web:

Navigating the AI Frontier: Tackling anthropomorphisation in generative AI systems

💡 ICYMI

Towards Environmentally Equitable AI via Geographical Load Balancing

🚨 Google’s AI Ethics team changes and a teachable moment from the Taylor Swift deepfakes: here’s our quick take on what happened last week.

Google’s AI Ethics Team Restructuring

Google announced changes to its Responsible Innovation team, a key internal watchdog for AI ethics within the company. The team, known for reviewing new AI products for compliance with Google's responsible AI development rules, lost its leader as well as one of its most influential members, Sara Tangdall, who moved to Salesforce. The restructuring raises questions about Google's commitment to developing AI responsibly, even though CEO Sundar Pichai has named responsible AI development a priority for 2024.

A teaching moment from the Taylor Swift Deepfakes

An article discussed how Taylor Swift's experience with deepfakes could serve as a valuable teaching moment for students to examine AI ethics. It emphasized the importance of educating students about the ethical implications of AI technologies like deepfakes, which can spread misinformation and influence public opinion. This initiative underscores the need for critical thinking and ethical considerations in the digital age, especially among younger generations.

Did we miss anything?

🙋 Ask an AI Ethicist:

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours, and we’ll answer it in the upcoming editions.

Here are the results from the previous edition for this segment:

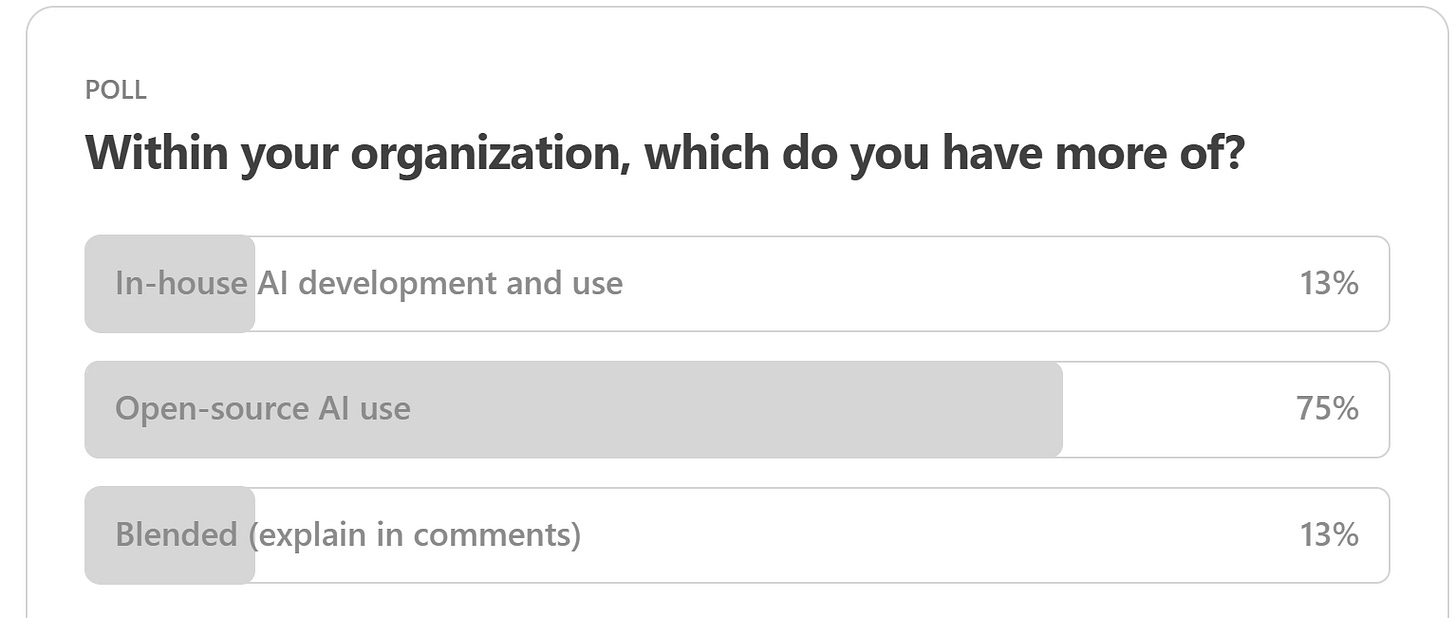

The results from last week’s poll aren’t all that surprising given the ease of use of OSS AI systems and the lower costs that come from pretrained models, especially since these models are generally quite performant and, with little effort (in the form of prompt engineering), can yield results tailored to specific use cases.

Moving on to the reader question for this week, K.R. writes to us asking about how to empower his newly hired staff members in AI ethics who have thus far struggled to integrate well with the rest of the product and design teams given the legacy nature of their organization.

This question is particularly relevant (given MAIEI’s work helping organizations tackle RAI program implementation), especially in cases where AI use and adoption is itself new and now comes with added expectations of responsible AI. Here are a few steps, based on our work with organizations in North America, that could help:

Clear Role Definition: Clearly define the roles and responsibilities of AI ethics staff. This helps in setting realistic expectations and provides a concrete understanding of how their work aligns with the organization's goals.

Inclusive Decision-Making: Include AI ethics staff in key decision-making processes, especially those related to AI projects. This not only leverages their expertise but also signals the value the organization places on ethical considerations.

Cross-Functional Collaboration: Facilitate collaboration between AI ethics staff and other departments such as IT, legal, and operations. This fosters a holistic approach to AI implementation and helps in embedding ethical considerations throughout the organization.

Education and Training: Offer regular education and training sessions for both the AI ethics staff and other employees. For the ethics staff, focus on the latest industry developments and organizational procedures. For other employees, focus on the importance of AI ethics and how to incorporate ethical considerations in their work.

Supportive Culture: Cultivate a culture that values and respects ethical considerations. This can be achieved through leadership endorsement, regular communication about the importance of AI ethics, and recognizing the contributions of AI ethics staff.

Resources and Tools: Provide the necessary resources and tools for AI ethics staff to perform their duties effectively. This includes access to relevant data, analytical tools, and opportunities for professional development.

Performance Metrics: Develop specific performance metrics for AI ethics initiatives. This helps in measuring the impact of their work and aligning their objectives with business outcomes.

Feedback Loop: Establish a feedback loop where AI ethics staff can report challenges and successes, and receive constructive feedback. This continuous dialogue ensures that their role remains relevant and adaptive to organizational needs.

Has your organization recently hired staff to implement responsible AI? If so, what challenges have you faced in integrating them into the rest of the organization? Please let us know! Share your thoughts with the MAIEI community:

✍️ What we’re thinking:

Normal accidents, artificial life, and meaningful human control

Lines are blurring between natural and artificial life, and we’re facing hard questions about maintaining meaningful human control (MHC) in an increasingly complex and risky environment.

To delve deeper, read the full article here.

🤔 One question we’re pondering:

With the fallout from the Taylor Swift deepfakes continuing into the past week, what could X (formerly Twitter) have done better in terms of platform governance to limit the spread of the objectionable content (besides, of course, not having fired its content moderation teams)?

We’d love to hear from you and share your thoughts with everyone in the next edition:

🪛 AI Ethics Praxis: From Rhetoric to Reality

With a lot of turnover happening in AI ethics teams across many organizations (see the recent Google AI Ethics team restructuring as an example), it might be time for the AI ethics community to work more closely with business leaders so that we minimize this churn and allow for long-term solutions to be built that actually achieve the goals of responsible AI in practice.

Here are some pieces of advice that we’ve offered to organizations (both public and private) over the past couple of years that we’ve found to work particularly well:

Clear Career Pathways: Develop well-defined career progression paths for AI ethics roles. This includes opportunities for advancement, skill development, and clear role delineation. Employees are more likely to stay when they see a future within the organization.

Empowerment and Influence: Ensure that AI ethics staff have a genuine influence on projects and decisions. This can be achieved by integrating them into project teams, giving them decision-making authority, and ensuring their recommendations are considered and respected.

Supportive Work Environment: Foster an environment that values ethical considerations and promotes open dialogue about ethical dilemmas. This includes support from top management, regular training on ethical issues, and a culture that encourages raising and discussing ethical concerns.

Interdisciplinary Collaboration: Encourage collaboration between AI ethics staff and other departments. This not only enriches the understanding of ethical issues across the organization but also demonstrates the integral role of AI ethics in all aspects of operation.

Regular Feedback and Recognition: Implement regular performance reviews and feedback sessions, acknowledging the contributions of AI ethics staff. Recognition of their work both internally and externally can greatly improve job satisfaction.

Ethical Leadership: Leadership should actively demonstrate a commitment to ethics in AI. This sets a tone at the top that can permeate through the organization, validating the work of AI ethics professionals.

Community Building: Foster a sense of community among AI ethics professionals, both within and outside the organization. This can be through regular meetups, forums, or working groups, where they can share experiences and best practices.

You can either click the “Leave a comment” button below or send us an email! We’ll feature the best response next week in this section.

🔬 Research summaries:

Artificial intelligence and biological misuse: Differentiating risks of language models and biological design tools

Should ChatGPT be able to give you step-by-step instructions to create the next pandemic virus? As artificial intelligence tools like ChatGPT become more advanced, they may lower barriers to biological weapons and bioterrorism. This article differentiates between the impacts of language models and AI tools trained on biological data and proposes how these risks may be mitigated.

To delve deeper, read the full summary here.

Transferring Fairness under Distribution Shifts via Fair Consistency Regularization

This paper addresses the issue of fairness violations in machine learning models when they are deployed in environments different from their training grounds. A practical algorithm with fair consistency regularization as the key component is proposed to ensure model fairness under distribution shifts.

To delve deeper, read the full summary here.

Moral Machine or Tyranny of the Majority?

Given the increased application of AI and ML systems in situations that may involve tradeoffs between undesirable outcomes (e.g., whose lives to prioritize in the face of an autonomous vehicle accident, which loan applicant(s) can receive a life-changing loan), some researchers have turned to stakeholder preference elicitation strategies. By eliciting and aggregating preferences to derive ML models that agree with stakeholders’ input, these researchers hope to create systems that navigate ethical dilemmas in accordance with stakeholders’ beliefs. However, in our paper, we demonstrate via a case study of a popular setup that preference aggregation is a nontrivial problem, and if a proposed aggregation method is not robust, applying it could yield results such as the tyranny of the majority that one may want to avoid.
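As a rough illustration of the failure mode described above (and not the setup studied in the paper), the sketch below averages hypothetical stakeholder scores and picks the top-scoring option. The function name `aggregate_by_mean`, the 60/40 population split, and the scores themselves are all assumptions made up for this example.

```python
from collections import Counter


def aggregate_by_mean(preferences):
    """Average every stakeholder's score per option and return the top option."""
    options = preferences[0].keys()
    n = len(preferences)
    mean_scores = {o: sum(p[o] for p in preferences) / n for o in options}
    return max(mean_scores, key=mean_scores.get)


# Hypothetical population: 6 stakeholders favor option "A", 4 favor option "B".
majority = [{"A": 1.0, "B": 0.0}] * 6
minority = [{"A": 0.0, "B": 1.0}] * 4
population = majority + minority

# Apply the same aggregation rule to 100 identical dilemmas.
decisions = Counter(aggregate_by_mean(population) for _ in range(100))
print(decisions)
```

In this toy setup, a group that makes up 60% of the population decides every one of the dilemmas, which is one simple way a tyranny of the majority can arise from a naive aggregation rule.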

To delve deeper, read the full summary here.

📰 Article summaries:

Plagiarism Detection Tools Offer a False Sense of Accuracy – The Markup

What happened: Katherine Pickering Antonova, upon becoming a history professor, embraced plagiarism detection software like Turnitin and SafeAssign, hoping they would streamline grading. However, her initial use revealed a shock: most of her students' essays were flagged for potential plagiarism. She soon realized these tools merely identified matching text, not necessarily plagiarism, leading to false positives and wasted time.

Why it matters: Plagiarism checkers, integrated into educational systems like The City University of New York's, are default mechanisms for scanning student submissions. Despite promises of accuracy, they often produce false flags and consume excessive faculty time. Even respected scholars like Claudine Gay face accusations based on flawed detections, raising concerns about the fairness and efficacy of these tools. Moreover, overreliance on them disproportionately affects students at less selective institutions.

Between the lines: Plagiarism detection tools operate on rudimentary algorithms that prioritize identifying text similarities, often leading to imprecise results. The case of Claudine Gay exemplifies how flawed detections can harm reputations and careers. While some educators abandon these tools due to inefficiency, their continued use perpetuates a divide between those subjected to scrutiny and those exempt. Additionally, quantifying plagiarism percentages can oversimplify assessment, posing a risk to academic integrity and student evaluation.
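To make the "matching text is not plagiarism" point concrete, here is a minimal sketch of a similarity score based on shared word trigrams. It is an assumption for illustration only: the article does not describe how Turnitin or SafeAssign actually compute their scores, and the function names and example sentences are hypothetical.

```python
import re


def word_ngrams(text, n=3):
    """Return the set of lowercase word n-grams in a text, ignoring punctuation."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_score(submission, source, n=3):
    """Fraction of the submission's n-grams that also appear in the source."""
    sub = word_ngrams(submission, n)
    if not sub:
        return 0.0
    return len(sub & word_ngrams(source, n)) / len(sub)


# Hypothetical source text and a student sentence that quotes it WITH a citation.
source = "The revolution of 1917 changed the course of Russian history"
submission = (
    "Smith argues that the revolution of 1917 changed the course of "
    "Russian history (Smith 2001)."
)

score = overlap_score(submission, source)
print(f"similarity: {score:.0%}")
```

Under this naive measure, more than half of the properly cited sentence counts as "matching", even though nothing was plagiarized. A score like this says only that text overlaps, so a human still has to judge whether the overlap is a quotation, a common phrase, or actual copying.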

AI will make scam emails look genuine, UK cybersecurity agency warns

What happened: The UK's National Cyber Security Centre (NCSC) issued a warning about the increasing difficulty in distinguishing genuine emails from those sent by scammers, particularly phishing messages asking for password resets. They attributed this challenge to the sophistication of AI tools, such as generative AI, which can produce convincing text. The NCSC emphasized that these AI advancements could enable amateur cybercriminals to conduct sophisticated phishing attacks.

Why it matters: The NCSC's warning underscores the imminent threat posed by AI-driven phishing attacks, which could escalate cyber-attacks and amplify their impact over the next few years. Generative AI and large language models are expected to complicate efforts to identify various types of attacks, including spoof messages and social engineering tactics. Ransomware attacks, already on the rise, are also expected to increase in frequency and sophistication, posing significant risks to institutions and individuals alike.

Between the lines: While AI presents challenges, it also offers defensive capabilities with the potential to detect and mitigate cyber threats. The UK government introduced new guidelines to enhance businesses' resilience against ransomware attacks, emphasizing the importance of prioritizing information security. However, cybersecurity experts warn that stronger action is needed, advocating for fundamental changes in how ransomware threats are addressed, including stricter regulations on ransom payments and abandoning unrealistic retaliation strategies.

Measuring Justice: Field Notes on Algorithmic Impact Assessments | by Tamara Kneese | Data & Society: Points | Jan, 2024 | Medium

What happened: Algorithmic impact assessments (AIAs) are gaining traction, with legislation in the EU, Brazil, Canada, and the US possibly mandating their use. AIAs, rooted in various impact assessments like human rights and environmental impact assessments, aim to evaluate how algorithms affect people's lives and access to essential services. However, AIAs are not yet mandatory despite their potential, with few public examples. Organizations like Data & Society's AIMLab are actively exploring AIAs' implications and methodologies.

Why it matters: The rise of AIAs is critical for holding technology accountable, especially as AI's societal impact becomes more pronounced. With climate change concerns and the proliferation of generative AI, assessing technology's environmental and social effects is paramount. However, challenges persist in measuring impacts, determining when assessments should occur, and ensuring inclusive participation from affected communities. Balancing efficiency with justice-oriented assessments is essential to address the complex realities of algorithmic impacts.

Between the lines: The practical implementation of AIAs raises nuanced questions about measurement methodologies, assessment timing, and community involvement. There's a need to establish regulations, standards, and industry-wide practices to assess sustainability and ethical impacts. Moreover, incorporating marginalized communities from the outset and addressing power differentials are crucial for meaningful participation. While some organizations prioritize community engagement, challenges like unpaid labor and decision-making biases remain unresolved, highlighting the ongoing complexity of AIAs' execution.

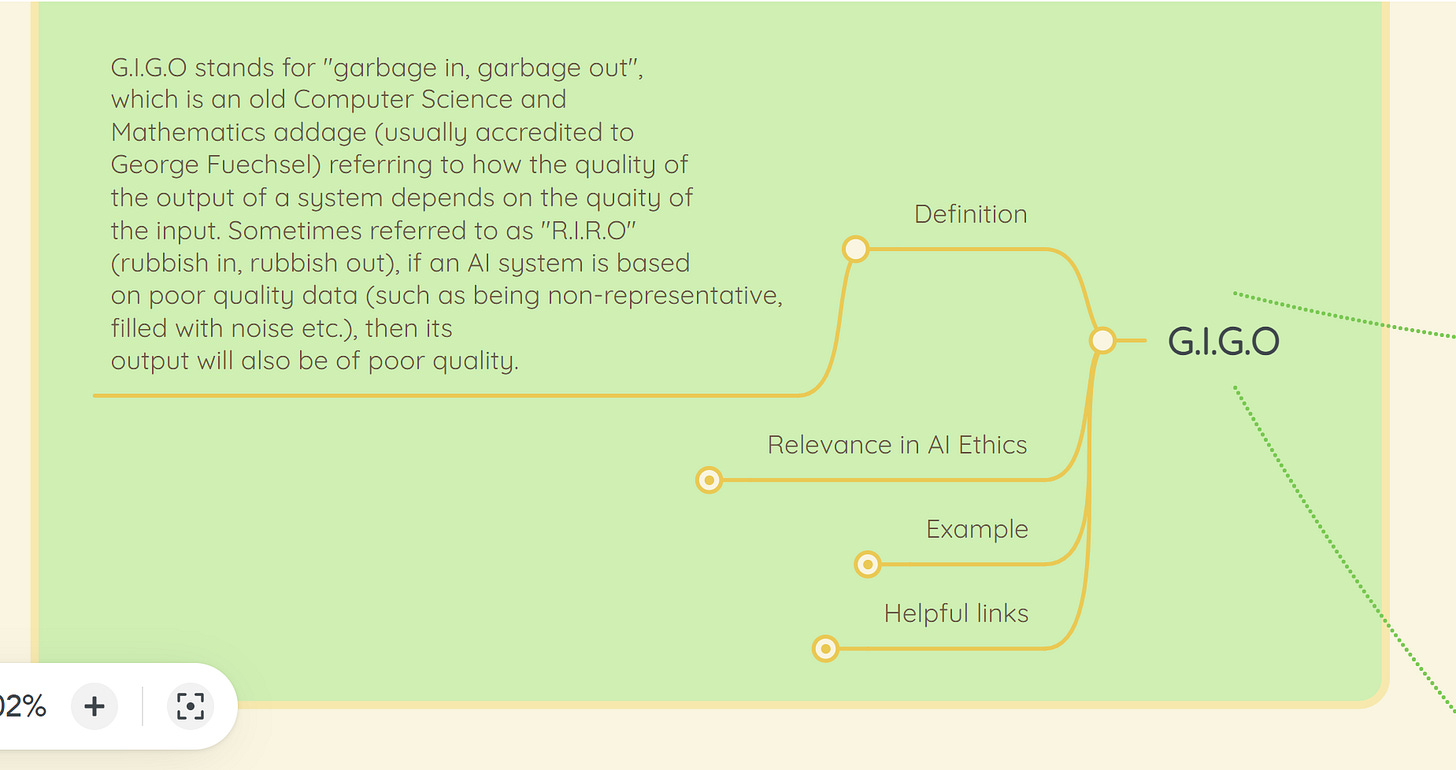

📖 From our Living Dictionary:

What is GIGO?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

Navigating the AI Frontier: Tackling anthropomorphisation in generative AI systems

Gaining a better understanding of the dynamics and underlying assumptions will help us steer the future toward alignment with our cultural aspirations and values, localised and adapted to meet differing hopes and fears. Anthropomorphisation in AI goes beyond mere user perception; it influences how these systems are designed, marketed, and integrated into societal structures. The tendency to perceive AI as human-like can lead to unrealistic expectations, ethical dilemmas, and even legal challenges.

To delve deeper, read more details here.

💡 In case you missed it:

Towards Environmentally Equitable AI via Geographical Load Balancing

The exponentially growing demand for AI has created an enormous appetite for energy and a negative environmental impact. Despite recent efforts to make AI more environmentally friendly, environmental inequity — the fact that AI’s environmental footprint is disproportionately higher in certain regions than in others — has unfortunately emerged, raising social-ecological justice concerns. To achieve environmentally equitable AI, we propose equity-aware geographical load balancing (GLB) to ensure fair distribution of AI’s environmental costs across different regions.
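The contrast between ordinary and equity-aware load balancing can be sketched with a toy greedy router. This is not the optimization proposed in the paper; the region names, the per-job footprint numbers, and both function names are assumptions chosen for illustration.

```python
def route_cheapest(num_jobs, footprint_per_job):
    """Send every job to the single region with the lowest per-job footprint."""
    best = min(footprint_per_job, key=footprint_per_job.get)
    totals = {region: 0.0 for region in footprint_per_job}
    totals[best] = num_jobs * footprint_per_job[best]
    return totals


def route_equity_aware(num_jobs, footprint_per_job):
    """Greedy sketch: place each job where the resulting regional total is smallest."""
    totals = {region: 0.0 for region in footprint_per_job}
    for _ in range(num_jobs):
        region = min(totals, key=lambda r: totals[r] + footprint_per_job[r])
        totals[region] += footprint_per_job[region]
    return totals


# Hypothetical environmental footprint (arbitrary units) of one job per region.
footprint_per_job = {"north": 1.0, "south": 1.2, "east": 1.5}

cheapest = route_cheapest(10, footprint_per_job)
equitable = route_equity_aware(10, footprint_per_job)
print("cheapest-only:", cheapest)
print("equity-aware: ", equitable)
```

In this example the cheapest-only policy concentrates the entire footprint in one region, while the greedy equity-aware policy spreads it out and lowers the worst regional burden, at the price of a somewhat higher total footprint. A real system would also have to account for capacity, latency, and time-varying energy and water conditions.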

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.