AI Ethics Brief #118: Responsible AI Licenses, normal accidents, consent in voice assistants, move fast and fix things, and more.

What is the best way to educate policymakers to ask more meaningful questions of BigTech when it comes to harm from AI systems?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can, to support us: become a paying subscriber for the price of a couple of ☕.

If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

This week’s overview:

🙋 Ask an AI Ethicist:

With the vote on the EU AI Act, auditing and conformity assessments will become increasingly important. To what extent will this alter market structures in favor of larger companies who have resources to meet these requirements?

✍️ What we’re thinking:

Normal accidents, artificial life, and meaningful human control

Responsible AI Licenses: social vehicles toward decentralized control of AI

🤔 One question we’re pondering:

What is the best way to educate policymakers to ask more meaningful questions of BigTech when it comes to harm from AI systems?

🔬 Research summaries:

Responsible AI In Healthcare

Can You Meaningfully Consent in Eight Seconds? Identifying Ethical Issues with Verbal Consent for Voice Assistants

DC-Check: A Data-Centric AI checklist to guide the development of reliable machine learning systems

📰 Article summaries:

An algorithm intended to reduce poverty in Jordan disqualifies people in need | MIT Technology Review

Rethinking AI benchmarks: A new paper challenges the status quo of evaluating artificial intelligence | VentureBeat

Suicide Hotlines Promise Anonymity. Dozens of Their Websites Send Sensitive Data to Facebook | The Markup

📖 Living Dictionary:

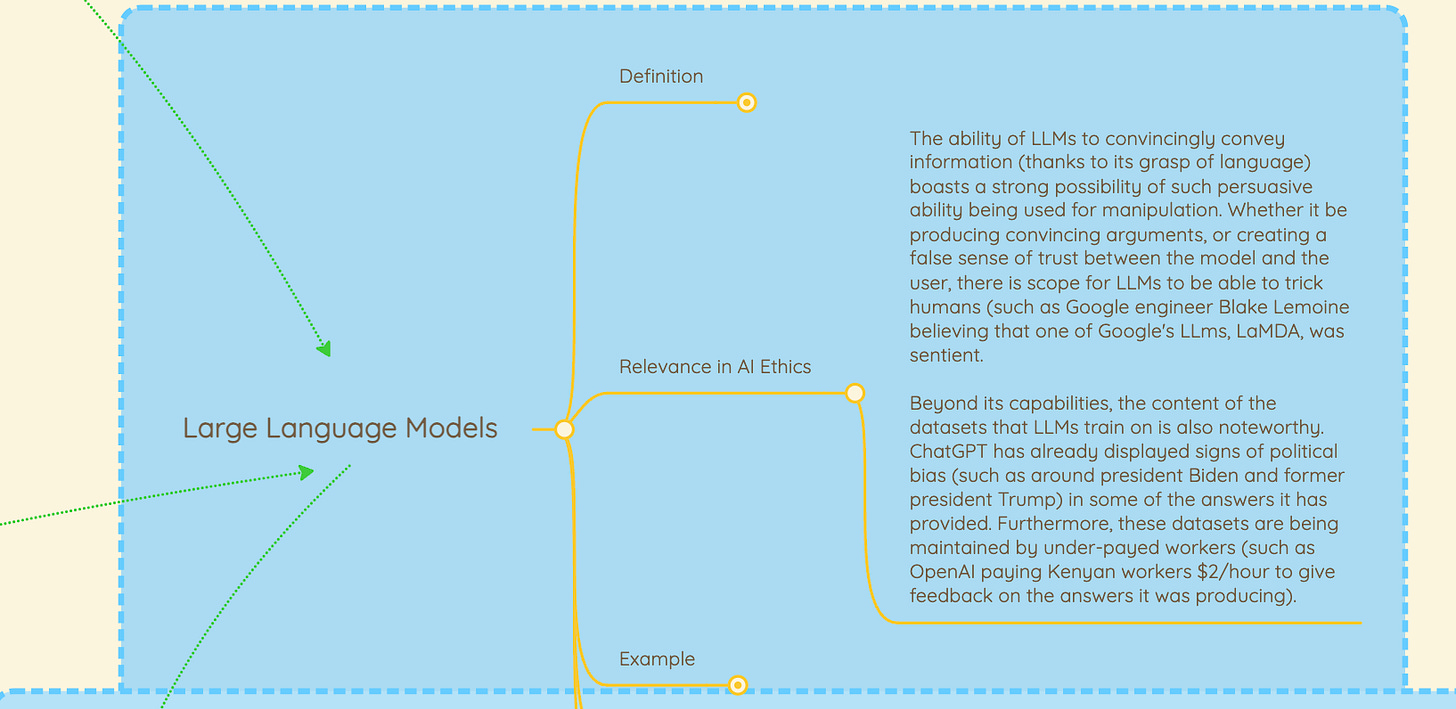

What is the relevance of LLMs in AI ethics?

🌐 From elsewhere on the web:

Move Fast and Fix Things

💡 ICYMI

Subreddit Links Drive Community Creation and User Engagement on Reddit

But first, our call-to-action this week:

Generated using DALL-E 2 with the prompt “isometric scene with high resolution in pastel colors with flat icon imagery depicting researchers reading books in a university library”

We are looking for summer book recommendations published in the last 6-12 months that touch on Responsible AI, especially those that highlight less-discussed aspects of the Responsible AI conversation, such as the environmental impacts of AI, rather than more familiar issues such as bias and privacy.

🙋 Ask an AI Ethicist:

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours and we’ll answer it in the upcoming editions.

With the vote on the EU AI Act, auditing and conformity assessments will become increasingly important. To what extent will this alter market structures in favor of larger companies who have resources to meet these requirements?

What a tremendous achievement it is to have a concrete regulation that has been passed in the EU! It will certainly have an impact around the world, moderated perhaps by the Brussels Effect. Governance can become an opportunity for companies to accelerate innovation and profitability as I’ve argued here. Yet, it also comes with potentially market-structure-altering effects, in particular for low-resourced SMEs and startups, who might find themselves in a tight spot. Allocating resources towards meeting EU AI Act requirements (where applicable) will become a price to enter the market (a warranted one when it comes to end-user and ecosystem well-being).

In the short run, this will favor larger organizations that have resources at the ready to deploy towards meeting these requirements. Over time, with some deliberate planning and calls from the AI ecosystem, compliance mechanisms and tooling made available as open-source software (OSS) might enable organizations on the other end of the resource spectrum to participate equally in the ecosystem, ultimately enriching the choices that end users have in AI-enabled products/services. Hopefully, it will also promote a race to the top in terms of ethical-by-design approaches being adopted more broadly.

What do you believe will be the impact of the EU AI Act on the market structure for AI-enabled products? Share your thoughts with the MAIEI community:

✍️ What we’re thinking:

Normal accidents, artificial life, and meaningful human control

Lines are blurring between natural and artificial life, and we’re facing hard questions about maintaining meaningful human control (MHC) in an increasingly complex and risky environment.

Ever heard of a “normal accident?” Stanford University and University of Milan researchers found that complex systems with tightly coupled components suffer from major accidents if they run long enough. At a certain level of complexity, these accidents – which arise from multiple trivial causes – are unavoidable.

To delve deeper, read the full article here.

Responsible AI Licenses: social vehicles toward decentralized control of AI

This article explores a new licensing paradigm in the AI space – Open & Responsible AI Licenses (Open RAIL) – from a social perspective, treating it as a social institution with the potential to set future community norms for respecting and responsibly sharing AI artifacts.

To delve deeper, read the full article here.

🤔 One question we’re pondering:

What is the best way to educate policymakers to ask more meaningful questions of BigTech when it comes to harm from AI systems?

We’ve seen many gaffes in the past when it comes to policymakers trying to understand how technology products/services affect their constituents. It seems that things are getting better, e.g., with the recent OpenAI hearing. This raises an important question about how we can continue on that path of improvement, given the critical role that policymakers play in ensuring that companies are held accountable and that policymaking adopts a well-informed perspective.

Organizations like Stanford HAI offer some educational workshops for Washington, DC. Are there other initiatives and resources that do a good job?

We’d love to hear from you and share your thoughts back with everyone in the next edition:

🔬 Research summaries:

Responsible AI In Healthcare

This article discusses open problems, implemented solutions, and future research in responsible AI in healthcare. In particular, we illustrate two main research themes related to the work of two laboratories within the Department of Informatics, Systems, and Communication (DISCo) at the University of Milano-Bicocca. The problems addressed concern uncertainty in medical data and machine advice, and online health information disorder.

To delve deeper, read the full summary here.

Can You Meaningfully Consent in Eight Seconds? Identifying Ethical Issues with Verbal Consent for Voice Assistants

Instead of requiring users to switch to companion apps, domestic voice assistants (VAs) like Alexa are beginning to let people give verbal consent to data sharing. This work describes ways that current implementations break key informed consent principles and outlines open questions for the research and developer communities around the future development of verbal consent.

To delve deeper, read the full summary here.

DC-Check: A Data-Centric AI checklist to guide the development of reliable machine learning systems

Revolutionary advances in machine learning (ML) algorithms have captured the attention of many. However, to truly realize the potential of ML in real-world settings, we need to consider many aspects beyond new model architectures. In particular, a critical lens on the data used to train the ML algorithms is a crucial mindset shift. This paper introduces a data-centric AI framework called DC-Check, as an actionable checklist for practitioners and researchers to elicit such data-centric considerations through the different stages of the ML pipeline: Data, Training, Testing, and Deployment. DC-Check is the first standardized framework to engage with data-centric AI and aims to promote thoughtfulness and transparency before system development.

To delve deeper, read the full summary here.

📰 Article summaries:

An algorithm intended to reduce poverty in Jordan disqualifies people in need | MIT Technology Review

What happened: An investigation by Human Rights Watch reveals that an algorithmic system called Takaful, funded by the World Bank to determine eligibility for financial assistance in Jordan, is likely excluding people who should qualify. The algorithm ranks families based on socioeconomic indicators, but applicants argue that it oversimplifies their economic situations and can inaccurately or unfairly assess their poverty level. The algorithmic system has cost over $1 billion, and similar projects are being funded in other countries.

Why it matters: The report highlights the need for greater transparency in government programs that utilize algorithmic decision-making. The findings reveal distrust and confusion among the families interviewed regarding the ranking methodology. Experts emphasize the importance of scrutinizing algorithms in welfare systems to avoid excluding those in need and to ensure fairness.

Between the lines: This case raises concerns about the negative impact of poorly designed algorithms restricting access to necessary funds. The World Bank, which funded the program, plans to provide more information in July 2023. While data technology and AI-enabled systems can enhance efficiency and fairness in social protection, this incident underscores the need for careful implementation and consideration of the specific contexts and biases involved.

Rethinking AI benchmarks: A new paper challenges the status quo of evaluating artificial intelligence | VentureBeat

What happened: Artificial intelligence (AI) systems like GPT-4 and PaLM 2 have surpassed human performance on various tasks, as measured by benchmarks. However, this paper challenges the validity of these benchmarks, stating that they fail to capture the true capabilities and limitations of AI systems, potentially leading to false conclusions about their safety and reliability.

Why it matters: The paper highlights the importance of understanding the capabilities and limitations of AI systems for their development to be safe and fair. While convenient, the use of aggregate metrics in evaluating AI systems lacks transparency and detail on critical tasks. Without access to granular data, independent researchers will struggle to verify or replicate the results published in papers.

Between the lines: To address these challenges, the paper suggests guidelines such as publishing granular performance reports and developing new benchmarks focusing on specific capabilities. However, the authors caution that this transparency may encourage companies to downplay the limitations and failures of their models, selectively showcasing favorable evaluation results to create the impression of superior capability and reliability.
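To make the point about aggregate metrics concrete, here is a minimal sketch in Python. The task names and counts are hypothetical, invented purely for illustration, and are not taken from the paper. It shows how a single headline accuracy can look strong while a granular, per-task report exposes a capability on which the model mostly fails.

```python
from collections import defaultdict

# Hypothetical per-instance results: (task name, whether the answer was correct).
# These numbers are made up for illustration only.
results = (
    [("arithmetic", True)] * 95 + [("arithmetic", False)] * 5
    + [("negation", True)] * 2 + [("negation", False)] * 8
)

def aggregate_accuracy(rows):
    """Single headline number: fraction of all instances answered correctly."""
    return sum(ok for _, ok in rows) / len(rows)

def per_task_accuracy(rows):
    """Granular report: accuracy computed separately for each task."""
    totals = defaultdict(lambda: [0, 0])  # task -> [correct, total]
    for task, ok in rows:
        totals[task][0] += ok
        totals[task][1] += 1
    return {task: correct / total for task, (correct, total) in totals.items()}

overall = aggregate_accuracy(results)
by_task = per_task_accuracy(results)

print(f"aggregate accuracy: {overall:.2f}")
for task, acc in by_task.items():
    print(f"  {task}: {acc:.2f}")
```

In this toy setup the large, easy task dominates the average, so the aggregate hides the weak result on the small task. That is the kind of detail an independent researcher could only check with access to instance-level results.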

Suicide Hotlines Promise Anonymity. Dozens of Their Websites Send Sensitive Data to Facebook | The Markup

What happened: Websites providing mental health crisis resources have been found to send sensitive visitor data to Facebook without visitors' knowledge. These websites, associated with the national mental health crisis hotline, transmit data through the Meta Pixel tool, including signals when visitors attempt to call for emergencies. Sometimes, hashed names and email addresses, which can be easily unscrambled, are also sent to Facebook.

Why it matters: Facebook can use the data collected by the Meta Pixel to link website visitors to their accounts, and the data is collected even from visitors who don't have a Facebook account. Hashing offers little protection, since the names and email addresses can be easily unscrambled. The incident raises concerns about privacy and the potential misuse of sensitive information by Facebook/Meta, especially considering the lack of oversight and transparency in how the data is cataloged and used.

Between the lines: The Meta Pixel is primarily used for tracking and targeted advertising on Facebook, but its presence on websites providing mental health crisis resources poses a significant privacy risk. Organizations and businesses may unknowingly send sensitive information to Facebook through the pixel, including data related to health and finance, despite Meta's policies prohibiting such practices. The discovery has led to changes in how some organizations use the pixel, legal repercussions for Meta, and questions from lawmakers regarding data handling practices.
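Why would a hashed email address be easy to unscramble? The sketch below gives one illustration, under stated assumptions: the hash is an unsalted SHA-256 of a trimmed, lowercased address, and whoever receives it holds a list of candidate addresses. The summary above does not specify the hash function or the normalization, and the addresses here are invented. Because the same input always yields the same hash, a simple lookup table is enough to recover the original.

```python
import hashlib

def normalize_and_hash(email: str) -> str:
    """Assumed scheme: trim whitespace, lowercase, then unsalted SHA-256."""
    normalized = email.strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

# The value a website might transmit instead of the raw address (hypothetical).
observed = normalize_and_hash("Jane.Doe@example.com ")

# A party that already holds a list of addresses can hash each one
# and look up the observed value; no "decryption" is required.
candidates = ["john.smith@example.com", "jane.doe@example.com", "a@example.org"]
lookup = {normalize_and_hash(addr): addr for addr in candidates}

recovered = lookup.get(observed)
print(recovered)
```

The hash only hides an address from someone who cannot guess it. A platform with a large user database can match it directly.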

📖 From our Living Dictionary:

What is the relevance of LLMs in AI ethics?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

“There are those that look at things the way they are, and ask why? I dream of things that never were, and ask why not?” – George Bernard Shaw

There is a tension between those who cling to the status quo and innovators who constantly chase new possibilities. History has shown us that the latter will dominate the marketplace, but for how long? In recent years, leading innovative companies have made headlines for disseminating scientific misinformation, eliminating teams devoted to harm prevention, releasing politically tone-deaf messages, and many other instances of recklessness. Coupled with the introduction of artificial intelligence and other emerging technologies, serious missteps in ethics, governance, and culture have caused the schism between the agents of change and opponents of it to widen substantially. These ethical blunders, plus outright paranoia, have led to cries for stricter regulation and for companies to pull back from exploration. We believe that the answer isn’t to stop innovating or to give in to the naysayers of progress for fear of pitchforks. The best way to right our course and stay ahead of the unintended consequences of new technology is to let Purpose guide the way.

To delve deeper, read the full article here.

💡 In case you missed it:

Subreddit Links Drive Community Creation and User Engagement on Reddit

On Reddit, subreddit links are often used to directly reference another community. This use potentially drives traffic or interest to the linked subreddit. To understand and explore this phenomenon, we performed an extensive observational study. We found that subreddit links not only drive activity within referenced subreddits, but also contribute to the creation of new communities. These links therefore give users the power to shape the organization of content online.

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.

Who uses AI in the world: developed countries or developing countries? Can you give me some percentages on this subject?