AI Ethics Brief #120: Challenges in AI regulation, cognitive biases in XAI-assisted decision-making, debiasing vision-language models, and more.

How can we judge whether an algorithmic audit is comprehensive?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for the price of a couple of ☕.

If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

This week’s overview:

🙋 Ask an AI Ethicist:

How can we judge whether an algorithmic audit is comprehensive?

✍️ What we’re thinking:

ChatGPT is a single-player experience. That’s about to change.

A Look at the American Data Privacy and Protection Act

🤔 One question we’re pondering:

What are some countries that you’ve been tracking doing policymaking or other work in Responsible AI?

🔬 Research summaries:

How Cognitive Biases Affect XAI-assisted Decision-making: A Systematic Review

An error management approach to perceived fakeness of deepfakes: The moderating role of perceived deepfake targeted politicians’ personality characteristics

Why reciprocity prohibits autonomous weapons systems in war

📰 Article summaries:

The three challenges of AI regulation - Brookings

Rise of the robots raises a big question: what will workers do? - The Guardian

The people paid to train AI are outsourcing their work… to AI - MIT Technology Review

📖 Living Dictionary:

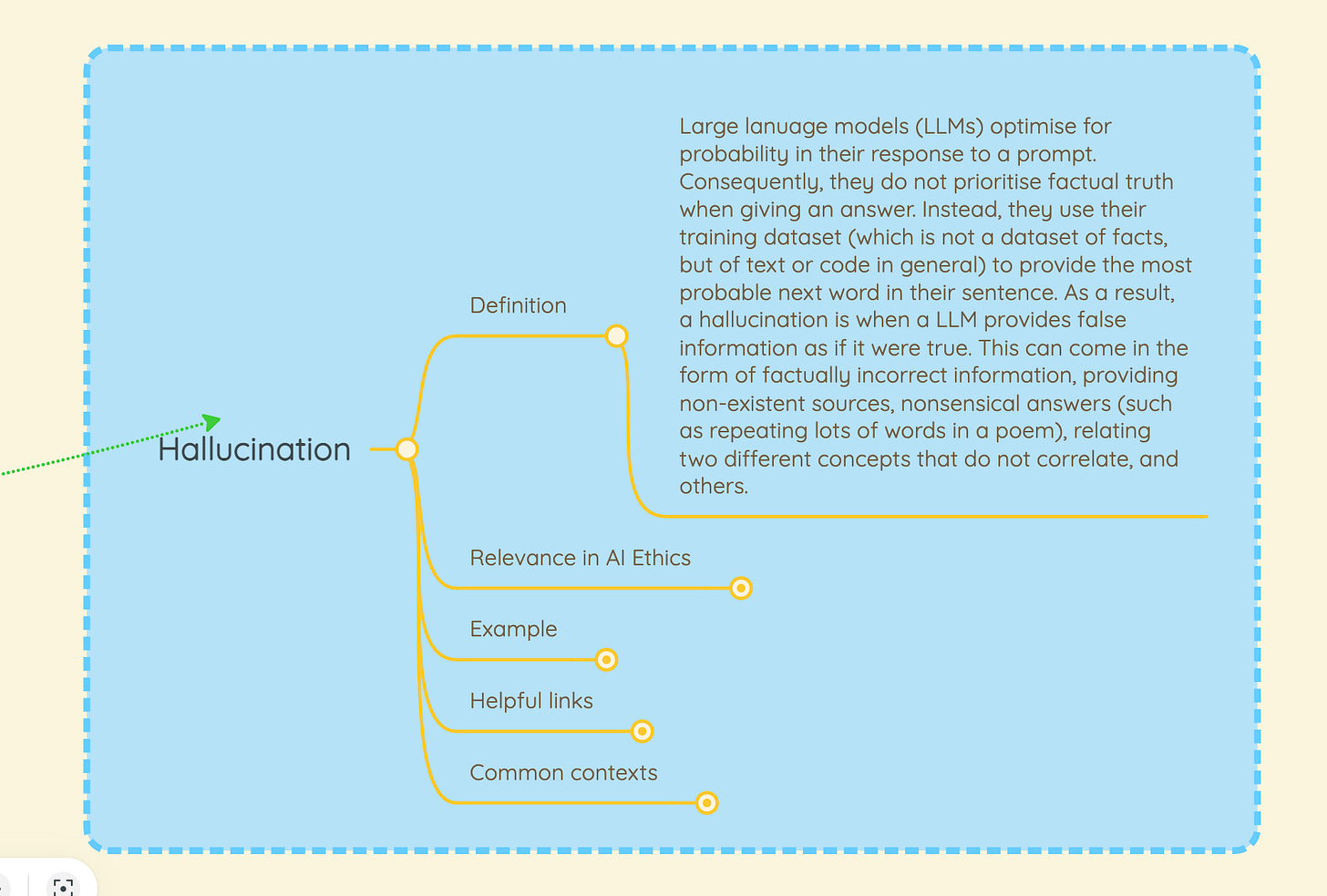

What is hallucination?

🌐 From elsewhere on the web:

Quand une « fausse » chanson devient virale

💡 In case you missed it:

A Prompt Array Keeps the Bias Away: Debiasing Vision-Language Models with Adversarial Learning

🙋 Ask an AI Ethicist:

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours and we’ll answer it in the upcoming editions.

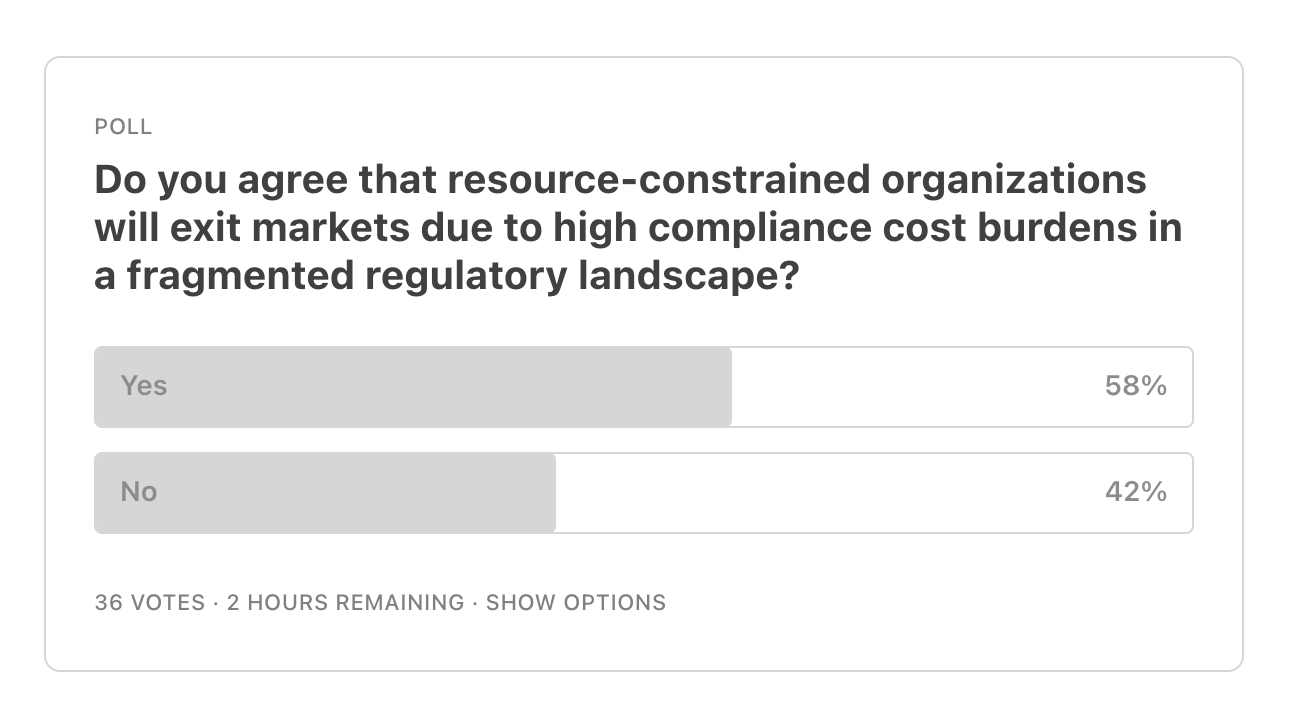

Here are last week’s results for this segment:

One of our readers posed this question to us over a live chat: “How can we judge whether an algorithmic audit is comprehensive?”

This is a very relevant question, given how much activity there is around algorithmic audits in the domain of Responsible AI. For the most part, framing an audit solely in terms of the algorithm already suggests that it will not be comprehensive.

We need to adopt a systems design thinking approach:

AI systems usually exist as part of larger products or services and interact within a social ecosystem characterized by cultural and contextual specificities.

Systems design thinking involves considering how components of an AI system interact with the environment in which they are deployed, rather than analyzing them in isolation.

This approach helps uncover feedback loops and externalities that could have ethical consequences, such as privacy violations or biases.

Drawing system diagrams that explicitly include external elements and identifying feedback loops can offer a more holistic view of the impacts.

The learning component of the AI system is crucial. Understanding how the system learns through interaction with the environment is key to building resilience, ensuring it remains within safe and reliable operational parameters.

An auditing approach that captures these points and, most importantly, trains auditors in both the technical and the social sciences is critical if the audit is to achieve the goals of Responsible AI.
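For readers who like to see ideas in code, the system-diagram suggestion above can be sketched as a small directed graph in which an edge means "influences", with a search for cycles standing in for the feedback-loop step. This is only an illustrative sketch: the component names (`training_data`, `regulator`, and so on) and the function `find_feedback_loops` are hypothetical and do not come from any particular auditing framework.

```python
from typing import Dict, List

# Hypothetical system diagram: each component maps to the components it influences.
# All names are illustrative assumptions, not taken from a real audit.
SYSTEM_DIAGRAM: Dict[str, List[str]] = {
    "training_data": ["model"],
    "model": ["recommendations"],
    "recommendations": ["user_behavior"],
    "user_behavior": ["training_data", "public_discourse"],
    "public_discourse": [],   # external element affected by the system
    "regulator": ["model"],   # external element that constrains the system
}


def find_feedback_loops(graph: Dict[str, List[str]]) -> List[List[str]]:
    """Return every simple cycle once, listed from its alphabetically smallest node."""
    loops: List[List[str]] = []

    def walk(start: str, node: str, path: List[str]) -> None:
        for nxt in graph.get(node, []):
            if nxt == start:
                # We are back where we began: the current path is a feedback loop.
                loops.append(path[:])
            elif nxt not in path and nxt > start:
                # Only visit nodes that sort after the start, so that each loop
                # is reported a single time (from its smallest node).
                walk(start, nxt, path + [nxt])

    for start in sorted(graph):
        walk(start, start, [start])
    return loops


if __name__ == "__main__":
    for loop in find_feedback_loops(SYSTEM_DIAGRAM):
        print(" -> ".join(loop + [loop[0]]))
```

In this toy diagram the only loop runs from the model through recommendations and user behavior back into the training data. That is the kind of path an auditor would want to examine for self-reinforcing bias, while `public_discourse` and `regulator` show how external elements can sit on the diagram without being part of a loop.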

What are some examples of algorithmic auditing approaches that resemble the one described here? Share your thoughts with the MAIEI community:

✍️ What we’re thinking:

ChatGPT is a single-player experience. That’s about to change.

We’re used to human teammates, with all their wonders and surprises. But, are we ready for machine teammates?

To delve deeper, read the full article series here.

A Look at the American Data Privacy and Protection Act

Data privacy is finally getting attention at the federal level in the US. This past summer, a bipartisan group of lawmakers drafted the American Data Privacy and Protection Act (ADPPA) to provide consumers with data privacy rights and protections. While the bill is unlikely to pass this session, there is interest at the federal level in codifying consumer data protections.

To delve deeper, read the full article here.

🤔 One question we’re pondering:

An ongoing discussion this week among MAIEI’s collaboration network was about under-recognized countries that are doing work in the domain of Responsible AI. Most coverage around the world has focused on efforts from the EU and the US, but work is also being done in China, Singapore, India, and Brazil, among other countries. What are some countries that you’ve been tracking doing policymaking or other work in Responsible AI?

We’d love to hear from you and share your thoughts back with everyone in the next edition:

🔬 Research summaries:

How Cognitive Biases Affect XAI-assisted Decision-making: A Systematic Review

The use of Explainable AI (XAI) technology is developing rapidly alongside claims that it will improve AI’s ethical and trustworthy characteristics in decision-making processes. This paper, however, reviews how human cognitive biases can affect, and be affected by, explainability systems. After a systematic review of the relationship between cognitive biases and XAI, the authors provide a roadmap for future XAI development that acknowledges and embraces human cognitive processes.

To delve deeper, read the full summary here.

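An error management approach to perceived fakeness of deepfakes: The moderating role of perceived deepfake targeted politicians’ personality characteristics

Does the trustworthiness of the politician who is the subject of a deepfake video affect how trustworthy we think the video is? Would a description accompanying the video, identifying it as a deepfake, help? This study explores just how challenging deepfakes can be, with the crux of its findings lying in the public’s perception of the politician.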

To delve deeper, read the full summary here.

Why reciprocity prohibits autonomous weapons systems in war

Conversations about, and the (likely) deployment of, autonomous weapons systems (AWS) in warfare have advanced considerably. This paper analyzes the morality of deploying AWS and ultimately argues for a categorical ban. It arrives at this conclusion without relying on hypothetical technological advances. Instead, it focuses on the relationship between humans and machines in the context of the jus in bello principles of international humanitarian law.

To delve deeper, read the full summary here.

📰 Article summaries:

The three challenges of AI regulation - Brookings

What happened: Several prominent AI leaders, including the CEOs of OpenAI, Microsoft, and Google, have called for government regulation of AI activities. Despite this initial support for regulation, disagreements and challenges have arisen, highlighting the complexity of implementing effective AI regulation. This article discusses three challenges for AI oversight: “dealing with the velocity of AI developments, parsing the components of what to regulate, and determining who regulates and how.”

Why it matters: Establishing rules and legal guardrails is crucial to prevent reckless practices in the corporate AI race. The current regulatory frameworks are insufficient and outdated, built on assumptions from the industrial era. Self-regulation by corporations is unlikely to address the risks associated with AI adequately, and there is a need for a specialized agency with expertise and agility to oversee AI developments.

Between the lines: The lack of comprehensive government regulations in the digital age has allowed dominant digital companies to shape the rules in their favor, particularly regarding data control and market dominance. The responsibility now lies in determining who will create AI policies, with a need for an expert agency that embraces new forms of oversight, including the use of AI itself. Regulation should focus on mitigating the effects of AI while avoiding stifling innovation, striking a balance between protecting the public interest and promoting investment.

Rise of the robots raises a big question: what will workers do? - The Guardian

What happened: The rapid advancements in AI, particularly the development of large language models like ChatGPT, have sparked discussions and concerns about the future of jobs. The increasing sophistication of robots and AI software has the potential to transform the economy and impact various roles beyond low-paid staff.

Why it matters: The outcome is not predetermined and will depend on how these technologies are implemented and on the policies put in place to support the transition to new jobs. Policymakers need to consider the impact on individuals and communities affected by automation and ensure there are opportunities for rewarding employment in emerging technologies.

Between the lines: The fear of mass job losses due to automation is growing, but it is not inevitable. The focus should be on adopting new technologies thoughtfully and implementing supportive policies. While robots may easily replace some jobs, policymakers must address the challenges faced by those most affected and create strategies to promote employment opportunities in emerging industries.

The people paid to train AI are outsourcing their work… to AI - MIT Technology Review

What happened: A study has found that many people hired to train AI models are outsourcing that work to AI models themselves, such as OpenAI's ChatGPT. Companies often rely on gig workers to complete tasks that are difficult to automate, and the resulting data is used to train AI models. Relying on AI-generated data to train AI models can introduce errors and false information into the models and amplify them over time.

Why it matters: Using AI models to generate data for training AI models can lead to a cycle of errors and misinformation. This poses a challenge in ensuring the accuracy and reliability of AI systems. It also highlights the need for methods to distinguish between human-generated and AI-generated data and raises concerns about the reliance on gig workers for crucial data cleanup tasks.

Between the lines: The study emphasizes the importance of developing mechanisms to verify the data source, distinguishing between human and AI-generated contributions. It also underscores the issue of relying on gig workers for data preparation tasks. The AI community must assess which tasks are most susceptible to automation and find ways to prevent the unintended consequences of using AI-generated data in training.

📖 From our Living Dictionary:

What is hallucination?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

Quand une « fausse » chanson devient virale

It’s easy to be fooled: on the song Heart on My Sleeve, the voices of Drake and The Weeknd trade verses over a hip-hop beat. One could easily believe it is a real collaboration between the two Canadian stars. But the track, which quickly went viral, was created entirely by artificial intelligence (AI). A breakdown of a phenomenon that could change the music industry forever.

To delve deeper, read the full article here.

💡 In case you missed it:

A Prompt Array Keeps the Bias Away: Debiasing Vision-Language Models with Adversarial Learning

Large-scale vision-language models are becoming more pervasive in society. This is concerning given the evidence of societal biases manifesting in these models and the potential for these biases to become permanently ingrained in society’s perceptions, hindering the natural process by which norms are challenged and overturned. In this study, we developed efficient and inexpensive computational methods for debiasing these out-of-the-box models while maintaining performance. This shows promise for empowering individuals to combat these injustices.

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.