AI Ethics Brief #119: Bias and power, virtue-based frameworks, collective intelligence, climate awareness in NLP, and more.

What impact will a fragmented regulatory landscape have on Responsible AI implementation?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for the price of a couple of ☕.

If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

This week’s overview:

🙋 Ask an AI Ethicist:

What impact will a fragmented regulatory landscape have on Responsible AI implementation?

✍️ What we’re thinking:

Collective Intelligence: Foundations + Radical Ideas

The coming AI ‘culture war’

🤔 One question we’re pondering:

What is better for the AI ecosystem going forward: an open- or a closed-source approach to the design, development, and deployment of AI systems?

🔬 Research summaries:

Studying up Machine Learning Data: Why Talk About Bias When We Mean Power?

Mapping the Design Space of Human-AI Interaction in Text Summarization

Towards Climate Awareness in NLP Research

📰 Article summaries:

Adobe Stock creators aren't happy with Firefly, the company's 'commercially safe' gen AI tool | VentureBeat

Phil Spencer, Xbox chief, on AI: ‘I’m protective of the creative process’ - Guardian

How existential risk became the biggest meme in AI | MIT Technology Review

📖 Living Dictionary:

An example of LLMs leading to ethical AI issues

🌐 From elsewhere on the web:

Responsible AI at a Crossroads

💡 ICYMI

A Virtue-Based Framework to Support Putting AI Ethics into Practice

But first, our call-to-action this week:

Generated using DALL-E 2 with the prompt “isometric scene with high resolution in pastel colors with flat icon imagery depicting researchers reading books in a university library”

We are looking for summer book recommendations published in the last 6-12 months that touch on Responsible AI, especially those that highlight unique aspects of the Responsible AI discussion, such as the environmental impacts of AI, which are less discussed than issues such as bias and privacy.

🙋 Ask an AI Ethicist:

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours and we’ll answer it in the upcoming editions.

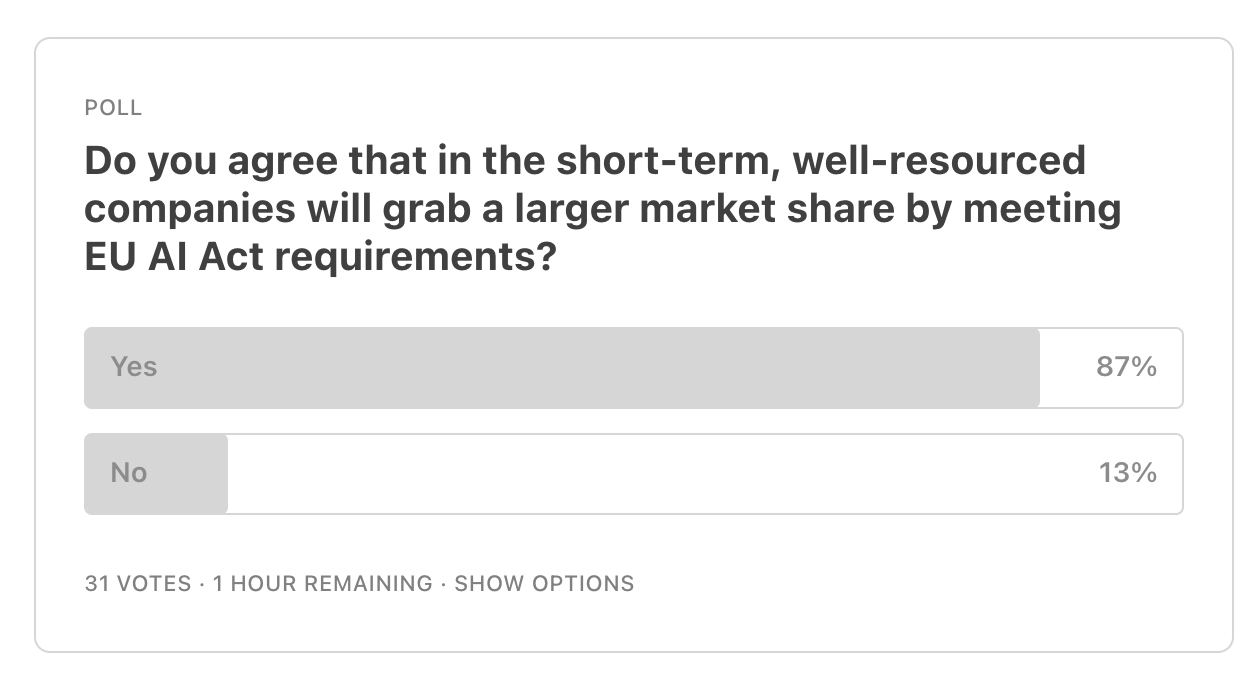

Here are last week’s results for this segment:

Moving along to a question that one of our readers posed to us over email: What impact will a fragmented regulatory landscape have on Responsible AI implementation?

As government agencies and lawmaking bodies move quickly to develop their own regulatory and legal approaches to AI, some under pressure from the recent vote on the EU AI Act, we are seeing some harmonization of regulatory requirements around the world.

Yet there is no guarantee of convergence on all of those requirements unless, for example, there is a very strong Brussels effect. For organizations that build or buy AI systems, this leaves three options:

1. They will converge on a single product/service and align it with the regulatory requirements that give them the best chance of being compliant in as many markets as possible.

2. They will create separate product/service offerings for each jurisdiction where there is sufficient non-overlap in the regulatory requirements, bearing the cost burden to differentiate those offerings.

3. They will exit some markets where the burden to comply is too high and the ARPU (average revenue per user) is not high enough to justify customizing the product/service offering for that jurisdiction.

The astute reader will notice some similarities with the impact that the enforcement of the GDPR, starting in May 2018, had on the world (and on AI). #1 is most likely to be followed by resource-constrained organizations. #2 is most likely to be adopted by resource-heavy organizations, since they have enough depth in their legal and technical teams to customize offerings. #3 could be adopted by low-resourced organizations, and also by powerful, resource-heavy organizations that want to shift regulatory approaches toward something that reduces their compliance burden, essentially using their considerable market power and unique product offerings to persuade regulators that their citizens will miss out on utility if the rules don’t change.

How likely do you think it is that #3 comes to pass, with resource-heavy and strongly positioned suppliers adopting this tack? Share your thoughts with the MAIEI community:

✍️ What we’re thinking:

Collective Intelligence: Foundations + Radical Ideas

A close collaborator of the Montreal AI Ethics Institute, Emily Dardaman from the BCG Henderson Institute, documented her invited trip to the Santa Fe Institute for its annual Collective Intelligence conference. She shared the joint research work that she’s been doing with our founder, Abhishek Gupta, on Augmented Collective Intelligence.

To delve deeper, read the full article series here.

The coming AI ‘culture war’

Cultural battle lines are being drawn in the AI industry. Recently, California Democratic Representative Anna Eshoo sent a letter to the White House National Security Advisor and the Office of Science and Technology Policy that criticized a highly popular AI model developed by Stability.AI, one of a handful of new labs focused on the development of text-to-image systems. Unlike other popular text-to-image systems, however, Stability.AI’s model is both freely available and provides minimal controls over the types of outputs a user chooses to generate, including violent and sexual imagery.

To delve deeper, read the full article here.

🤔 One question we’re pondering:

There has been a lot of speculation around “What is better for the AI ecosystem going forward: an open- or a closed-source approach to the design, development, and deployment of AI systems?” An open-source approach was strongly advocated by Clement Delangue, CEO of Hugging Face, in the recent US House hearing on AI. Others advocate viewing this as a gradient. This is driven by a weighing of the risks and benefits, with open-source inviting many more eyes to find potential failure modes, while posing risks of greater misuse if bad actors apply it to malicious use cases. There are many more such risks and benefits. Do you have more thoughts on how we should approach AI design, development, and deployment?

We’d love to hear from you and share your thoughts back with everyone in the next edition:

🔬 Research summaries:

Studying up Machine Learning Data: Why Talk About Bias When We Mean Power?

This paper borrows ‘Studying Up’ from Anthropology to make a case for power-aware research of Machine Learning datasets and data annotation practices. The authors point out the limitations of a narrow focus on dataset bias and highlight the need to study the historical inequalities, corporate forces, and epistemological standpoints that create existing data design and production practices along with the embedded bias.

To delve deeper, read the full summary here.

Mapping the Design Space of Human-AI Interaction in Text Summarization

Automatic text summarization systems commonly involve humans preparing data or evaluating model performance. However, there is a lack of systematic understanding of humans’ roles, experiences, and needs when interacting with or being assisted by AI. From a human-centered perspective, this paper maps the design opportunities and considerations for human-AI interaction in text summarization.

To delve deeper, read the full summary here.

Towards Climate Awareness in NLP Research

The environmental impact of AI, particularly Natural Language Processing (NLP), has become significant and is worryingly increasing due to the enormous energy consumption of model training and deployment. This paper draws on corporate climate reporting standards and proposes a model card for NLP models, aiming to increase reporting relevance, completeness, consistency, transparency, and accuracy.

To delve deeper, read the full summary here.

📰 Article summaries:

Adobe Stock creators aren't happy with Firefly, the company's 'commercially safe' gen AI tool | VentureBeat

What happened: Adobe's stock rose after a strong earnings report, highlighting the success of its generative AI image generation platform, Adobe Firefly. However, a group of contributors to Adobe Stock Images, whose content was used to train Firefly, claim that their images were used without their knowledge or consent. This is particularly problematic because Adobe has long been involved in the creative economy.

Why it matters: The creators of the stock images feel that Adobe's use of their intellectual property to create competitive content is unethical and unfair. They argue that even if Adobe's actions were legally permissible, they should have been informed and allowed to opt-out. Adobe defends its intentions, stating that it aims to enable creators to monetize their talents, but the controversy remains.

Between the lines: Legal experts suggest that Adobe Stock artists may not have strong legal grounds due to the broad language in Adobe's Terms of Service. The generated images may not be considered derivative works and thus may not infringe on the artists' copyrights. However, there are debates surrounding the coverage of prior contracts in the face of unanticipated technological advancements.

Phil Spencer, Xbox chief, on AI: ‘I’m protective of the creative process’ - Guardian

What happened: During the Xbox games showcase, Xbox chief Phil Spencer downplayed concerns about AI streamlining game production and reducing team sizes. He emphasized that AI's primary role in gaming is in policy enforcement and network safety, where the vast amount of traffic necessitates technological assistance for monitoring and ensuring appropriate conversations.

Why it matters: Spencer emphasizes preserving the creative process and using technology to enhance game creativity rather than building games solely to showcase technology. While AI has been present in video games for years, Spencer believes its use in large language models and NPC dialogue generation has yet to find the right balance between innovation and fun, prioritizing enabling teams to explore creative expansion before focusing on efficiency.

Between the lines: The use of AI for community moderation in gaming is rising, with companies like Ubisoft and Riot implementing AI-based projects to combat toxicity and abuse. Simultaneously, concepts like dialogue generation for non-playable characters and AI-generated concept sketches are emerging. As game development costs increase and players resist higher prices, the industry faces the challenge of finding cost-effective solutions and striking a balance between innovation and efficiency.

How existential risk became the biggest meme in AI | MIT Technology Review

What happened: Many high-profile individuals and organizations are expressing concerns about the dangers of artificial intelligence (AI), with some comparing the risk of AI to that of pandemics and nuclear war. The Center for AI Safety released a concise statement emphasizing the need to prioritize mitigating the risk of AI-induced extinction globally.

Why it matters: This time, the alarm bells regarding AI dangers are being heard more widely, with even world leaders paying attention. The shift in public discourse indicates a change in the perceived risks associated with AI. While opinions on the matter are divided within the field, the central concern revolves around controlling increasingly intelligent machines.

Between the lines: The fears surrounding AI's potential risks are based on speculative scenarios, as these events have not yet occurred and cannot be empirically verified. However, the idea of existential risk captures people's imagination, making it a compelling narrative. Additionally, the focus on long-term risks deflects attention from immediate concerns and influences regulatory discussions on the extent of constraints to be imposed on AI activities.

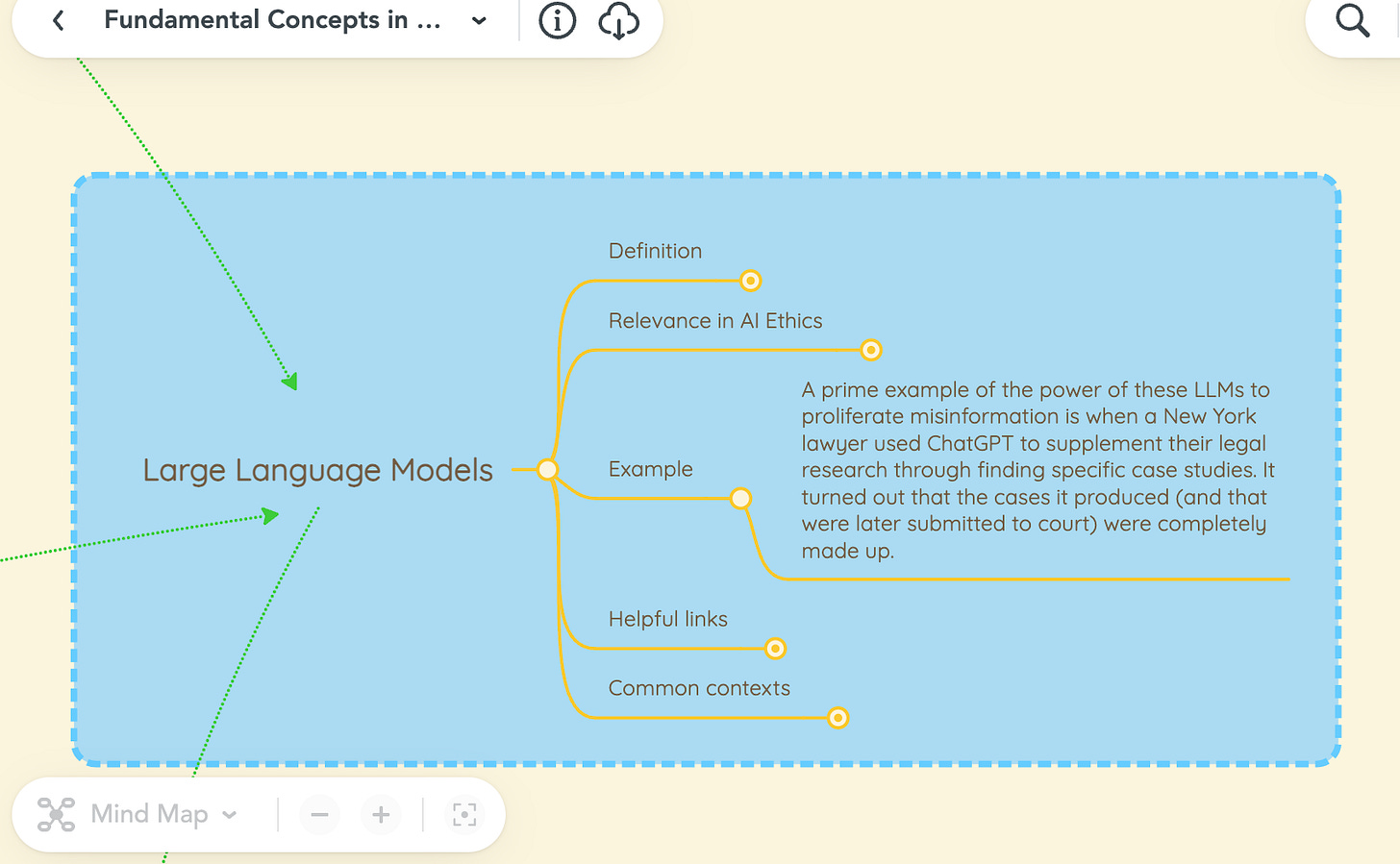

📖 From our Living Dictionary:

An example of LLMs leading to ethical AI issues

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

Responsible AI at a Crossroads

Recent advances in generative AI have created an intense challenge for companies and their leaders. They are under commercial pressure to realize value from AI quickly and public pressure to effectively manage the ethical, privacy, and safety risks of rapidly changing technologies. Some companies have reacted by blocking some generative AI tools from corporate systems. Others have allowed it to develop organically under loose governance and oversight. Neither extreme is the right choice.

The AI revolution is still in its early days. Companies have time to make responsible AI a powerful and integral capability. But management consistently underestimates the investment and effort required to operationalize responsible AI. Far too often, responsible AI lacks clear ownership or senior sponsorship. It isn’t integrated into core governance and risk management processes or embedded in the frontlines. In this gold rush moment, the principles of responsible AI risk becoming merely a talking point.

For the third consecutive year, BCG conducted a global survey of executives to understand how companies manage this tension. The most recent survey, conducted early this year after the rapid rise in popularity of ChatGPT, shows that on average, responsible AI maturity improved marginally from 2022 to 2023. Encouragingly, the share of companies that are responsible AI leaders nearly doubled, from 16% to 29%.

These improvements are insufficient when AI technology is advancing at breakneck pace. The private and public sectors must operationalize responsible AI as fast as they build and deploy AI solutions. As to how companies should respond, four themes emerged from the survey and from two dozen interviews with senior leaders. (This article summarizes a fuller treatment of the findings in MIT Sloan Management Review.)

To delve deeper, read the full article here.

💡 In case you missed it:

A Virtue-Based Framework to Support Putting AI Ethics into Practice

A virtue-based approach specific to the AI field is a missing component in putting AI ethics into practice, as virtue ethics has often been disregarded in AI ethics research thus far. To close this research gap, a new paper describes how a specific set of “AI virtues” can act as a further step forward in the practical turn of AI ethics.

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.