AI Ethics Brief #138: Brushstrokes and bytes, human intervention's impact on GenAI outputs, AI use in credit reporting, and more.

Which jurisdictions should we watch for innovative work in developing meaningful AI regulations?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week, we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for the price of a couple of ☕.

If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

This week’s overview:

🙋 Ask an AI Ethicist:

How should we measure the impact of human intervention in AI-generated outputs?

✍️ What we’re thinking:

Blending Brushstrokes with Bytes: My Artistic Odyssey from Analog to AI

🤔 One question we’re pondering:

Which jurisdictions should we watch for innovative work in developing meaningful AI regulations?

🪛 AI Ethics Praxis: From Rhetoric to Reality

What are some downstream implications of organizational restructuring with the advent and adoption of GenAI?

🔬 Research summaries:

Worldwide AI Ethics: a review of 200 guidelines and recommendations for AI governance

On the Generation of Unsafe Images and Hateful Memes From Text-To-Image Models

Sex Trouble: Sex/Gender Slippage, Sex Confusion, and Sex Obsession in Machine Learning Using Electronic Health Records

📰 Article summaries:

Underage Workers Are Training AI | WIRED

Researchers Refute a Widespread Belief About Online Algorithms | Quanta Magazine

The Age of Principled AI

📖 Living Dictionary:

What are AI-generated text detection tools?

🌐 From elsewhere on the web:

Why ethical AI requires a future-ready and inclusive education system

💡 ICYMI

Responsible Use of Technology in Credit Reporting: White Paper

🚨 AI Ethics Praxis: From Rhetoric to Reality

Aligning with our mission to democratize AI ethics literacy, we bring you a new segment in our newsletter that will focus on going beyond blame to solutions!

We see a lot of news articles, reporting, and research that stop just shy of providing concrete solutions and approaches (sociotechnical or otherwise) that are (1) reasonable, (2) actionable, and (3) practical. Such solutions are much needed so that we can start solving the problems the AI domain faces, rather than just continuing to point them out and pontificate about them.

In this week’s edition, you’ll see us work through a solution our team proposes to a recent problem. We then invite you, our readers, to share your own proposed solution in the comments (or via email, if you prefer), meeting the same criteria: (1) reasonable, (2) actionable, and (3) practical.

🙋 Ask an AI Ethicist:

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours, and we’ll answer it in the upcoming editions.

Here are the results from the previous edition for this segment:

The results show a narrow(-ish) preference for open-source foundation models (FMs) over closed-source ones, which makes sense given the promise these FMs have shown (and delivered on, via Llama and others, in terms of comparable performance). It’s great to see the ecosystem spawning innovation across geographies and domains with variations on some fundamental approaches. Most interesting is that we have healthy competition (for now!), which will hopefully carry forward into 2024 and bring innovation not just in the performance of these FMs but also in the ethics and safety aspects of their design, development, and deployment.

This week, one of our readers, Rana B., asked us: “How should we measure the impact of human intervention in AI-generated outputs?” This is a very interesting question, especially in the age of copilots, where Generative AI systems and humans go back and forth to create text, images, video, and audio. Since this is such a broad area of exploration, let’s focus on how human intervention affects the quality of the AI system’s outputs:

1. Performance Metrics: These can quantify the quality of the AI's output before and after human intervention. For instance, in a customer support context, one study found that the productivity of agents using an AI tool increased by nearly 14%, with the greatest benefits seen among less experienced and lower-skilled workers.

2. Quality Evaluation Methods: Both automatic and manual methods can be used to evaluate the quality of generative AI content. Automatic methods use algorithms or models to compute metrics and generate scores, while manual methods use human evaluators or experts to judge the content and provide feedback or ratings.

3. Human-in-the-Loop Systems: In these systems, humans play a crucial role in training AI models from scratch or intervening in the system to improve the output. The impact of human intervention can be measured by comparing the output before and after the intervention.

4. Cost of Intervention: In some cases, the cost of human intervention can be quantified. For example, if a human overrides a recommendation made by an algorithm, the system can calculate the cost of that override in terms of revenue.

5. Customer Satisfaction: Customer satisfaction metrics can be used to measure the quality of AI-generated content. For instance, in a customer support context, measures of customer satisfaction showed no significant change after the introduction of an AI tool, suggesting that productivity improvements did not come at the expense of interaction quality.

6. Content Quality: AI-generated content can sometimes be of higher quality than human-created content, because AI models can process large amounts of data and identify patterns that humans might miss.
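As a rough illustration of points 1, 3, and 4, here is a minimal Python sketch that compares quality scores for the same outputs before and after a human edits them, and relates the total quality gain to the time spent intervening. The `Item` fields, the scoring scale, and the `cost_per_minute` parameter are hypothetical assumptions chosen for illustration, not an established methodology; in practice, the scores would come from whichever automatic or manual evaluation method (point 2) you use.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Item:
    """One AI output evaluated before and after human intervention (hypothetical schema)."""
    score_before: float  # quality score of the raw AI output, e.g., a 1-5 rubric rating
    score_after: float   # quality score after the human's edits, on the same scale
    edit_minutes: float  # time the human spent intervening on this item


def intervention_impact(items: list[Item], cost_per_minute: float) -> dict[str, float]:
    """Summarize the paired before/after change in quality and the cost of achieving it."""
    if not items:
        raise ValueError("need at least one evaluated item")
    deltas = [it.score_after - it.score_before for it in items]
    total_gain = sum(deltas)
    total_cost = sum(it.edit_minutes for it in items) * cost_per_minute
    return {
        # Average per-item change in quality attributable to the intervention
        "mean_uplift": mean(deltas),
        # Fraction of items the human actually improved (vs. left unchanged or made worse)
        "share_improved": sum(d > 0 for d in deltas) / len(deltas),
        # Cost per point of quality gained; infinite if the intervention gained nothing overall
        "cost_per_point": total_cost / total_gain if total_gain > 0 else float("inf"),
    }


if __name__ == "__main__":
    # Made-up scores for three outputs, purely to show the calculation
    sample = [Item(3.0, 4.0, 5.0), Item(2.0, 4.0, 10.0), Item(4.0, 4.0, 2.0)]
    print(intervention_impact(sample, cost_per_minute=1.0))
```

Pairing scores on the same outputs, rather than comparing averages across different outputs, keeps differences in task difficulty from masquerading as the effect of the intervention. A real evaluation would also want a confidence interval or significance test before drawing conclusions.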

What are the most effective uses of human intervention in the outputs of GenAI systems that you’ve seen? Share your thoughts with the MAIEI community:

✍️ What we’re thinking:

Blending Brushstrokes with Bytes: My Artistic Odyssey from Analog to AI

In this intricate tapestry of artistry and technology, I chart my transformative journey from the tactile world of analog art to the boundless realms of digital creation and AI integration. Embracing the unpredictable allure of wet-on-wet ink techniques, I harmonize them with the precision of digital tools, crafting a visual language that parallels my evolving understanding of AI’s role in art. This narrative isn’t just about adapting to new tools; it’s a deeper exploration of how AI, while streamlining certain aspects of creativity, also challenges the traditional notions of artistic growth, learning, and the very essence of time in the creative process.

To delve deeper, read the full article here.

🤔 One question we’re pondering:

In 2023, there was much more emphasis on Responsible AI, especially on developing regulations and bringing them to fruition. The EU AI Act, for example, was a monumental, multi-year effort that brought together stakeholders from many nations and finally reached political agreement despite differing views, industry interventions, and divergent agendas. Will 2024 carry this momentum forward? And which jurisdictions should we watch for innovative work in developing meaningful regulations?

We’d love to hear from you and share your thoughts with everyone in the next edition:

🪛 AI Ethics Praxis: From Rhetoric to Reality

Generative AI (GenAI) dominated headlines this year, and amid all those discussions, questions of ethics and responsible innovation became far more mainstream than they were in 2022. One potential impact is on how organizations think about structuring themselves, and in particular how such restructuring might have downstream implications for our work in Responsible AI (RAI):

Here are some key changes that we see taking place:

🕸️ Decentralized Decision Making: GenAI can process vast amounts of information, speeding up and improving decision-making processes. This could lead to more decentralized decision-making, with frontline workers using GenAI tools to make decisions that once required multiple levels of approval. For work in RAI, this means that we will have a greater opportunity to incorporate insights from those who are closest to the problems encountered in the field, e.g., the rank-and-file employees.

🧑‍🤝‍🧑 Flatter Organizational Structures: With decision-making and approval processes pushed to the front lines, there may be less reliance on middle management, leading to flatter organizational structures with fewer layers of hierarchy. Again, this could increase empowerment across the organization and allow less-heard voices to have a bigger impact on how the organization tackles ethical issues.

🏋️ Cross-Functional Teams: The integration of GenAI is expected to lead to more cross-functional teams, where individuals from different departments come together to manage and deploy AI applications. This is particularly useful when participants from design, legal, marketing, business, engineering, and product all work together to surface and address the ethical challenges and tradeoffs that will inevitably arise in the design, development, and deployment of these GenAI solutions.

🔔 New Roles and Positions: The introduction of GenAI is likely to create new roles and positions within organizations. We are already seeing roles like prompt engineer emerge; done well, this work can substantially affect how much value is extracted from an AI system. Prompt engineers are also useful in surfacing bias and other issues in these systems, e.g., through red-teaming activities. We will only see the importance of such roles increase over time.

💬 Collaboration Enhancement: AI can improve collaboration by providing tools and platforms that enable seamless coordination. It can also foster a more agile, flexible, and informed team environment. In particular, other teams within the organization have sometimes already encountered similar challenges, and finding and building on their solutions can be difficult. Using GenAI as a knowledge search engine can greatly aid that process, allowing teams to spend more time on the specifics of their particular case while standing on the shoulders of giants (their peers!).

📈 Productivity Improvement: AI can augment the employee experience and assist employees in ways that many workers may not even expect, potentially leading to increased productivity. A side effect of this increased productivity may be more time within product development lifecycles to dedicate to ethics issues, which would otherwise often take a back seat to delivery and business pressures.

However, it's important to note that the integration of AI into teams can also present challenges. For instance, a study found that individual human workers became less productive after AI became part of their team, and overall team performance suffered when an automated worker was added. Therefore, careful planning and management will be crucial to successfully integrate GenAI into the workplace and reap its potential benefits.

You can either click the “Leave a comment” button below or send us an email! We’ll feature the best response next week in this section.

🔬 Research summaries:

Worldwide AI Ethics: a review of 200 guidelines and recommendations for AI governance

To determine whether a global consensus exists regarding the ethical principles that should govern AI applications and to contribute to the formation of future regulations, this paper conducts a meta-analysis of 200 governance policies and ethical guidelines for AI usage published by different stakeholders worldwide.

To delve deeper, read the full summary here.

On the Generation of Unsafe Images and Hateful Memes From Text-To-Image Models

Text-to-Image models are revolutionizing the way people generate images. However, they also pose significant risks of generating unsafe images. This paper systematically evaluates the potential of these models to generate unsafe images and, in particular, hateful memes.

To delve deeper, read the full summary here.

Sex Trouble: Sex/Gender Slippage, Sex Confusion, and Sex Obsession in Machine Learning Using Electronic Health Records

Considering sex and gender in medical machine learning research may help improve health outcomes, especially for underrepresented groups such as transgender people. However, medical machine learning research tends to make incorrect assumptions about sex and gender that limit model performance. In this paper, we provide an overview of the use of sex and gender in machine learning models, discuss the limitations of current research through a case study on predicting HIV risk, and provide recommendations for how future research can do better by incorporating richer representations of sex and gender.

To delve deeper, read the full summary here.

📰 Article summaries:

Underage Workers Are Training AI | WIRED

What happened: The story revolves around underage workers who, during the pandemic, found employment in the global artificial intelligence supply chain. These young workers, mainly from East Africa, Venezuela, Pakistan, India, and the Philippines, engage in tasks such as data labeling for AI algorithms through platforms like Toloka. The work, often done by minors using fake details to bypass age checks, involves tasks ranging from simple image identification to complex content moderation.

Why it matters: The exploitation of underage workers in the AI supply chain raises ethical concerns. Paid per task and poorly, these workers often perform a kind of digital servitude for a minimal livelihood. Lenient platforms make bypassing age verification a common practice, and the physical distance between workers and tech giants creates an invisible workforce governed by different rules. The low pay and the one-way flow of benefits from the Global South to the Global North evoke parallels with colonialism.

Between the lines: While some underage workers find the platforms beneficial, the low pay compared to in-house tech employees and the one-way flow of benefits raise uncomfortable questions about the nature of this work. The story highlights the potential exploitation and challenges faced by underage workers in the digital gig economy. The uneven exchange rates, limited earning potential, and increased demand for tasks create a complex dynamic, emphasizing the need for ethical considerations in AI-driven gig work.

Researchers Refute a Widespread Belief About Online Algorithms | Quanta Magazine

What happened: The article explores online algorithms, focusing on the challenging "k-server problem." This problem involves efficiently dispatching agents to fulfill requests as they arrive, with applications ranging from technicians and firefighters to ice cream salespeople. After decades of research, a paper published in November challenged the long-held belief that a sufficiently good randomized algorithm always exists for the k-server problem. The authors demonstrated that, in some cases, every algorithm falls short, refuting the randomized k-server conjecture.

Why it matters: Researchers typically view these problems as games against an adversary who selects requests designed to make online algorithms perform poorly. The randomized k-server conjecture, which posits that a randomized algorithm can always achieve a specific performance goal, had been widely accepted. However, this recent paper, which won a Best Paper Award, disproves the conjecture by constructing complex spaces and request sequences that prevent any algorithm from reaching the expected performance. While the result is theoretical, it helps define what we can expect from algorithms; in practice, real-world performance often beats theoretical worst-case predictions.

Between the lines: The paper's theoretical findings, recognized with a Best Paper Award, represent a significant milestone in the field and offer insight into what to expect from algorithm performance, even though algorithms in real-world scenarios often outperform theoretical worst-case predictions. The methodology, which also established limits for other randomized algorithms, highlights the technique's power. The unexpected outcome suggests that future findings in the field may continue to defy researchers' expectations, offering new insights and challenges.
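To make the "game against an adversary" framing concrete, here is a minimal Python sketch of the k-server problem on a line. It pits a simple online rule (always send the nearest server, a textbook heuristic and not the algorithm from the paper) against the offline optimum, which is computed by brute force because the offline solver sees the whole request sequence in advance. The function names and the example instance are illustrative assumptions of ours; note also that the sketch is deterministic, whereas the refuted conjecture concerns randomized algorithms.

```python
def greedy_cost(servers: list[float], requests: list[float]) -> float:
    """Online heuristic: serve each request with whichever server is currently nearest."""
    positions = list(servers)
    total = 0.0
    for r in requests:
        i = min(range(len(positions)), key=lambda j: abs(positions[j] - r))
        total += abs(positions[i] - r)
        positions[i] = r  # the chosen server moves to the request
    return total


def offline_optimal_cost(servers: list[float], requests: list[float]) -> float:
    """Offline optimum by dynamic programming over server configurations.

    Knowing every request in advance, try every choice of which server answers each
    request. Moving only the server that serves a request loses nothing (a standard
    k-server result), so this search finds the true optimum. It grows quickly with the
    number of servers and distinct points, so it is only meant for tiny instances.
    """
    best = {tuple(sorted(servers)): 0.0}  # configuration -> cheapest cost to reach it
    for r in requests:
        nxt: dict[tuple[float, ...], float] = {}
        for config, cost in best.items():
            for i, p in enumerate(config):
                moved = list(config)
                moved[i] = r
                key = tuple(sorted(moved))  # servers are interchangeable, so sort
                new_cost = cost + abs(p - r)
                if new_cost < nxt.get(key, float("inf")):
                    nxt[key] = new_cost
        best = nxt
    return min(best.values())


if __name__ == "__main__":
    # Two servers at 0 and 10; an adversary alternates requests between 4 and 6.
    start = [0.0, 10.0]
    reqs = [4.0, 6.0] * 5
    online = greedy_cost(start, reqs)
    offline = offline_optimal_cost(start, reqs)
    print(f"greedy: {online}, optimal: {offline}, ratio: {online / offline:.2f}")
```

On this instance, greedy keeps shuttling one server between 4 and 6, paying 2 per request (22 in total), while the optimum moves both servers once (cost 8) and then pays nothing. Lengthening the alternating sequence makes greedy's cost grow without bound relative to the optimum, which is exactly the kind of worst-case gap that competitive analysis of online algorithms measures.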

The Age of Principled AI

What happened: The recent narrative surrounding AI spans from recognizing its transformative potential across various sectors to concerns about existential risks. Amid calls to halt AI development, industries acknowledge its positive impacts, envisioning breakthroughs in physics, national defense, medicine, and beyond. DeepMind's AlphaFold exemplifies AI's power, solving the long-standing "protein-folding" problem and revolutionizing disease detection and drug development. In navigating the future of AI, there's a shift toward prioritizing Responsible AI, emphasizing its net positive contributions over debates about the morality of AI development.

Why it matters: Responsible AI involves three key domains: safety, governance, and ethics. It ensures control over AI systems, aligns with policies and regulations, and assesses the ethical implications of deployment. In the evolving landscape, organizations that fail to deploy AI effectively risk constraints, limited impact, and outdated decision-making. Responsible AI is not a hindrance but a discipline that guides organizations in navigating risks before unleashing AI systems, fostering innovation with confidence. It involves weighing risks against possibilities, providing a framework for strategic risk evaluation.

Between the lines: The ultimate goal is to deploy AI systems with confidence in their safety, transparency, and ethical adherence. Responsible AI becomes a language and framework for discussing and measuring risk within the broader AI portfolio. Standardizing perspectives on AI enables organizations to assess its use across diverse objectives, mitigating pressure and risk. Ideally, Responsible AI principles should become ingrained in AI practices, transcending compliance and evolving into a standard for all AI work. As AI capabilities and scale continue to grow, embracing a calculated approach to risks and rewards positions organizations to harness AI's transformative potential for enduring positive change.

📖 From our Living Dictionary:

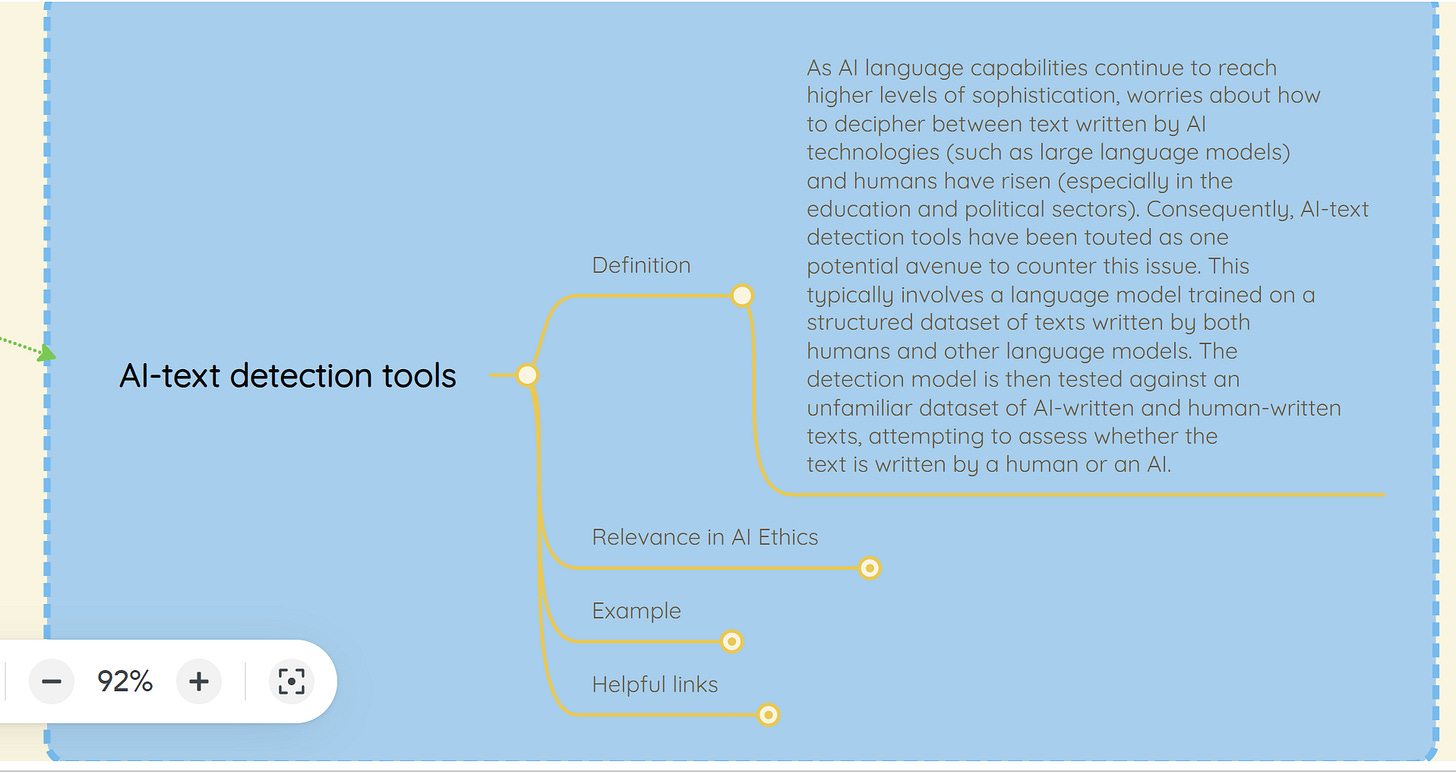

What are AI-generated text detection tools?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

Why ethical AI requires a future-ready and inclusive education system

Research shows that marginalized groups are particularly susceptible to job loss or displacement due to automation.

We must address the fundamental issue of AI education and upskilling for these underrepresented groups.

Public and private sector stakeholders can collaborate to ensure an equitable future for all.

To delve deeper, read the full article here.

💡 In case you missed it:

Responsible Use of Technology in Credit Reporting: White Paper

Technology is at the core of credit reporting systems, which have evolved significantly over the past decade by adopting new technologies and business models. As disruptive technologies have been increasingly adopted around the globe, concerns have arisen over possible misuse or unethical use of these new technologies. These concerns inspired international institutions and national authorities to issue high-level principles and guidance documents on responsible technology use. While adopting new technologies benefits the credit reporting industry, unintended negative outcomes of these technologies from ethics and human rights perspectives must also be considered. Therefore, the white paper focuses on the responsible use of technology in credit reporting and, in particular, the ethical concerns with respect to the use of AI.

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.