AI Ethics Brief #122: ChatGPT opportunists, defining open in software and AI, global governance, ethical values prioritization, and more.

How do we ensure meaningful participation from actors around the world in developing a global approach to AI governance?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for the price of a couple of ☕.

If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

This week’s overview:

🙋 Ask an AI Ethicist:

How should we square the ongoing debate on whether large language models (LLMs) should be open- or closed-source?

✍️ What we’re thinking:

Defining Open: FOSSY Day 2

A Case Study: Increasing AI Ethics Maturity in a Startup

🤔 One question we’re pondering:

How do we ensure meaningful participation from actors around the world in developing a global approach to AI governance?

🔬 Research summaries:

Using attention methods to predict judicial outcomes

How Different Groups Prioritize Ethical Values for Responsible AI

Achieving Fairness at No Utility Cost via Data Reweighing with Influence

📰 Article summaries:

China’s ChatGPT Opportunists—and Grifters—Are Hard at Work | WIRED

Russia Seeds New Surveillance Tech to Squash Ukraine War Dissent - The New York Times

Gödel, Escher, Bach, and AI - The Atlantic

📖 Living Dictionary:

What is an example of hallucination?

🌐 From elsewhere on the web:

What should enterprises make of the recent warnings about AI's threat to humanity? AI experts and ethicists offer opinions and practical advice for managing AI risk.

💡 ICYMI

Responsible and Regulatory Conform Machine Learning for Medicine: A Survey of Challenges and Solutions

🤝 You can now refer your friends to The AI Ethics Brief!

Thank you for reading The AI Ethics Brief — your support allows us to keep doing this work. If you enjoy The AI Ethics Brief, it would mean the world to us if you invited friends to subscribe and read with us. If you refer friends, you will receive benefits that give you special access to The AI Ethics Brief.

How to participate

1. Share The AI Ethics Brief. When you use the referral link below, or the “Share” button on any post, you'll get credit for any new subscribers. Simply send the link in a text, email, or share it on social media with friends.

2. Earn benefits. When more friends use your referral link to subscribe (free or paid), you’ll receive special benefits.

Get a 3-month comp for 25 referrals

Get a 6-month comp for 75 referrals

Get a 12-month comp for 150 referrals

🤗 Thank you for helping get the word out about The AI Ethics Brief!

🙋 Ask an AI Ethicist:

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours and we’ll answer it in the upcoming editions.

Here are the results from the previous edition for this segment:

Meta’s latest release, Llama 2 (yes, they’ve changed the capitalization from LLaMA, used for the first version), adopts an open-source approach that makes the model free to use, even for commercial purposes. A reader wrote to us asking how we should square the ongoing debate on whether large language models (LLMs) should be open- or closed-source.

NOTE: If you plan to use the model in products or services with more than 700 million monthly active users (MAUs), you need to obtain a license from Meta before proceeding.

On the side of benefits, open-sourcing can:

promote transparency and accountability in the decision-making process across the AI lifecycle,

allow for more eyes on the solution, which can surface ethical issues that might not be caught by the development team,

elevate the capabilities in the ecosystem through greater collaboration and knowledge-sharing, and

from a business perspective, allow organizations to tailor models to their specific needs, hopefully leading to fewer ethical issues.

On the side of concerns, there are:

risks of increased malicious attacks and exploitation of weaknesses in the AI systems, and

issues around intellectual property rights (IPRs), especially when the model has been trained on data where provenance and licensing are unclear.

Given these costs and benefits, voices on both sides of the debate are working towards striking the right balance in how models should be released to mitigate risks while still allowing the community to benefit from research investments and developments.

What do you believe are viable alternatives to open-sourcing of LLMs to achieve Responsible AI goals? Share your thoughts with the MAIEI community:

✍️ What we’re thinking:

Defining Open: FOSSY Day 2

The internet’s most powerful engine of collective intelligence is at an uncertain point. The free and open-source software (FOSS) community emerged and refined its principles over twenty years during the early internet, co-evolving with regulation and technology. Decentralized movements need time to grow and adapt. But to adapt to advanced AI, FOSS needs 20 years’ worth of evolution in just a few months.

What does openness mean in the context of advanced AI, where the backbone of good models is a giant pile of semi-copyrighted data? Is openness a means to an end, like human flourishing, or a goal in and of itself? When AIs can generate designs for 40,000 chemical warfare agents in six hours, is it time for the open-source community to revisit its prohibition on “discriminating against fields of endeavor”? We don’t know, and we had better find out quickly!

To delve deeper, read the full article series here.

A Case Study: Increasing AI Ethics Maturity in a Startup

In the previous article, the author described their framework for implementing AI ethics, which builds on research in responsible innovation, AI ethics, and philosophy. In a nutshell, they recommend that organizations that develop or use AI technologies focus on three pillars of activity around responsible AI:

Knowledge: What should you do to understand how your AI may impact people, society, and the environment?

Workflow: How should you integrate responsible AI components into your workflows and incentives?

Oversight: What should you do to keep yourself accountable?

This entry presents a case study: the author describes how they use their framework to increase AI ethics maturity in a startup, Bria.ai. Bria is a synthetic imaging startup (between seed and Series A) with a vision to reinvent visual communication. Bria has developed a platform to generate, change, and repurpose images and videos. For example, it can recast a new actor in an existing image, create images out of text prompts, and add 3D motion to still photographs. The company’s customers are agencies and creative platforms.

The author serves as Bria’s Responsible AI Advocate, leading all aspects of responsible AI efforts in the company, including R&D, HR, sales, operations, and marketing. In this article, the author describes the company’s first steps in increasing its AI ethics maturity, a journey Bria.ai has been on since May 2022.

To delve deeper, read the full article here.

🤔 One question we’re pondering:

Numerous papers have articulated approaches toward the global governance of AI, including the establishment of new organizations that aim to realize that vision. One of the things that we’ve been thinking about is whether a single universal approach is the best way to move forward, especially given the local cultural and contextual sensitivities that might be abstracted away in a global governance framework. Moreover, how do we ensure meaningful participation from actors around the world in developing a global approach to AI governance?

We’d love to hear from you and share your thoughts back with everyone in the next edition:

🔬 Research summaries:

Using attention methods to predict judicial outcomes

We have developed a model to classify judicial outcomes by analyzing textual features from the legal orders. After that step, we used the weights of one of our networks, a Hierarchical Attention Network, to detect the most important words used to absolve or convict defendants.

To delve deeper, read the full summary here.

How Different Groups Prioritize Ethical Values for Responsible AI

AI ethics guidelines argue that values such as fairness and transparency are key to the responsible development of AI. However, less is known about the values a broader and more representative public cares about in the AI systems they may be affected by. This paper surveys a US-representative sample and AI practitioners about their value priorities for responsible AI.

To delve deeper, read the full summary here.

Achieving Fairness at No Utility Cost via Data Reweighing with Influence

With the fast development of algorithmic governance, fairness has become a compulsory property for machine learning models to suppress unintentional discrimination. In this paper, we focus on the pre-processing aspect of achieving fairness and propose a data reweighing approach that only adjusts the weights of samples in the training phase. Our algorithm computes an individual weight for each training instance via influence functions and linear programming and, in most cases, demonstrates cost-free fairness with vanilla classifiers.
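To make the idea of "reweighing" concrete, here is a minimal sketch. It does not implement the paper's influence-function and linear-programming method. It swaps in the simpler classical reweighing scheme (Kamiran and Calders), which assigns one weight per (group, label) combination rather than per instance. The function names and the toy data are hypothetical, chosen only for illustration.

```python
from collections import Counter


def reweigh(groups, labels):
    """Classical reweighing (not the paper's method): weight each sample by
    P(group) * P(label) / P(group, label), so that group membership and
    label look statistically independent in the weighted data."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels (label == 1) within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(
        w for g, y, w in zip(groups, labels, weights) if g == group and y == 1
    )
    return positive / total


# Hypothetical toy data: group "a" has a higher positive rate than group "b".
groups = ["a", "a", "a", "b", "b", "b", "b", "b"]
labels = [1, 1, 0, 1, 0, 0, 0, 0]

weights = reweigh(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "a")
rate_b = weighted_positive_rate(groups, labels, weights, "b")
```

After reweighing, both groups have the same weighted positive rate, and the weights could then be passed to any learner that accepts per-sample weights. The paper's contribution is a finer-grained, per-instance version of this idea that aims to preserve utility.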

To delve deeper, read the full summary here.

📰 Article summaries:

China’s ChatGPT Opportunists—and Grifters—Are Hard at Work | WIRED

What happened: Chinese entrepreneurs use AI, particularly ChatGPT, to establish content businesses and write self-help books. The online literature market in China is valued at over $4 billion, with numerous titles published annually. While AI-written books are gaining popularity, reviews indicate that the plots often lack coherence and contain unexpected twists.

Why it matters: Within six months, ChatGPT has given rise to a community of influencers who share their expertise on AI, garnering millions of followers and views. Companies are even hiring ChatGPT experts to enhance the chatbot's performance for various purposes. This trend signifies the growing significance of AI in content creation and the emerging demand for individuals skilled in AI technologies.

Between the lines: The allure of wealth creation through AI is strong. However, while many entrepreneurs have embraced ChatGPT, few have achieved financial success. The market still favors traditional expertise, as agents prefer to pay for essays written by top graduates and native English speakers, suggesting that teaching or securing full-time AI-related jobs may be more viable paths.

Russia Seeds New Surveillance Tech to Squash Ukraine War Dissent - The New York Times

What happened: Amid the war in Ukraine, Russian authorities utilized digital surveillance technologies to crack down on domestic dissenters. As the demand for surveillance tools grew, a cottage industry of tech contractors emerged, providing powerful digital monitoring to the police and Russia's Federal Security Service (F.S.B.). These technologies enable tracking phone and website usage, monitoring encrypted apps, locating phones, identifying anonymous social media users, and hacking into accounts.

Why it matters: Adopting these surveillance tools complements Russia's efforts to shape public opinion, suppress dissent, and control the internet. The state's repressive interests have led to the rise of a new cohort of Russian companies, incubated through state-driven demands. The implications of these technologies may extend beyond Russia's borders, impacting the surrounding region and potentially the world, leading to concerns over online oppression and increased competition with China's digital authoritarianism.

Between the lines: Introducing these surveillance tools may alter how individuals hide their online activities, leading to deeper investigations or arrests based on digital exchanges. Following China's example in establishing digital authoritarian regulations, Russian firms could eventually become competitors to traditional surveillance tool providers. The convergence of Russia and China in digital surveillance could pose significant challenges to global digital freedoms.

Gödel, Escher, Bach, and AI - The Atlantic

What happened: Sami Al-Suwailem, a reader of Douglas Hofstadter's book "Gödel, Escher, Bach," wanted to share on his website the story of how the book came to be written. Instead of burdening Hofstadter with the task, he used the language model GPT-4 to generate a one-page essay titled "Why Did I Write GEB?" impersonating Hofstadter's voice as the author. However, Hofstadter found the AI-generated text far from his real writing style and misrepresentative of the book's actual origin.

Why it matters: Hofstadter expresses deep concerns about large language models like GPT-4, finding them repellent and threatening to humanity. He believes they flood the world with fakery, as they lack the ability to come up with original ideas and merely rehash words and phrases from their vast training datasets. The experiment with GPT-4 highlights the risk of trusting AI-generated content that may sound convincing at first glance but often falls apart under careful analysis, raising significant issues related to truth and its impact on society.

Between the lines: The AI-generated text's illusion of capturing Hofstadter's voice and style raises serious doubts about the reliability of computational systems as authorities on real-world matters. Hofstadter emphasizes the importance of human authenticity and sensitivity when dealing with language and truth. He fears that the widespread acceptance of AI as a substitute for human thinking and expression could undermine the very nature of truth and have significant consequences for society.

📖 From our Living Dictionary:

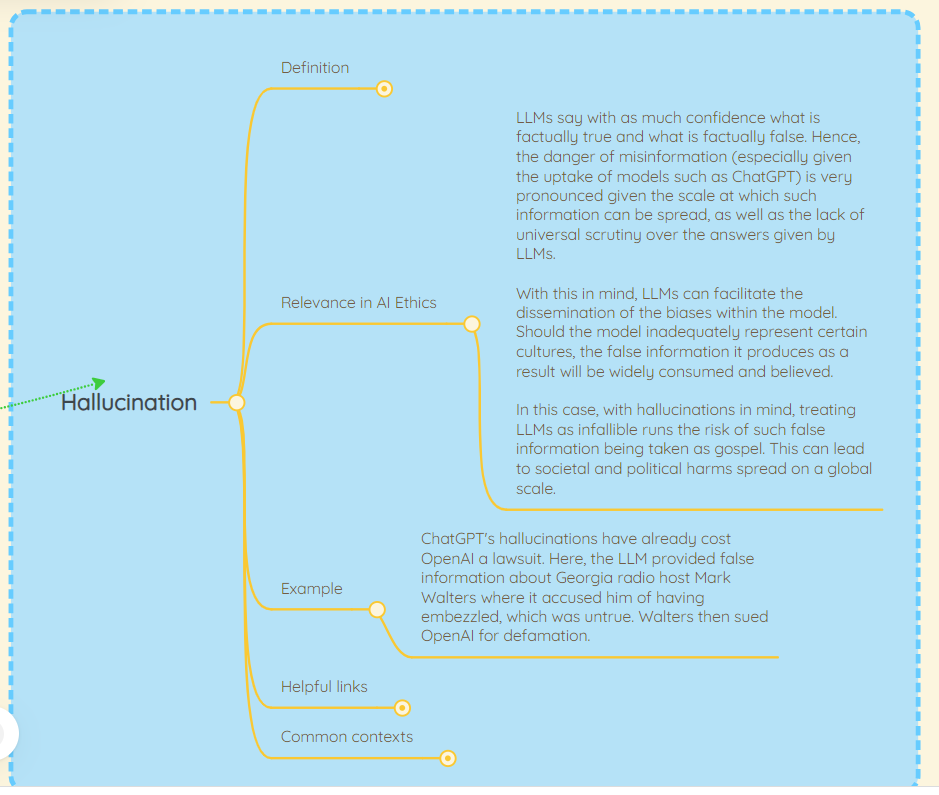

What is an example of hallucination?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

Responsible AI or virtue signaling?

Signed letters calling for an AI pause get a lot of media play, agreed Abhishek Gupta, founder and principal researcher at the Montreal AI Ethics Institute, but they beg the question of what happens next.

"I find it difficult to sign letters that primarily serve as virtue signaling without any tangible action or the necessary clarity to back them up," he said. "In my opinion, such letters are counterproductive as they consume attention cycles without leading to any real change."

Doomsday narratives confuse the discourse and potentially put a lid on the kind of levelheaded conversation required to make sound policy decisions, he said. Additionally, these media-fueled debates consume valuable time and resources that could instead be used to gain a deeper understanding of AI use cases.

"For executives seeking to manage risks associated with AI effectively, they must first and foremost educate themselves on actual risks versus falsely presented existential threats," Gupta said. They also need to collaborate with technical experts who have practical experience in developing production-grade AI systems, as well as with academic professionals who work on the theoretical foundations of AI.

To delve deeper, read the full article here.

💡 In case you missed it:

Responsible and Regulatory Conform Machine Learning for Medicine: A Survey of Challenges and Solutions

To ensure that fundamental principles such as beneficence, respect for human autonomy, prevention of harm, justice, privacy, and transparency are respected, medical machine learning systems must be developed responsibly. This survey provides an overview of the technical and procedural challenges that arise when creating medical machine learning systems responsibly and following existing regulations, as well as possible solutions to address these challenges.

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.
