AI Ethics Brief #129: Fairness in generative models, open-source and EU AI Act, impact of AI art on the creative industry, and more.

Can emerging AI governance be an opportunity for innovation for business leaders?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for the price of a couple of ☕.

If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

This week’s overview:

🙋 Ask an AI Ethicist:

What are some of the best ways to contribute to policymaking efforts today when you don’t have a seat at the table?

✍️ What we’re thinking:

Deciphering Open Source in the EU AI Act

The Impact of AI Art on the Creative Industry

🤔 One question we’re pondering:

What are some names of individuals and organizations that need to be included in the upcoming discussions in US lawmaking bodies and the UK AI Safety Summit?

🔬 Research summaries:

Fair Generative Model Via Transfer Learning

CRUSH: Contextually Regularized and User Anchored Self-Supervised Hate Speech Detection

Deepfakes and Domestic Violence: Perpetrating Intimate Partner Abuse Using Video Technology

📰 Article summaries:

Inside the AI Porn Marketplace Where Everything and Everyone Is for Sale

Large language models aren’t people. Let’s stop testing them as if they were. | MIT Technology Review

Are self-driving cars already safer than human drivers? | Ars Technica

📖 Living Dictionary:

What is the relevance of the smart city to AI ethics?

🌐 From elsewhere on the web:

Emerging AI Governance is an Opportunity for Business Leaders to Accelerate Innovation and Profitability

💡 ICYMI

A Matrix for Selecting Responsible AI Frameworks

🚨 The Responsible AI Bulletin

We’ve restarted our sister publication, The Responsible AI Bulletin, as a fast-digest every Sunday for those who want even more content beyond The AI Ethics Brief. (Our lovely power readers 🏋🏽, thank you for writing in and requesting it!)

The focus of the Bulletin is to give you a quick dose of the latest research papers that caught our attention in addition to the ones covered here.

🙋 Ask an AI Ethicist:

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours and we’ll answer it in the upcoming editions.

Here are the results from the previous edition for this segment:

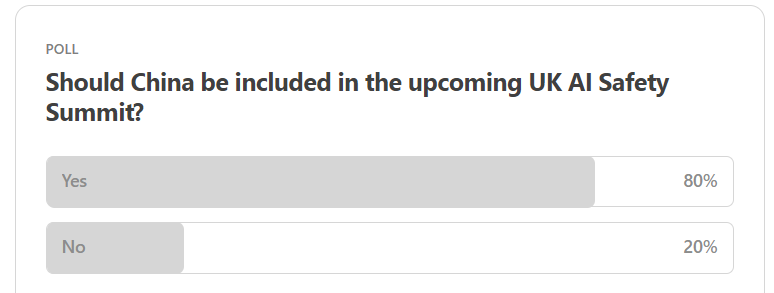

We have a very clear signal from last week’s newsletter on the subject of including China in the upcoming UK AI Safety Summit.

On to this week’s question from a reader: “What are some of the best ways to contribute to policymaking efforts today when you don’t have a seat at the table?” This question is close to our hearts, and we’re glad our reader shared it with us as this week’s provocation. In many recent discussions, we’ve seen the most prolific and well-known individuals in the field invited to share their views on how AI governance and regulation should be shaped going forward. So, how can enthusiastic community members contribute to these discussions? One way is to respond to open calls for participation, e.g., those from the US Copyright Office and the FTC, among other agencies and governments.

Another effective way is to write op-eds and columns and share your thoughts with the community so that they can inform the latest thinking on the subject and be taken into account when decisions are made. Finally, you can engage with other community members and produce academic literature that shapes long-term thinking in the field.

While there may not always be opportunities to be directly involved or to have a seat at the table, there are still other ways to participate actively. In 2018, when Canada held the G7 presidency, we held multiple sessions with 400+ community members so that we could carry their voices into the room when we were invited to help shape the discussion on the future of work. We hope to continue doing this through our literacy-building efforts and by creating opportunities for community members to connect with each other.

What are some suggestions that you would offer to US policymakers and their staffers to make the consultation process more inclusive? Share your thoughts with the MAIEI community:

✍️ What we’re thinking:

Deciphering Open Source in the EU AI Act

Now that the debates around the EU AI Act are in their final stages (the trilogue negotiations have already started), Hugging Face and its co-signatories (Creative Commons, GitHub, Open Future, LAION, and EleutherAI) have raised their voices through a position statement calling for a clearer framework for open source and open science in the EU AI Act.

Open source has become increasingly relevant to the EU’s digital economy in recent years. Research sponsored by the European Commission has outlined the core role of open source (OS) in the EU’s innovation policy and, more precisely, in critical industrial contexts such as standardization and AI. A study from the European Commission rightly states:

“Throughout the years, openness in AI emerged as either an industry standard or a goal called for by researchers or the community.”

While OS is seen as an innovation booster, the rise and massive adoption of generative AI have considerably upset the initial plan for the EU’s overall approach to regulating AI. It was not easy to anticipate that a new set of artifacts other than code, namely machine learning models, would drive the next open wave.

The regulatory debate has been directly affected by the constant release of AI products, such as large language models (“LLMs”), onto the market. The scope of the EU AI Act has expanded from AI systems (2021) to AI systems and general-purpose AI systems (2022) and, now, to AI systems, general-purpose AI systems, and foundation models (2023).

To delve deeper, read the full article here.

The Impact of AI Art on the Creative Industry

Over the past 20 years, I’ve watched with horror as automation, greed, and exploitation have laid waste to the field of graphic and web design, and now the rise of generative AI art threatens to do even worse to concept artists and illustrators. My video calls on society to focus on what’s important so that AI serves us instead of the other way around.

To delve deeper, read the full article here.

🤔 One question we’re pondering:

With so many legislative and regulatory efforts underway across the US, UK, and EU, we’ve been paying more and more attention to who is invited to the table to contribute to the discussions. So far, we’re seeing a very small set of people making their presence felt at each of these venues, which risks collapsing the diversity of perspectives into a narrower subset. What are some names of individuals and organizations that need to be included in the upcoming discussions in US lawmaking bodies and the UK AI Safety Summit?

We’d love to hear from you and share your thoughts back with everyone in the next edition:

🔬 Research summaries:

Fair Generative Model Via Transfer Learning

With generative AI and deep generative models becoming more widely adopted in recent times, the question of how we may tackle biases in these models is becoming more relevant. This paper discusses a simple yet effective approach to training a fair generative model in two different settings: training from scratch or adapting an already pre-trained biased model.

To delve deeper, read the full summary here.

CRUSH: Contextually Regularized and User Anchored Self-Supervised Hate Speech Detection

“Put your sword back in its place,” Jesus said to him, “for all who draw the sword will die by the sword” (Gospel of Matthew 26:52), because “hate begets hate.”

We draw inspiration from these words and from the empirical evidence of the clustering tendency of hate speech provided by Mathew et al. (2020) to design two loss functions for hate speech detection that incorporate user-anchored self-supervision and contextual regularization. We incorporate these into the pretraining and fine-tuning phases, significantly improving automatic hate speech detection on social media.

To delve deeper, read the full summary here.

Deepfakes and Domestic Violence: Perpetrating Intimate Partner Abuse Using Video Technology

The increasing ease of use and availability of deepfake technology signal a worrying trend in technology-facilitated sexual abuse. This article argues that while deepfake technology poses a risk to women in general, victims of domestic abuse are at particular risk because perpetrators now have a new means to threaten, blackmail, and abuse their victims with non-consensual, sexually explicit deepfakes.

To delve deeper, read the full summary here.

📰 Article summaries:

Inside the AI Porn Marketplace Where Everything and Everyone Is for Sale

What happened: On the website CivitAI, users have access to AI models that can generate pornographic scenarios from real images of real people, taken from across the internet without their consent. Although creating such images of real people is prohibited on the site, CivitAI hosts AI models of specific individuals that can be combined with pornographic models to create non-consensual sexual images. A 404 Media investigation uncovered a surge in communities dedicated to advancing this practice, facilitated by websites that let users generate large numbers of these images, revealing that the problem of AI-generated porn is more extensive than previously thought. The investigation also highlighted the exploitation of sex workers whose images power this AI-generated pornography.

Why it matters: Mage, a platform that provides text-to-image AI models, lets users create personalized content, including explicit imagery, for a fee. Although Mage claims to use automated moderation to prevent abuse, 404 Media found that AI-generated non-consensual sexual images are easily accessible on the platform. This raises concerns about content moderation and the commercialization of AI-generated explicit content.

Between the lines: The concept of “the singularity,” a point at which AI surpasses human control, has gained attention with the availability of generative AI tools. However, there is no evidence that companies like OpenAI, Facebook, or Google have achieved this level of AI, and such speculation risks portraying their AI tools as more powerful than they are. Yet in the context of generative AI porn, an AI singularity has, in effect, already occurred, leading to an explosion of non-consensual sexual imagery. This new form of pornography relies on rapidly generated AI content, reshaping how sexual images are produced and shared, with significant ethical and privacy implications.

Large language models aren’t people. Let’s stop testing them as if they were. | MIT Technology Review

What happened: Webb and colleagues recently published a Nature article showing GPT-3’s impressive performance on tests of analogical reasoning, a crucial aspect of human intelligence. In some cases, GPT-3 outperformed undergraduate students. This result is part of a series of remarkable feats by large language models, including GPT-4, which has passed various professional and academic assessments, raising the prospect of machines replacing white-collar workers in fields such as teaching, medicine, journalism, and law. However, there is no consensus on what these results really mean.

Why it matters: The core issue lies in interpreting the results of tests for large language models. Traditional assessments designed for humans, such as high school exams and IQ tests, make certain assumptions about knowledge and cognitive skills. When humans excel, it's assumed they possess these skills. However, when large language models perform well on these tests, it's unclear what this performance truly signifies. Is it genuine understanding, a statistical trick, or repetition? Additionally, unlike humans, the performance of these models is often fragile, as minor test tweaks can drastically alter results. The question arises: Are they demonstrating true intelligence or merely relying on statistical shortcuts?

Between the lines: The central challenge is understanding how these large language models achieve their remarkable feats. Some researchers advocate shifting the focus away from test scores and toward the mechanisms behind the models’ reasoning. They argue that grasping the algorithms and mechanisms at work is essential to truly evaluating their intelligence. Understanding the inner workings of such complex models is difficult, but some believe it is possible in principle to reverse-engineer them and uncover the algorithms responsible for their test performance. This approach could reveal what these models have genuinely learned, moving beyond a fixation on test results toward understanding how they navigate these tests.

Are self-driving cars already safer than human drivers? | Ars Technica

What happened: In August, San Francisco saw significant developments in driverless taxis. The California Public Utilities Commission granted Google’s Waymo and GM’s Cruise permission to charge customers for autonomous taxi rides within the city. However, a week later, Cruise vehicles were involved in two serious crashes in quick succession. Following these incidents, the California Department of Motor Vehicles required Cruise to cut its autonomous taxi fleet in half for the duration of the investigation.

Why it matters: Comparing driverless cars with human drivers is less straightforward than it looks. Waymo and Cruise avoid freeways, which generally have fewer crashes per mile than city streets, and San Francisco’s streets are notably more chaotic than those of many other U.S. cities, so their robotaxis operate in harder-than-average conditions. Moreover, a small fraction of drivers, including teenagers, the elderly, and intoxicated individuals, are responsible for a disproportionate share of crashes, while experienced and alert drivers have crash rates well below the national average. Therefore, to match the safety of a skilled human driver, autonomous vehicles need to beat the national average, not just meet it.

Between the lines: Establishing a reliable benchmark for human driving performance remains challenging because data on crashes reported to authorities is incomplete. The key question for policymakers is whether to allow Waymo and Cruise to expand their autonomous services. Waymo appears to be safer than human drivers, while Cruise’s technology shows promise but may require more data and evaluation. Access to public roads is crucial for testing and improving self-driving technology, as it cannot be evaluated effectively in controlled environments alone.

📖 From our Living Dictionary:

What is the relevance of the smart city to AI ethics?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

Emerging AI Governance is an Opportunity for Business Leaders to Accelerate Innovation and Profitability

As AI capabilities rapidly advance, especially in generative AI, there is a growing need for governance systems that ensure we develop AI responsibly and in ways that benefit society. Much of the current Responsible AI (RAI) discussion focuses on risk mitigation. Although important, this precautionary narrative overlooks the ways in which regulation and governance can promote innovation.

If companies across industries take a proactive approach to corporate governance, we argue that this could boost innovation (similar to the argument in the UK Government’s whitepaper on a pro-innovation approach to AI regulation) and profitability, both for individual companies and for the entire industry that designs, develops, and deploys AI. This can be achieved through a variety of mechanisms, including higher-quality systems, project viability, a safety race to the top, usage feedback, and increased funding and signaling from governments.

Organizations that recognize this early can not only boost innovation and profitability sooner but also potentially benefit from a first-mover advantage.

To delve deeper, read the full article here.

💡 In case you missed it:

A Matrix for Selecting Responsible AI Frameworks

Process frameworks for implementing responsible AI have proliferated, making it difficult to navigate the many that exist. This paper introduces a matrix that organizes responsible AI frameworks by their content and audience. The matrix points teams within organizations that build or use AI toward tools that meet their needs, and it can also help other organizations develop AI governance, policies, and procedures.

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.
