AI Ethics Brief #136: Diversity and LLMs, EU AI Act and competitiveness, avoiding burnout in RAI, platform power in GenAI, and more.

Is Artificial General Intelligence (AGI) already here?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week, we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for the price of a couple of ☕.

If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

This week’s overview:

🙋 Ask an AI Ethicist:

What will the impact of the EU AI Act be on the competitiveness of EU-based AI companies?

✍️ What we’re thinking:

Does diversity really go well with Large Language Models?

🤔 One question we’re pondering:

What are some key lessons that we can draw from the GDPR to help prepare for the EU AI Act?

🪛 AI Ethics Praxis: From Rhetoric to Reality

What can we do to avoid burnout when working in Responsible AI?

🔬 Research summaries:

Tiny, Always-on and Fragile: Bias Propagation through Design Choices in On-device Machine Learning Workflows

Examining the Impact of Provenance-Enabled Media on Trust and Accuracy Perceptions

Reduced, Reused, and Recycled: The Life of a Benchmark in Machine Learning Research

📰 Article summaries:

Signal President Meredith Whittaker on resisting government threats to privacy

Artificial General Intelligence Is Already Here

‘Is this an appropriate use of AI or not?’: teachers say classrooms are now AI testing labs

📖 Living Dictionary:

What is the relevance of griefbots to AI ethics?

🌐 From elsewhere on the web:

Coworking: Abhishek Gupta tames machines

💡 ICYMI

Exploring Antitrust and Platform Power in Generative AI

🚨 AI Ethics Praxis: From Rhetoric to Reality

Aligning with our mission to democratize AI ethics literacy, we bring you a new segment in our newsletter that will focus on going beyond blame to solutions!

We see a lot of news articles, reporting, and research work that stops just shy of providing concrete solutions and approaches (sociotechnical or otherwise) that are (1) reasonable, (2) actionable, and (3) practical. These are much needed so that we can start solving the problems that the domain of AI faces, rather than just continuing to point them out and pontificate about them.

In this week’s edition, you’ll see us work through a solution our team proposed to a recent problem. We then invite you, our readers, to share your proposed solutions in the comments (or via email if you prefer), following the same criteria: (1) reasonable, (2) actionable, and (3) practical.

🙋 Ask an AI Ethicist:

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours, and we’ll answer it in the upcoming editions.

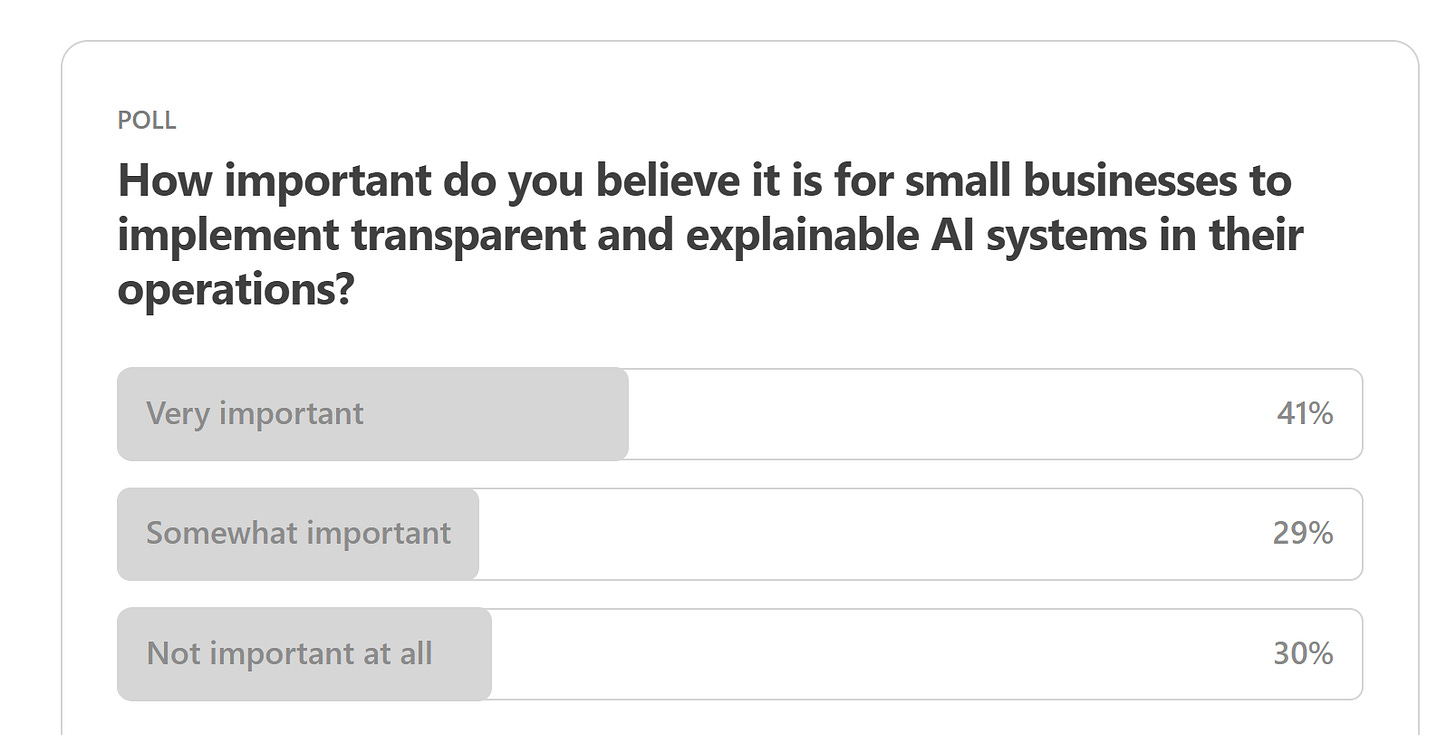

Here are the results from the previous edition for this segment:

Last week’s results show that even small companies are expected to follow Responsible AI practices, and it behooves their owners and operators to invest in putting ethical AI principles into practice.

One of our readers, Mina K., wrote in asking about the impact that the EU AI Act will have on the competitiveness of companies originating in Europe against the incumbents and dominant North American firms in terms of AI innovation. This is a great question!

We saw in 2018, when the GDPR took effect, that it had a global impact on how privacy was incorporated into products and services. This time around, however, the effect may be more muted, especially given the large sums of money at stake and how fundamentally AI is affecting every industry and profession.

Regulatory Burden and Compliance Costs: The EU AI Act introduces stringent regulations and oversight for AI systems, which could increase the regulatory burden and compliance costs for Europe-based AI companies. This could potentially disadvantage smaller companies that may struggle to meet these requirements due to limited resources. High compliance costs could also lead to innovative companies moving their activities abroad and investors withdrawing their capital from European AI.

Impact on Innovation and R&D: The Act could also stifle innovation and R&D in AI. The US government has raised concerns that the legislation could curb investment in the technology and favor large AI companies at the expense of smaller rivals. Some of Europe's top business leaders have also warned that the rules go too far, especially in regulating generative AI and foundation models, which could hurt the bloc's competitiveness and spur an exodus of investment.

As Responsible AI adherents and practitioners, what do you think will be the biggest impacts for companies based in Europe? Share your thoughts with the MAIEI community:

✍️ What we’re thinking:

Does diversity really go well with Large Language Models?

Statistical models like LLMs can vary in their ability to consider minority or outlier data points. Generally, traditional statistical models tend to prioritize the majority of data, and outliers or minority data points may have less influence on the model’s predictions or statistics. Language models trained on large, diverse datasets may capture a broader range of language patterns, including those used by minority groups. However, they may still be influenced by the prevalence of specific language patterns in the training data.

Especially in the context of text data and language modeling, outliers could refer to rare or unusual language patterns, words, or phrases that occur infrequently in the training data. LLMs may not explicitly ignore these outliers, but they are less likely to generate them because these machines aim to produce coherent and contextually relevant text based on the patterns they have learned from their training data.
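To make the intuition concrete, here is a deliberately simplified sketch. It assumes a toy unigram "model" whose probabilities are just relative frequencies in a hypothetical corpus (real LLMs condition on context and are far more complex), and it uses made-up phrase variants ("you all" as a majority form, "y'all" as a minority form). It shows how likelihood-based decoding favors the majority pattern, and how lowering the sampling temperature or decoding greedily pushes the minority pattern even further toward zero.

```python
from collections import Counter


def next_token_distribution(tokens, temperature=1.0):
    """Toy unigram model: each variant's probability is its relative frequency,
    sharpened (T < 1) or flattened (T > 1) by a sampling temperature."""
    counts = Counter(tokens)
    total = sum(counts.values())
    # Raising p to the power 1/T is equivalent to dividing log-probabilities by T
    weights = {tok: (c / total) ** (1.0 / temperature) for tok, c in counts.items()}
    norm = sum(weights.values())
    return {tok: w / norm for tok, w in weights.items()}


def greedy_pick(dist):
    """Greedy decoding: always return the single most probable variant."""
    return max(dist, key=dist.get)


# Hypothetical training data: a majority variant and a minority (dialect) variant
corpus = ["you all"] * 95 + ["y'all"] * 5
minority = "y'all"

for t in (1.0, 0.5):
    dist = next_token_distribution(corpus, temperature=t)
    print(f"T={t}: P({minority}) = {dist[minority]:.4f}")

print("greedy choice:", greedy_pick(next_token_distribution(corpus)))
```

In this toy setup the minority variant keeps its 5% share at T=1.0, drops below 0.3% at T=0.5, and is never chosen under greedy decoding. The model does not "ignore" the outlier; it simply assigns it little probability mass, which common decoding settings then shrink further.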

To delve deeper, read the full article here.

🤔 One question we’re pondering:

Given the above discussion on the EU AI Act’s impact, we’ve been exploring how to address the competitiveness question, especially whether there are lessons to be learned from the rollout of the GDPR and how companies adapted their tech stacks and legal operations to meet its requirements. What are some key lessons that we can draw from the GDPR to help prepare for the EU AI Act?

We’d love to hear from you and will share your thoughts with everyone in the next edition:

🪛 AI Ethics Praxis: From Rhetoric to Reality

2023 has been a long year, with challenges and developments arriving almost weekly! Many Responsible AI practitioners, including a lot of our readers who wrote in to chat with us, have expressed a feeling of being overwhelmed. Some even cited burnout as a major reason for pulling back their efforts or leaving the field entirely. This is extremely sad for our field, given that we need our collective expertise now more than ever! What can we do to mitigate burnout? We’ve previously shared some thoughts in an MIT Technology Review article but wanted to offer a few more ideas for consideration. (We’ve kept these brief, focusing on what we’ve found to be most effective; of course, they draw on how to deal with burnout in general, yet we hope they will be helpful.)

To avoid burnout in the field of Responsible AI, practitioners can:

🎯 Set realistic goals and priorities: Establish achievable objectives and focus on the most important tasks to avoid being overwhelmed by the workload. Some issues in AI systems, especially from a socio-technical perspective, lie outside your sphere of control; spend less effort on those and invest more in the items that are within your sphere of control.

🧱 Establish boundaries and routines: Create a clear separation between work and personal life, and develop routines that promote a healthy work-life balance. The issues can often seem all-consuming, especially when you engage with them on social media platforms that are poorly suited to nuanced and meaningful conversation. People tend to talk past each other, and it can be a draining experience, so avoid that when possible!

🌴 Engage in self-care activities: Dedicate time to activities that promote relaxation and well-being, such as exercise, meditation, or hobbies, to recharge and maintain mental health. While this may seem the most obvious, we need you to be at your best to tackle some of the most challenging aspects of our work, especially when Responsible AI priorities clash with other priorities such as business goals within your organization.

You can either click the “Leave a comment” button below or send us an email! We’ll feature the best response next week in this section.

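🔬 Research summaries:

Tiny, Always-on and Fragile: Bias Propagation through Design Choices in On-device Machine Learning Workflows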

On-device machine learning (ML) is used by billions of resource-constrained Internet of Things (IoT) devices – think smart watches, mobile phones, smart speakers, emergency response, and health-tracking devices. This paper investigates how design choices during model training and optimization can lead to unequal predictive performance across gender groups and languages, leading to reliability bias in device performance.
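One simple way to check for the kind of unequal predictive performance described above is to compute a model's accuracy separately for each group and compare them. The sketch below is an illustration only: the paper's exact definition of reliability bias may differ, and the functions `per_group_accuracy` and `accuracy_gap`, the group labels, and the data are all hypothetical. It uses the gap between the best- and worst-served groups as a stand-in disparity measure.

```python
from collections import defaultdict


def per_group_accuracy(y_true, y_pred, groups):
    """Accuracy computed separately for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def accuracy_gap(group_acc):
    """Difference in accuracy between the best- and worst-served groups."""
    return max(group_acc.values()) - min(group_acc.values())


# Hypothetical keyword-spotting results for two speaker groups
y_true = [1, 1, 1, 1, 1, 1, 1, 1]
y_pred = [1, 1, 1, 1, 1, 0, 0, 1]
groups = ["group_a"] * 4 + ["group_b"] * 4

acc = per_group_accuracy(y_true, y_pred, groups)
print(acc, accuracy_gap(acc))
```

In practice, one would compute this gap before and after each design choice in the workflow (for example, a change in training data or model compression) to see whether that step widens the disparity between groups.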

To delve deeper, read the full summary here.

Examining the Impact of Provenance-Enabled Media on Trust and Accuracy Perceptions

Emerging technical provenance standards have made it easier to identify manipulated visual content online, but how do users actually understand this type of provenance information? This paper presents an empirical study where 595 users of social media were given access to media provenance information in a simulated feed, finding that while provenance does show effectiveness in lowering trust and perceived accuracy of deceptive media, it can also, under some circumstances, have similar effects on truthful media.

To delve deeper, read the full summary here.

Reduced, Reused, and Recycled: The Life of a Benchmark in Machine Learning Research

In AI research, benchmark datasets coordinate researchers around shared problems and measure progress toward shared goals. This paper explores the dynamics of benchmark dataset usage across 43,140 AI research papers published between 2015 and 2020. We find that AI research communities are increasingly concentrated on fewer and fewer datasets and that these datasets have been introduced by researchers situated within a small number of elite institutions. Concentration on research datasets and institutions has implications for the trajectory of the field and the safe deployment of AI algorithms.

To delve deeper, read the full summary here.

📰 Article summaries:

Signal President Meredith Whittaker on resisting government threats to privacy

What happened: Meredith Whittaker, President of the Signal Foundation, took on the role just over a year ago and has faced political threats to encryption since then. Signal's messaging app, known for its strong privacy stance and end-to-end encryption, has faced bans in various countries, including China, Egypt, and Iran. Legislation in the U.K., India, and Brazil could require Signal to moderate content, potentially compromising its encryption. Whittaker sees these laws as existential threats to Signal and is actively addressing them.

Why it matters: Signal is navigating privacy legislation in various countries, but with a small team and limited resources, it relies on a network of global partners in the digital rights and policy space. While Signal faces infrastructural disadvantages compared to major social media companies, its unique commitment to privacy sets it apart. Signal's role in providing high-availability tech at scale without participating in surveillance is unparalleled, given the new political economy of the tech industry.

Between the lines: Signal is resolute in its commitment to end-to-end encryption, even when governments demand access to user data. Whittaker emphasizes that Signal cannot provide such information because its encryption guarantees make doing so technically impossible. Signal's open-source code and protocol have withstood extensive testing, supporting those privacy guarantees. Signal is also actively working to maintain service access for users in the face of government bans, particularly in repressive regimes. Whittaker acknowledges the challenge of navigating a complex technological ecosystem and defending Signal's integrity in the face of bad-faith efforts to sow doubt.

Artificial General Intelligence Is Already Here

What happened: The article discusses the concept of Artificial General Intelligence (AGI) and argues that advanced AI language models like ChatGPT have, at least partially, achieved it. These "frontier models" exhibit many flaws, including biases, citation errors, and occasional arithmetic mistakes. While imperfect, they represent a significant advance in AI capabilities, and the article considers them the first examples of AGI, surpassing the abilities of previous AI generations.

Why it matters: AGI's definition and measurement are subject to debate. Some propose new terms like "Artificial Capable Intelligence" and suggest modern Turing Tests as metrics, such as the ability to generate wealth online. However, these metrics have limitations, and better tests are needed to assess the true capabilities of AGI models. It's crucial to distinguish linguistic fluency from intelligence and be cautious about conflating intelligence with consciousness. There is no clear method to measure consciousness in AI, and debates on this topic are currently unresolvable. To understand AGI's impact on society, questions about its implications, benefits, and potential harms must be addressed.

Between the lines: The article delves into the debate about consciousness in AI and the challenges of measuring it. It highlights that the consciousness debate is often rooted in untestable beliefs and suggests separating intelligence from consciousness and sentience. Additionally, the article touches on the political economy of AI, emphasizing the economic implications of AGI and its potential to exacerbate existing disparities. It calls for open discussions on who benefits, who is harmed, and how to maximize benefits while minimizing harm fairly and equitably.

‘Is this an appropriate use of AI or not?’: teachers say classrooms are now AI testing labs

What happened: Since OpenAI released ChatGPT, educators like high school teacher Vicki Davis have been reevaluating their teaching methods. Davis, also an IT director at her school, helped establish a policy allowing students to use AI tools for certain projects while discussing them with teachers. The introduction of AI has prompted teachers to consider when and how to incorporate AI in their lessons, raising questions about its appropriate use.

Why it matters: The emergence of generative AI tools like ChatGPT has prompted educators to explore their potential in the classroom while being cautious about preventing cheating. Many teachers worry that overreliance on AI could worsen the learning loss experienced by students during the pandemic. The rapid evolution of AI in education poses challenges, and teachers are concerned about balancing its benefits and potential pitfalls.

Between the lines: Several AI apps designed for students have surfaced recently, including Photomath, which can provide math solutions and explanations. Some students used such tools to cheat during the pandemic, which affected their learning when they returned to in-person classes. However, AI tools like Khanmigo by Khan Academy encourage active learning by asking questions and providing guidance rather than directly giving answers. The distinction between AI tutors and cheating tools lies in how they are trained, with Khan Academy emphasizing the importance of active learning. As more AI tutors enter the market, there is a concern that some may facilitate cheating rather than support genuine learning, potentially challenging the educational landscape.

📖 From our Living Dictionary:

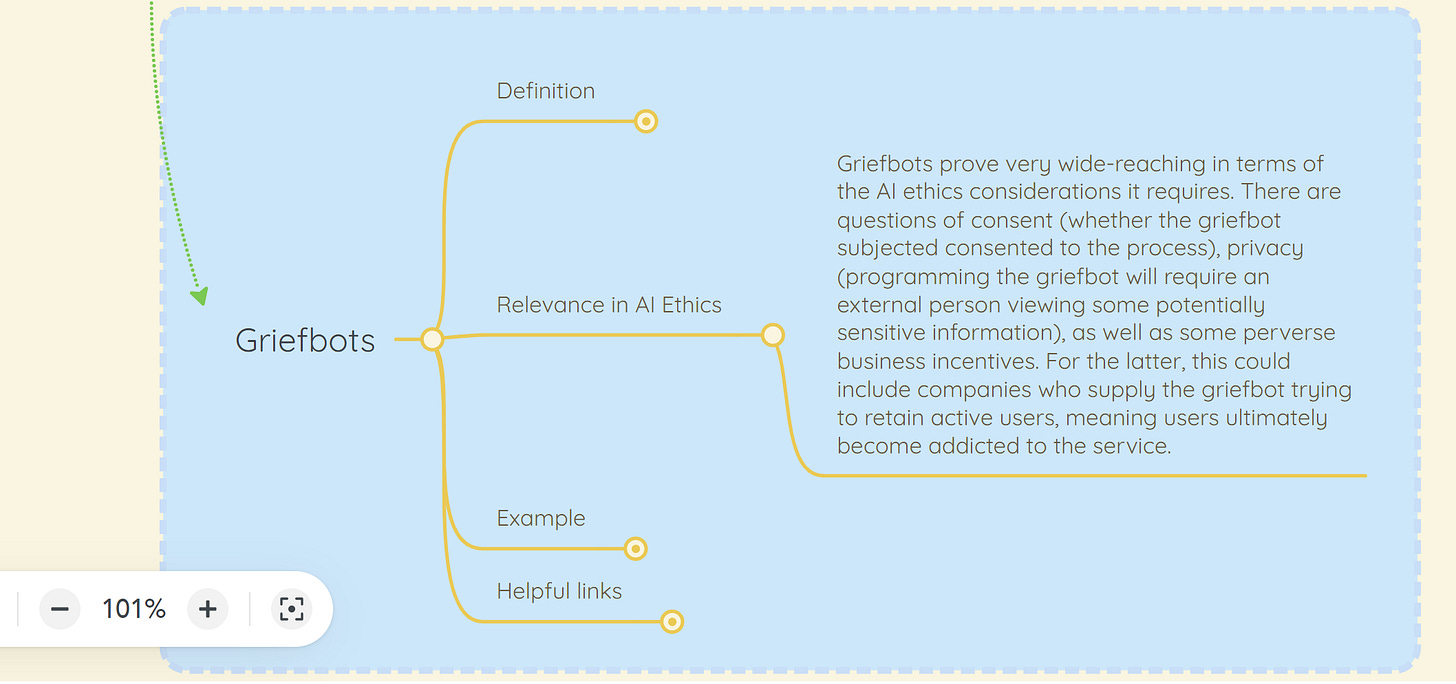

What is the relevance of griefbots to AI ethics?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

Coworking: Abhishek Gupta tames machines

The principal researcher and founder of the Montreal AI Ethics Institute works to build “ethical, safe, and inclusive AI systems and organizations.”

To delve deeper, read the full article here.

💡 In case you missed it:

Exploring Antitrust and Platform Power in Generative AI

The concentration of power among a few technology companies has become the subject of increasing interest and many antitrust lawsuits. In the realm of generative AI, we are once again witnessing the same companies taking the lead in technological advancements. Our short workshop article examines the market dominance of these corporations in the technology stack behind generative AI from an antitrust law perspective.

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.

The EU AI Act will help SMEs manage regulatory issues in a straightforward way rather than leaving them less aware of the ethical aspects of AI development. The US and many other countries will follow, since US AI manufacturers want to sell to Europe. The AI Act applies to AI systems used in Europe, irrespective of where the AI system's developer is physically or legally located.