AI Ethics Brief #135: Responsible open foundation models, change management for responsible AI, augmented datasheets, and more.

What can small(er) companies do to implement AI ethics within their organizations?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week, we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for the price of a couple of ☕.

If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

This week’s overview:

🙋 Ask an AI Ethicist:

What can small(er) companies do to implement AI ethics within their organizations?

✍️ What we’re thinking:

Writing problematic code with AI’s help

🤔 One question we’re pondering:

How can we develop comprehensive strategies and tools to counteract the harmful effects of AI-driven disinformation and protect the integrity of information in an increasingly interconnected world?

🪛 AI Ethics Praxis: From Rhetoric to Reality

What can be done to address some organizational and change management challenges that companies face when embarking on their responsible AI journey?

🔬 Research summaries:

Governing AI to Advance Shared Prosperity

Augmented Datasheets for Speech Datasets and Ethical Decision-Making

Investing in AI for Social Good: An Analysis of European National Strategies

📰 Article summaries:

Eyes on the Ground: How Ethnographic Research Helps LLMs to See

How to Promote Responsible Open Foundation Models

We Don’t Actually Know If AI Is Taking Over Everything - The Atlantic

📖 Living Dictionary:

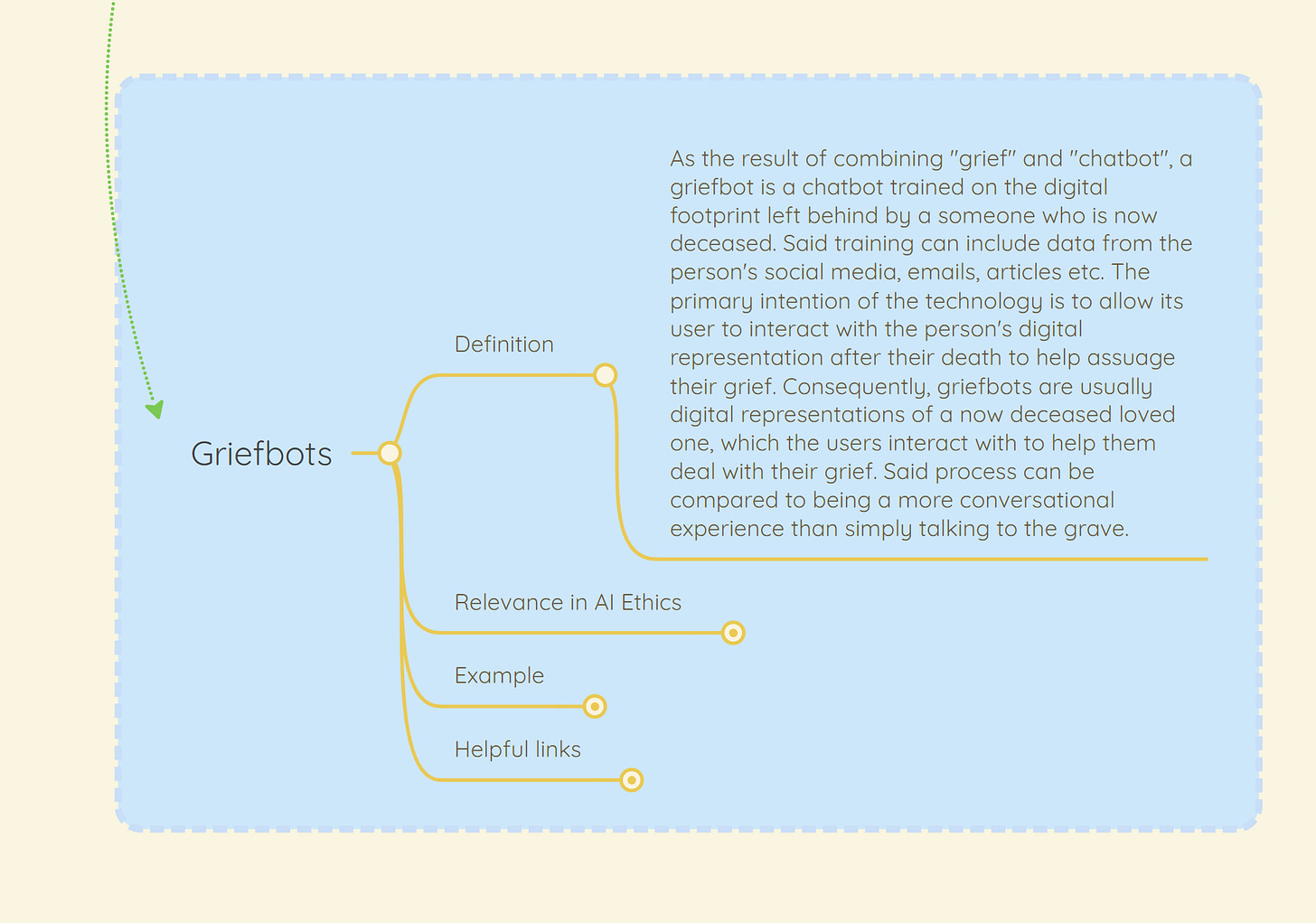

What are griefbots?

🌐 From elsewhere on the web:

Beware the Emergence of Shadow AI

💡 ICYMI

The GPTJudge: Justice in a Generative AI World

🚨 AI Ethics Praxis: From Rhetoric to Reality

Aligning with our mission to democratize AI ethics literacy, we bring you a new segment in our newsletter that will focus on going beyond blame to solutions!

We see a lot of news articles, reporting, and research work that stop just shy of providing concrete solutions and approaches (sociotechnical or otherwise) that are (1) reasonable, (2) actionable, and (3) practical. These are much needed so that we can start to solve the problems that the domain of AI faces, rather than just continuing to point them out and pontificate about them.

In this week’s edition, you’ll see us work through a solution our team proposed to a recent problem. We then invite you, our readers, to share your own proposed solution in the comments (or via email, if you prefer), one that meets the same criteria of being (1) reasonable, (2) actionable, and (3) practical.

🙋 Ask an AI Ethicist:

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours, and we’ll answer it in the upcoming editions.

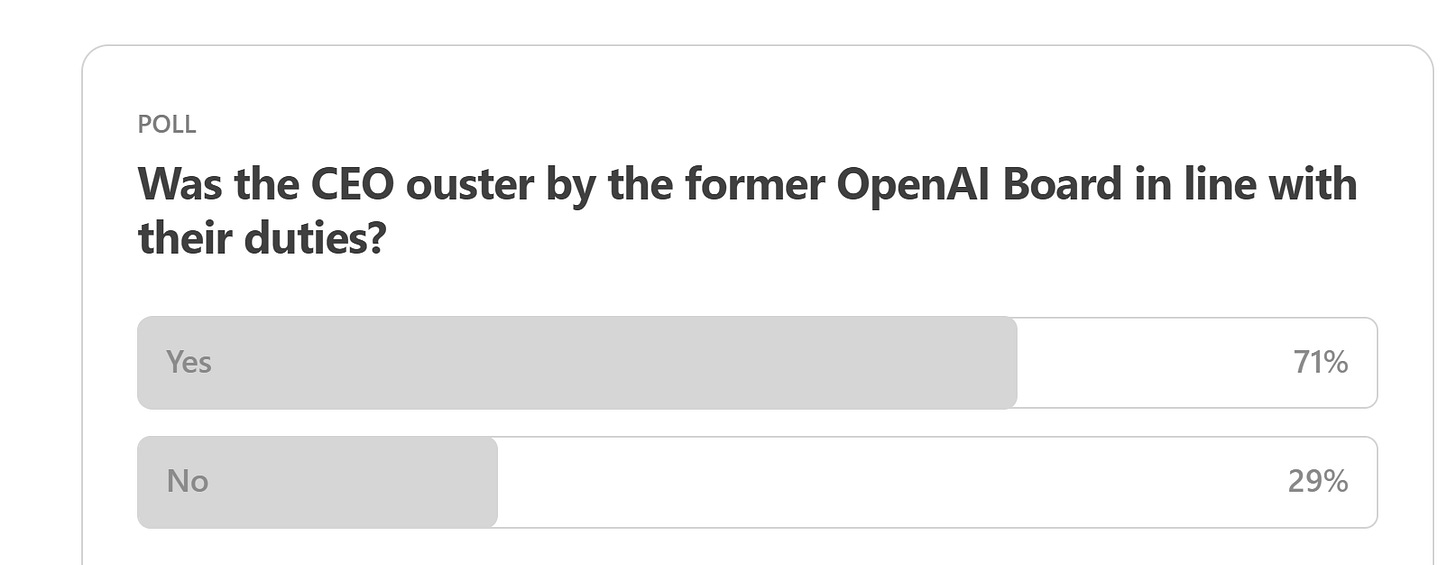

Here are the results from the previous edition for this segment:

One of our readers, Daniel S., wrote in to ask what small(er) companies can do to implement AI ethics within their organizations. This is a great question because easily implementable solutions and frameworks are rare for small-scale organizations, which often lack the resources to deploy full-fledged frameworks, onerous committees, and third-party tools to meet newly demanded Responsible AI requirements.

Collaboration and Learning: Small businesses might not have all the resources needed for implementing ethical AI. Collaborating with industry partners, participating in forums, and learning from case studies can be beneficial.

Scalable and Flexible Solutions: Choose AI solutions that are scalable and flexible, allowing you to adapt as the business grows and as ethical standards evolve.

Employee Training and Awareness: Educate your employees about the ethical use of AI. This includes understanding the limitations of AI, recognizing potential biases in algorithms, and the importance of ethical data handling.

There are, of course, many other things that can be done, but the three points above form a good starting point for those looking to begin this journey.

And to what extent are current tools and solutions accessible to small(er) organizations to achieve the above? Share your thoughts with the MAIEI community:

✍️ What we’re thinking:

Writing problematic code with AI’s help

Humans trust AI assistants too much and end up writing more insecure code. At the same time, they gain false confidence that they have written well-functioning and secure code.

To delve deeper, read the full article here.

🤔 One question we’re pondering:

The ongoing Middle Eastern conflict has seen a surge in AI-generated disinformation, with images and videos being manipulated to spread false narratives and fuel tensions. This has raised concerns about the role of AI in exacerbating the situation and the challenges it poses to discerning truth from falsehood. While some efforts have been made to combat disinformation using AI, such as Microsoft's work on detecting manipulated content, the rapid spread of false information remains a significant issue. As AI continues to advance and become more accessible, it is crucial to consider how societies can effectively address the challenges posed by AI-generated disinformation. How can we develop comprehensive strategies and tools to counteract the harmful effects of AI-driven disinformation and protect the integrity of information in an increasingly interconnected world?

We’d love to hear from you and share your thoughts with everyone in the next edition:

🪛 AI Ethics Praxis: From Rhetoric to Reality

This week, we thought deeply about what it takes to move from principles to practice. In our work, we most often see companies fail to address the organizational and change management challenges they face when embarking on their responsible AI journey. Addressing these head-on is imperative for success:

💼 Leadership Commitment and Strategy Development:

Top-down Commitment: Secure a commitment from top leadership to prioritize responsible AI. This could involve the formulation of a clear strategy that integrates ethical AI practices into the core business objectives.

Ethical AI Governance Structure: Establish a governance structure for ethical AI decision-making, including roles like Chief Ethics Officer or AI Ethics Boards, to provide oversight and guidance on AI projects.

⌨️ Organizational Culture and Training:

Embedding AI Ethics in Culture: Foster a culture where ethical considerations are as critical as technical performance. This involves promoting a mindset where employees feel responsible and empowered to raise ethical concerns.

Training and Awareness: Implement comprehensive training programs to educate employees about the importance of responsible AI, covering topics like bias, fairness, and data privacy. This training should be tailored to different roles within the organization, from developers to executives.

🗒️ Policy and Process Integration:

Development of Ethical Guidelines: Create detailed ethical guidelines and standards that provide clear directives on how to approach AI development and deployment responsibly.

Process Integration: Integrate these ethical guidelines into the entire AI lifecycle, from design to deployment and monitoring. This includes ethical risk assessments, regular audits, and the establishment of protocols for transparency and accountability.

🧑🏽🤝🧑🏽 Stakeholder Engagement and Transparency:

Multi-Stakeholder Involvement: Engage a diverse range of stakeholders, including users, regulatory bodies, and civil society groups, in the development and deployment of AI systems. This helps to ensure a variety of perspectives and needs are considered.

Transparent Communication: Develop a communication strategy that openly discusses how AI systems are developed, the measures taken to ensure their ethical use, and how they impact stakeholders.

📈 Incentive and Performance Metrics:

Rethinking Metrics: Redefine success metrics to include ethical considerations. This means not just evaluating AI systems based on accuracy or efficiency but also on how well they align with ethical principles.

Incentivizing Ethical Behavior: Design incentive structures that reward not only high performance but also adherence to ethical practices. This could include recognition programs, career advancement opportunities, or other benefits.

➿ Continuous Monitoring and Feedback Loops:

Ethical Audits and Reporting: Regularly conduct ethical audits of AI systems to assess compliance with established guidelines. This should be coupled with transparent reporting mechanisms for both internal and external stakeholders.

Feedback Mechanisms: Establish channels for employees, users, and other stakeholders to provide feedback on AI systems, ensuring that ethical considerations are continuously updated and refined.

🤝🏽 Collaboration and External Partnerships:

Cross-Industry Collaboration: Engage with other organizations, industry groups, and academic institutions to share best practices and learn from others' experiences in implementing responsible AI.

Consulting External Experts: Work with ethicists, social scientists, and legal experts to gain external perspectives and insights on ethical AI implementation.

You can either click the “Leave a comment” button below or send us an email! We’ll feature the best response next week in this section.

🔬 Research summaries:

Governing AI to Advance Shared Prosperity

A growing number of leading economists, technologists, and policymakers express concern over excessive emphasis on using AI to cut labor costs. This chapter attempts to give a comprehensive overview of factors driving the labor-saving focus of AI development and use. It argues that understanding those factors is key to designing effective approaches to governing the labor market impacts of AI.

To delve deeper, read the full summary here.

Augmented Datasheets for Speech Datasets and Ethical Decision-Making

The lack of diversity in datasets can lead to serious limitations in building equitable and robust Speech-language Technologies (SLT), especially along dimensions of language, accent, dialect, variety, and speech impairment. To encourage standardized documentation of such speech data components, we introduce an augmented datasheet for speech datasets, which can be used in addition to “Datasheets for Datasets.”

To delve deeper, read the full summary here.

Investing in AI for Social Good: An Analysis of European National Strategies

This research paper examines the AI strategies of EU member states, and this summary explores it in light of the recently approved EU Artificial Intelligence Act (AIA). The paper explores the countries’ plans for responsible AI development, as well as their human-centric and democratic approaches to AI. It categorizes the focus areas of 11 countries’ AI policies and highlights the potential negative impacts of AI systems designed for social outcomes. The analysis provides valuable insights for policymakers and the public as the EU progresses with AI regulation.

To delve deeper, read the full summary here.

📰 Article summaries:

Eyes on the Ground: How Ethnographic Research Helps LLMs to See

What happened: Generative models like GPT-4, with billions of parameters, have reached impressive capabilities, prompting discussions about whether primary qualitative research is still needed. Services like Synthetic Users use these models to let researchers and non-researchers define the users they want to interview and generate simulated responses to their questions. This technology offers a compelling prospect to product leaders, as it facilitates research by generating and analyzing synthetic data, potentially speeding up projects and reducing costs. Stripe Partners' data science and social science teams have conducted experiments over the past 18 months to explore the value of large language models (LLMs) for research. They will present their findings at the 2023 EPIC conference.

Why it matters: LLMs can accelerate specific forms of analysis when given worked examples. Tools like ChatGPT, Bing, Claude, and Google Bard empower researchers with limited computer or data science training to streamline analytical tasks, particularly when dealing with extensive qualitative datasets. LLMs can save time and make such datasets more accessible. Still, it's crucial for researchers to "train" the models with precise category definitions and correct analysis examples, a process known as "few-shot prompting." However, LLMs struggle in settings where a deep understanding of context is essential, which limits their usefulness.
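To make "few-shot prompting" a little more concrete, here is a minimal Python sketch that only assembles the prompt text (category definitions, a few labeled examples, then the new snippet to classify); it does not call any model. The category names, definitions, example snippets, and the `build_few_shot_prompt` helper are all hypothetical illustrations, not taken from Stripe Partners' experiments or from any specific tool.

```python
# Hypothetical sketch: assemble a few-shot prompt for coding qualitative
# interview snippets. Categories and examples are made-up placeholders.

CATEGORIES = {
    "cost": "The participant talks about price, fees, or budget.",
    "trust": "The participant expresses confidence or doubt about a provider.",
}

EXAMPLES = [
    ("The monthly fee is more than we can justify.", "cost"),
    ("I'm not sure they'd handle our data carefully.", "trust"),
]


def build_few_shot_prompt(categories, examples, snippet):
    """Return a prompt with category definitions, labeled examples, and the new snippet."""
    lines = ["Classify the interview snippet into exactly one category.", "", "Categories:"]
    # Precise category definitions come first so the model sees the codebook.
    for name, definition in categories.items():
        lines.append(f"- {name}: {definition}")
    lines.append("")
    lines.append("Examples:")
    # Each worked example pairs a snippet with a correct label.
    for text, label in examples:
        if label not in categories:
            raise ValueError(f"example label {label!r} is not a defined category")
        lines.append(f"Snippet: {text}")
        lines.append(f"Category: {label}")
        lines.append("")
    # The unlabeled snippet goes last, leaving the label for the model to fill in.
    lines.append(f"Snippet: {snippet}")
    lines.append("Category:")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_few_shot_prompt(CATEGORIES, EXAMPLES, "Their support team never answers."))
```

The check on example labels reflects the point above: the examples only help if they are consistent with the category definitions the researcher supplies.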

Between the lines: The text implies that LLMs, even with reinforcement learning from human feedback, cannot effectively scale to handle every evolving, complex context. Ethnographers, experts in cultural observation, have a unique advantage because they can explore uncharted territory, and businesses remain interested in complex domains for a competitive edge. Ethnographers' value becomes clearer as LLMs' capabilities are better defined. This suggests the emergence of data-science-literate ethnographers who can work with LLMs to accelerate their work, focusing on the complex domains they are uniquely qualified to explore.

How to Promote Responsible Open Foundation Models

What happened: Foundation models, such as Stable Diffusion 2, BLOOM, Pythia, Llama 2, and Falcon, have become crucial in the AI ecosystem. These open foundation models, which enable transparency and accessibility, are central to the industry but raise concerns about potential risks. Policymakers are grappling with the question of whether these models should be regulated, and this issue is pivotal in AI regulation discussions, particularly in the context of the EU AI Act and congressional inquiries in the United States. Princeton's Center for Information Technology Policy and Stanford's Center for Research on Foundation Models (CRFM) recently organized a workshop to address these concerns. The workshop discussed principles, practices, and policy regarding open foundation models and featured a keynote by Joelle Pineau, VP of AI Research at Meta.

Why it matters: Pineau emphasized the importance of open foundation models in accelerating innovation. Openness encourages developers to maintain high standards, fosters collaboration for faster and better solutions, and builds trust through transparency. In her view, responsible innovation necessitates extreme transparency to promote participation and trust. Pineau outlined Meta's approach to releasing open foundation models, stating that the company aims to open-source all project components, but this is not always feasible for various reasons.

Between the lines: The panel discussion on AI policy drew parallels between the current situation and the history of open-source software, emphasizing the power of openness in driving innovation. Peter Cihon (GitHub) pointed out the need for greater responsibility among developers due to the substantial capabilities of foundation models. He discussed the potential impact of the EU AI Act on these models and proposed a tiered regulatory approach. Zarek highlighted the common use of open-source software in the U.S. federal government and its role in providing regulatory guidance. Ho summarized the legislative debate on regulating foundation models, recommending adverse event reporting, auditing, and red teaming as part of the regulatory framework. The key question is whether unique regulations are needed for open foundation models, given their marginal risks compared to the alternatives.

We Don’t Actually Know If AI Is Taking Over Everything - The Atlantic

What happened: The rapid proliferation of chatbots and AI-powered applications has brought AI technology to the forefront, but there is growing concern about the secrecy surrounding AI models. Once developed through open research, many AI technologies are now hidden within corporations that provide limited information about their capabilities and development. This lack of transparency has raised issues, such as books being used without their authors' consent. To address this problem, Stanford University's Center for Research on Foundation Models (CRFM) has launched an index to measure the transparency of major AI companies, including OpenAI and Google. The index evaluated companies based on 100 criteria, and all the companies received a low score, indicating a significant lack of transparency.

Why it matters: The newly launched transparency index evaluates AI companies on various criteria, focusing on inputs, model details, and downstream impacts. This information is essential for various stakeholders, including researchers, policymakers, and consumers. The index also highlights industry-wide information gaps, such as copyright protections for training data, disclosure of data sources, and acknowledgment of model biases. The most striking finding is that all companies have poor disclosures regarding impact, which hampers regulatory oversight and consumer awareness of AI's pervasive use in their lives.

Between the lines: The index underscores the pervasive secrecy in the AI industry, making it challenging for regulators and consumers to understand the extent of AI's influence. The lack of transparency is not a new issue in Silicon Valley, with tech platforms becoming increasingly opaque as they gain dominance. The normalization of secrecy in the tech industry has made it challenging to hold companies accountable and understand the full scope of their impact. The index highlights the critical need for transparency in AI development, especially as AI technologies become integral to various aspects of our lives.

📖 From our Living Dictionary:

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

Beware the Emergence of Shadow AI

The enthusiasm for generative AI systems has taken the world by storm. Organizations of all sorts, including businesses, governments, and nonprofit organizations, are excited about its applications, while regulators and policymakers show varying levels of desire to regulate and govern it.

Old hands in the field of cybersecurity and governance, risk & compliance (GRC) functions see a much more practical challenge as organizations move to deploy ChatGPT, DALL-E 2, Midjourney, Stable Diffusion, and dozens of other products and services to accelerate their workflows and gain productivity. An upsurge of unreported and unsanctioned generative AI use has brought forth the next iteration of the classic "Shadow IT" problem: Shadow AI.

To delve deeper, read the full article here.

💡 In case you missed it:

The GPTJudge: Justice in a Generative AI World

This article provides a comprehensive yet comprehensible description of Generative Artificial Intelligence (GenAI), how it functions, and its historical development. It explores the evidentiary issues the bench and bar must address to determine whether actual or asserted (i.e., deepfake) GenAI output should be admitted as evidence in civil and criminal trials. It offers practical, step-by-step guidance for judges and attorneys to follow in meeting the evidentiary challenges posed by GenAI in court. Finally, it highlights additional impacts that GenAI evidence may have on the law and the justice system.

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.

On your question in this brief: "How can we develop comprehensive strategies and tools to counteract the harmful effects of AI-driven disinformation and protect the integrity of information in an increasingly interconnected world?"

1. First off, there was never any assurance of the integrity of information at any time in history, given how power was wielded by monarchs, despots, etc., before democracy. Power decided what was truth until a new power vanquished the old. With democracy and liberal democracy came the expectation that truth production carries sanction (Sophia Rosenfeld), and we kind of expect that truth trumps everything. So we have to start with power, political power in particular, and with our own democratic and anti-democratic agency to add to or disrupt the power of disinformation.

2. For comprehensive strategies, we need to decompose the problem into different segments where independent agency and incentives are at play.

a) Disinformation producers - like politicians, culture warriors and even cavalier journalists.

b) Distributors - social media, search, news aggregator apps, news media (amplifiers, legitimizers, key nodes).

c) We the people-participants - our behavior and responses to information overload.

On the disinformation producers: My claim is that it is not information disorder at work, but a kind of cultural disorder, one where powerful actors do not want democratic culture to take further hold and expand, because they lose power and relevance in those contexts. For these folks, generative AI is a force multiplier like nothing else.

Separate from the actors are two other factors:

1. Narratives (facts and values) - the pathways along which stories are told (Bryan Stevenson's metaphor). These connect producers to people in participative ways.

2. Business models: specific business models at the distributors end actually make it hard to tackle the problems.

We have to imagine a suite of strategies located at different parts of this chain (upstream, midstream, and downstream) and use democratic culture as a long-term anchor.