AI Ethics Brief #149: Preventing bloat in AI ethics processes, AI consent futures, ghosting the machine, AI watermarking 101 ++

Should international summits on AI ethics have a stronger focus on binding commitments?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week, we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for the price of a couple of ☕.

If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

This week’s overview:

🚨 Quick take on recent news in Responsible AI:

AI Safety Summit Seoul 2024

🙋 Ask an AI Ethicist:

How do you prevent bloat in AI ethics processes within an organization?

✍️ What we’re thinking:

How data governance technologies can democratize data sharing for community well-being

🤔 One question we’re pondering:

Should international summits on AI ethics have a stronger focus on binding commitments?

🪛 AI Ethics Praxis: From Rhetoric to Reality

A guide on process fundamentals for Responsible AI implementation

🔬 Research summaries:

AI Consent Futures: A Case Study on Voice Data Collection with Clinicians

On the Perception of Difficulty: Differences between Humans and AI

Ghosting the Machine: Judicial Resistance to a Recidivism Risk Assessment Instrument

📰 Article summaries:

City’s watchdog finds ShotSpotter rarely leads to evidence of gun crimes, investigatory stops - Chicago Sun-Times

On the Societal Impact of Open Foundation Models

AI Watermarking 101: Tools and Techniques

📖 Living Dictionary:

What is the relevance of algorithmic pricing to AI ethics?

🌐 From elsewhere on the web:

‘Cesspool of AI crap’ or smash hit? LinkedIn’s AI-powered collaborative articles offer a sobering peek at the future of content

💡 ICYMI

International Institutions for Advanced AI

🚨 AI Safety Summit Seoul 2024 - here’s our quick take on what happened recently.

The AI Safety Summit recently took place in Seoul, with some valuable outcomes in the form of a declaration by the participating countries towards ethical AI, commitments for frontier AI models, and a global AI safety network. Yet, as with all such efforts, there is always more to be done. Here are some additional considerations for the next iteration of the summit and actions that can be taken in the interim:

1. Enhanced International Collaboration and Coordination

Expand Participation: While the summit saw participation from 27 countries and the EU, efforts should be made to include more nations, especially from the Global South, to ensure a truly global perspective on AI safety and governance.

Regular Summits and Working Groups: Establish more frequent summits and interim working groups to maintain momentum and address emerging issues promptly. This could include regional meetings to address specific local challenges and opportunities.

2. Clear and Enforceable Regulations

Develop Binding Regulations: Move from voluntary commitments to binding international regulations that ensure compliance across borders. This could involve creating an international AI regulatory body with enforcement powers.

Harmonize Standards: Work towards harmonizing AI safety standards globally to avoid regulatory fragmentation and ensure consistent safety practices. This would also reduce the friction that divergent regulatory requirements among trading partners create for trade in, and the import and export of, AI technologies.

3. Focus on Transparency and Accountability

Public Reporting: Mandate regular public reporting on AI safety practices and risk assessments by companies and governments. This would enhance transparency and build public trust in AI technologies.

Independent Audits: Implement independent audits of AI systems and safety practices to ensure compliance with established standards and identify areas for improvement. Developing standards for the audits themselves would make their outcomes better and more consistent.

Did we miss anything?

Sign up for the Responsible AI Bulletin, a bite-sized digest delivered via LinkedIn that covers the Responsible AI research catching our attention at the Montreal AI Ethics Institute.

🙋 Ask an AI Ethicist:

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours, and we’ll answer it in upcoming editions.

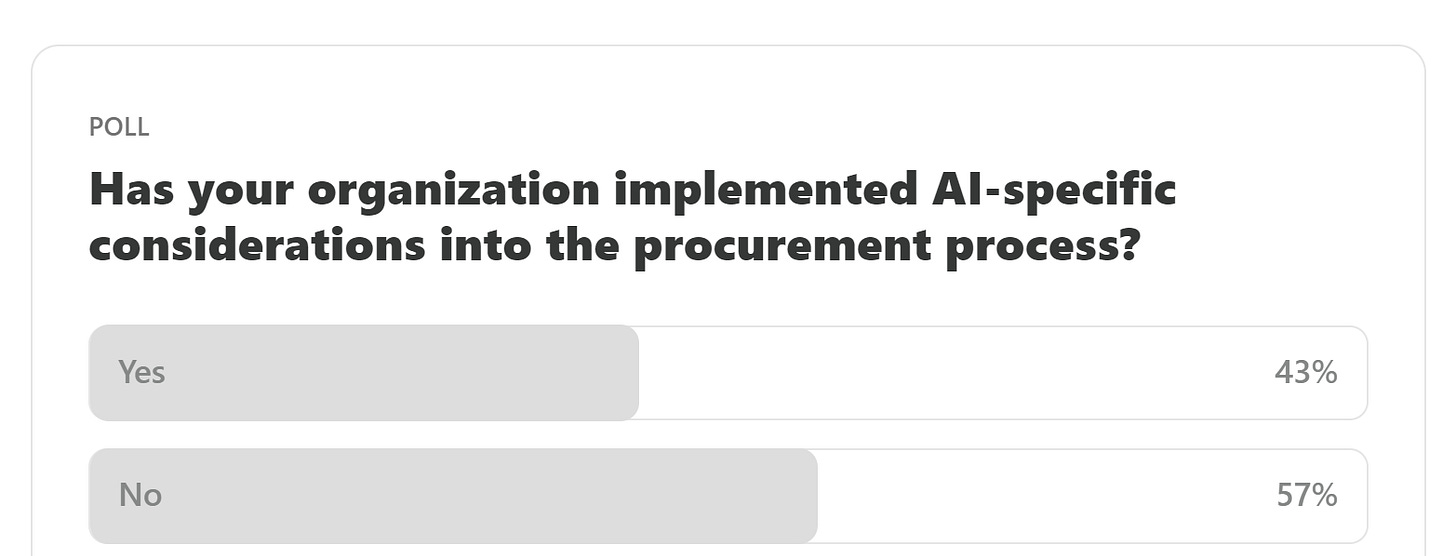

Here are the results from the previous edition for this segment:

It is a bit unfortunate to see a fairly even split on whether AI-specific considerations have been implemented in the procurement process. There are already some good getting-started guides on procurement processes, for both the public and private sectors, that should have boosted adoption of these considerations.

One of our more active readers, John M., recently sent in a dilemma he faced while implementing Responsible AI within his organization. As different ethical guidelines and policies were put in place, and new committees and technical requirements were spun up to address the need for responsible AI across different product development teams, the processes became overly complex and burdensome. This discouraged teams from adhering to the program, and he asked what he could do to combat that.

This is a common point of failure that we see as we work with organizations around the world, who in their (correct!) enthusiasm to implement responsible AI, (incorrectly!) go overboard with the complexity of the processes that they put in place to operationalize it. In a recently published article titled Keep It Simple, Keep It Right: A Maxim for Responsible AI, we help address this question from John.

The simplest way of doing something is often the right way. This maxim not only holds true in life but is also a powerful strategy for designing and implementing RAI programs. By embracing simplicity, we can create AI systems that are easier to understand, maintain, and govern—ultimately ensuring they align with ethical standards and societal values.

Simplicity enhances trust, transparency, and accountability in AI systems and the processes used to govern them.

Implementing simplicity involves clear documentation, modular design, automated monitoring, user-centric approaches, and incremental deployment.
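As an illustration of what "automated monitoring" can look like when it is kept simple, here is a minimal Python sketch of a single automated check: compare approval rates across groups and raise one alert when the gap exceeds a threshold. The metric (a demographic parity gap), the 0.1 threshold, and the function names are illustrative assumptions, not something the article prescribes.

```python
from collections import defaultdict

def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> approval rate per group."""
    totals, approvals = defaultdict(int), defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {g: approvals[g] / totals[g] for g in totals}

def parity_check(decisions, max_gap=0.1):
    """One metric, one threshold: flag when approval rates across groups
    differ by more than max_gap (a hypothetical policy value)."""
    rates = approval_rates(decisions)
    gap = max(rates.values()) - min(rates.values())
    return gap, gap > max_gap

# Hypothetical batch of decisions: group A approved 8/10, group B approved 5/10.
batch = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
gap, alert = parity_check(batch)
print(f"gap={gap:.2f} alert={alert}")  # gap=0.30 alert=True
```

A check this small is easy to document, review, and act on, which is the spirit of the maxim; richer metrics can be added incrementally if and when they are needed.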

Given that many organizations have yet to incorporate AI-specific considerations into their procurement processes, what do you think are the primary reasons for this? Please let us know! Share your thoughts with the MAIEI community:

✍️ What we’re thinking:

How data governance technologies can democratize data sharing for community well-being

Data sharing efforts allow underserved groups and organizations to overcome the concentration of power in our data landscape, where a few organizations, thanks to their data monopolies and resources, are able to decide which problems to solve and how to solve them. But even though data sharing is a counterbalancing, democratizing force, it must be approached cautiously: underserved organizations and groups must navigate difficult barriers related to technological complexity and legal risk. To identify those common barriers, the article examines one type of data sharing effort, data trusts, through the reports that comment on it. Data governance technologies have a large role to play in addressing these practical issues and in democratizing data trusts safely and in a trustworthy manner. Yet technology is far from a silver bullet, and relying on it is dangerous. Still, technology that is no-code, flexible, and secure can help operate data trusts more responsibly. This type of technology helps innovators put relationships at the center of their efforts.

To delve deeper, read the full article here.

🤔 One question we’re pondering:

Building off the momentum from the recently held AI Safety Summit, we’ve had (somewhat) contentious and heated internal discussions about the value of such gatherings if they don’t produce binding outcomes. That is, we’ve been pondering whether these gatherings should focus much more on producing binding outcomes rather than looser commitments, statements, alignment, etc., which could be achieved asynchronously throughout the year.

We’d love to hear from you and share your thoughts with everyone in the next edition:

🪛 AI Ethics Praxis: From Rhetoric to Reality

We often get asked what the guiding principles should be when designing processes for a successful RAI program implementation. A recently published article titled A guide on process fundamentals for Responsible AI implementation shares some insights on that:

Strategic Alignment: Align authority and responsibility to ensure decision-makers are accountable, fostering a culture of ownership in RAI initiatives.

Adaptive Processes: Implement changes incrementally and safely through parallel structures and modular development, minimizing disruptions.

Holistic Incentives: Design incentive structures that align technical performance with ethical and societal impact, motivating diverse stakeholders to contribute to the success of RAI efforts.

🔬 Research summaries:

AI Consent Futures: A Case Study on Voice Data Collection with Clinicians

Artificial intelligence (AI) applications, including those based on machine learning (ML) models, are increasingly developed for high-stakes domains such as digital health and health care. This paper foregrounds clinicians’ perspectives on new forms of data collection that are actively being proposed to enable AI-based assistance with clinical documentation tasks. We examined the prospective benefits and harms of voice data collection during health consultations, highlighting eight classes of potential risks that clinicians are concerned about, including clinical workflow disruptions, self-censorship, and errors that could impact patient eligibility for services.

To delve deeper, read the full summary here.

On the Perception of Difficulty: Differences between Humans and AI

In the realm of human-AI interfaces, quantifying instance difficulty for specific tasks presents methodological complexities. This research investigates prevailing metrics, revealing inconsistencies in evaluating perceived difficulty across human and AI agents. This paper seeks to elucidate these disparities empirically, with the aim of advancing more effective and reliable human-AI interaction systems that account for the diverse skills and capabilities of both humans and AI agents.

To delve deeper, read the full summary here.
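A simple way to see how difficulty estimates can diverge is to score each instance by the share of agents that fail it, then check whether humans and AI models rank the same instances similarly. The Python sketch below uses that failure-rate proxy and Spearman rank correlation; both choices, the function names, and the pass/fail data are assumptions for illustration, not the paper's metrics or results.

```python
from typing import List, Sequence

def difficulty(results: Sequence[Sequence[bool]]) -> List[float]:
    """results[a][i] is True if agent a solved instance i.
    Difficulty of instance i = share of agents that failed it."""
    n_agents = len(results)
    return [sum(not row[i] for row in results) / n_agents
            for i in range(len(results[0]))]

def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the positions they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("correlation undefined when every instance ties")
    return cov / (sx * sy)

# Hypothetical pass/fail results on four instances, invented for illustration.
humans = [[True, True, False, False], [True, False, False, True], [True, True, True, False]]
models = [[False, True, True, True], [False, True, True, True], [True, True, True, False]]
rho = spearman(difficulty(humans), difficulty(models))
print(f"rank agreement on difficulty: {rho:.2f}")  # -0.50 for this made-up data
```

A negative or near-zero correlation means the instances humans find hard are not the ones the models find hard, which is the kind of disparity a single shared difficulty score would hide.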

Ghosting the Machine: Judicial Resistance to a Recidivism Risk Assessment Instrument

Algorithmic risk assessment is often presented as an ‘evidence-based’ strategy for criminal justice reform. In practice, AI-centric reforms may not have the positive impacts their proponents expect. Through a community-informed interview-based study of judges and other legal bureaucrats, this paper qualitatively evaluates the impact of a recidivism risk assessment instrument recently adopted in the state of Pennsylvania. I find that judges overwhelmingly ignore the new tool, largely due to organizational factors unrelated to individual distrust of technology.

To delve deeper, read the full summary here.

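📰 Article summaries:

City’s watchdog finds ShotSpotter rarely leads to evidence of gun crimes, investigatory stops - Chicago Sun-Times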

What happened: The Office of the Inspector General in Chicago released a critical report revealing that ShotSpotter technology, used by the city's police department, rarely leads to investigatory stops or the discovery of evidence of gun crimes. An analysis of over 50,000 ShotSpotter notifications from January 2020 to May 2021 found that only a small percentage led to evidence of a gun-related offense or to an investigatory stop. Deputy Inspector General Deborah Witzburg emphasized the substantial costs of using ShotSpotter, including community concerns and potential negative outcomes when officers respond to alerts with limited information.

Why it matters: The report raises significant concerns about the efficacy of ShotSpotter technology in addressing gun violence, highlighting a lack of concrete evidence linking its use to crime reduction or the recovery of firearm-related evidence. Moreover, the extension of the city’s ShotSpotter contract without transparent deliberation underscores the need for greater scrutiny and evaluation of such decisions. While ShotSpotter maintains that its technology is accurate and important for detecting gunfire, skepticism remains about its overall effect on public safety, prompting calls for evidence-based policymaking.

Between the lines: City officials and stakeholders are divided on the report's implications, with some emphasizing the potential life-saving benefits of ShotSpotter technology. Alderman Chris Taliaferro argues that the quick response enabled by ShotSpotter alerts has directly saved lives, which in his view outweighs concerns about its limited efficacy in crime prevention. On the other hand, Alderman Anthony Beale suggests that ShotSpotter's effectiveness depends on law enforcement's ability to pursue suspects, pointing to broader issues of police policy and resource allocation. The debate underscores the complex interplay between technology, law enforcement practices, and community safety in addressing urban crime.

On the Societal Impact of Open Foundation Models

What happened: Last October, President Biden issued an Executive Order directing the National Telecommunications and Information Administration (NTIA) to investigate the benefits and risks of open foundation models, like Meta's Llama 2 and Stability's Stable Diffusion. The NTIA has now solicited public input through a list of over 50 questions to inform its report, which will influence U.S. policy on these models. Meanwhile, a collaborative paper involving 25 authors from various sectors has been released to clarify the societal impact of open foundation models and propose a risk assessment framework.

Why it matters: Recent research, notably from MIT, raised concerns about the potential misuse of open language models to create bioweapons, prompting calls for stricter regulation or even bans on open foundation models. However, subsequent studies from the RAND Corporation and OpenAI found no significant difference in risk between using language models and accessing information on the Internet. This highlights the need to compare the risks of open models with those of existing technology and to develop a clear methodology for risk assessment.

Between the lines: The paper offers practical recommendations for stakeholders: developers should clarify responsibilities with downstream users, researchers should adopt a standardized risk assessment framework, policymakers should evaluate proposed regulations with empirical evidence, and competition regulators should assess the impact of openness on model benefits systematically. Addressing conceptual confusion and enhancing empirical evidence aims to foster informed decision-making and mitigate potential risks associated with open foundation models.
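Comparing the risks of open models against existing technology amounts, in the simplest case, to comparing two groups on the same task: people with Internet access only, and people with Internet access plus a model, which is broadly the design of the RAND and OpenAI studies mentioned above. A minimal Python sketch of one way to check whether an observed uplift could be due to chance, using an exact permutation test, follows. The scores are invented and the choice of test is our assumption, not the methodology of those studies or of the paper.

```python
from itertools import combinations
from statistics import mean

def permutation_p_value(control, treatment):
    """Exact two-sided permutation test for a difference in mean scores.

    Enumerates every way to split the pooled scores into groups of the
    original sizes, so it is only practical for small studies."""
    pooled = list(control) + list(treatment)
    observed = mean(treatment) - mean(control)
    extreme = total = 0
    for idx in combinations(range(len(pooled)), len(treatment)):
        chosen = set(idx)
        t = [pooled[i] for i in idx]
        c = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        # Count relabelings at least as extreme as the observed difference
        # (the small tolerance guards against floating-point ties).
        if abs(mean(t) - mean(c)) >= abs(observed) - 1e-12:
            extreme += 1
        total += 1
    return observed, extreme / total

# Hypothetical task scores (0-10), invented for illustration only.
internet_only = [3, 4, 2, 5, 3]
internet_plus_model = [4, 3, 5, 3, 4]
uplift, p = permutation_p_value(internet_only, internet_plus_model)
print(f"uplift {uplift:.2f}, p = {p:.2f}")
```

The key design point is the baseline: the uplift is measured against what people can already do with existing tools, not against doing nothing.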

AI Watermarking 101: Tools and Techniques

What happened: In recent months, there has been a surge in 'deepfake' incidents, where AI-generated content, ranging from images of celebrities to recordings of public figures like Joe Biden, circulates widely on social media. This content is often used for various purposes, from selling products to spreading misinformation, posing significant risks to individuals and society. To address this, efforts have been made to develop watermarking techniques for AI-generated content, aiming to add authenticity markers to mitigate misuse.

Why it matters: Watermarking and related techniques play a crucial role in combating deepfakes and non-consensual image manipulation. Tools like Glaze and PhotoGuard subtly alter images to thwart AI algorithms, while systems like Truepic provide metadata linking content to its provenance. However, the balance between openness and security is delicate, as open access to watermarking and detection tools can be exploited by malicious actors to circumvent safeguards. Nonetheless, advances in watermarking promise to strengthen content authenticity and help combat AI-generated disinformation.
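In general, provenance metadata links content to its origin by binding a claim about where a file came from to a cryptographic hash of its exact bytes, then signing that claim. A minimal Python sketch of the idea follows; the manifest layout and function names are hypothetical, and the shared-secret HMAC is a simplification (real provenance systems, such as those built on the C2PA standard, use public-key signatures and a standardized manifest format).

```python
import hashlib
import hmac
import json

def make_manifest(content: bytes, generator: str, key: bytes) -> dict:
    """Bind a claim about where content came from to a hash of its exact bytes."""
    claim = {"sha256": hashlib.sha256(content).hexdigest(), "generator": generator}
    payload = json.dumps(claim, sort_keys=True).encode()
    # HMAC with a shared secret stands in for a real public-key signature.
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}

def verify_manifest(content: bytes, manifest: dict, key: bytes) -> bool:
    """True only if the claim is unaltered and still matches these exact bytes."""
    claim = manifest["claim"]
    payload = json.dumps(claim, sort_keys=True).encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the manifest itself was edited
    return hmac.compare_digest(claim["sha256"], hashlib.sha256(content).hexdigest())

key = b"hypothetical-signing-key"
image = b"...bytes of a generated image..."
manifest = make_manifest(image, "example-image-model", key)
print(verify_manifest(image, manifest, key))            # True: unchanged content
print(verify_manifest(image + b"edit", manifest, key))  # False: altered after signing
```

Metadata like this can simply be stripped from a file, which is one reason it is paired with watermarks embedded in the content itself.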

Between the lines: The proliferation of deepfakes poses significant challenges, from disinformation campaigns to privacy violations. Hugging Face, among others, emphasizes the importance of quickly identifying AI-generated content and advocates for robust watermarking solutions. While not foolproof, watermarking can serve as a vital tool in safeguarding against the misuse of AI and promoting trust in digital content.
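At its simplest, an invisible watermark hides a short signal in parts of the content that people do not perceive. The deliberately naive Python sketch below, assuming 8-bit grayscale pixel values in a plain list, hides bytes in each pixel's least significant bit. It is not how the tools named above or production watermarking systems work, and its fragility shows why robust watermarking is hard and why it is not foolproof.

```python
def embed_lsb(pixels, message):
    """Hide message bits in the least significant bit of successive pixel values."""
    bits = [(byte >> shift) & 1 for byte in message for shift in range(7, -1, -1)]
    if len(bits) > len(pixels):
        raise ValueError("image too small for message")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # each value changes by at most 1
    return marked

def extract_lsb(pixels, n_bytes):
    """Read n_bytes back out of the least significant bits."""
    out = bytearray()
    for start in range(0, n_bytes * 8, 8):
        byte = 0
        for value in pixels[start:start + 8]:
            byte = (byte << 1) | (value & 1)
        out.append(byte)
    return bytes(out)

pixels = [(i * 37) % 256 for i in range(64)]  # stand-in for 8-bit grayscale values
marked = embed_lsb(pixels, b"AI")
print(extract_lsb(marked, 2))  # b'AI'
print(max(abs(a - b) for a, b in zip(pixels, marked)))  # at most 1: invisible
# Simulate a processing step that rewrites low-order bits (lossy re-compression
# disturbs them too, less tidily): the mark is gone.
scrubbed = [v & ~1 for v in marked]
print(extract_lsb(scrubbed, 2))  # b'\x00\x00'
```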

📖 From our Living Dictionary:

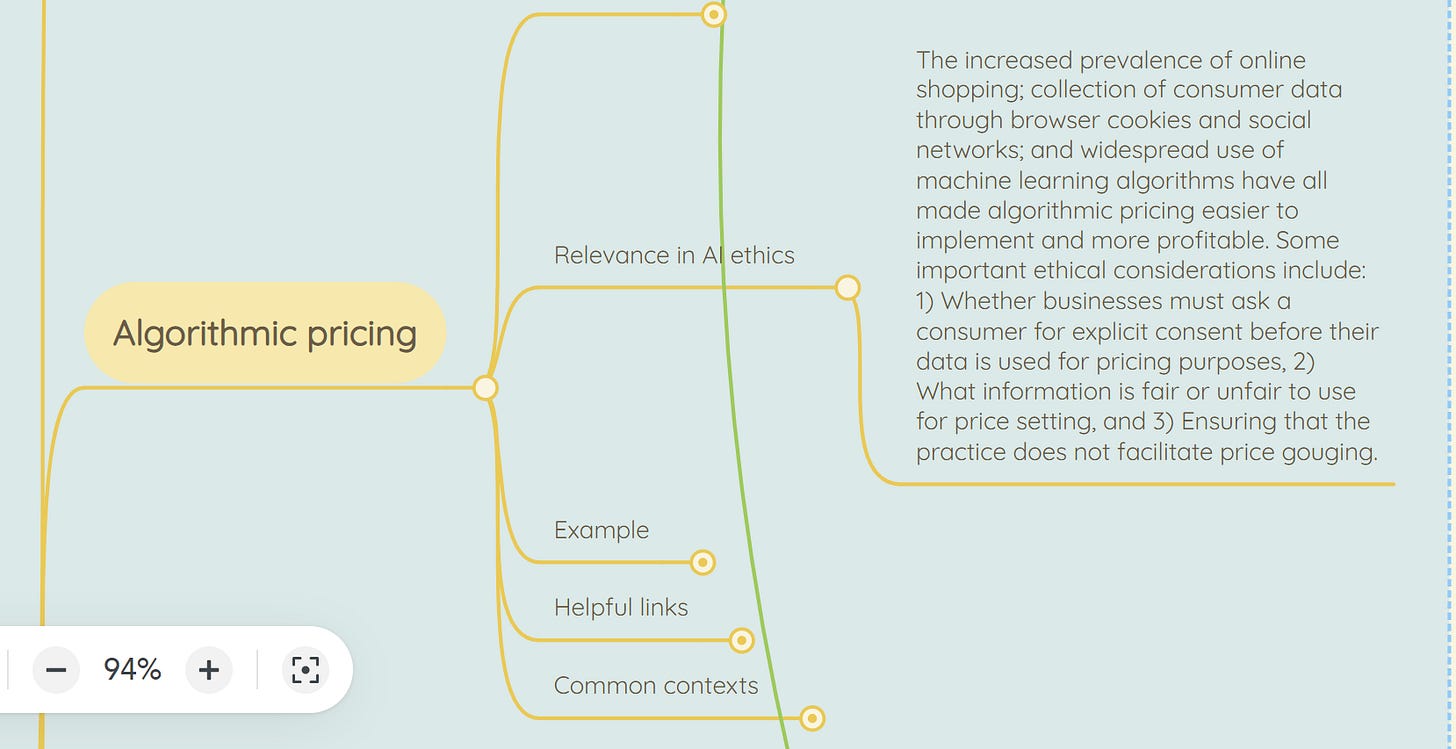

What is the relevance of algorithmic pricing to AI ethics?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

Abhishek Gupta, founder and principal researcher at the Montreal AI Ethics Institute, pointed to a recent paper, “Self-Consuming Generative Models Go MAD,” that showed AI-generated content entering the training feedback loop reduces the performance of future generations of models. “The underlying sources of the generative system largely remain the same, i.e., the internet-scale datasets—this means that source diversity for inputs would potentially be reduced,” he explained.

To delve deeper, read more details here.
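A toy model helps show why a self-consuming loop can erode diversity. In the Python sketch below, a "model" is just a probability distribution over four outputs; each generation samples from its predecessor with a temperature below 1 (favouring outputs that are already likely) and, in the infinite-sample limit, refits to exactly that sampling distribution. The distribution, the temperature of 0.5, the fresh-data fraction, and the function names are assumptions for illustration; this is not the paper's experimental setup.

```python
import math

def entropy(p):
    """Shannon entropy in nats; zero-probability outcomes contribute nothing."""
    return -sum(x * math.log(x) for x in p if x > 0)

def sharpen(p, temperature):
    """Sampling with temperature < 1 over-weights outcomes that are already likely."""
    weights = [x ** (1.0 / temperature) for x in p]
    total = sum(weights)
    return [w / total for w in weights]

def self_consuming_loop(real, generations, temperature=0.5, fresh_fraction=0.0):
    """Each generation is fit to its predecessor's (biased) samples, optionally
    mixed with a fraction of fresh real data. Returns entropy per generation."""
    p = list(real)
    history = [entropy(p)]
    for _ in range(generations):
        synthetic = sharpen(p, temperature)
        p = [(1 - fresh_fraction) * s + fresh_fraction * r
             for s, r in zip(synthetic, real)]
        history.append(entropy(p))
    return history

real = [0.4, 0.3, 0.2, 0.1]  # hypothetical "real data" distribution
closed = self_consuming_loop(real, generations=10)
mixed = self_consuming_loop(real, generations=10, fresh_fraction=0.5)
print(f"start {closed[0]:.3f}  closed loop {closed[-1]:.3g}  with fresh data {mixed[-1]:.3f}")
```

In the closed loop, entropy (a proxy for output diversity) collapses toward zero as one output crowds out the rest; keeping half of each generation's training data real keeps it bounded away from zero.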

💡 In case you missed it:

International Institutions for Advanced AI

International efforts may be useful for ensuring the potentially transformative impacts of AI are beneficial to humanity. This white paper provides an overview of the kinds of international institutions that could help harness the benefits and manage the risks of advanced AI.

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.
