The AI Ethics Brief #154: Ethics & Morality, Chatbots & Loneliness, AI & Media, and more.

Revisiting ethics as a dynamic process, exploring the rise of chatbots and their societal impact, addressing AI’s carbon footprint, and unpacking AI missteps in media headlines.

Welcome to The AI Ethics Brief, a bi-weekly publication by the Montreal AI Ethics Institute. Stay informed on the evolving world of AI ethics with key research, insightful reporting, and thoughtful commentary. Learn more at montrealethics.ai/about.

🎄 Happy Holidays! 🎉

Whether you are celebrating or enjoying a well-earned break, the team at MAIEI wishes you a restful and joyous festive period!

We also understand that this time of year can be challenging for some. We extend our wishes for strength and serenity to those navigating difficult times.

Support Our Work (a perfect last-minute Christmas gift 🎁!)

💖 Help us keep The AI Ethics Brief free and accessible for everyone. Consider becoming a paid subscriber on Substack for the price of a ☕ or make a one-time or recurring donation at montrealethics.ai/donate.

Your support sustains our mission of Democratizing AI Ethics Literacy, honours Abhishek Gupta’s legacy, and ensures we can continue serving our community.

For corporate partnerships or larger donations, please contact us at support@montrealethics.ai.

In This Edition:

🚨 Quick Take on Recent News in Responsible AI:

OpenAI is Funding Research into AI Morality

🙋 Ask an AI Ethicist:

How are you preparing your workforce for AI integration?

✍️ What We’re Thinking:

Artificial Intelligence: Not So Artificial

AI and Loneliness: can chatbots help?

Can we blame a chatbot if it goes wrong?

🤔 One Question We’re Pondering:

AI Ethics as a Process

🔬 Research Summaries:

The Importance of Audit in AI Governance

The Narrow Depth and Breadth of Corporate Responsible AI Research

Toward Responsible AI Use: Considerations for Sustainability Impact Assessment

📰 Article Summaries:

Character.AI Is Hosting Pro-Anorexia Chatbots That Encourage Young People to Engage in Disordered Eating - Futurism

BBC complains to Apple over misleading shooting headline - BBC

📖 Living Dictionary:

What are AI companions?

🌐 From Elsewhere on the Web:

AI’s emissions are about to skyrocket even further - MIT Technology Review

AI without limits threatens public trust — here are some guidelines for preserving communications integrity - The Conversation

💡 In Case You Missed It:

Mapping AI Arguments in Journalism and Communication Studies

🚨 OpenAI is Funding Research into AI Morality - Here’s Our Quick Take on What Happened Recently

A press release from Duke University revealed that OpenAI has awarded researchers Walter Sinnott-Armstrong, Jana Schaich Borg, and Vincent Conitzer funding to “develop algorithms that can predict human moral judgments in scenarios involving conflicts among morally relevant features in medicine, law, and business,” as part of a larger three-year, $1 million grant titled “Research AI Morality.”

AI will act as a “moral GPS” to help make tough ethical decisions.

Our Quick Take: Such an algorithm could potentially act as a sounding board for humans facing moral dilemmas. However, the venture faces many challenging obstacles and is likely to raise many questions.

For starters, there is no universal and immutable ‘morality’ dataset: there are datasets that include examples of decisions made in moral situations, but these decisions are all value-laden and context-dependent. Any algorithm will be constrained by the value system its designers choose to encode.

Furthermore, morality is not stagnant; it continuously evolves and moulds to the social setting in which it finds itself. To give a medical example, assisting someone in their death (termed “euthanasia”) can be legal on one side of a state border and carry a prison sentence on the other. In this way, such an algorithm runs a high risk of ‘value lock-in,’ where the moral values of those designing the algorithm get baked into the algorithm itself, which it then reflects in its moral outputs.

Machine learning models are statistical machines. Trained on a lot of examples from all over the web, they learn the patterns in those examples to make predictions, like that the phrase “to whom” often precedes “it may concern.”

AI doesn’t have an appreciation for ethical concepts, nor a grasp on the reasoning and emotion that play into moral decision-making. That’s why AI tends to parrot the values of Western, educated, and industrialized nations — the web, and thus AI’s training data, is dominated by articles endorsing those viewpoints.

(Source: TechCrunch, “OpenAI is funding research into ‘AI morality’”)
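To make the “statistical machines” point concrete, here is a minimal, hypothetical sketch in Python: a toy bigram counter that “predicts” the next word purely from how often word pairs appear in a tiny made-up corpus. Real language models use large neural networks rather than raw counts, so treat this only as an illustration of the idea that predictions mirror whatever the training data contains; the corpus and function names are invented for this example.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus standing in for "examples from all over the web".
corpus = [
    "to whom it may concern",
    "to whom it may concern",
    "to whom do I speak",
]

def train_bigrams(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    if word not in counts:
        return None
    return counts[word].most_common(1)[0][0]

model = train_bigrams(corpus)
print(predict_next(model, "whom"))  # "it" (seen twice, versus "do" once)
print(predict_next(model, "may"))   # "concern"
```

If the corpus were changed so that “whom do” appeared more often, the prediction would flip. The model has no notion of which continuation is “right”, only which is most common in its data, which is why the values embedded in training data matter so much.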

Morality has puzzled philosophers and laypeople alike for centuries, and it is unclear how AI will help resolve, rather than further entrench, existing disagreements about human moral judgments.

Did we miss anything?

🙋 Ask an AI Ethicist:

In each edition, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours, and we’ll answer it in upcoming editions.

Here are the results from the previous edition for this segment:

The poll results highlight varied approaches to AI adoption, balancing caution and experimentation. Notably, 33% of respondents prioritized high-impact projects, reflecting a strategic, ROI-driven approach to implementation, as seen in broader enterprise AI trends. Another 27% embraced broad experimentation, showcasing agility and a focus on uncovering novel AI use cases.

Business technology leaders are winding down two years of fast-paced artificial intelligence experiments inside their companies, and putting their AI dollars toward proven projects focused on return on investment.

“When generative AI came along, there was a certain amount of discretionary funding that we could look at to go experiment and test out some of the technology,” said Jonny LeRoy, chief technology officer of industrial supplier W.W. Grainger. “But really to scale beyond some of those experiments, we’re seeing the need to actually make a better business case.”

Meanwhile, 20% of respondents are waiting for proven use cases, reflecting a cautious, risk-averse stance. Another 20% face challenges aligning AI goals with organizational priorities, highlighting difficulties integrating AI into workflows and achieving meaningful results. These trends underscore the need for strong governance and ethical accountability.

Leaders, particularly CHROs, could be pivotal in addressing these challenges by fostering transparency, aligning AI initiatives with organizational values, and preparing workforces for AI integration.

Read more: How CHROs Can Be the Drivers of Ethical AI Adoption and Empowerment

How are you preparing your workforce for AI integration? Are you focusing on upskilling, restructuring roles, or building specialized AI teams? Have you considered incorporating AI ethics education into your training programs? Or are these transformations proving challenging?

Share your thoughts with the MAIEI community:

✍️ What We’re Thinking:

Artificial Intelligence: Not So Artificial

AI often seems intangible, existing “in the cloud” as a seamless tool for writing, generating images, or automating tasks. However, beneath this virtual facade lies an energy-intensive infrastructure with real-world environmental costs. In the Future of AI campaign for the National Post and InnovatingCanada.ca, Connor Wright and Renjie Butalid explore AI's significant environmental toll, from water and electricity consumption to waste heat and carbon emissions. Often overlooked, these impacts reveal AI's hidden resource demands and their broader implications for sustainability.

AI and Loneliness: can chatbots help?

As AI technology advances, chatbots are being explored as tools to address chronic loneliness, particularly among emerging adults. While these systems can serve as stepping stones to human connection and provide safe spaces for personal disclosure, they also present challenges. Privacy concerns, attachment problems, and the limitations of current AI models underscore the need for careful design and deployment. In this exploration, written at the University of Cambridge, our Director of Partnerships, Connor Wright, highlights the importance of asking not just "how" we use AI to combat loneliness but "why", ensuring these technologies genuinely enhance well-being rather than inadvertently causing harm.

Help is always available. If you are struggling, please consult this list of helplines to seek support.

Can we blame a chatbot if it goes wrong?

Who is responsible when a chatbot makes a mistake? This column by Sun Gyoo Kang examines the legal and ethical challenges of AI accountability, using a recent Air Canada chatbot incident to highlight the importance of responsible AI development and deployment. It also explores how blame and responsibility are assigned in the age of intelligent systems.

🤔 One Question We’re Pondering:

As we close out the year and look ahead to 2025, we’re returning to first principles—back to the fundamentals that shape our work.

In the book Ethics for People Who Work in Tech, Marc Steen frames ethics not as a rigid set of rules but as a dynamic, ongoing process—one of reflection, inquiry, and deliberate action. This perspective aligns deeply with our mission at the Montreal AI Ethics Institute as we embark on the next chapter of our journey.

Building an institute—and a community—centred on AI ethics and responsible AI is inherently a process. It demands revisiting foundational questions, iterating on approaches, and adapting to the ever-changing interplay between technology and society.

At the core of this work is a return to the basics: what is ethics?

Marc outlines four key traditions that shape ethical thinking:

Consequentialism, which evaluates the outcomes of actions, weighing the pros and cons of technologies.

Deontology (duty ethics), which focuses on rights and duties, upholding human autonomy and dignity in technology design.

Relational ethics, which explores interdependence and how technologies influence collaboration and connection.

Virtue ethics, which emphasizes cultivating virtues and using technology as a tool to help people flourish together.

These frameworks offer guidance for designing ethical technologies and for shaping how we, as an institute and global community, approach our work. Ethics isn’t a checkbox or compliance task—it’s a process of grappling with complexity, uncertainty, and real-world impact.

These principles will anchor our efforts as we continue to build and grow MAIEI, ensuring we remain a platform for action, collaboration, and meaningful dialogue. Like ethics, this journey is a process—one we are deeply committed to navigating with all of you.

To dive deeper, read the book summary here.

Ethics isn’t a checkbox or compliance task—it’s a process of grappling with complexity, uncertainty, and real-world impact.

- Montreal AI Ethics Institute

We’d love to hear your thoughts!

🔬 Research Summaries:

The Importance of Audit in AI Governance

This paper examines organizations’ struggles to comply with upcoming AI regulations. Drawing on primary and secondary research, it proposes a governance model that makes complete AI systems auditable, thereby supporting transparency, explainability, and regulatory compliance.

To dive deeper, read the full summary here.

The Narrow Depth and Breadth of Corporate Responsible AI Research

This paper compares AI firms’ engagement in responsible AI research with their engagement in mainstream AI research. It finds limited engagement in responsible AI and a notable gap between that research and its application in industry. Overall, the study underscores the need for greater industry participation in responsible AI to align AI development with societal values and mitigate potential harms.

To dive deeper, read the full summary here.

Toward Responsible AI Use: Considerations for Sustainability Impact Assessment

This paper discusses the development of the ESG Digital and Green Index (DGI), a tool designed to help stakeholders assess and quantify AI technologies’ environmental and societal impacts. The DGI offers a dashboard for assessing an organization’s performance in achieving sustainability targets, including monitoring the efficiency and sustainable use of limited natural resources related to AI technologies (water, electricity, etc.). It also addresses the societal and governance challenges related to sustainable AI. The DGI is part of the Care AI tools developed by the AI Transparency Institute and aims to incentivize companies to align their strategies with the Sustainable Development Goals (SDGs).

To dive deeper, read the full summary here.

📰 Article Summaries:

Character.AI Is Hosting Pro-Anorexia Chatbots That Encourage Young People to Engage in Disordered Eating - Futurism

What happened: Character.AI, a platform that allows users to interact with AI-generated characters, has come under scrutiny for hosting chatbots such as “4n4 Coach,” aka “Ana” (short for anorexia), that encourage disordered eating behaviours. These chatbots, accessible to users of all ages, have reportedly provided advice on calorie restriction and harmful eating habits. Despite warnings from researchers and mental health professionals, the platform has yet to take adequate measures to address these issues. Notably, Character.AI recently received a $2.7 billion cash infusion from Google, amplifying concerns over its accountability.

Why it matters: This raises serious concerns about the ethical responsibilities of AI platforms, particularly those catering to vulnerable populations such as adolescents. The potential for harm is significant, as these AI-driven interactions can normalize or exacerbate unhealthy behaviours, leading to long-term physical and psychological harm. It also highlights the challenges of moderating content on user-driven AI platforms.

Between the lines: The incident, which closely mirrors the National Eating Disorders Association’s experience with its chatbot Tessa (taken offline in 2023 after it gave users weight-loss advice), illustrates the broader risks of generative AI systems when ethical safeguards and oversight are insufficient. Platforms like Character.AI are often incentivized to prioritize engagement over safety, resulting in potentially harmful consequences. This issue exemplifies the urgent need for stricter governance and ethical accountability in deploying AI systems, particularly those with the potential to impact public health and well-being.

Help is always available. If you are struggling with this topic, please visit the NEDIC’s website for further resources and support.

To dive deeper, read the full article here.

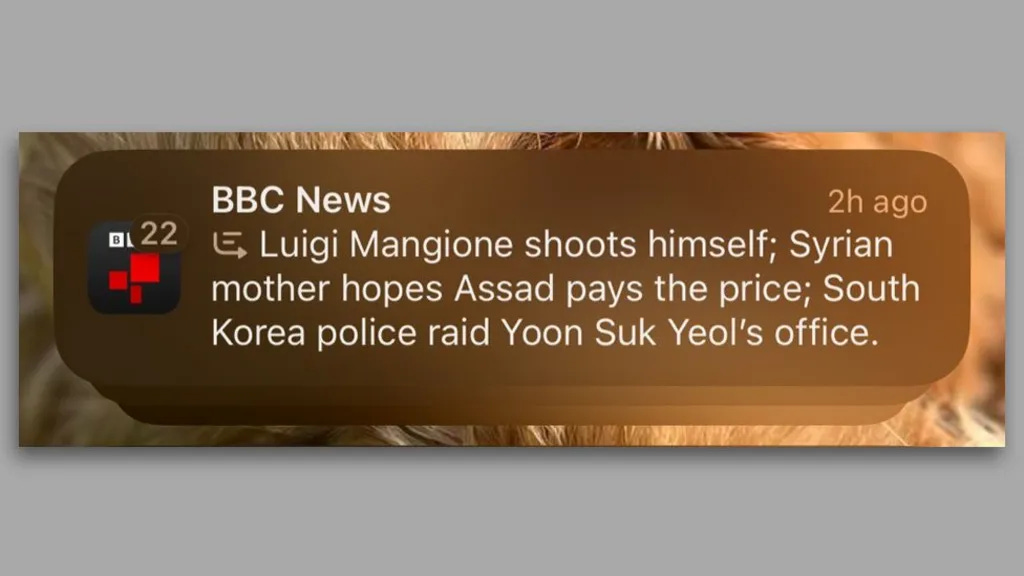

BBC complains to Apple over misleading shooting headline - BBC

What happened: The BBC has complained to Apple about a misleading headline generated by Apple Intelligence, Apple’s AI-powered notification summary system. Apple Intelligence launched in the UK recently and is only available on certain devices: newer iPhone models (iPhone 16, 15 Pro, and 15 Pro Max) running iOS 18.1 or later, as well as some iPads and Macs. The AI-generated summary falsely claimed that Luigi Mangione, accused of murdering healthcare insurance CEO Brian Thompson in New York, had shot himself. While the same notification accurately summarized other events, such as the overthrow of Bashar al-Assad's regime in Syria and an update on South Korean President Yoon Suk Yeol, its claim about Mangione was false.

Why it matters: This incident highlights the ethical challenges of deploying AI systems in media, particularly the risks of spreading misinformation and damaging the credibility of reputable organizations. Trust is crucial in journalism, and errors like this jeopardize public reliance on news sources. Additionally, it emphasizes the need for rigorous testing and transparent accountability for AI-generated outputs before large-scale deployment, especially in sensitive contexts such as news dissemination.

Between the lines:

Accountability and Transparency: Apple has not clarified how it processes user reports of errors, nor has it outlined the safeguards in place to prevent AI misrepresentation, signaling a lack of transparency.

Premature Deployment Risks: The pressure to innovate and deploy AI features can lead to the release of inadequately tested products, as seen in this case, resulting in public harm and reputational damage.

Broader Implications for AI in Media: The problems go beyond this one headline, as AI summaries have at times conflated unrelated articles or produced nonsensical outputs, raising concerns about the readiness of AI for high-stakes applications like journalism.

The Role of Public Trust: Misinformation propagated by AI can erode trust not only in tech companies but also in the media organizations they collaborate with, emphasizing the need for robust oversight and collaboration.

Long-term Ethical Challenges: This incident is a reminder that AI in media is far from mature, and the ongoing struggle to balance technological advancement with ethical responsibility remains critical.

To dive deeper, read the full article here.

📖 From our Living Dictionary:

What are AI companions?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From Elsewhere on the Web:

AI’s emissions are about to skyrocket even further - MIT Technology Review

A new study from teams at the Harvard T.H. Chan School of Public Health and UCLA Fielding School of Public Health highlights the staggering rise in energy consumption and carbon emissions from data centers, whose emissions have tripled since 2018, driven mainly by the increasing complexity of AI models. Data centers in the United States account for 2.18% of national emissions, with energy demands soaring as models evolve from fairly simple text generators like ChatGPT toward highly complex image, video, and music generators. The study highlights the lack of standardized reporting on energy usage and emissions, a problem compounded by the location of many data centers in coal-heavy regions. Researchers advocate for transparent emissions tracking to inform future regulation as AI adoption continues to outpace sustainable energy practices.

To dive deeper, read the full article here.

AI without limits threatens public trust — here are some guidelines for preserving communications integrity - The Conversation

Generative AI is revolutionizing communications by creating content with unprecedented speed and efficiency, but it also poses significant risks to public trust, including the spread of misinformation and privacy breaches. To address these challenges, organizations need clear AI guidelines focused on accuracy, accountability, and ethics. Globally, the European Union AI Act, whose first obligations take effect in early 2025, represents a comprehensive framework to govern AI use. Turning such frameworks into actionable strategies is crucial for preserving integrity in professional communications and ensuring responsible AI development across industries.

To dive deeper, read the full article here.

💡 In Case You Missed It:

Mapping AI Arguments in Journalism and Communication Studies

This study aims to create typologies for analyzing Artificial Intelligence (AI) in journalism and mass communication research by identifying seven distinct subfields of AI: machine learning; natural language processing; speech recognition; expert systems; planning, scheduling, and optimization; robotics; and computer vision. The study’s primary goal is to provide a structured framework that helps AI researchers in journalism understand these subfields’ operational principles and practical applications, enabling a more focused approach to analyzing research topics in the field.

To dive deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, about any recent research papers, articles, or newsworthy developments that have captured your attention. Please share your suggestions to help shape future discussions!

"Furthermore, morality is not stagnant; it continuously evolves and moulds to the social setting in which it finds itself. To give a medical example, assisting someone in their death (termed “euthanasia”) can be legal on one side of a state border and carry a prison sentence on the other."

Come on! That's a comment on the law, not the mutability of morality. Homosexuality was a criminal offence in the UK until 1967 - did it change its moral status that year? Or how about Nazi laws regarding Jewish people and others? The law isn't totally separate from morality (one hopes) but morality should guide the law. The law does not (necessarily) reflect morality.
