AI Ethics Brief #144: Mechanisms of AI policy adoption, scientists' view on GenAI potential, incorporating ethics into GTM strategy, and more.

What are some good resources that are keeping track of how the regulatory landscape is evolving?

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week, we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for the price of a couple of ☕.

If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

This week’s overview:

🙋 Ask an AI Ethicist:

What strategies do you recommend for international cooperation to ensure the development of globally responsible AI systems?

✍️ What we’re thinking:

Regulating computer vision & the ongoing relevance of AI ethics

🤔 One question we’re pondering:

What are some good resources that are keeping track of how the regulatory landscape is evolving?

🪛 AI Ethics Praxis: From Rhetoric to Reality

How should business leaders incorporate ethics into their go-to-market (GTM) strategy?

🔬 Research summaries:

Discursive framing and organizational venues: mechanisms of artificial intelligence policy adoption

Implications of Distance over Redistricting Maps: Central and Outlier Maps

Scientists’ Perspectives on the Potential for Generative AI in their Fields

📰 Article summaries:

The Evolution of Enforcing our Professional Community Policies at Scale | LinkedIn Engineering

How OpenAI is approaching 2024 worldwide elections

Each Facebook User is Monitored by Thousands of Companies – The Markup

📖 Living Dictionary:

What is the relevance of GIGO to AI ethics?

🌐 From elsewhere on the web:

Open-Sourcing Highly Capable Foundation Models

💡 ICYMI

Toward an Ethics of AI Belief

🚨 Regulations and efforts galore in the US towards Responsible AI - here’s our quick take on what happened last week.

U.S. Government's AI Regulatory Vision

The U.S. Administration has tasked agencies with defining the next steps in regulatory approaches to AI. This includes promoting a comprehensive vision that supports safe and responsible AI innovation, promotes competition, and encourages policies that are robust and flexible. The U.S. is looking to advance a model of AI regulation in concert with countries like Japan, the UK, and Australia, which are taking sector-specific approaches to regulating AI. Hundreds of bills have been introduced in statehouses across the country this year, underscoring the need for federal leadership on AI regulation.

AI Safety Consortium

The U.S. Department of Commerce announced the creation of the AI Safety Institute Consortium (AISIC), which includes major tech companies like OpenAI, Google, Microsoft, Meta, Apple, and others. The consortium is an outcome of President Biden's executive order on AI and is tasked with developing guidelines for red-teaming, capability evaluations, risk management, safety and security, and watermarking synthetic content.

These developments indicate a growing emphasis on creating a regulatory framework that ensures the responsible development and deployment of AI technologies, with a focus on safety, security, and ethical considerations. The involvement of major tech companies and the push for global interoperability suggest a collaborative effort to shape the future of AI regulation both in the U.S. and internationally.

Did we miss anything?

Sign up for the Responsible AI Bulletin, a bite-sized digest delivered via LinkedIn for those who are looking for research in Responsible AI that catches our attention at the Montreal AI Ethics Institute.

🙋 Ask an AI Ethicist:

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours, and we’ll answer it in the upcoming editions.

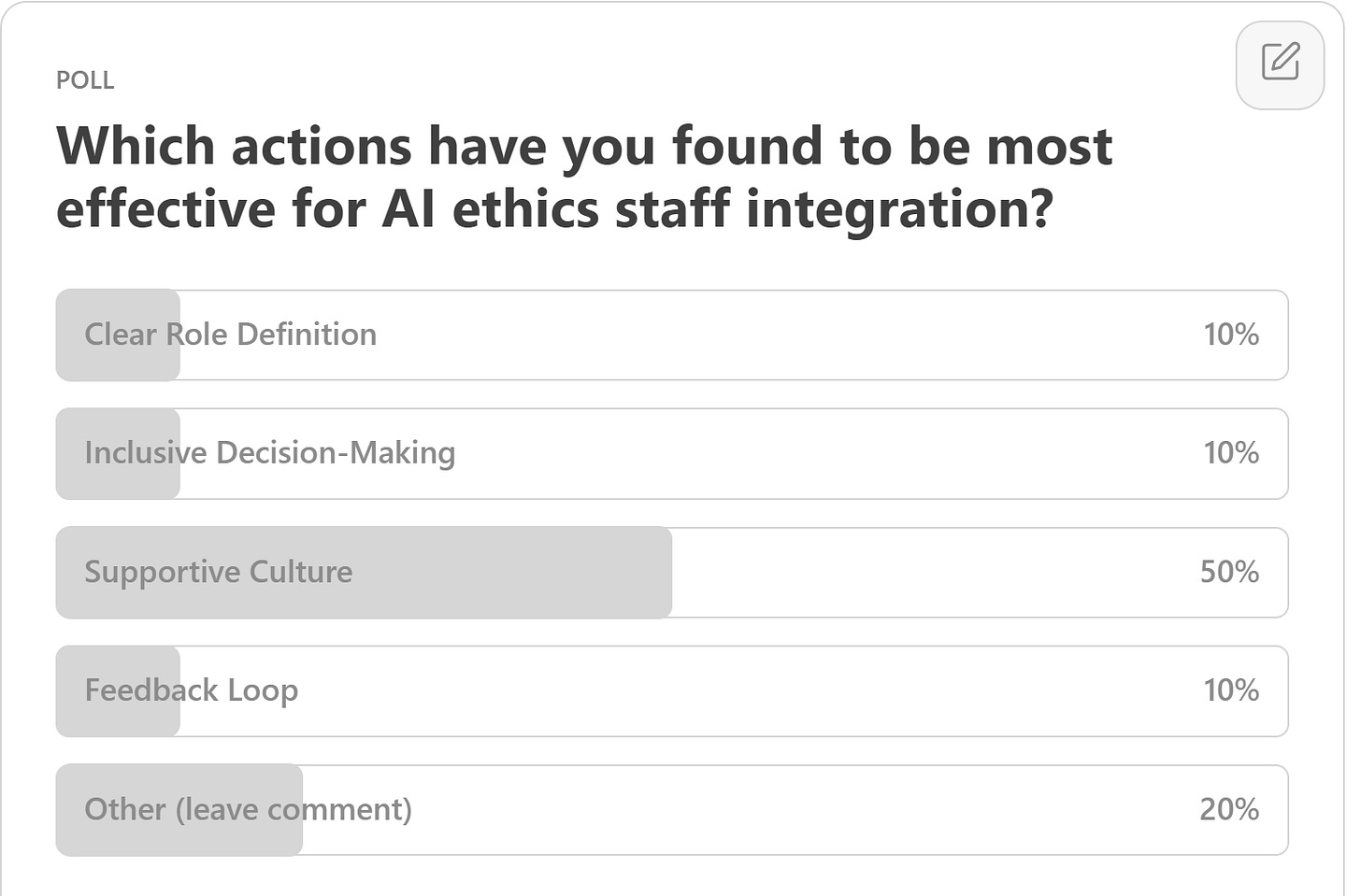

Here are the results from the previous edition for this segment:

We absolutely agree with how our community responded to the above question. A supportive culture is one of the prime requirements for staff working on AI ethics to be effective in their roles, especially given the fraught nature of the challenges and the (sometimes) misaligned incentives between ethical and business goals.

On to the reader question of the week. One of our readers, P.R., wrote in to ask: given the diverse cultural and regulatory landscapes across the globe, how does our research take into account different global perspectives on AI ethics, and what strategies do we recommend for international cooperation to ensure the development of globally responsible AI systems?

The following are some efforts (some of which MAIEI staff are a part of) and approaches that we’ve found useful for international cooperation on research and development in Responsible AI:

Multilateral Frameworks

Encourage participation in international forums and multilateral agreements that aim to set global standards for AI ethics, similar to the UNESCO Recommendation on the Ethics of Artificial Intelligence. These frameworks can help harmonize regulations and promote a common understanding of AI ethics that respects cultural differences.

Cross-Cultural Research Collaborations

Foster partnerships across institutions worldwide to conduct research that integrates diverse ethical perspectives into AI development. This can be facilitated through international research grants, joint workshops, and conferences that aim to bridge cultural divides and promote a shared understanding of ethical AI.

Global AI Ethics Standards

Support the development of international standards for AI ethics, such as those being developed by the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems. These standards can provide a common foundation for AI ethics that accommodates cultural diversity while ensuring responsible AI development globally.

Are there other approaches that you adopt towards addressing the question asked by our reader this week? Please let us know! Share your thoughts with the MAIEI community:

✍️ What we’re thinking:

Regulating computer vision & the ongoing relevance of AI ethics

What should we do about computer vision’s potential ethical and societal implications? This column discusses whether computer vision requires special treatment concerning AI governance, how the EU’s AI Act tackles computer vision’s potential implications, why AI ethics is still needed after the AI Act, and what implications of computer vision deserve more attention in public and political debate.

To delve deeper, read the full article here.

🤔 One question we’re pondering:

With all the developments happening in the US towards regulating AI systems, what are some good resources that are keeping track of how the regulatory landscape is evolving?

We’d love to hear from you and share your thoughts with everyone in the next edition:

🪛 AI Ethics Praxis: From Rhetoric to Reality

In advising organizations that are seeking to bring Generative AI systems to their users and customers, one question that business leaders often ask us is how they should incorporate ethics into their go-to-market (GTM) strategy.

Here are the things that we’ve found to be most useful as the starting point for organizations:

Transparency

Communication about the AI's functionality, training data, and decision logic should be clear and straightforward.

Actionable Steps: Implement a transparency protocol detailing the AI's algorithms, data sources, and decision-making processes.

Privacy & Data Governance

Individual privacy must be safeguarded, and data usage should be both ethical and legal.

Actionable Steps: Enact strong data governance, adhering to privacy laws like GDPR and CCPA, and utilize data anonymization and encryption as needed.

Safety & Security

AI systems should be secure against attacks and safe for public use.

Actionable Steps: Implement security measures, conduct threat assessments, and continuously monitor for vulnerabilities.

Ethical Use & Impact

The broader societal impact of AI should be considered, aiming for a positive contribution.

Actionable Steps: Consult with a diverse set of stakeholders to gauge societal impacts and guide the development towards ethical uses.

Regulatory Compliance

Compliance with existing and forthcoming AI regulations is essential.

Actionable Steps: Keep abreast of AI regulations and establish a system for ongoing legal compliance.

Continuous Improvement

Ethical AI practices should evolve through continuous learning and updating.

Actionable Steps: Set up mechanisms for regular policy and practice evaluations, incorporating new insights and societal values.

This is not meant to be exhaustive since there is a lot more that needs to be done once these first few considerations are implemented!

You can either click the “Leave a comment” button below or send us an email! We’ll feature the best response next week in this section.

🔬 Research summaries:

Discursive framing and organizational venues: mechanisms of artificial intelligence policy adoption

This article explores the impact of the European Union’s Artificial Intelligence Act (EU AI Act) on Nordic policymakers and their formulation of national AI policies. This study investigates how EU-level AI policy ideas influence national policy adoption by examining government policies in Norway, Sweden, Finland, Iceland, and Denmark. The findings shed light on the dynamics between ideational policy framing and organizational capacities, highlighting the significance of EU regulation in shaping AI policies in the Nordic region.

To delve deeper, read the full summary here.

Implications of Distance over Redistricting Maps: Central and Outlier Maps

In recent years, computational and sampling methods have had a significant impact on redistricting and gerrymandering. In this paper, we introduce a distance measure over redistricting maps and study its implications. First, we identify a central map that mirrors the Kemeny ranking in a scenario where a committee is voting over a collection of redistricting maps to be drawn. Second, we also show how our distance measure can be used to possibly detect gerrymandered maps. Specifically, some redistricting instances known to be gerrymandered are found to be outliers, being in the 99th percentile in terms of their distance from our central maps.

To delve deeper, read the full summary here.
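
For readers who want a feel for the idea in the redistricting summary above, here is a minimal sketch. It is not the paper's distance measure: as a stand-in, we assume a simple pair-disagreement distance (how many pairs of units are grouped together in one map but split in the other), take the "central" map to be the one with the smallest total distance to the rest of the ensemble, and report how far a suspect map sits from that center as a percentile. The function names, the toy ensemble, and the suspect map are all hypothetical.

```python
from itertools import combinations


def pair_disagreement(map_a, map_b):
    """Count unit pairs grouped together in one map but split in the other.

    Each map is a list giving the district label of every unit. Only the
    grouping matters, so relabeling districts does not change the distance.
    """
    if len(map_a) != len(map_b):
        raise ValueError("maps must cover the same units")
    return sum(
        (map_a[i] == map_a[j]) != (map_b[i] == map_b[j])
        for i, j in combinations(range(len(map_a)), 2)
    )


def central_map(maps):
    """Return the index of the map with the smallest total distance to all maps."""
    totals = [sum(pair_disagreement(m, other) for other in maps) for m in maps]
    return min(range(len(maps)), key=totals.__getitem__)


def distance_percentile(candidate, center, ensemble):
    """Percent of ensemble maps that are no farther from the center than the candidate."""
    d = pair_disagreement(candidate, center)
    no_farther = sum(pair_disagreement(m, center) <= d for m in ensemble)
    return 100.0 * no_farther / len(ensemble)


# Hypothetical toy ensemble: six units, two districts per map.
ensemble = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1, 1],
    [0, 0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1],
]
suspect = [0, 1, 0, 1, 0, 1]  # alternating assignment, far from every sampled map

center = ensemble[central_map(ensemble)]
print(pair_disagreement(suspect, center))
print(distance_percentile(suspect, center, ensemble))
```

A map that lands in the top percentile of distances from the center is only a candidate for closer scrutiny, not proof of gerrymandering; the full summary covers the measure and the evidence the authors actually use.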

Scientists’ Perspectives on the Potential for Generative AI in their Fields

There is potential for Generative AI to have a substantive impact on the methods and pace of discovery for a range of scientific disciplines. We interviewed twenty scientists from a range of fields (including the physical, life, and social sciences) to gain insight into whether or how Generative AI technologies might add value to the practice of their respective disciplines, including not only ways in which AI might accelerate scientific discovery (i.e., research) but also other aspects of their profession, including the education of future scholars and the communication of scientific findings.

To delve deeper, read the full summary here.

📰 Article summaries:

The Evolution of Enforcing our Professional Community Policies at Scale | LinkedIn Engineering

What happened: LinkedIn has shared insights into the development and evolution of its anti-abuse platform, focusing on managing account restrictions. They highlight using advanced tools like CASAL and machine learning models to detect malicious intent promptly. The platform's growth necessitated continuous improvements to infrastructure and policies, enabling efficient management of member restrictions while enhancing the user experience.

Why it matters: LinkedIn's approach to member restrictions evolved from a reliance on relational databases to implementing innovative solutions like server-side caching and a full refresh-ahead cache. The platform's exponential growth presented challenges in maintaining system efficiency and consistency, prompting a fundamental overhaul. LinkedIn prioritized principles such as high QPS, low latency, and operational simplicity to ensure seamless restriction enforcement across its extensive product offerings.

Between the lines: LinkedIn's journey underscored the importance of starting simple and scaling thoughtfully to avoid unnecessary complexity. They emphasized humility, proactivity, and collaboration in addressing system limitations and driving efficiency. Continuous benchmarking and experimentation were crucial in adapting to evolving challenges and maintaining system resilience amidst growth.
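
For readers unfamiliar with the refresh-ahead caching pattern mentioned above, here is a minimal, generic sketch. It is not LinkedIn's implementation: the class name, the `loader` function, the `ttl` and `refresh_ratio` parameters, and the member ID are all assumptions made for illustration. The idea is that an entry nearing expiry is reloaded before it goes stale, so most reads are served from memory rather than waiting on the database.

```python
import time


class RefreshAheadCache:
    """Toy refresh-ahead cache: entries are reloaded shortly before they expire."""

    def __init__(self, loader, ttl=60.0, refresh_ratio=0.8, clock=time.monotonic):
        self._loader = loader                      # hypothetical function: key -> value
        self._ttl = ttl                            # seconds an entry stays valid
        self._refresh_after = ttl * refresh_ratio  # age at which a refresh is triggered
        self._clock = clock
        self._entries = {}                         # key -> (value, loaded_at)

    def _load(self, key):
        value = self._loader(key)
        self._entries[key] = (value, self._clock())
        return value

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return self._load(key)  # cold miss: the caller waits for the loader
        value, loaded_at = entry
        age = self._clock() - loaded_at
        if age >= self._ttl:
            return self._load(key)  # expired: the caller waits again
        if age >= self._refresh_after:
            # Still valid but close to expiry: refresh the entry and serve the
            # cached value. This sketch reloads inline for simplicity; a real
            # system would hand the reload to a background worker.
            self._load(key)
        return value


def load_restriction(member_id):
    """Hypothetical stand-in for a database lookup of a member's restriction status."""
    return {"member_id": member_id, "restricted": False}


cache = RefreshAheadCache(load_restriction, ttl=60.0, refresh_ratio=0.8)
print(cache.get("member-123"))
```

The trade-off is that a read inside the refresh window may return a value that is slightly out of date, in exchange for not blocking on the slower store.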

How OpenAI is approaching 2024 worldwide elections

What happened: In anticipation of the 2024 elections across major democracies, efforts are underway to ensure technology is not misused to undermine the electoral process. Collaborative measures are being taken to safeguard against potential abuse, emphasizing accurate voting information, enforcing policies, and enhancing transparency. Cross-functional teams are investigating and addressing potential threats, reflecting a commitment to platform safety.

Why it matters: The responsible use of technology is crucial for the integrity of elections, and initiatives are in place to prevent abuse, such as misleading content and impersonation tactics. Tools are continually refined to improve factual accuracy, reduce bias, and prevent misuse for political campaigning or discouraging voter participation. Transparency measures, including enhanced image provenance and access to real-time news reporting, aim to empower voters to assess information and make informed decisions.

Between the lines: Collaboration with organizations like the National Association of Secretaries of State underscores a proactive approach to election integrity. Lessons learned from these partnerships will inform strategies globally. Ongoing efforts will focus on adapting and learning from various stakeholders to prevent the potential misuse of technology in elections worldwide.

Each Facebook User is Monitored by Thousands of Companies – The Markup

What happened: Consumer Reports conducted a study utilizing data from 709 volunteers' Facebook archives, revealing extensive tracking by numerous companies sending data to Facebook. This form of surveillance, known as "server-to-server" tracking, is typically hidden from users. While the study's sample may not be fully representative, it offers valuable insights into online data collection practices.

Why it matters: The study sheds light on the scale and implications of online tracking, particularly concerning events and custom audiences that involve collecting data outside of Meta's platforms. Such practices enable targeted advertising based on users' activities, even outside of social media platforms. Recommendations from Consumer Reports highlight the need for policy interventions to ensure data minimization, enhance transparency, and empower consumers to control their privacy.

Between the lines: The study underscores the disconnect between users' expectations of online privacy and the reality of pervasive tracking. Calls for policy reforms, including data minimization strategies and enhanced transparency measures, emphasize the need to shift the burden of privacy protection from consumers to corporations. However, consumers may remain vulnerable to extensive tracking practices without comprehensive federal privacy laws.

📖 From our Living Dictionary:

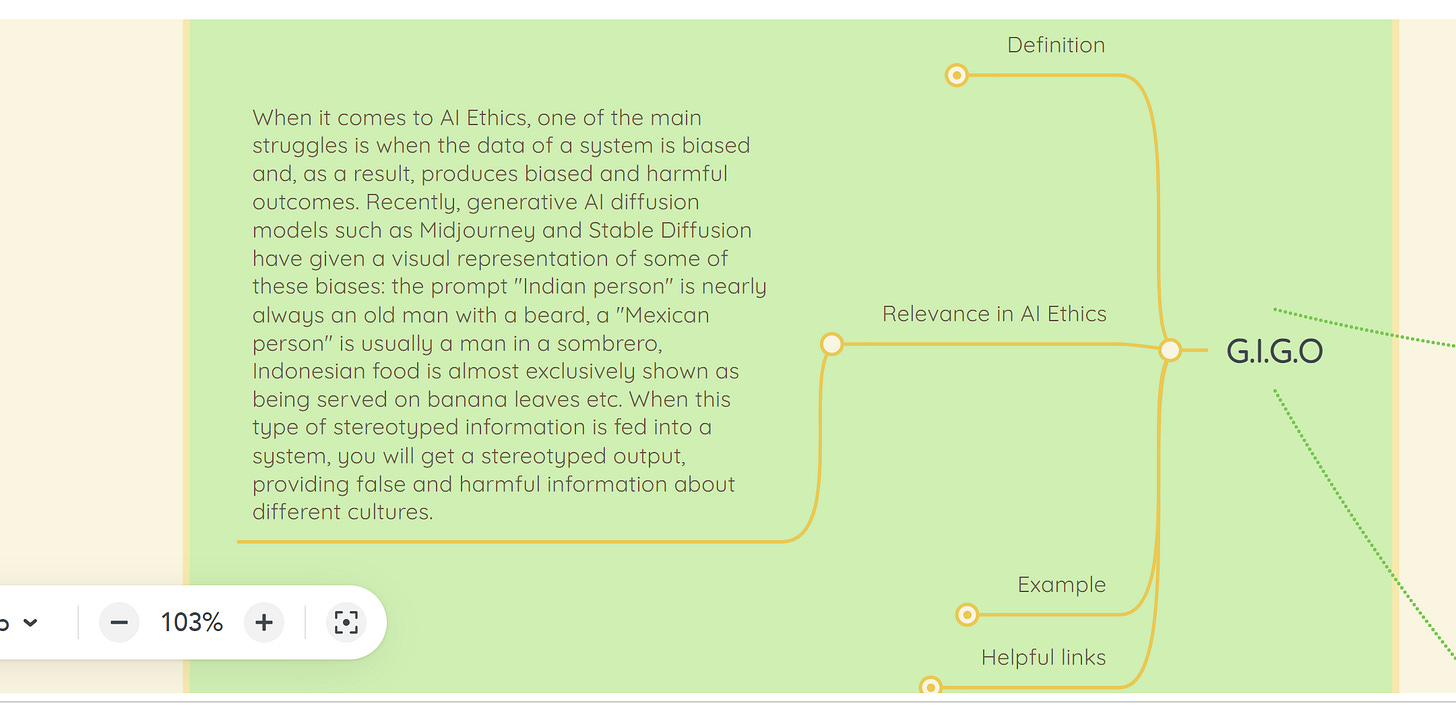

What is the relevance of GIGO to AI ethics?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

Open-Sourcing Highly Capable Foundation Models

Recent decisions by leading AI labs to either open-source their models or to restrict access to their models have sparked debate about whether, and how, increasingly capable AI models should be shared. Open-sourcing in AI typically refers to making model architecture and weights freely and publicly accessible for anyone to modify, study, build on, and use. This offers advantages such as enabling external oversight, accelerating progress, and decentralizing control over AI development and use. However, it also presents a growing potential for misuse and unintended consequences. This paper offers an examination of the risks and benefits of open-sourcing highly capable foundation models. While open-sourcing has historically provided substantial net benefits for most software and AI development processes, we argue that for some highly capable foundation models likely to be developed in the near future, open-sourcing may pose sufficiently extreme risks to outweigh the benefits. In such a case, highly capable foundation models should not be open-sourced, at least not initially. Alternative strategies, including non-open-source model sharing options, are explored. The paper concludes with recommendations for developers, standard-setting bodies, and governments for establishing safe and responsible model sharing practices and preserving open-source benefits where safe.

To delve deeper, read more details here.

💡 In case you missed it:

Toward an Ethics of AI Belief

Philosophical research in AI has hitherto largely focused on the ethics of AI. In this paper, we, an ethicist of belief and a machine learning scientist, suggest that we need to pursue a novel area of research in AI – the epistemology of AI, and in particular, ethics of belief for AI. We suggest four topics in extant work on the ethics of (human) belief that can be applied to ethics of AI belief, including the possibility that AI algorithms such as the COMPAS algorithm may be doxastically wrong in virtue of their predictive beliefs and that they may perpetrate a new kind of belief-based discrimination. We discuss two important, relatively nascent areas of philosophical research that have not yet been recognized as research in the ethics of AI belief: the decolonization of AI and epistemic injustice in AI.

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.