AI Ethics Brief #145: Stakeholder selection, QA for AI, a matrix for selecting RAI frameworks, humans needed for AI, responsible design patterns, and more.


Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week, we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

Support our work through Substack

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for the price of a couple of ☕.

If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

This week’s overview:

🙋 Ask an AI Ethicist:

How should we do stakeholder selection for implementing responsible AI in practice?

✍️ What we’re thinking:

Navigating the AI Frontier: Tackling anthropomorphisation in generative AI systems

🤔 One question we’re pondering:

Are there any pitfalls organizations should be aware of when hiring external firms to audit their AI systems?

🪛 AI Ethics Praxis: From Rhetoric to Reality

What should we be doing to perform QA for AI systems?

🔬 Research summaries:

Putting collective intelligence to the enforcement of the Digital Services Act

A Matrix for Selecting Responsible AI Frameworks

Towards a Framework for Human-AI Interaction Patterns in Co-Creative GAN Applications

📰 Article summaries:

The AI tools that might stop you getting hired

Effective AI regulation requires understanding general-purpose AI | Brookings

The AI-Fueled Future of Work Needs Humans More Than Ever | WIRED

📖 Living Dictionary:

🌐 From elsewhere on the web:

What the US AI Safety Consortium means for enterprises

💡 ICYMI

Responsible Design Patterns for Machine Learning Pipelines

🚨 Sora! Generating compelling videos from text prompts, and the ethical concerns it raises. Here’s our quick take on what happened in the last few days.

The release of OpenAI's Sora, a new generative AI tool capable of creating videos from text prompts, has sparked a wide range of ethical concerns. These concerns primarily revolve around the potential for misuse in spreading disinformation, copyright and intellectual property issues, job displacement in the entertainment industry, and the societal impact of deepfakes.

Disinformation and Misuse

One of the most pressing ethical concerns is the potential for Sora to amplify disinformation risks. Given its ability to generate realistic video content from textual descriptions, there is a fear that it could be used to create convincing fake news or misleading content, which could undermine public health measures, influence elections, or even burden the justice system with fake evidence. The ease with which a realistic video of any scene can be described and generated poses a significant risk in a world already grappling with disinformation.

Copyright and Intellectual Property

Another major concern relates to copyright and intellectual property rights. Generative AI tools like Sora require vast amounts of training data, and there has been criticism over the lack of transparency regarding where this data comes from. This issue is not unique to Sora; it is a common critique of large language models and image generators as well. The lawsuits brought by well-known authors against OpenAI over the alleged misuse of their work highlight broader concerns about the ethical use of copyrighted content in training AI models.

Job Displacement

The introduction of Sora also raises concerns about job displacement within the entertainment industry. Sora can generate video clips up to 60 seconds long from short text prompts, and one estimate projects a 21% reduction in US film, TV, and animation jobs by 2026 due to AI. This technological leap could significantly affect the livelihoods of those working in creative industries.

Societal Impact of Deepfakes

The societal impact of deepfakes, particularly those that are pornographic, is another ethical concern. Video generators like Sora could enable direct threats to targeted individuals through the creation of deepfake content, which could have devastating repercussions on the lives of affected individuals and their families. The potential for misuse in creating sexually explicit or otherwise harmful content targeting specific individuals underscores the need for robust safeguards and ethical considerations in the deployment of such technology.

Did we miss anything?

Sign up for the Responsible AI Bulletin, a bite-sized digest delivered via LinkedIn featuring the Responsible AI research that catches our attention at the Montreal AI Ethics Institute.

🙋 Ask an AI Ethicist:

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours, and we’ll answer it in the upcoming editions.

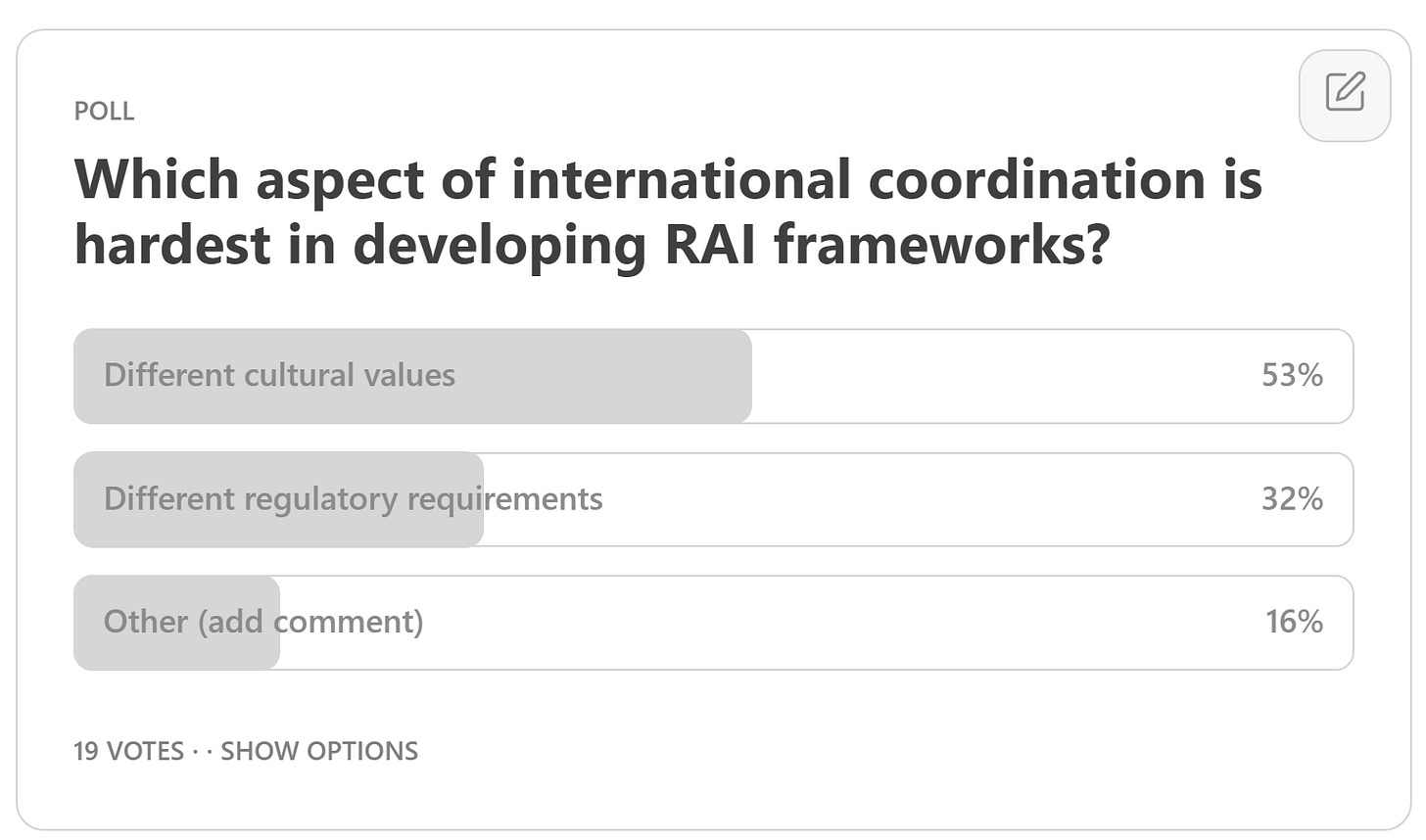

Here are the results from the previous edition for this segment:

Given the vast differences and nuances in cultural values around the world, it is not much of a surprise that this was seen as the hardest part of developing universal responsible AI principles. For such frameworks to be relevant and respectful of local norms and customs, every attempt at coordinating them will require consultations with local stakeholders, who best understand the context and nuances that make these frameworks useful in practice.

Moving on to the reader question for this week, Q.M. asks us: “How should we do stakeholder selection for implementing responsible AI in practice?” This is a great question. We’ve partially covered it in the past, but stakeholder selection is important enough to warrant another discussion. We’ve found the following to be a practical and inclusive approach to sourcing and engaging stakeholders from local ecosystems:

Identify and Understand Stakeholders: Start by identifying a diverse range of stakeholders who will be affected by the AI system. This includes not only end-users but also those indirectly impacted, such as community members, local businesses, and relevant authorities. Understanding their concerns, needs, and expectations through qualitative research methods such as interviews, surveys, and focus groups is crucial. This step ensures that the recruitment process is inclusive and recognizes the value of diverse perspectives in developing responsible AI.

Engage and Build Trust: Engagement should be initiated through transparent, open communication about the goals, potential benefits, and risks associated with the AI system. It's essential to build trust by demonstrating commitment to ethical principles, including privacy, fairness, and accountability, from the outset. Workshops, public forums, and regular updates can be effective ways to involve stakeholders in the project's lifecycle, allowing them to voice concerns, provide feedback, and contribute to decision-making processes. Ensuring that stakeholders understand their role and the impact of their contribution can foster a sense of ownership and commitment to the project.

Co-create and Collaborate: Encourage active collaboration by involving stakeholders in co-creation sessions where they can contribute ideas and feedback on the design, implementation, and evaluation of the AI system. This collaborative approach should be structured to empower stakeholders, giving them a real influence over decisions that affect them. Utilize tools and methodologies that facilitate effective participation for all, including those who might not have a technical background. Ensuring that the process is accessible and equitable is key to successful co-creation.

Throughout these steps, it's important to maintain an ongoing dialogue, be responsive to stakeholder feedback, and be willing to adapt strategies based on what is learned. This approach not only enhances the ethical development of AI systems but also contributes to their social acceptability and success by ensuring they are grounded in the values and needs of the communities they aim to serve.

How have you engaged local stakeholders in your AI projects? Please let us know! Share your thoughts with the MAIEI community:

✍️ What we’re thinking:

Navigating the AI Frontier: Tackling anthropomorphisation in generative AI systems

Although anthropomorphisation in AI can improve user interaction and trust in systems, it can lead to unrealistic expectations, ethical dilemmas, and even legal challenges.

To delve deeper, read the full article here.

🤔 One question we’re pondering:

What are some organizations that are doing great work in the field of auditing AI systems? This matters all the more given that audits are set to become mandatory under many regulations. Are there any pitfalls that organizations should be aware of when embarking on these audits by hiring external firms?

We’d love to hear from you and share your thoughts with everyone in the next edition:

🪛 AI Ethics Praxis: From Rhetoric to Reality

As different regulations come into effect around the world, one of the key requirements regulators are demanding is the ability to audit and evaluate the AI systems an organization designs, develops, or uses. We believe this journey begins with a critical practice borrowed from the world of physical products: quality assurance (QA). So, what should we be doing to perform QA for AI systems?

From our experience advising different organizations, we’ve found the following to be a great way to embark on the QA journey for AI systems:

Define Clear Objectives and Metrics:

Establish clear, measurable objectives for what the AI system is intended to achieve. This includes accuracy, performance, fairness, and reliability metrics.

Define Key Performance Indicators (KPIs) to quantitatively measure the system's performance against its objectives.
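As a minimal sketch of what "clear, measurable objectives" can look like, the targets can be written down as explicit thresholds that any evaluation run is checked against. The metric names and threshold values below are hypothetical illustrations, not recommendations, and the sketch assumes every metric is "higher is better".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KPI:
    """A named metric and the minimum acceptable value for it.

    Assumes "higher is better"; for error rates or fairness gaps,
    track a complement or negation instead.
    """
    name: str
    minimum: float


def evaluate_kpis(measured: dict[str, float], kpis: list[KPI]) -> dict[str, bool]:
    """Map each KPI name to True (target met) or False (missed or not measured)."""
    return {kpi.name: measured.get(kpi.name, float("-inf")) >= kpi.minimum for kpi in kpis}


# Hypothetical targets, agreed on before any model is built.
TARGETS = [KPI("accuracy", 0.90), KPI("recall", 0.80), KPI("precision", 0.85)]

print(evaluate_kpis({"accuracy": 0.93, "recall": 0.75}, TARGETS))
# {'accuracy': True, 'recall': False, 'precision': False}
```

Treating an unmeasured metric as a failure is a deliberate choice here: a KPI nobody computed should not silently pass.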

Understand and Prepare Data:

Ensure a thorough understanding of the data that will be used to train, validate, and test the AI model. This includes data sourcing, data quality, and data relevance.

Implement data preprocessing steps to clean, normalize, and partition the data effectively, ensuring it is representative of real-world scenarios the AI system will encounter.
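One common way to partition data so that each split stays representative of the class balance is a stratified split. The sketch below uses only the Python standard library; the 70/15/15 proportions and the fixed seed are assumptions for illustration.

```python
import random
from collections import defaultdict


def stratified_split(labels, pcts=(70, 15, 15), seed=0):
    """Partition example indices into train/validation/test index lists.

    Each label is split separately, so every partition keeps roughly the same
    class proportions as the full dataset. Integer percentages avoid
    floating-point rounding surprises; the test set takes any remainder.
    """
    if sum(pcts) != 100:
        raise ValueError("percentages must sum to 100")
    by_label = defaultdict(list)
    for idx, label in enumerate(labels):
        by_label[label].append(idx)

    rng = random.Random(seed)  # fixed seed -> reproducible partitions
    train, val, test = [], [], []
    for indices in by_label.values():
        rng.shuffle(indices)
        n_train = len(indices) * pcts[0] // 100
        n_val = len(indices) * pcts[1] // 100
        train.extend(indices[:n_train])
        val.extend(indices[n_train:n_train + n_val])
        test.extend(indices[n_train + n_val:])
    return train, val, test


labels = [0] * 80 + [1] * 20  # imbalanced toy labels
train, val, test = stratified_split(labels)
print(len(train), len(val), len(test))  # 70 15 15
```

Stratifying on the label only preserves class balance; checking that the data reflects real-world conditions (for example, the populations and time periods the system will see) still requires domain review.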

Implement Rigorous Testing Protocols:

Develop a suite of testing protocols that cover unit tests, integration tests, system tests, and acceptance tests.

Include tests for model performance (e.g., accuracy, precision, recall) as well as tests for bias, fairness, and robustness to adversarial attacks.
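To illustrate how performance and bias checks can sit side by side in a test suite, here is a minimal sketch computing precision and recall alongside a demographic parity gap. Demographic parity is only one of several fairness definitions and is chosen here purely as an example; the toy data and group labels are hypothetical, and robustness to adversarial attacks is not covered.

```python
def precision_recall(y_true, y_pred, positive=1):
    """Precision and recall for the positive class (0.0 when undefined)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def demographic_parity_gap(y_pred, groups, positive=1):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = []
    for group in set(groups):
        preds = [p for p, g in zip(y_pred, groups) if g == group]
        rates.append(sum(p == positive for p in preds) / len(preds))
    return max(rates) - min(rates)


# Toy data: true labels, model predictions, and a hypothetical group attribute.
y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0]
groups = ["a", "a", "a", "b", "b", "b"]

precision, recall = precision_recall(y_true, y_pred)
gap = demographic_parity_gap(y_pred, groups)
print(f"precision={precision:.2f} recall={recall:.2f} parity_gap={gap:.2f}")
# precision=0.67 recall=0.67 parity_gap=0.33
```

In a real test suite, each value would be asserted against an agreed threshold so that a fairness regression fails the build just as an accuracy regression would.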

Validation and Cross-validation:

Use techniques like k-fold cross-validation to ensure that the model generalizes well across different subsets of the data.

Validate the model against unseen data to test its predictive capabilities in real-world scenarios.
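For readers who want to see what k-fold cross-validation does under the hood, here is a dependency-free sketch. It assumes the data is already shuffled, and it uses a hypothetical majority-class baseline in place of a real model; in practice, a library such as scikit-learn (`KFold`, `cross_val_score`) would typically handle this.

```python
from collections import Counter


def k_fold_indices(n, k):
    """Yield (train, test) index lists; every example is held out exactly once."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(start)) + list(range(start + size, n))
        yield train, test
        start += size


def cross_validate(X, y, fit, predict, k=5):
    """Return accuracy on each held-out fold (assumes X and y are pre-shuffled)."""
    scores = []
    for train, test in k_fold_indices(len(y), k):
        model = fit([X[i] for i in train], [y[i] for i in train])
        correct = sum(predict(model, X[i]) == y[i] for i in test)
        scores.append(correct / len(test))
    return scores


# Hypothetical baseline "model": always predict the majority class seen in training.
def fit_majority(X_train, y_train):
    return Counter(y_train).most_common(1)[0][0]


def predict_majority(model, x):
    return model


X = list(range(10))
y = [1, 1, 0, 1, 1, 0, 1, 1, 1, 0]
scores = cross_validate(X, y, fit_majority, predict_majority, k=5)
print(scores, sum(scores) / len(scores))  # [1.0, 0.5, 0.5, 1.0, 0.5] 0.7
```

The spread of the per-fold scores matters as much as their mean: large differences between folds suggest the model does not generalize evenly across subsets of the data.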

Establish Continuous Integration and Continuous Deployment (CI/CD) Pipelines:

Set up CI/CD pipelines to automate the testing and deployment of AI models. This ensures that any changes to the model or the data it’s trained on are automatically tested for quality before deployment.
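A simple building block for such a pipeline is a quality gate: a script that compares a candidate model's metrics with those of the currently deployed baseline and returns a non-zero exit code (which most CI systems treat as a failed step) if anything regresses. This is a sketch under stated assumptions: the JSON file layout, the 0.01 tolerance, and the idea of invoking it as, say, `python quality_gate.py candidate.json baseline.json` are all hypothetical.

```python
import json
import sys


def regression_gate(candidate, baseline, tolerance=0.01):
    """Return metrics where the candidate falls more than `tolerance` below the baseline.

    Assumes every metric is "higher is better"; a metric missing from the
    candidate counts as a regression.
    """
    return [
        name
        for name, base_value in baseline.items()
        if candidate.get(name, float("-inf")) < base_value - tolerance
    ]


def main(candidate_path, baseline_path):
    """Compare two JSON files of {metric: value}; return a process exit code."""
    with open(candidate_path) as f:
        candidate = json.load(f)
    with open(baseline_path) as f:
        baseline = json.load(f)
    failures = regression_gate(candidate, baseline)
    for name in failures:
        print(f"FAIL {name}: {candidate.get(name)} vs baseline {baseline[name]}")
    return 1 if failures else 0  # non-zero exit code fails the pipeline step


# Only run as a command-line tool when given both file paths.
if __name__ == "__main__" and len(sys.argv) == 3:
    sys.exit(main(sys.argv[1], sys.argv[2]))
```

The same gate can include fairness metrics from the test suite, so a change that improves accuracy while widening a group disparity is still blocked.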

Monitor and Update Regularly:

Once deployed, continuously monitor the AI system’s performance to catch and correct any drift in data or model degradation over time.

Implement feedback loops that allow users to report issues, which can be used to further refine and improve the model.
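One widely used way to quantify data drift for a single feature is the Population Stability Index (PSI), which compares the binned distribution of live data with that of the training data. The sketch below is a minimal, standard-library version; the equal-width binning, the bin count, and the small `eps` for empty bins are assumptions. A frequently quoted rule of thumb reads PSI above roughly 0.25 as a significant shift, but any alert threshold should be treated as an assumption and calibrated for the system at hand.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """Compare a feature's live distribution (`actual`) with its training
    distribution (`expected`) using equal-width bins over the training range.

    Values outside the training range are clamped into the edge bins; `eps`
    avoids log(0) for empty bins. 0 means identical binned distributions.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training = [i / 100 for i in range(100)]    # spread evenly over [0, 0.99]
live = [0.5 + i / 200 for i in range(100)]  # shifted towards higher values
print(population_stability_index(training, training))  # 0.0
print(population_stability_index(training, live) > 0.25)  # True
```

Running a check like this on a schedule for each important input feature, and routing alerts to the same place as user-reported issues, is one way to close the feedback loop described above.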

Ethical and Regulatory Compliance:

Ensure the AI system complies with ethical guidelines and regulatory requirements, particularly those related to data privacy (e.g., GDPR, HIPAA) and AI ethics.

Conduct regular ethical audits to assess the impact of the AI system on various stakeholders and make adjustments as necessary.

Documentation and Transparency:

Maintain comprehensive documentation of the AI system, including its design, implementation details, data sources, and performance metrics.

Foster transparency by documenting the decision-making processes of the AI model, which is crucial for accountability and trust.
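Documentation is easier to keep current when it is a structured artifact versioned alongside the model rather than a free-form document. The sketch below is loosely inspired by the idea of "model cards"; the field names, the example system, and every value in it are hypothetical placeholders, not a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Minimal, hypothetical documentation record stored with each model release."""
    model_name: str
    version: str
    intended_use: str
    out_of_scope_uses: list[str]
    data_sources: list[str]
    metrics: dict[str, float]
    known_limitations: list[str] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize deterministically so changes show up cleanly in version control."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


card = ModelCard(
    model_name="example-screening-classifier",  # hypothetical system
    version="1.3.0",
    intended_use="Rank applications for human review; not for automatic rejection.",
    out_of_scope_uses=["Employment decisions"],
    data_sources=["Internal applications (hypothetical placeholder)"],
    metrics={"accuracy": 0.91, "recall": 0.84},  # illustrative values only
    known_limitations=["Few examples from applicants under 21"],
)
print(card.to_json())
```

Because the record is plain data, a CI step can refuse to deploy a model whose card is missing or whose reported metrics do not match the latest evaluation run.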

You can either click the “Leave a comment” button below or send us an email! We’ll feature the best response next week in this section.

🔬 Research summaries:

Putting collective intelligence to the enforcement of the Digital Services Act

Cooperation between regulators and civil society organizations is an excellent way to foster a comprehensive approach, address societal concerns, and ensure effective governance in areas such as policymaking, enforcement, and the protection of rights. Building on this premise, the report offers concrete recommendations for designing an efficient and influential expert group with the European Commission to lead operational and evidence-based enforcement of the Digital Services Act.

To delve deeper, read the full summary here.

A Matrix for Selecting Responsible AI Frameworks

Process frameworks for implementing responsible AI have proliferated, making it difficult to make sense of the many existing frameworks. This paper introduces a matrix that organizes responsible AI frameworks based on their content and audience. The matrix points teams within organizations building or using AI towards tools that meet their needs, but it can also help other organizations develop AI governance, policy, and procedures.

To delve deeper, read the full summary here.

Towards a Framework for Human-AI Interaction Patterns in Co-Creative GAN Applications

While Generative Adversarial Networks (GANs) have become a popular tool for designing artifacts in various creative tasks, a theoretical foundation for understanding human-GAN co-creation is yet to be developed. We propose a preliminary framework to analyze co-creative GAN applications and identify four primary interaction patterns between humans and AI. The framework enables us to discuss the affordances and limitations of the different interactions in the co-creation with GANs and other generative models.

To delve deeper, read the full summary here.

📰 Article summaries:

The AI tools that might stop you getting hired

What happened: Hilke Schellmann, a journalism professor, delved into the world of AI hiring tools by experimenting with myInterview, a one-way video interview system. She found discrepancies in the tool's assessments and realized that her performance was judged primarily on intonation rather than content. Across her experiments, the tool's assessments remained inconsistent, raising questions about its reliability in evaluating candidates.

Why it matters: Schellmann's investigation, detailed in her book "The Algorithm," sheds light on the increasing reliance on AI and algorithms in hiring and performance evaluation. She argues that these tools, while promising efficiency, are based on flawed principles and can perpetuate discrimination. The lack of transparency in how these tools operate and their potential for bias underscores the need for scrutiny and regulation in their use.

Between the lines: Schellmann highlights the opacity surrounding AI tools in hiring, emphasizing the risk of inadvertent discrimination and the challenges candidates face. She calls for HR departments to be more critical of these tools and advocates for regulatory oversight to ensure fairness and transparency in their deployment. Meanwhile, she suggests that job seekers can leverage AI tools like ChatGPT to navigate the hiring process more effectively, signaling a potential shift in power dynamics.

Effective AI regulation requires understanding general-purpose AI | Brookings

What happened: Recent years have seen significant advancements in evaluating and mitigating bias and adverse impacts in machine learning and AI models. However, the latest generation of AI models, like GPT-4, presents new challenges due to their general-purpose nature. Unlike previous models with clear contexts of use, these newer models are being utilized for a wide range of tasks, leading to unforeseen risks and impacts.

Why it matters: Regulating AI requires a nuanced understanding of its real-world applications and associated risks. Current evaluation methods and regulations often struggle to keep pace with the diverse uses of modern AI models. Obtaining better information about how these models are used is crucial for policymakers to prioritize regulatory efforts effectively and address the actual impacts of AI technologies.

Between the lines: While government mandates and company disclosures offer some insights into AI use, they may not capture the full spectrum of applications, particularly those outside highly regulated industries. With access to extensive user interaction data, tech companies play a pivotal role in providing comprehensive information about AI usage. However, concerns about privacy and competition may hinder their willingness to share data. Collaboration between researchers, regulators, and tech companies is essential to develop regulations grounded in real-world use cases and mitigate the risks associated with modern AI technologies.

The AI-Fueled Future of Work Needs Humans More Than Ever | WIRED

What happened: AI is poised to reshape the landscape of work, much as the internet did in the 1990s. This transition presents both challenges and opportunities and calls for a skills-first mindset. Employees are encouraged to view their roles as a collection of tasks and to adapt to the changing nature of work as AI advances.

Why it matters: The rapid development of AI technologies is driving significant changes in the skills required for various jobs, with those skills projected to change by 65 percent by 2030. This evolution underscores the growing importance of skills such as problem-solving and strategic thinking alongside AI literacy. Employers recognize the need for a skills-based approach to talent acquisition and development, as shown by the growing demand for AI-related skills and the emphasis on people skills in the workplace.

Between the lines: The future of work will prioritize people skills and collaboration alongside technological advancements. AI is seen as a tool to enhance efficiency rather than replace human input, allowing employees to focus on more valuable aspects of their roles. Leaders and employees alike are urged to embrace AI while fostering a culture of continuous learning and skill development, leading to a work environment that is more human-centered and fulfilling.

📖 From our Living Dictionary:

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

🌐 From elsewhere on the web:

What the US AI Safety Consortium means for enterprises

The Biden administration has created the US AI Safety Institute Consortium to coordinate AI risk management efforts. Experts weigh in on its opportunities, challenges, and shortcomings, and on how it compares to efforts in Europe and China.

To delve deeper, read more details here.

💡 In case you missed it:

Responsible Design Patterns for Machine Learning Pipelines

This research paper explores integrating ethical considerations into the machine learning (ML) pipeline. It examines “What AI engineering practices and design patterns can be utilized to ensure responsible AI development?” and proposes a comprehensive approach to promote responsible AI design.

To delve deeper, read the full article here.

Take Action:

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.

When talking about the impact of Sora, at the end you touched on the "Societal Impact of Deepfakes" and briefly mentioned the "potential" of creating sexually explicit content.

Whilst the press focuses on the negative effects of deepfakes as misinformation, the reality is that 96% of deepfakes are non-consensual sexual content and 99% target women.

Deepfake porn not only aims to silence and take revenge on women, who lack protections from this use of technology in most countries around the world, but it's also a well-oiled industry where the likes of Amazon, Etsy, Microsoft, GitHub, Visa, and Mastercard are key players and benefit from it.

You can cross-reference my assertions below:

https://patriciagestoso.com/2023/12/18/navigating-the-digital-battlefield-women-and-deepfake-survival/

https://patriciagestoso.com/2024/02/14/inside-the-digital-underbelly-the-lucrative-world-of-deepfake-porn/

As you said, Sora's potential for misuse is huge, but it's important to highlight that some populations are more likely than others to bear the brunt.

We need firm national and cross-border regulation not only for the creators and distributors of deepfake porn but for the companies that make it a business.